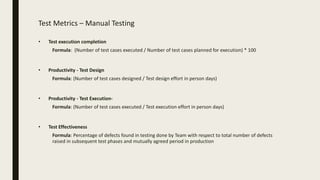

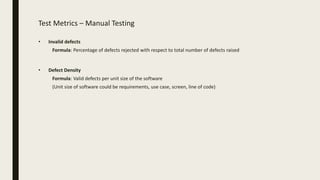

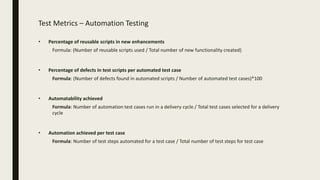

The document discusses various software testing metrics critical for improving software projects, covering manual, automation, and performance testing. It emphasizes the significance of these metrics in decision-making and risk management for test managers, who should possess extensive experience in software testing. It provides detailed formulas for different testing metrics, highlighting their applications and importance in assessing software quality and progress.

![Test Metrics – Automation Testing

• Return on Investment (ROI) of Automation

Formula: (Gain - Investment) / Investment

Investment = (a) + (b) + (c) + (d) + (e)

Gain = [(g) * (h) * Period of ROI] / 8

(a) Automated test script development time = (Hourly automation time per test * Number of automated test cases) / 8

(b) Automated test script execution time = (Automated test execution time per test * Number of automated test cases * Period of ROI) / 18

(c) Automated test analysis time = (Test Analysis time * Period of ROI) / 8

(d) Automated test maintenance time = (Maintenance time * Period of ROI) / 8

(e) Manual execution time = (Manual test execution time * Number of manual test cases * Period of ROI) / 8

(g) Manual test execution time or analysis time

(h) Total number of test cases (automated + manual)

Period of ROI is the number of weeks for which ROI is to be calculated. Divide by 8 wherever manual effort is involved, and by 18 wherever automation is involved.](https://image.slidesharecdn.com/softwaretestingmetrics-200630105353/85/Software-Testing-Metrics-8-320.jpg)
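
The automation ROI terms on this slide can be sketched in Python as follows. This is a minimal illustration, not the slide author's implementation. The class and field names are hypothetical. It assumes (g) means the manual execution time per test, and it uses the conventional net-gain form of ROI, (Gain - Investment) / Investment. The divisors 8 and 18 are taken directly from the slide.

```python
from dataclasses import dataclass

MANUAL_DIVISOR = 8       # slide: divide by 8 wherever manual effort is involved
AUTOMATION_DIVISOR = 18  # slide: divide by 18 wherever automation is involved


@dataclass
class RoiInputs:
    """Inputs for the ROI terms; all field names are hypothetical."""
    automation_dev_time_per_test: float   # hourly automation time per test
    automated_exec_time_per_test: float   # automated execution time per test
    analysis_time: float                  # test analysis time
    maintenance_time: float               # maintenance time
    manual_exec_time_per_test: float      # manual test execution time, also used as (g)
    automated_tests: int                  # number of automated test cases
    manual_tests: int                     # number of manual test cases
    period: float                         # Period of ROI, in weeks per the slide


def investment(x: RoiInputs) -> float:
    """Investment = (a) + (b) + (c) + (d) + (e)."""
    a = x.automation_dev_time_per_test * x.automated_tests / MANUAL_DIVISOR
    b = (x.automated_exec_time_per_test * x.automated_tests * x.period
         / AUTOMATION_DIVISOR)
    c = x.analysis_time * x.period / MANUAL_DIVISOR
    d = x.maintenance_time * x.period / MANUAL_DIVISOR
    e = x.manual_exec_time_per_test * x.manual_tests * x.period / MANUAL_DIVISOR
    return a + b + c + d + e


def gain(x: RoiInputs) -> float:
    """Gain = [(g) * (h) * Period of ROI] / 8."""
    g = x.manual_exec_time_per_test              # assumption: (g) is manual execution time
    h = x.automated_tests + x.manual_tests       # total test cases (automated + manual)
    return g * h * x.period / MANUAL_DIVISOR


def automation_roi(x: RoiInputs) -> float:
    """Net-gain ROI: (Gain - Investment) / Investment."""
    inv = investment(x)
    if inv == 0:
        raise ValueError("investment must be non-zero")
    return (gain(x) - inv) / inv


if __name__ == "__main__":
    # Made-up sample figures, for illustration only.
    sample = RoiInputs(
        automation_dev_time_per_test=2, automated_exec_time_per_test=0.5,
        analysis_time=4, maintenance_time=2, manual_exec_time_per_test=1,
        automated_tests=36, manual_tests=16, period=10,
    )
    print(f"investment={investment(sample)}, gain={gain(sample)}, "
          f"roi={automation_roi(sample):.3f}")
```

Keeping `investment` and `gain` as separate functions makes it easy to check each term against the slide before trusting the final ratio.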

![Common Metrics For All Types Of Testing

• Effort Variance (EV)

Formula: [(Actual effort - Estimated effort) / Estimated effort] * 100

• Schedule Variance (SV)

Formula: [(Actual number of days - Estimated number of days) / Estimated number of days] * 100

• Scope Change

Formula: [(Total scope - Previous scope) / Previous scope] * 100](https://image.slidesharecdn.com/softwaretestingmetrics-200630105353/85/Software-Testing-Metrics-14-320.jpg)
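
All three formulas on this slide share one shape: the percentage change of an observed value relative to a baseline. A minimal Python sketch is shown below. The function names are hypothetical, and rejecting a zero baseline is an assumption the slide does not address.

```python
def percent_change(observed: float, baseline: float) -> float:
    """Return [(observed - baseline) / baseline] * 100."""
    if baseline == 0:
        raise ValueError("baseline must be non-zero")
    return (observed - baseline) / baseline * 100


def effort_variance(actual_effort: float, estimated_effort: float) -> float:
    """Effort Variance (EV), in percent."""
    return percent_change(actual_effort, estimated_effort)


def schedule_variance(actual_days: float, estimated_days: float) -> float:
    """Schedule Variance (SV), in percent."""
    return percent_change(actual_days, estimated_days)


def scope_change(total_scope: float, previous_scope: float) -> float:
    """Scope Change, in percent."""
    return percent_change(total_scope, previous_scope)


if __name__ == "__main__":
    # Made-up sample figures, for illustration only.
    print(effort_variance(actual_effort=120, estimated_effort=100))
    print(schedule_variance(actual_days=9, estimated_days=10))
    print(scope_change(total_scope=55, previous_scope=50))
```

A positive result means the actual value overran the estimate or the scope grew. A negative result means the work came in under the estimate or the scope shrank.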