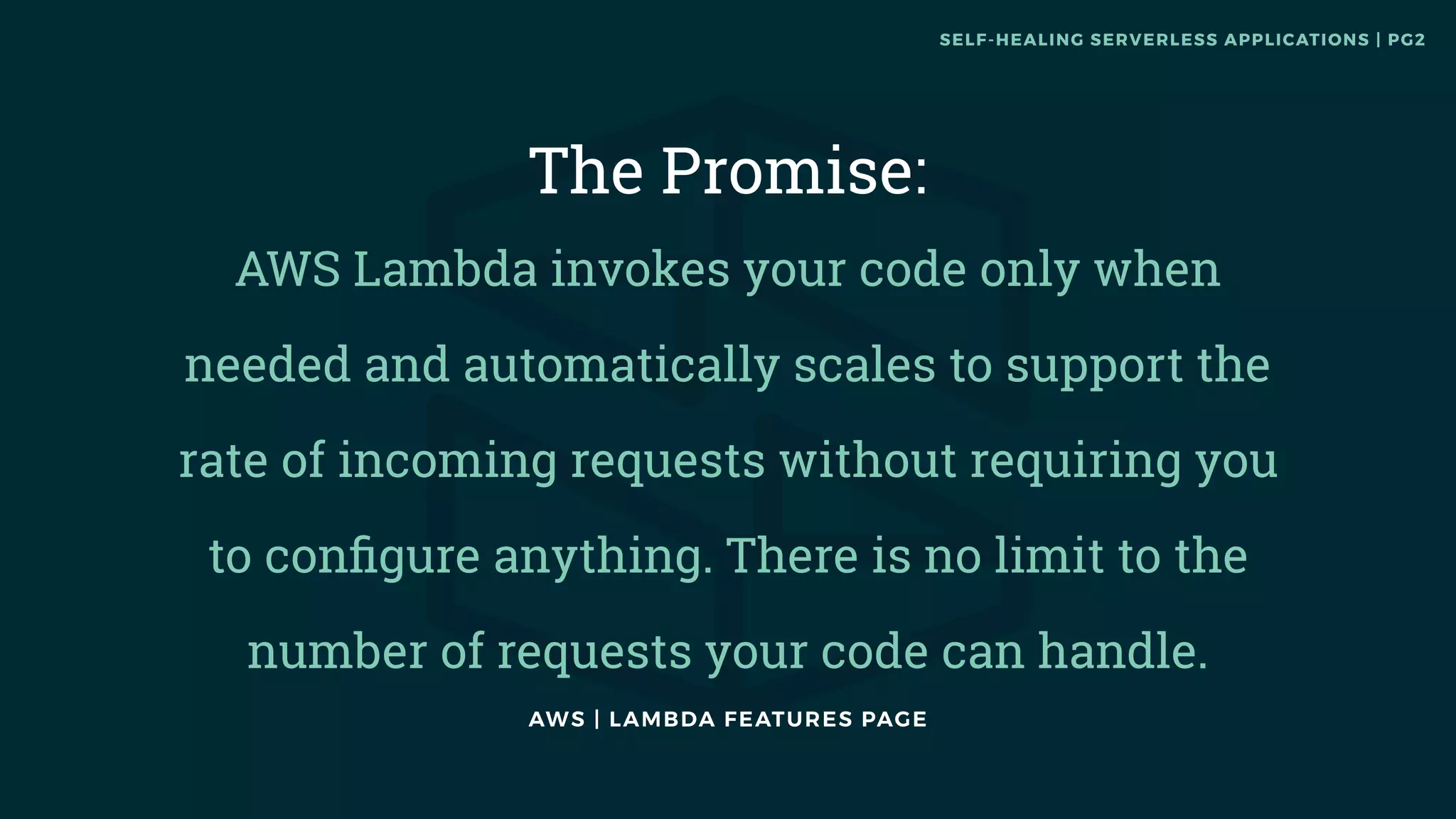

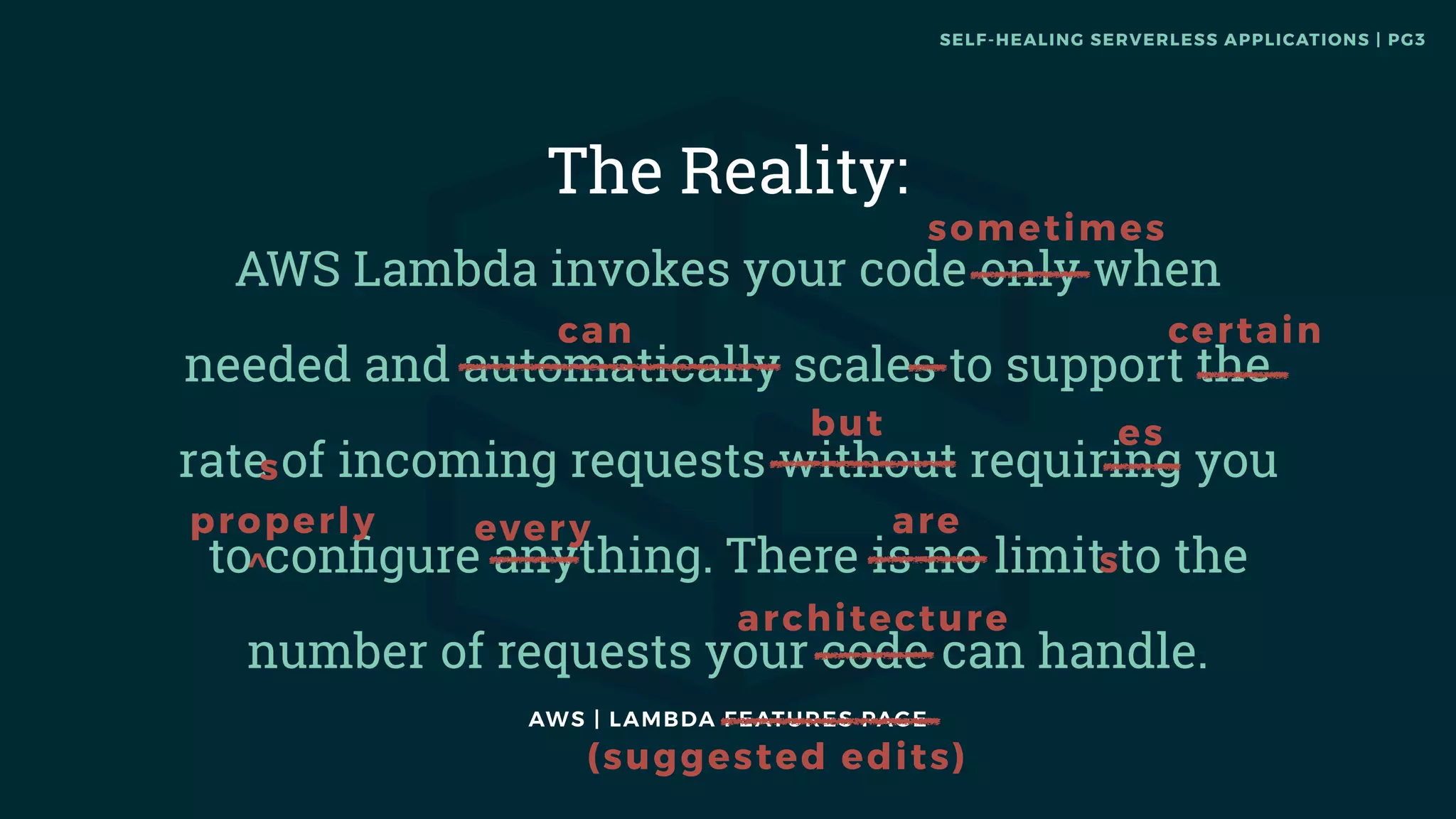

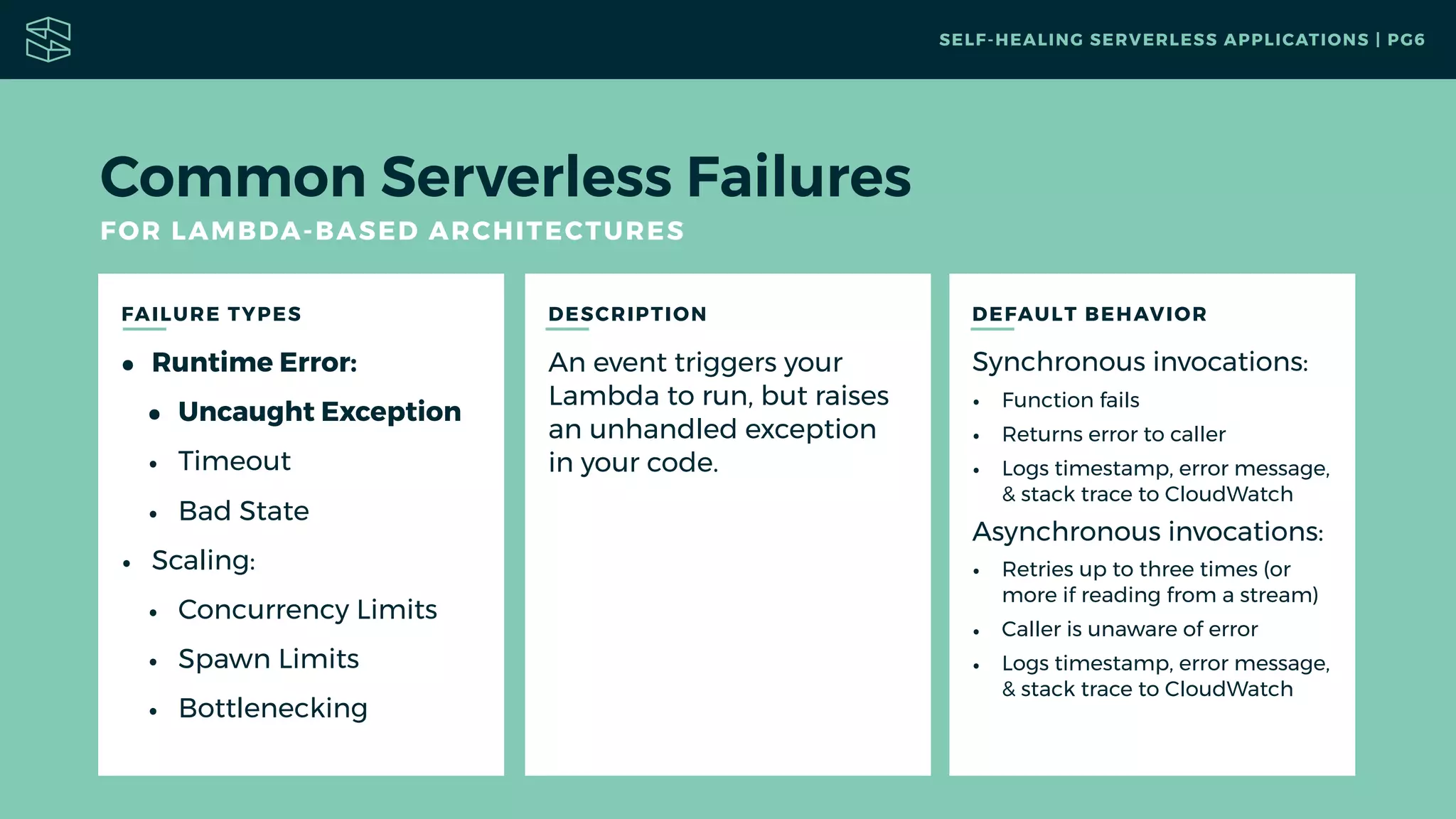

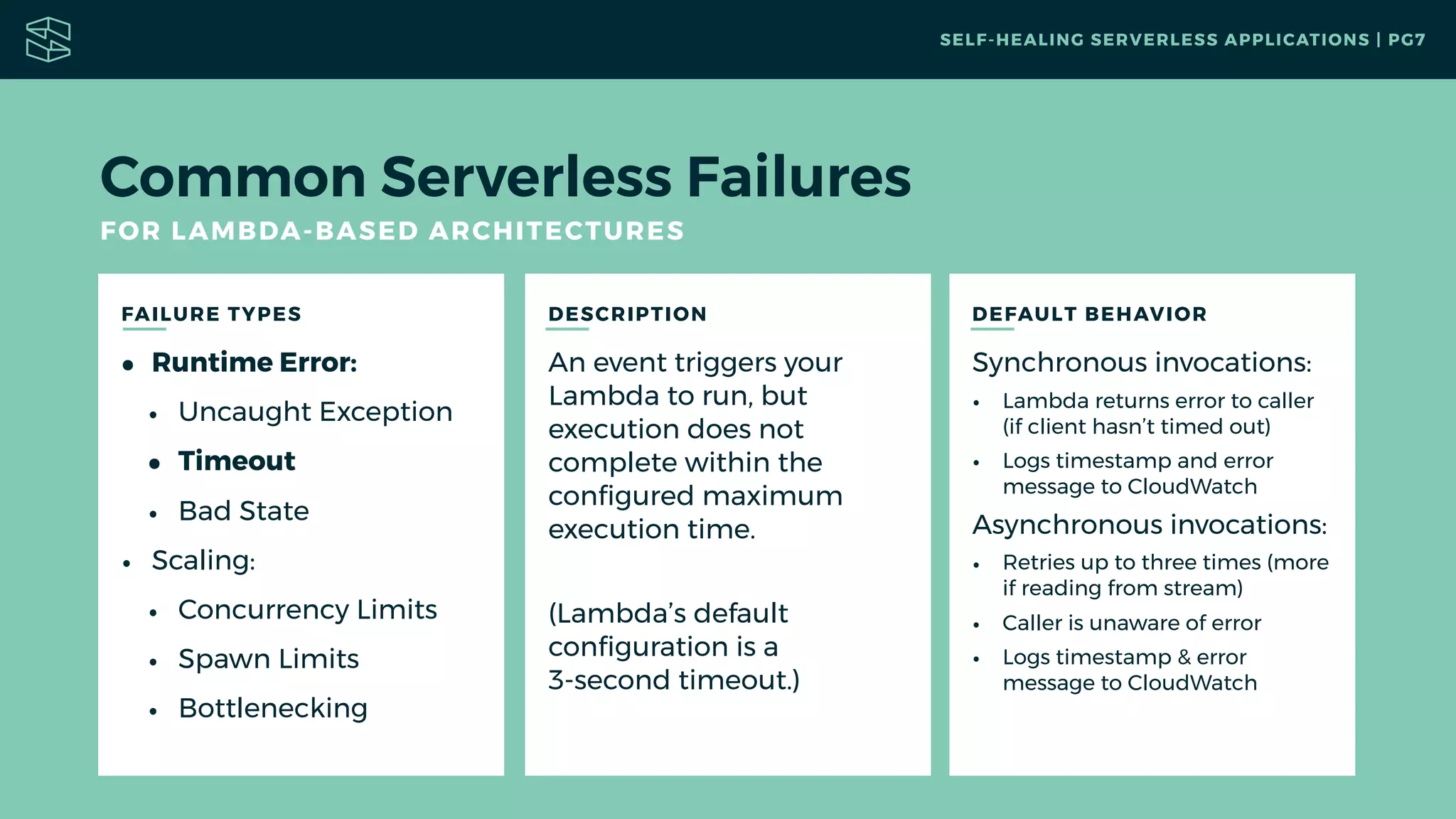

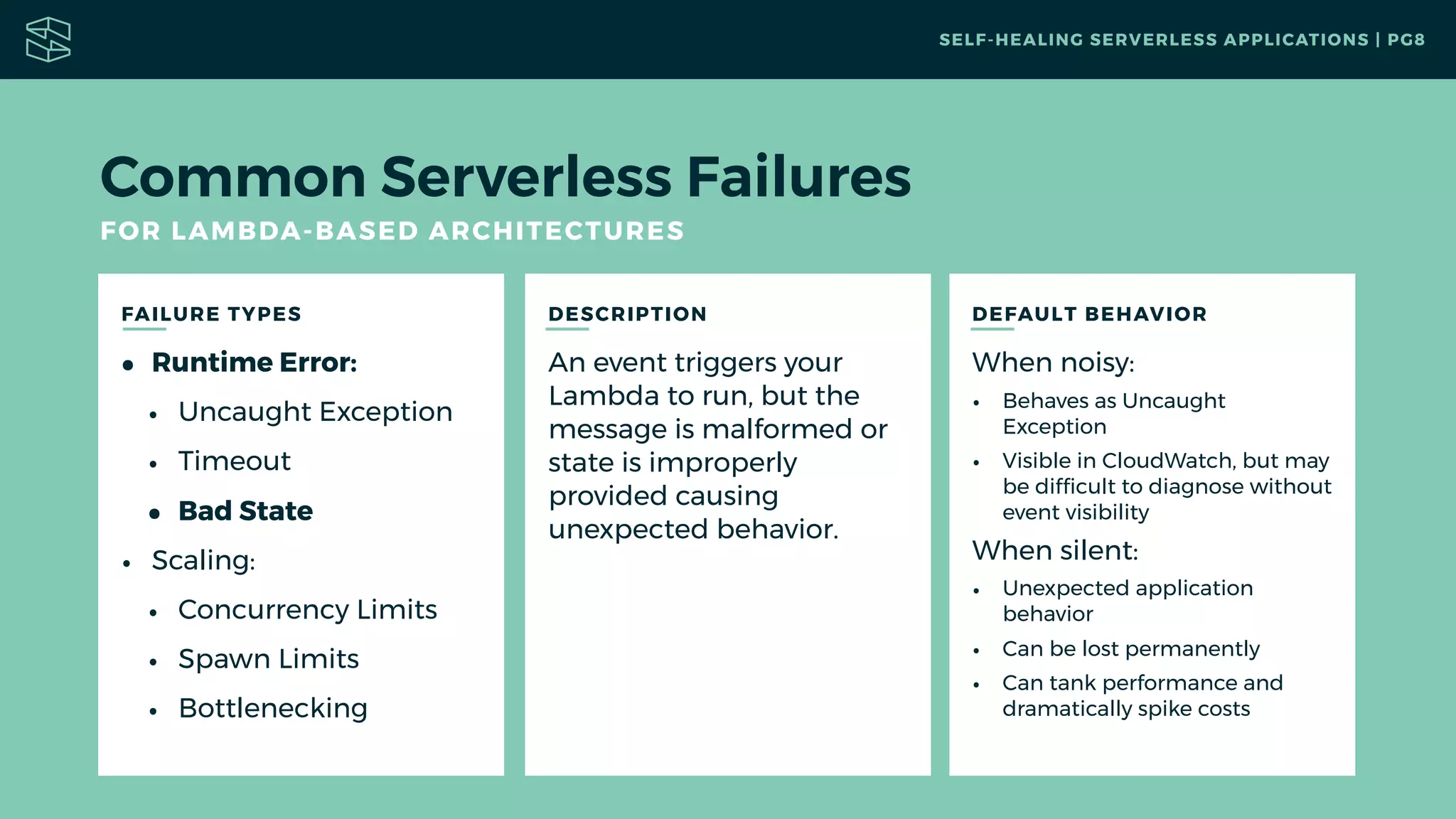

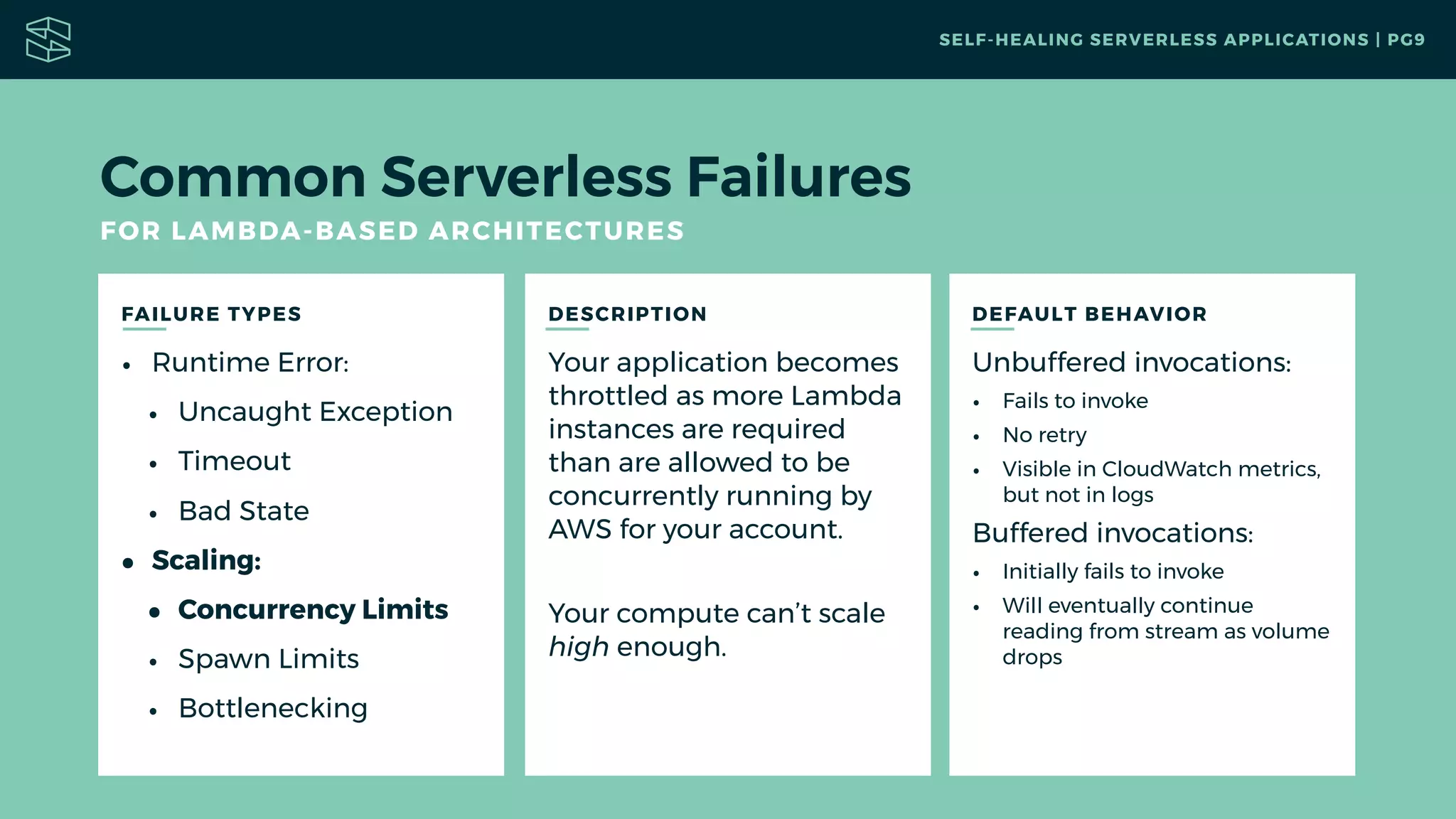

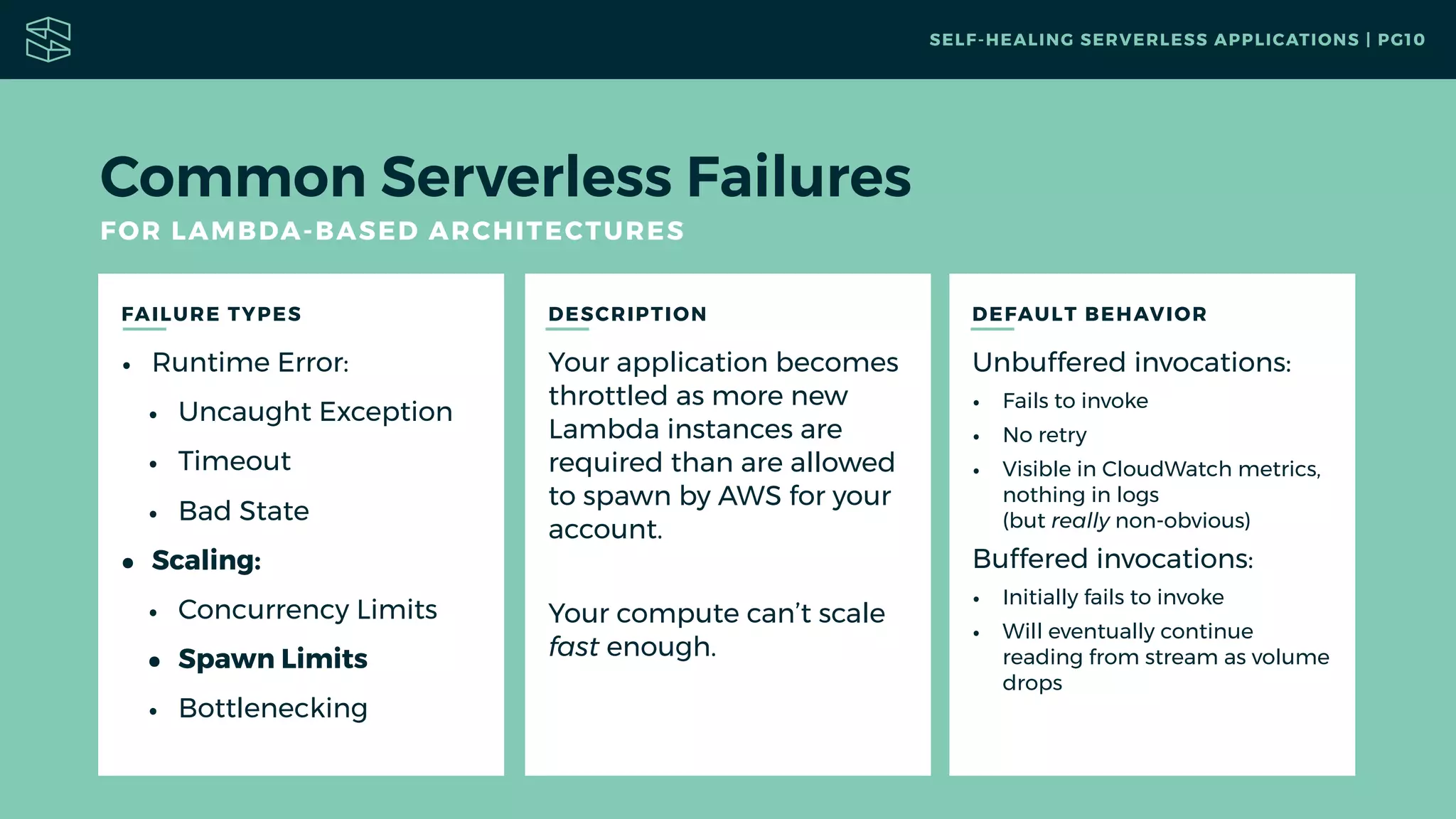

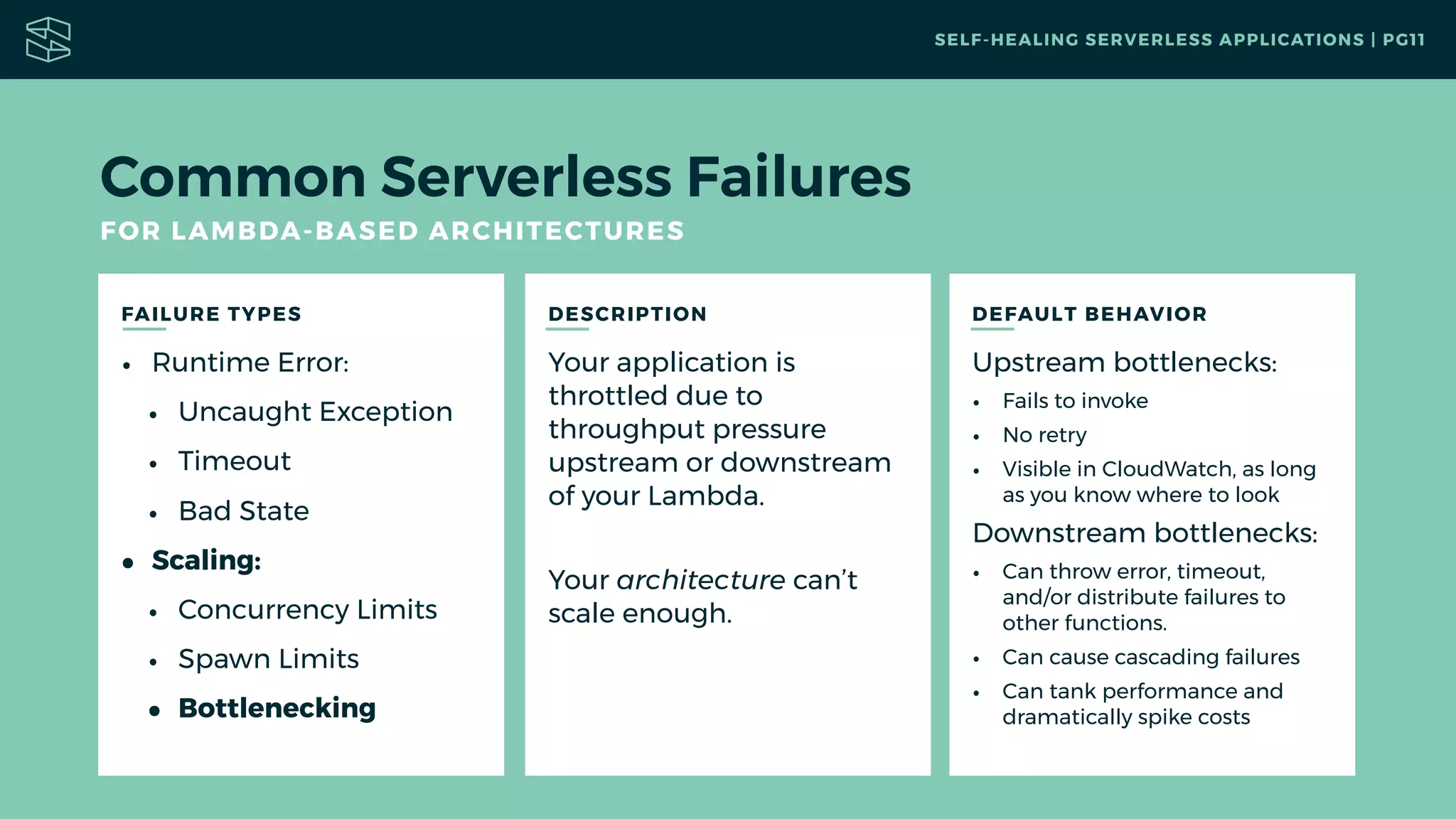

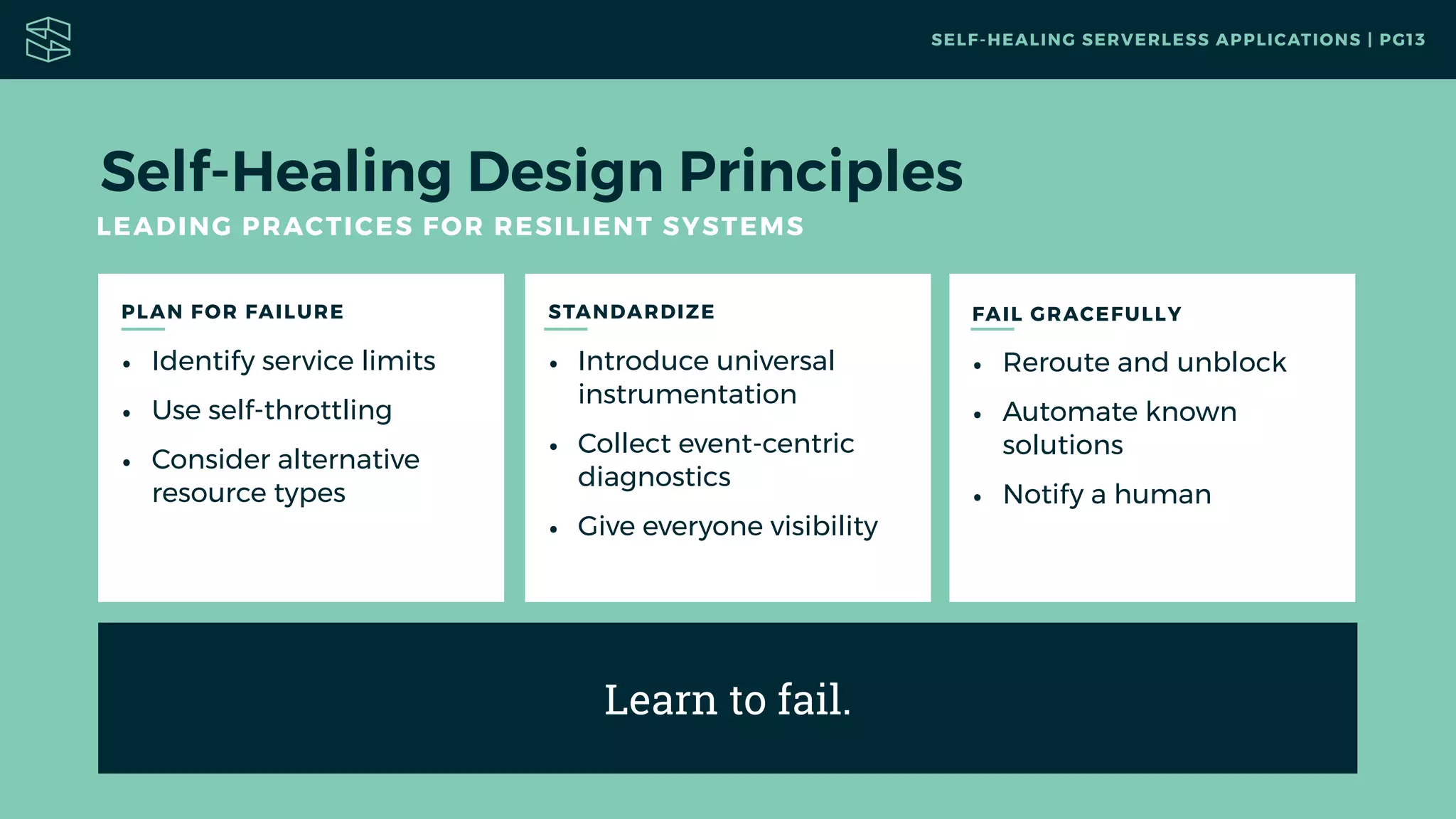

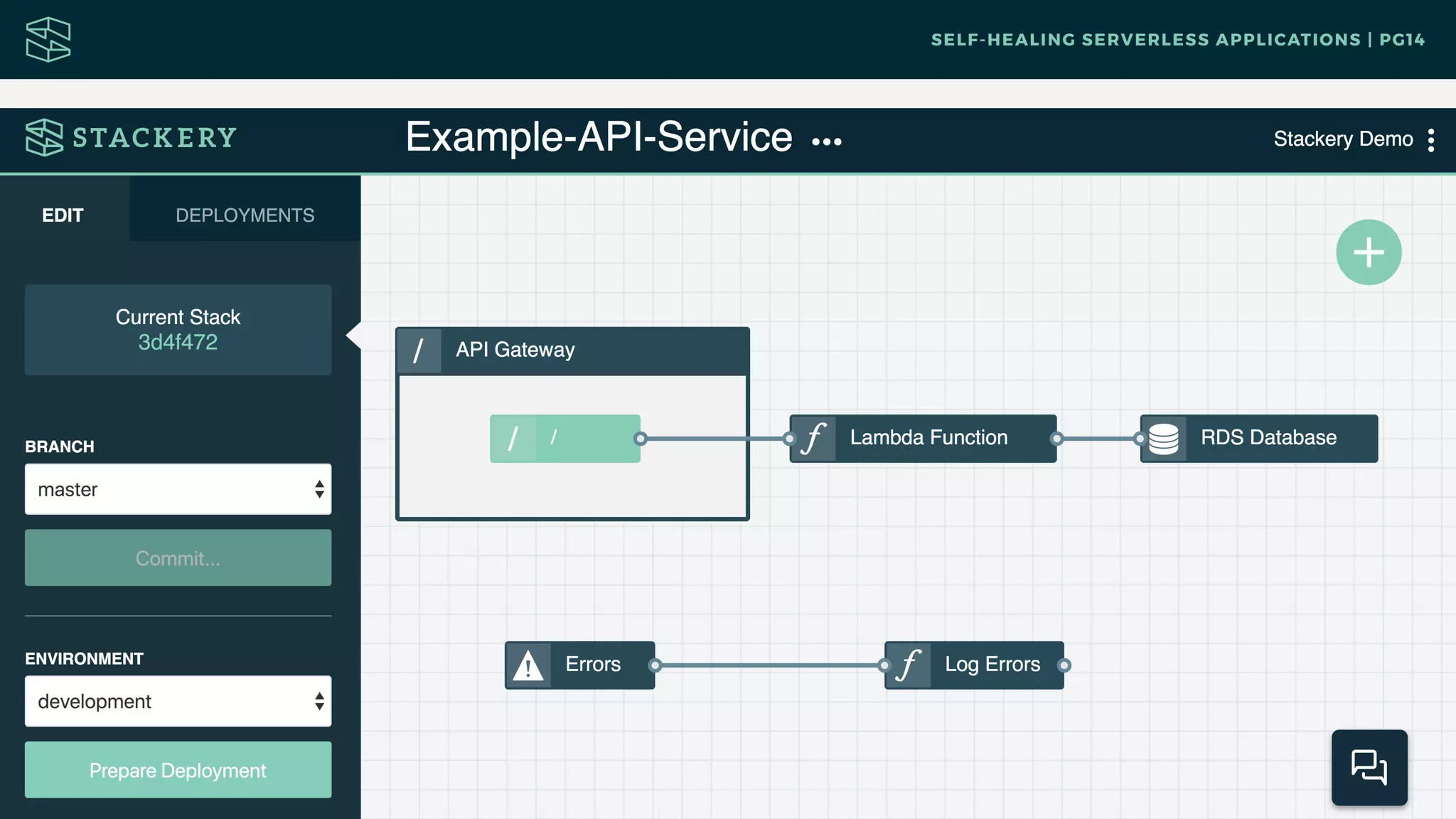

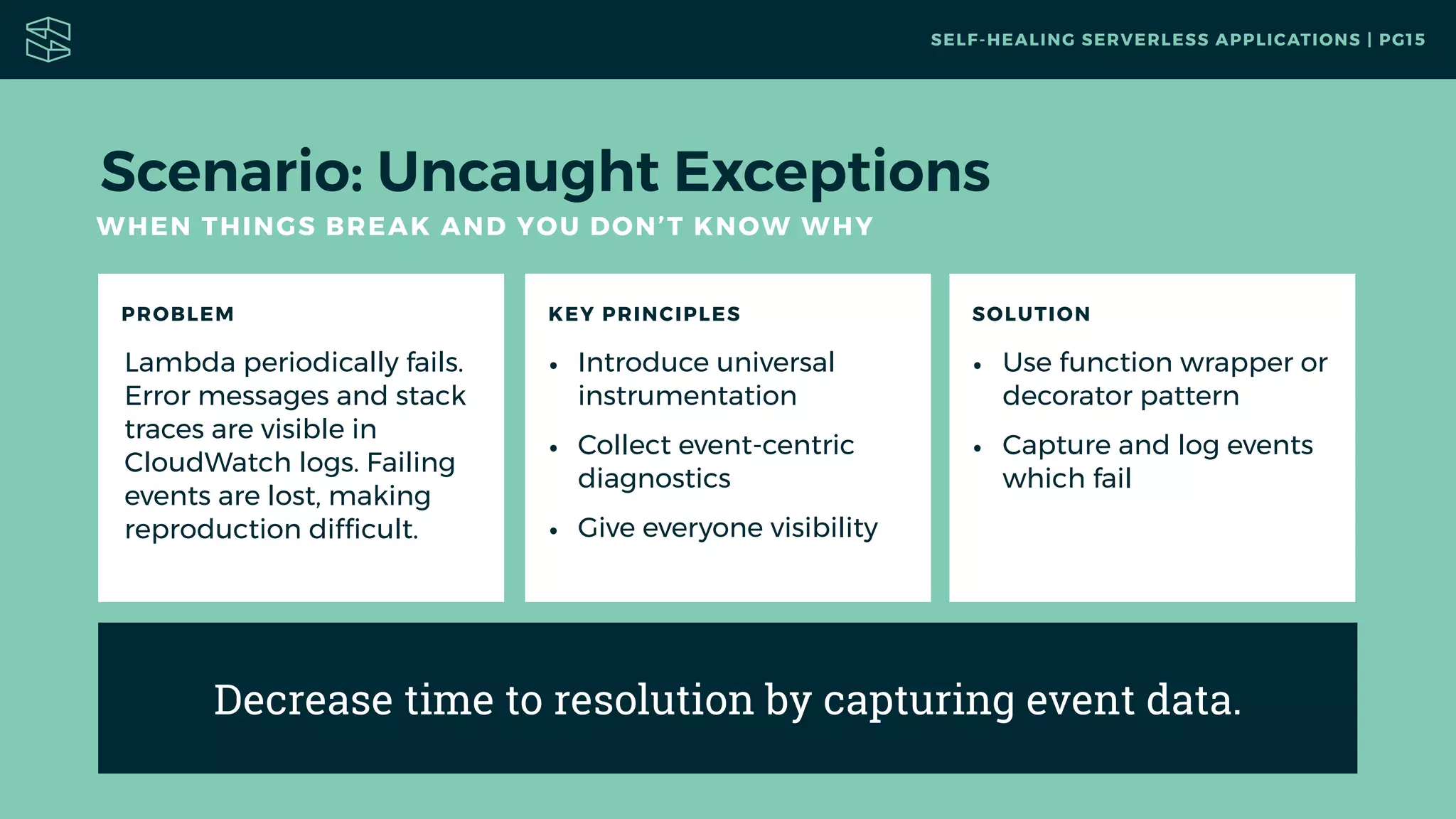

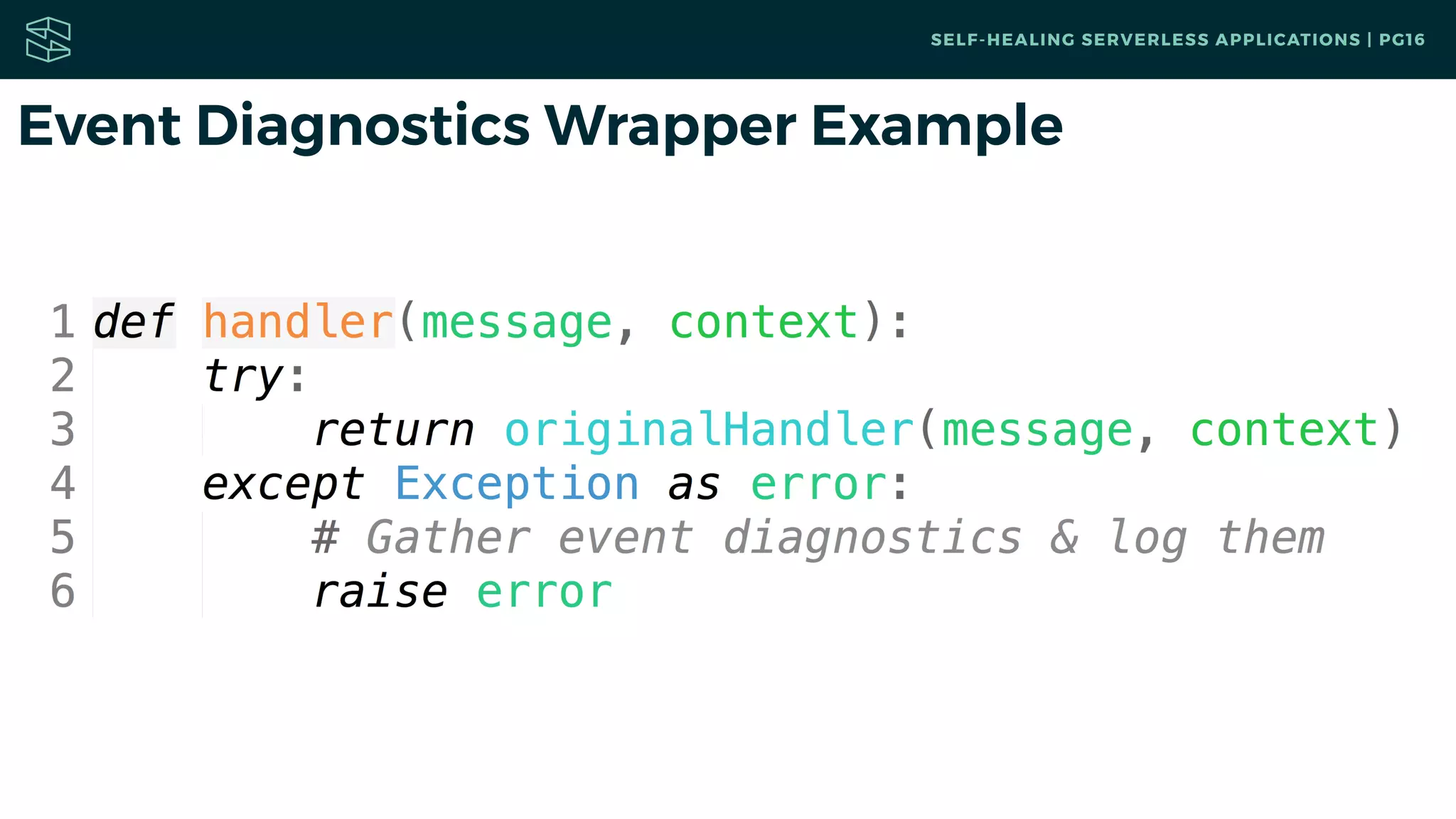

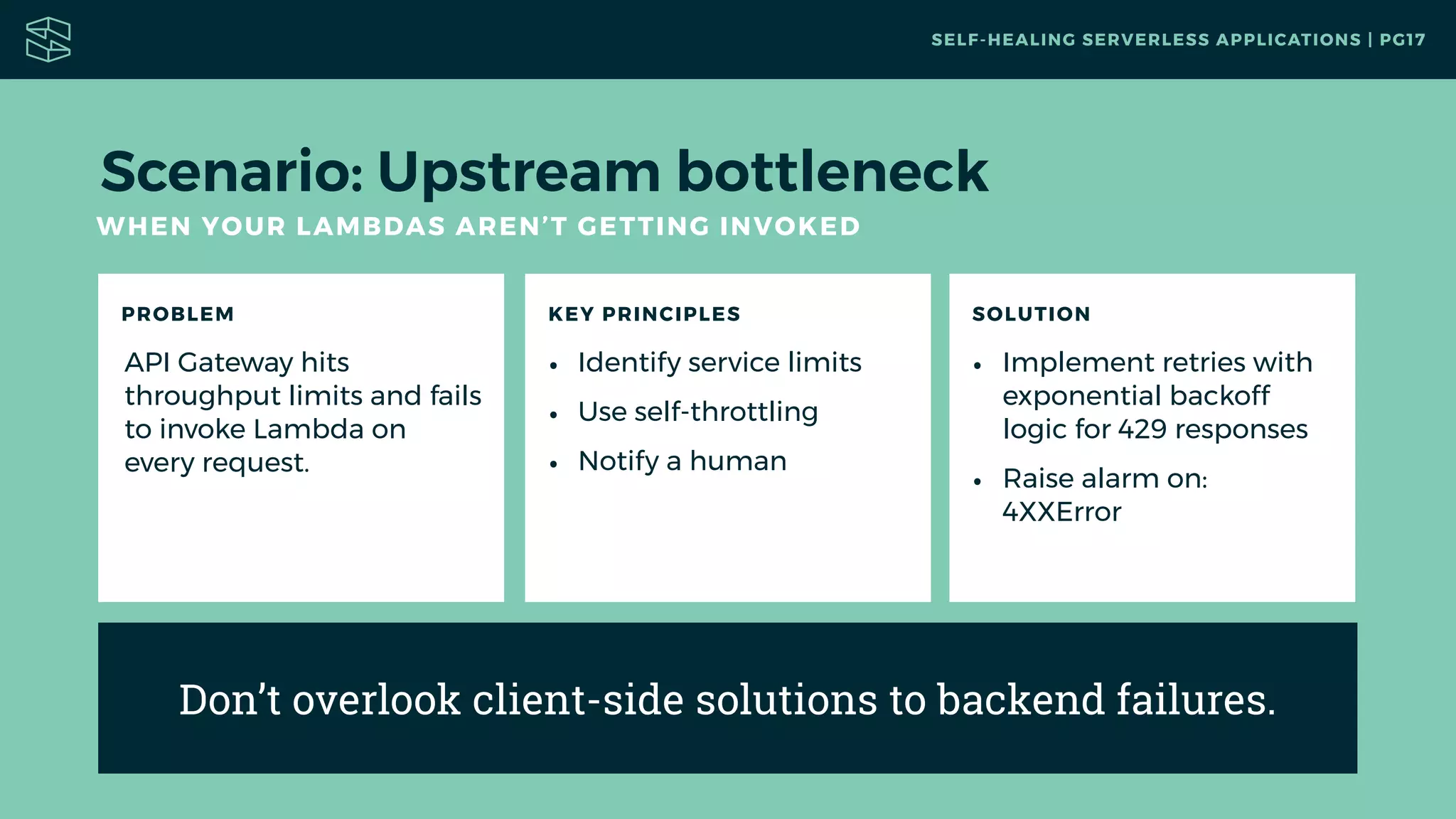

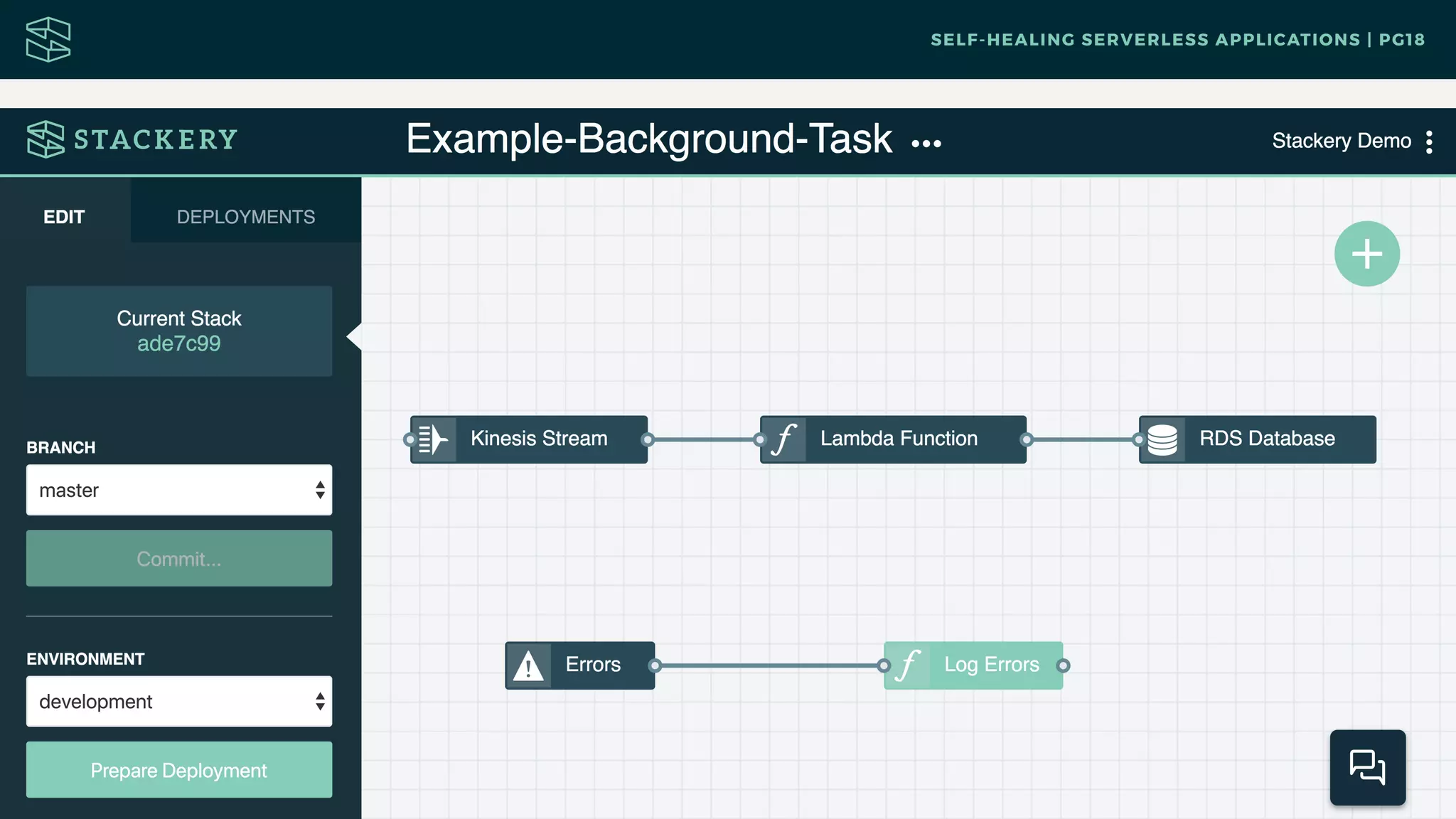

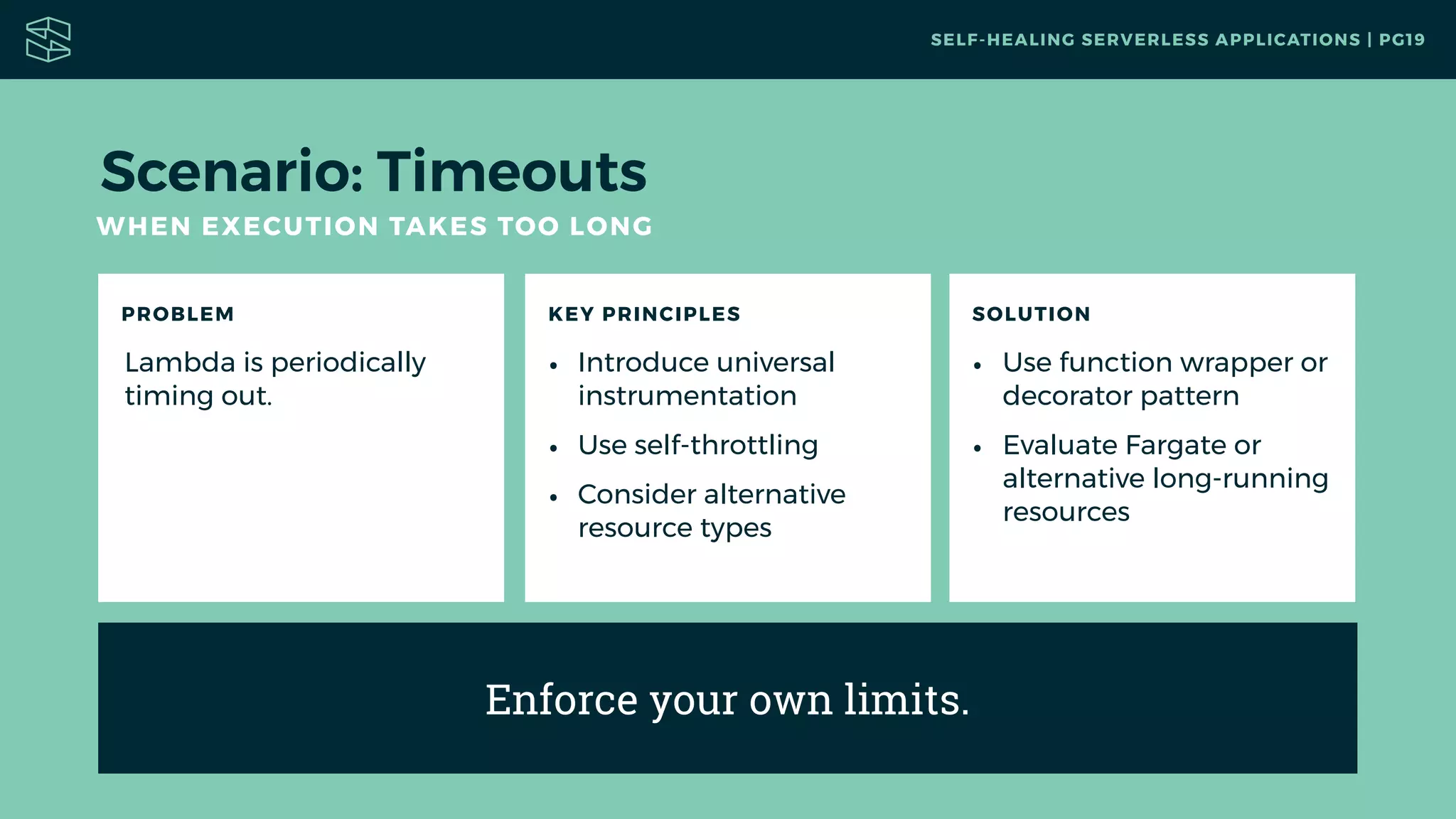

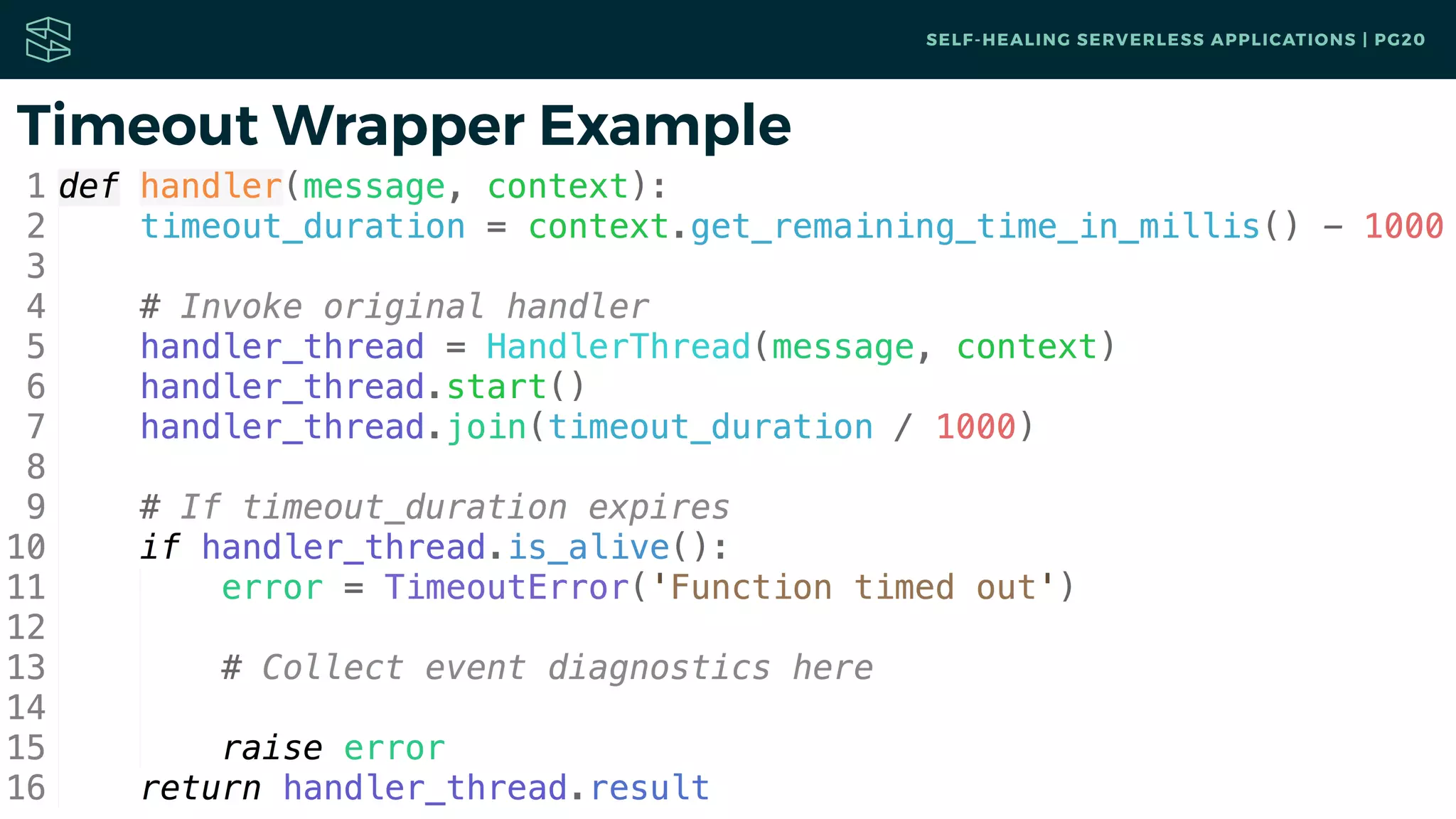

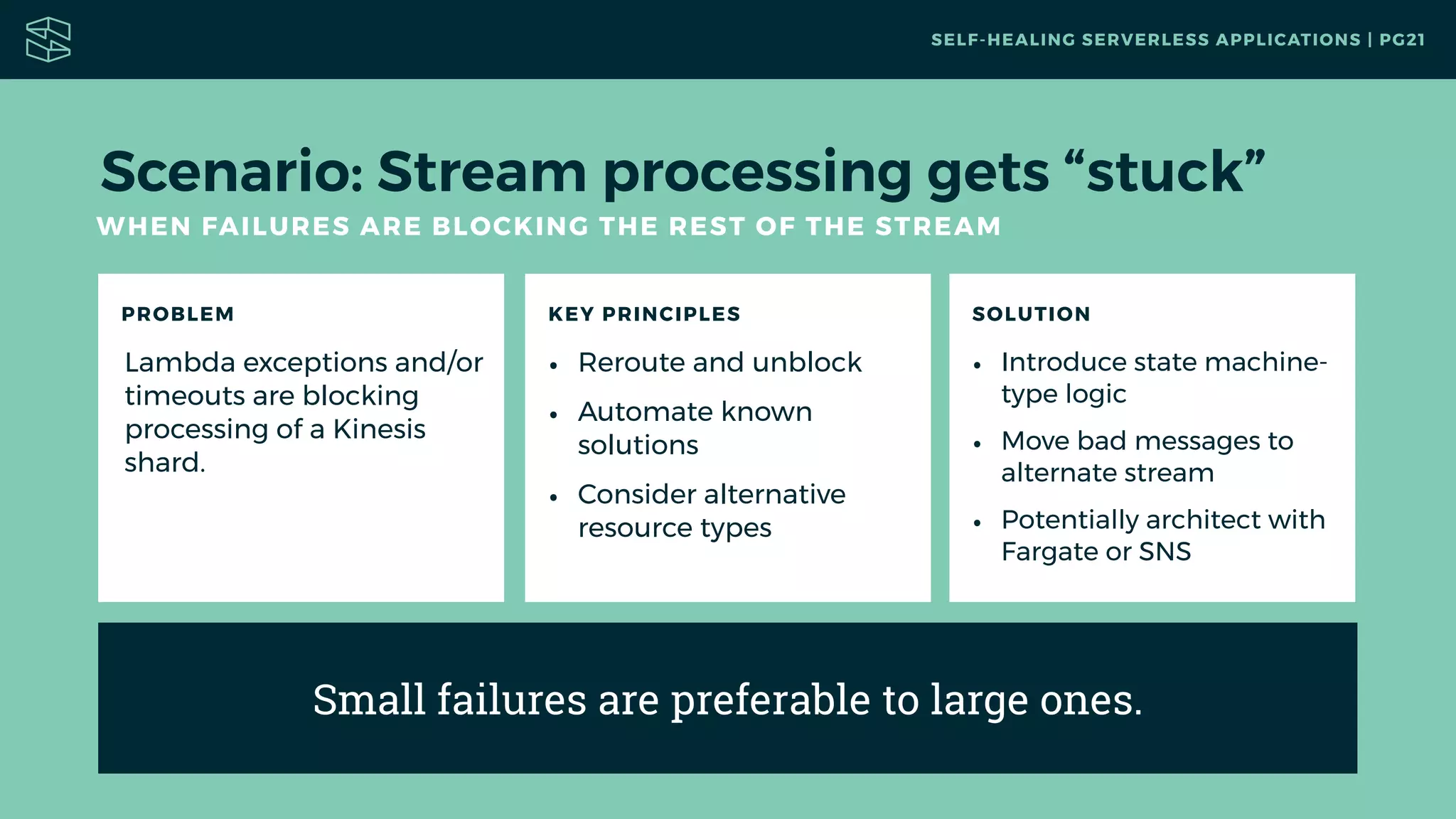

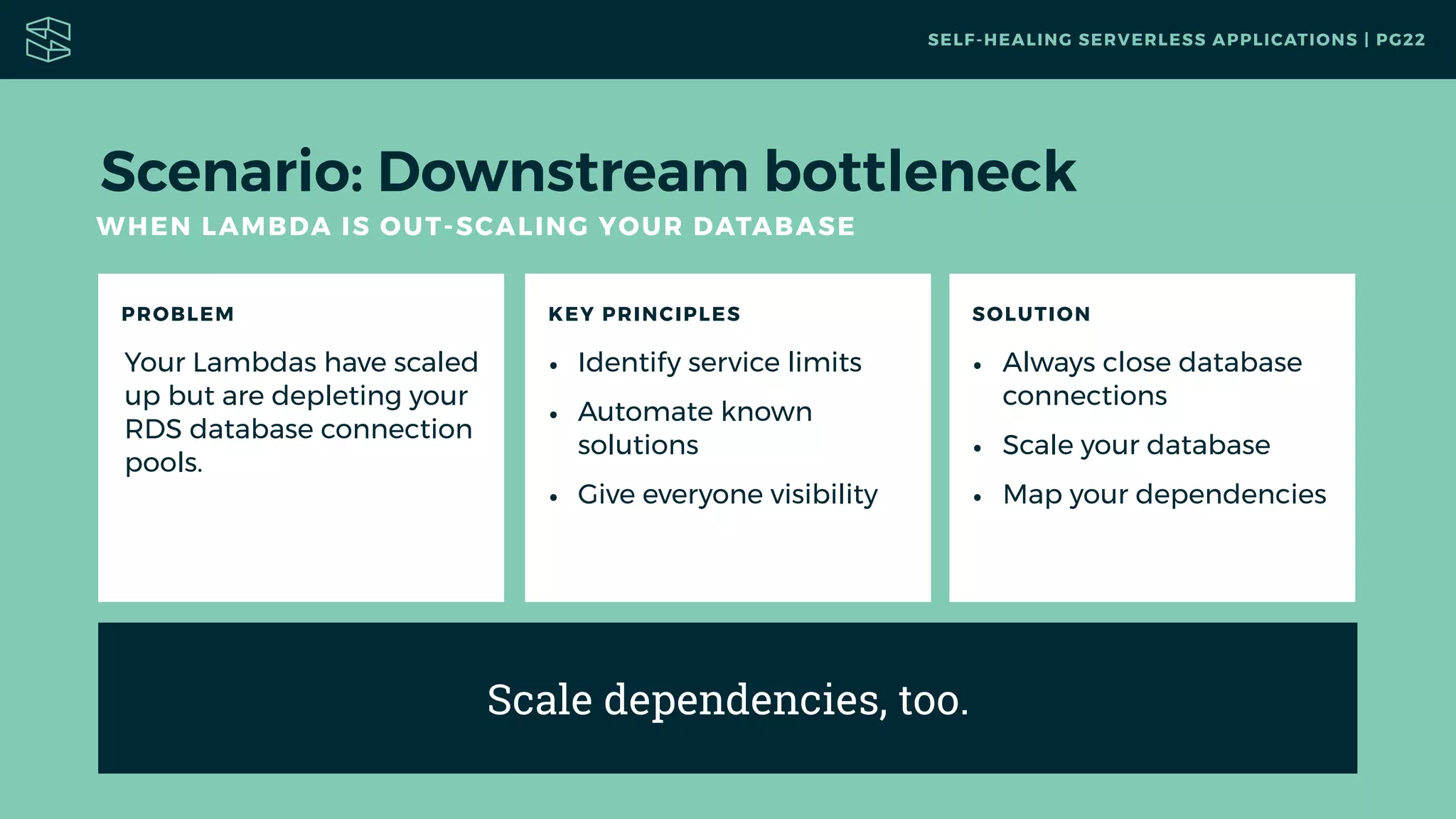

This document discusses self-healing serverless applications. It begins by outlining common serverless failures like runtime errors, timeouts, bad states, and scaling issues. It then presents design principles for building resilient systems like standardizing failure handling, learning from failures, and planning for failures. Several scenarios are presented, like uncaught exceptions, upstream bottlenecks, timeouts, blocked streams, and downstream bottlenecks. For each, it outlines the problem, key principles, and example solutions like adding instrumentation, retries, timeouts, moving messages, and scaling dependencies. The overall message is that applications need to be designed to gracefully handle failures.