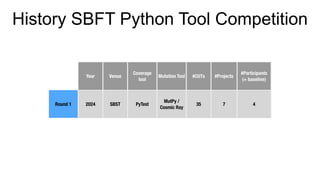

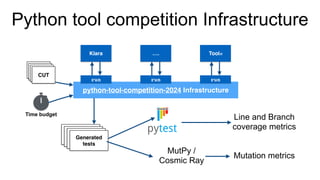

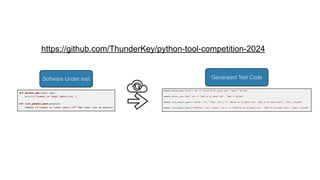

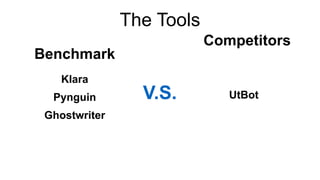

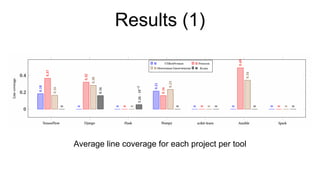

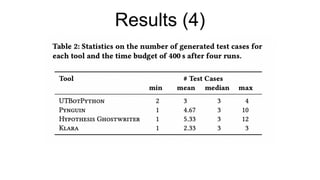

The 2024 Python Tool Competition invites researchers to enter their test generation tools, which are compared on the code coverage and mutation scores of the tests they generate. The tools are evaluated on benchmarks built from open-source projects, with criteria focused on code accessibility and execution environment. Lessons learned point to opportunities for improved infrastructure and for greater participation from both academia and industry in future contests.