This document is a quick reference guide to interoperability between IBM Flex System components, covering:

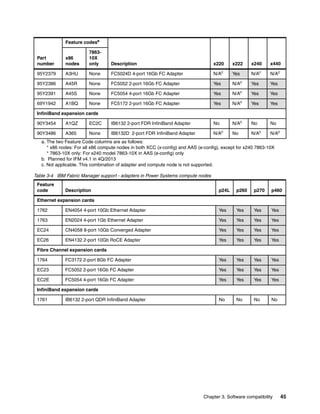

- Compatibility between chassis, switches, adapters, transceivers, power supplies, and racks

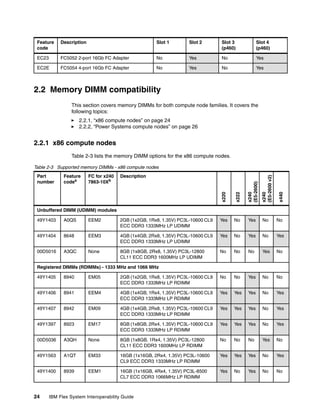

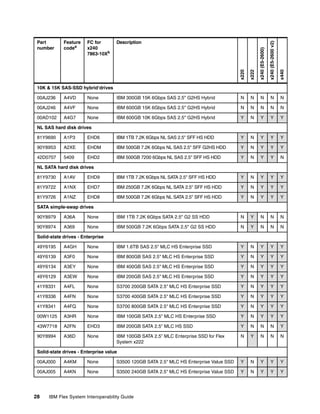

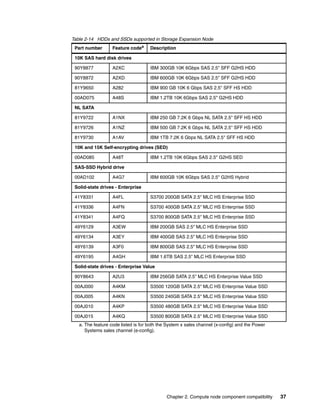

- Compatibility between compute nodes, expansion nodes, memory, storage, I/O adapters, and software

- Storage interoperability with unified NAS storage, FCoE, iSCSI, NPIV, and Fibre Channel

The guide is current as of November 2013 and is intended to help you confirm that components work together in a Flex System environment.