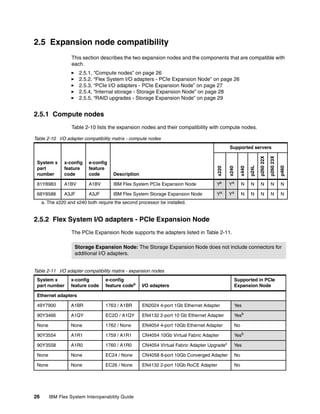

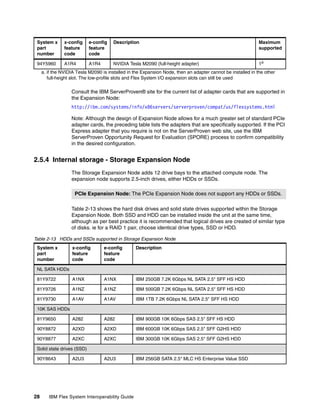

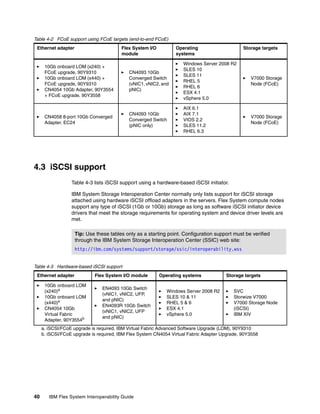

The IBM Flex System Interoperability Guide covers the internal components and external connectivity of IBM Flex System hardware, including the PureFlex System and multiple compute nodes. It details chassis interoperability, compute node compatibility, and software compatibility, with updates as of January 2013. The guide serves as a technical reference for ensuring proper integration and functionality within IBM Flex System environments.