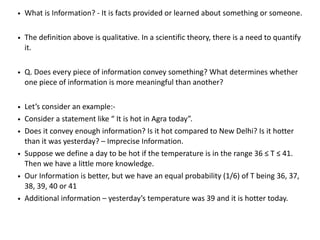

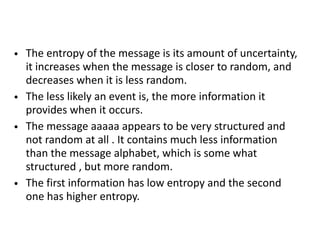

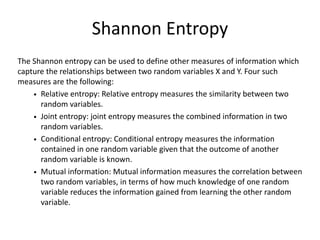

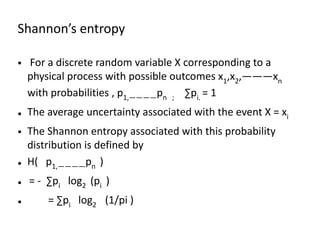

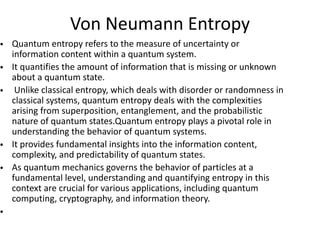

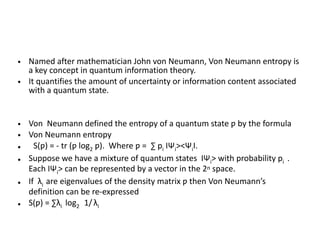

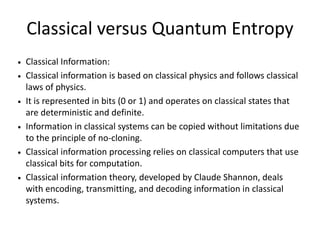

The document discusses information and entropy in both classical and quantum settings, emphasizing the role of measurement in information theory. It highlights Shannon entropy and von Neumann entropy as the key measures of information content. Shannon entropy applies to probability distributions over classical outcomes, while von Neumann entropy extends the idea to quantum states described by density matrices. The document also contrasts classical information, in which a system is always in a definite state, with quantum information, which is governed by superposition and measurement uncertainty.
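To make the two measures concrete, here is a minimal sketch in Python. It uses the standard definitions $H(p) = -\sum_i p_i \log_2 p_i$ and $S(\rho) = -\operatorname{Tr}(\rho \log_2 \rho)$, and computes $S$ as the Shannon entropy of the eigenvalues of $\rho$. It assumes a probability distribution is passed as a list and that the quantum state is a single-qubit (2x2) density matrix given as nested lists. The function names are hypothetical illustrations, not taken from the document.

```python
import math


def shannon_entropy(probs):
    """Shannon entropy H(p) = -sum_i p_i log2 p_i, in bits."""
    if any(p < 0 for p in probs) or not math.isclose(sum(probs), 1.0, abs_tol=1e-9):
        raise ValueError("probs must be a probability distribution")
    # Terms with p_i = 0 are skipped, following the convention 0 * log 0 = 0.
    return sum(-p * math.log2(p) for p in probs if p > 0)


def qubit_eigenvalues(rho):
    """Closed-form eigenvalues of a 2x2 Hermitian matrix [[a, b], [conj(b), d]]."""
    a = rho[0][0].real
    d = rho[1][1].real
    b = rho[0][1]
    mean = (a + d) / 2
    radius = math.sqrt(((a - d) / 2) ** 2 + abs(b) ** 2)
    return [mean + radius, mean - radius]


def von_neumann_entropy_qubit(rho):
    """S(rho) = -Tr(rho log2 rho), i.e. the Shannon entropy of rho's eigenvalues."""
    # Clamp tiny negative values caused by floating-point rounding.
    eigs = [max(0.0, e) for e in qubit_eigenvalues(rho)]
    return shannon_entropy(eigs)
```

As a usage example, the pure state $|+\rangle\langle+|$ is `[[0.5, 0.5], [0.5, 0.5]]`. Measuring it in the computational basis gives outcomes 0 and 1 with probability 0.5 each, so `shannon_entropy([0.5, 0.5])` is 1 bit. Yet `von_neumann_entropy_qubit` returns 0 because the state itself is pure. The maximally mixed state `[[0.5, 0], [0, 0.5]]` has 1 bit under both measures. This gap between the entropy of a measurement record and the entropy of the state is one way to see why measurement matters in the quantum case.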