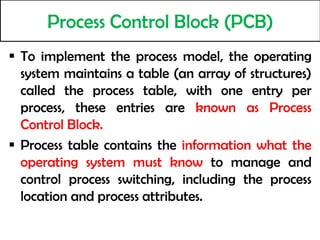

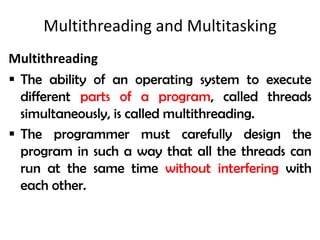

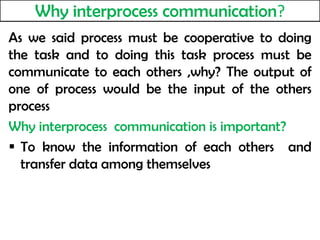

The document explains the concept of Process Control Block (PCB) and threads in operating systems, detailing their structures, functions, and differences. It highlights the significance of multithreading and multitasking, describes context switching, interprocess communication, and issues like race conditions in concurrent programming. Additionally, it touches on process scheduling, critical sections, and methods to avoid race conditions to ensure smooth operation and resource sharing among processes.