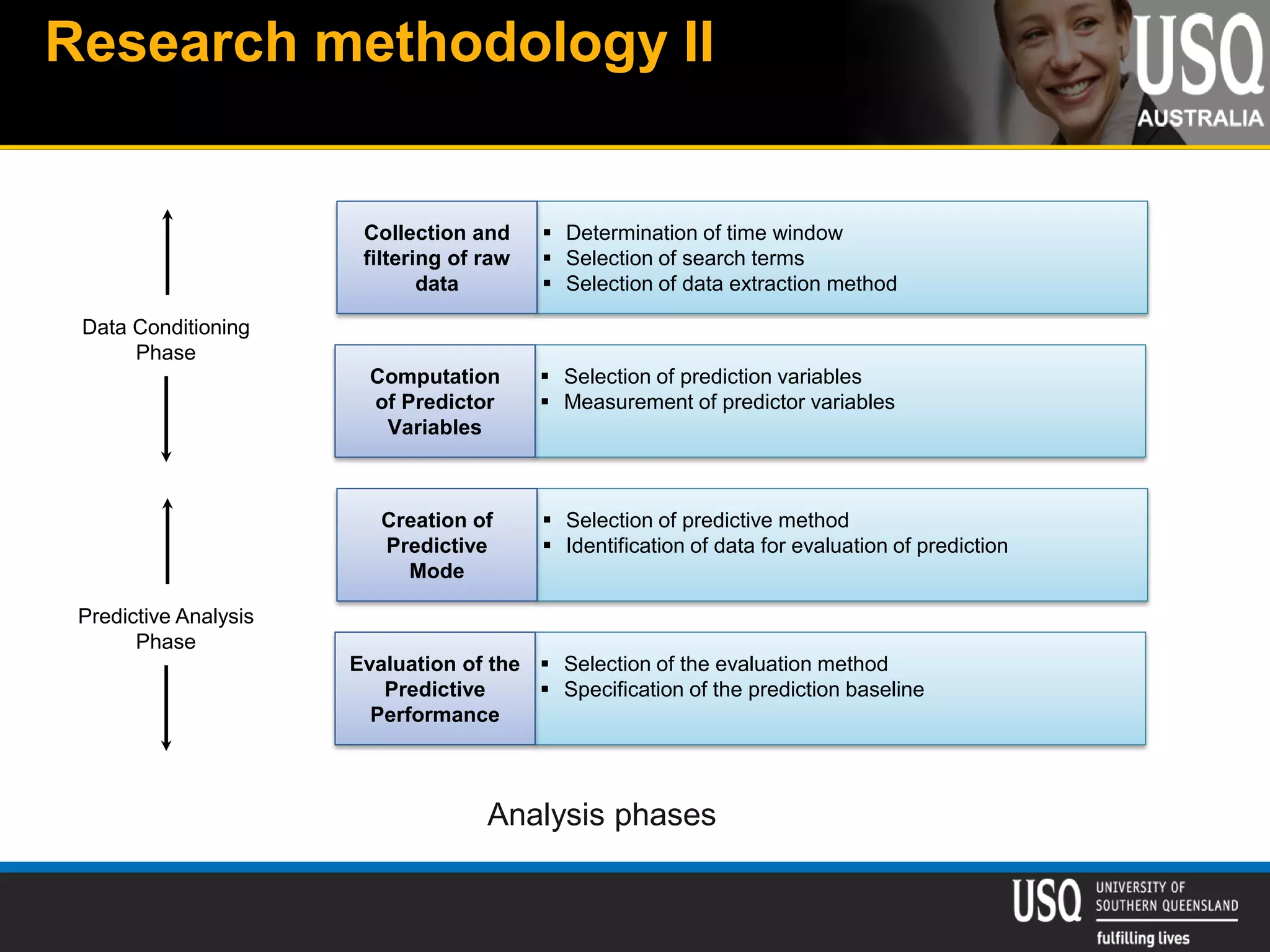

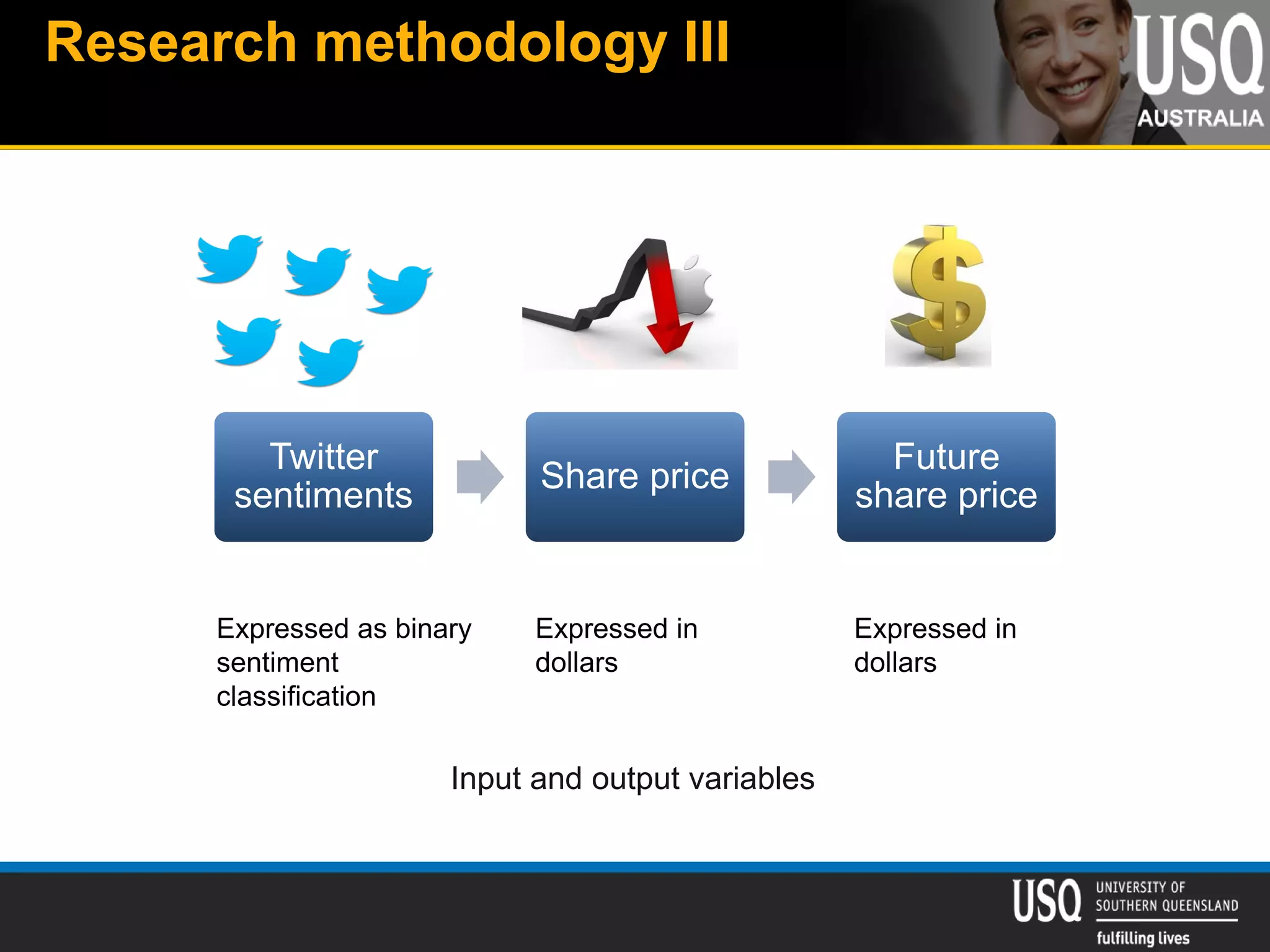

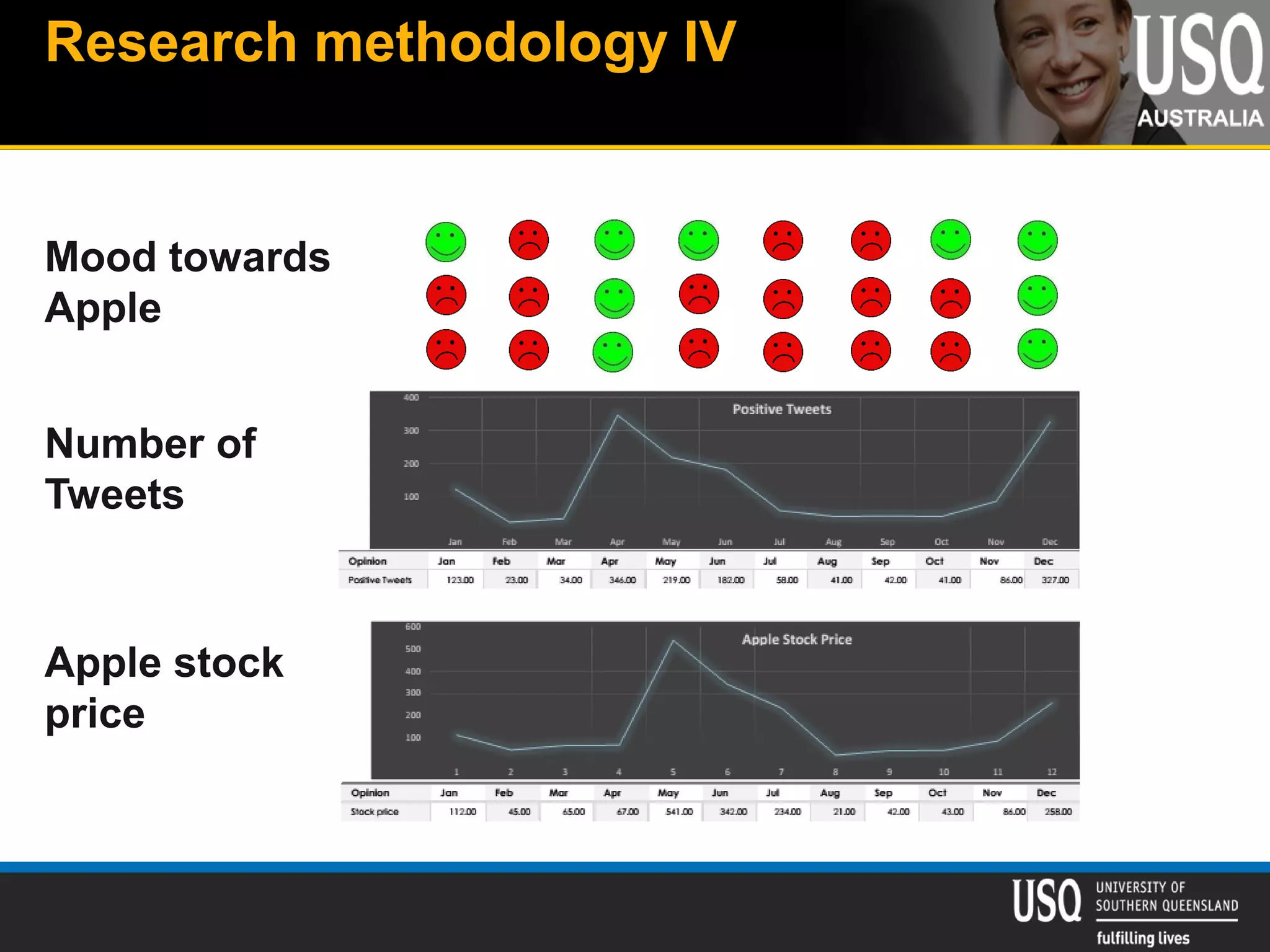

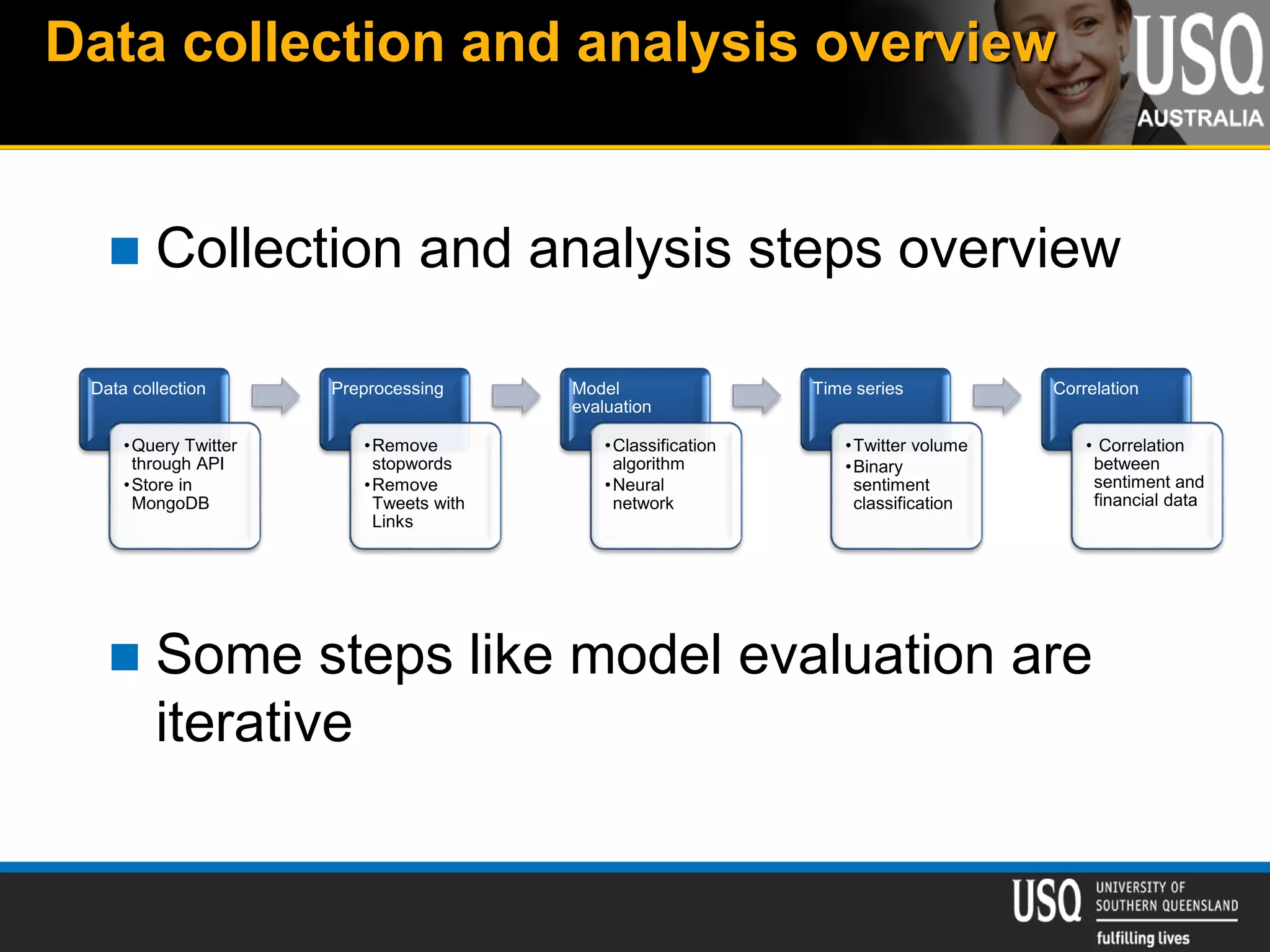

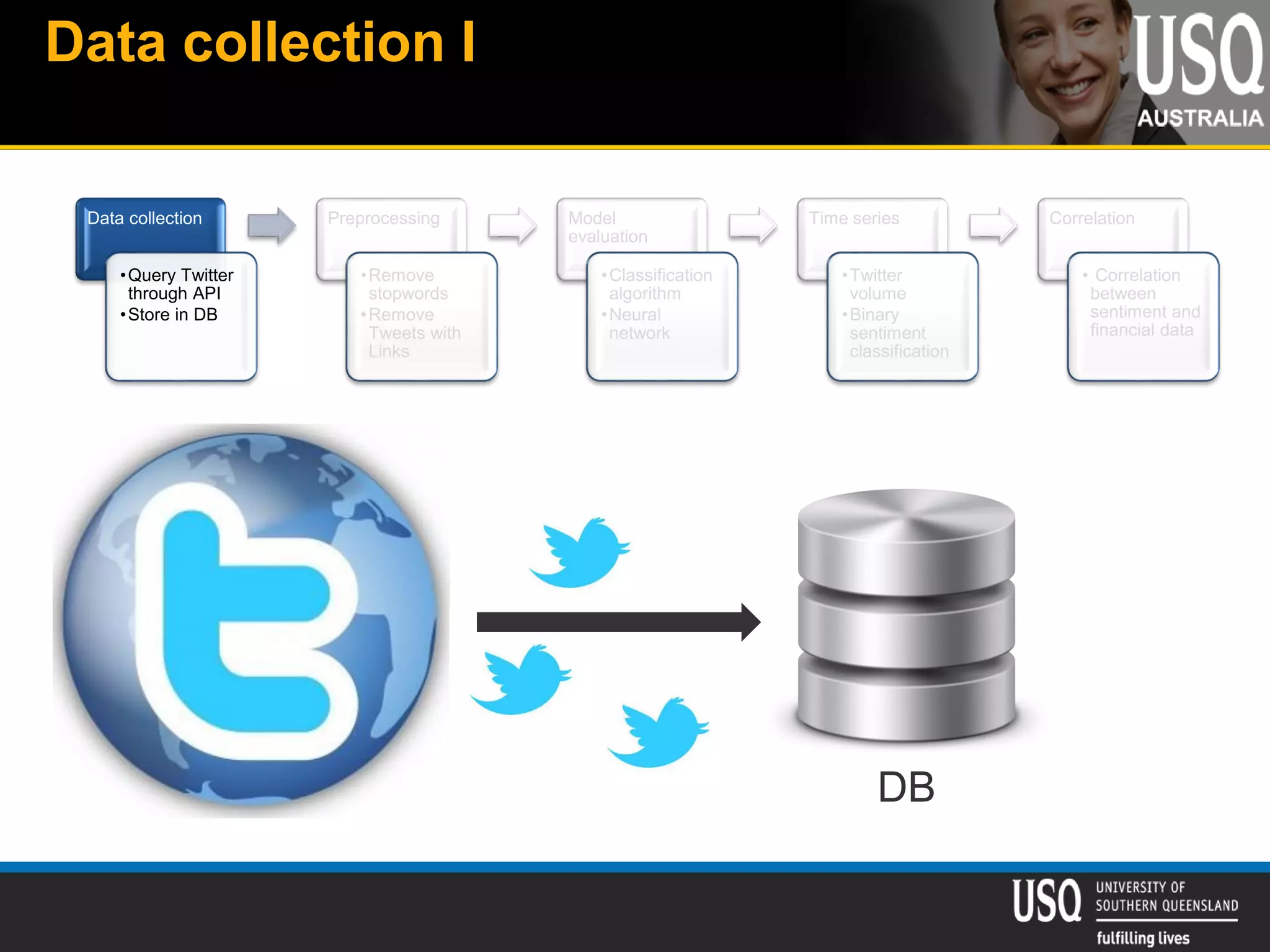

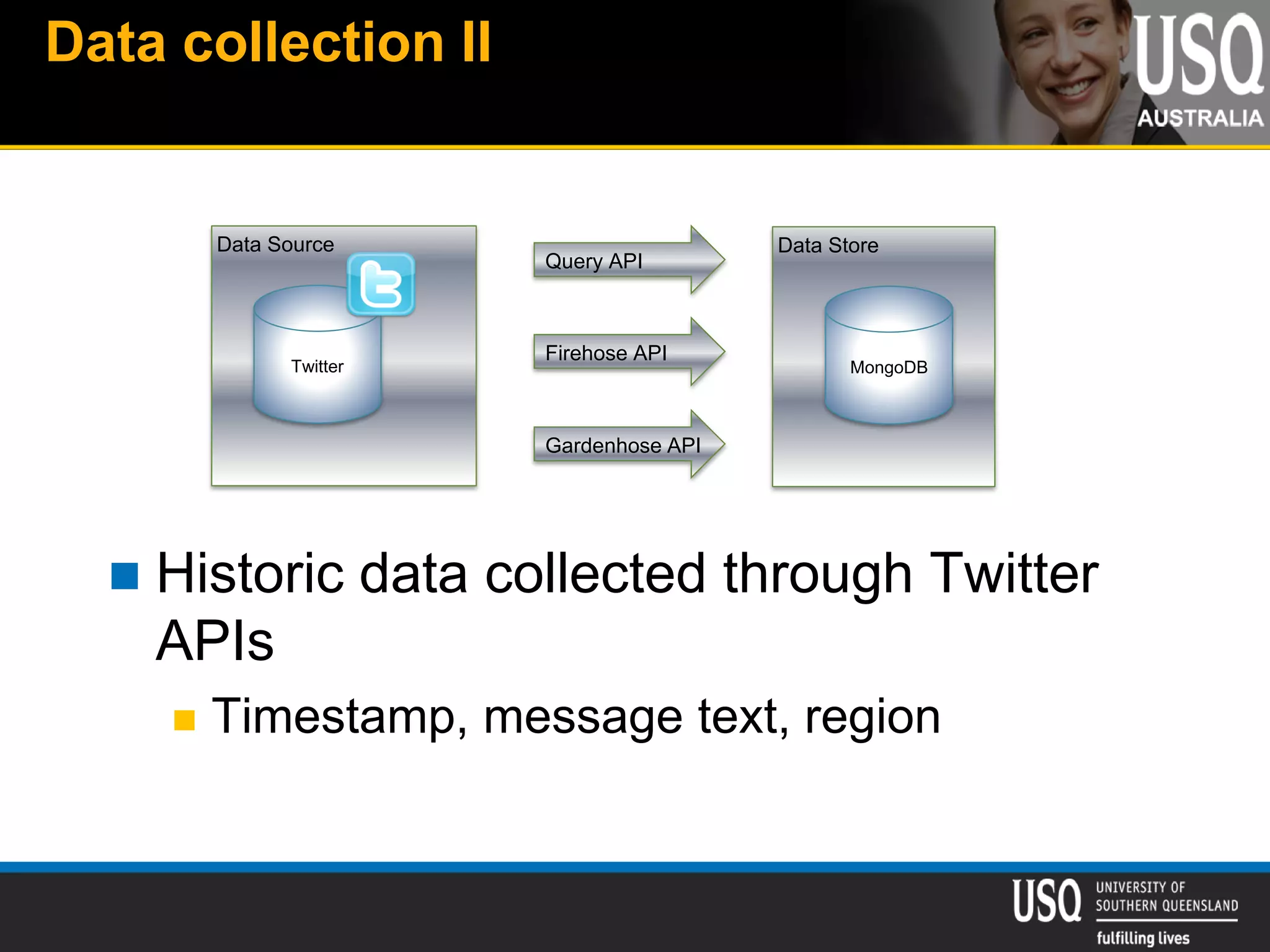

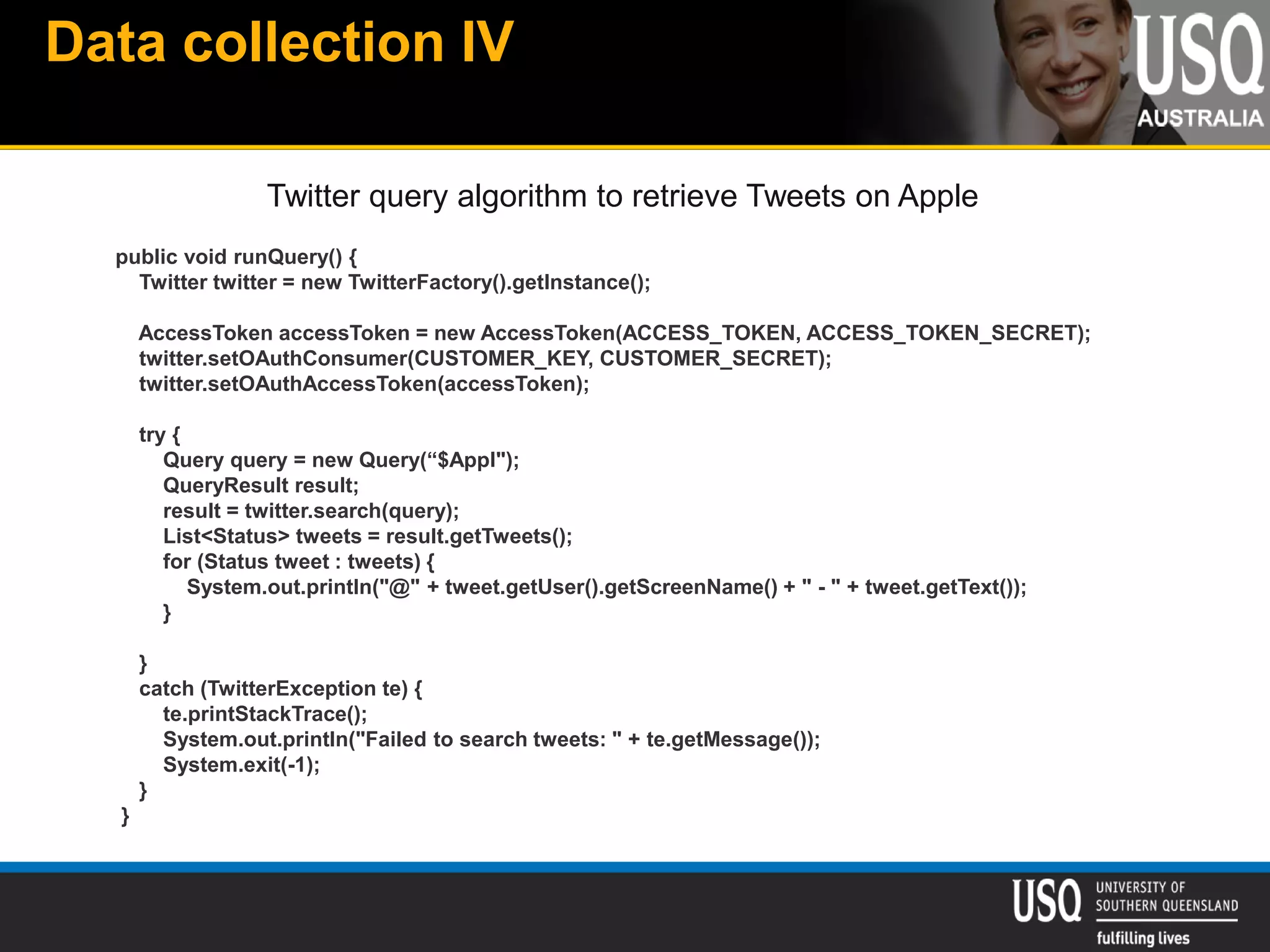

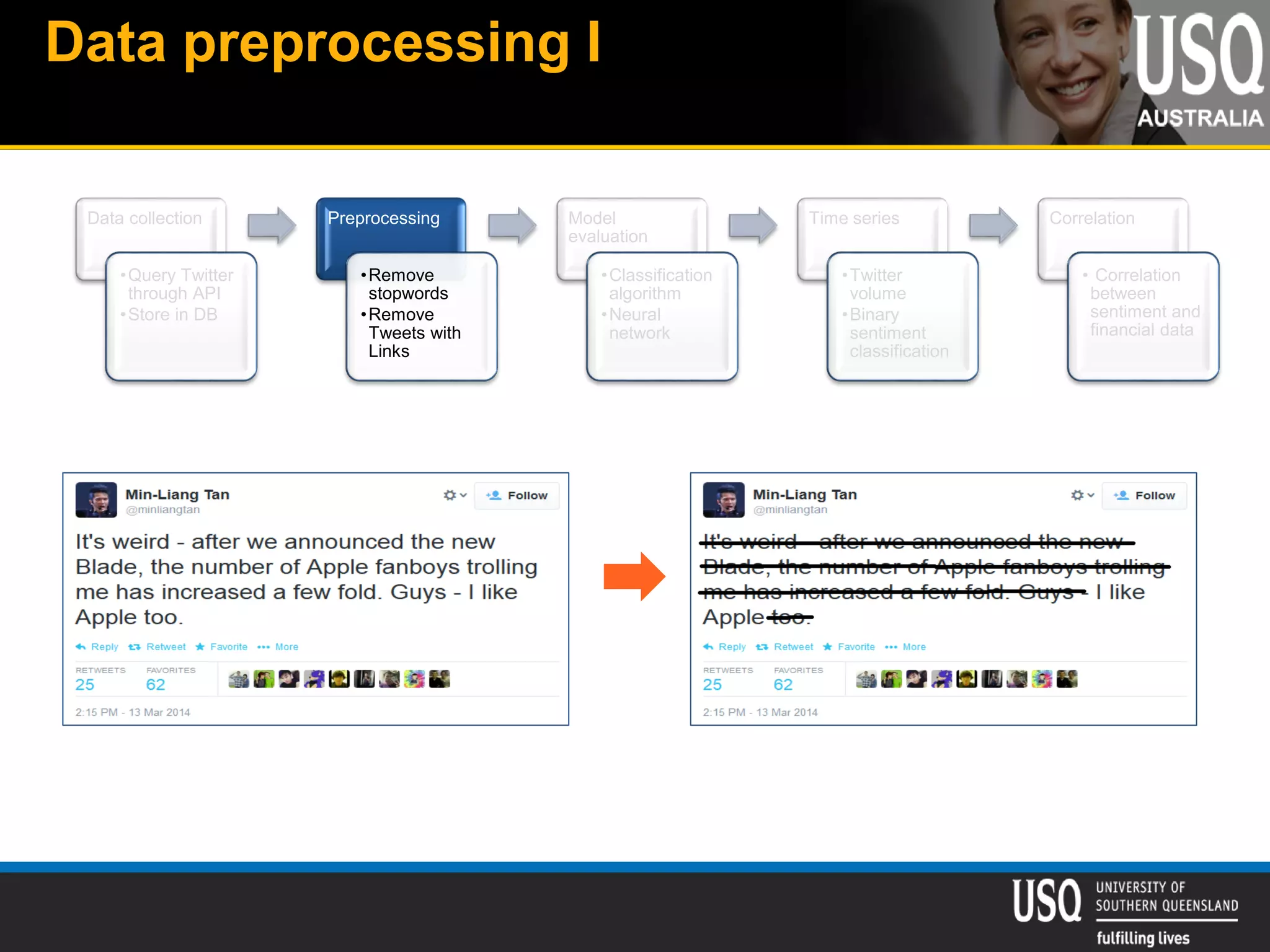

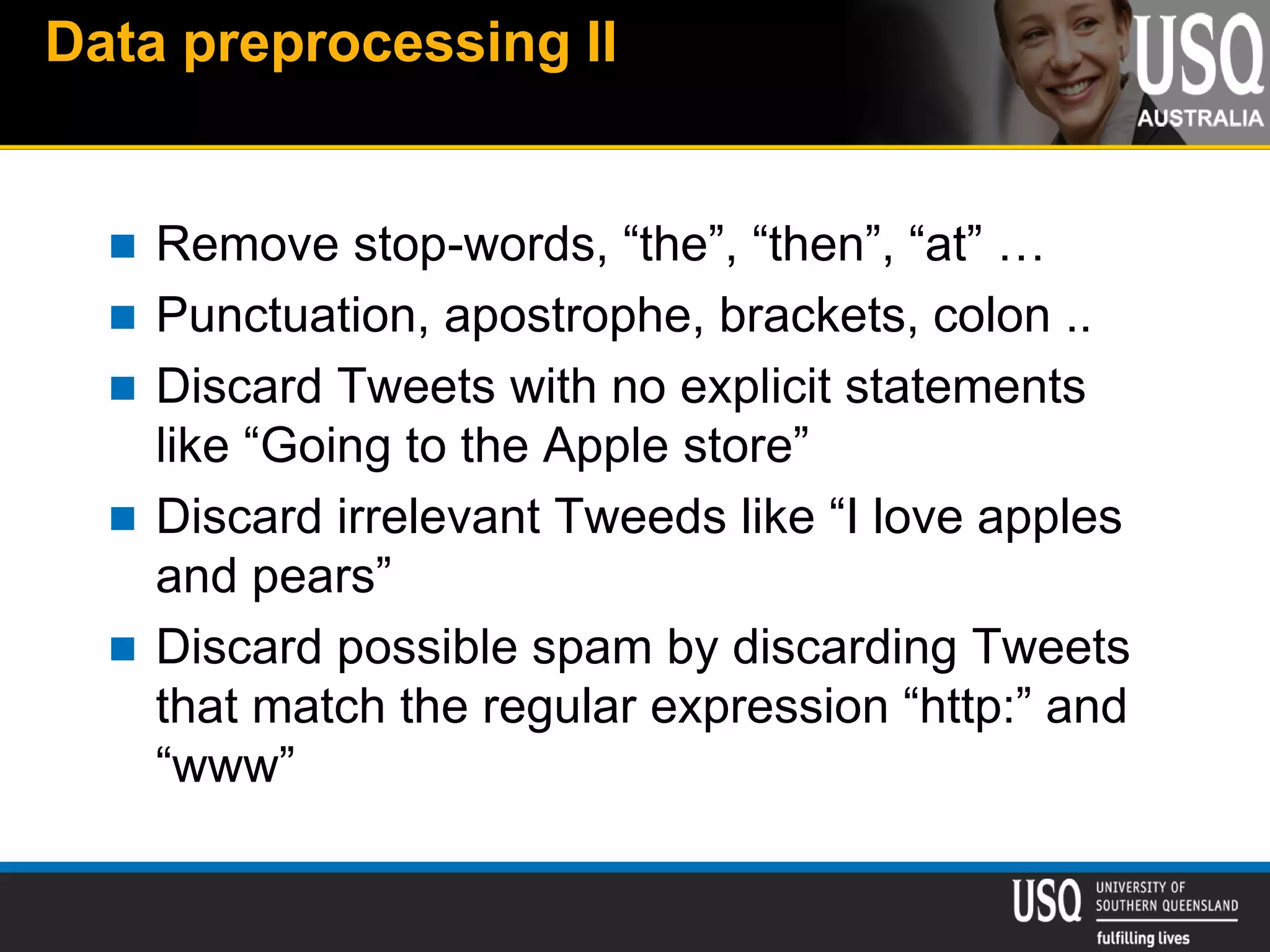

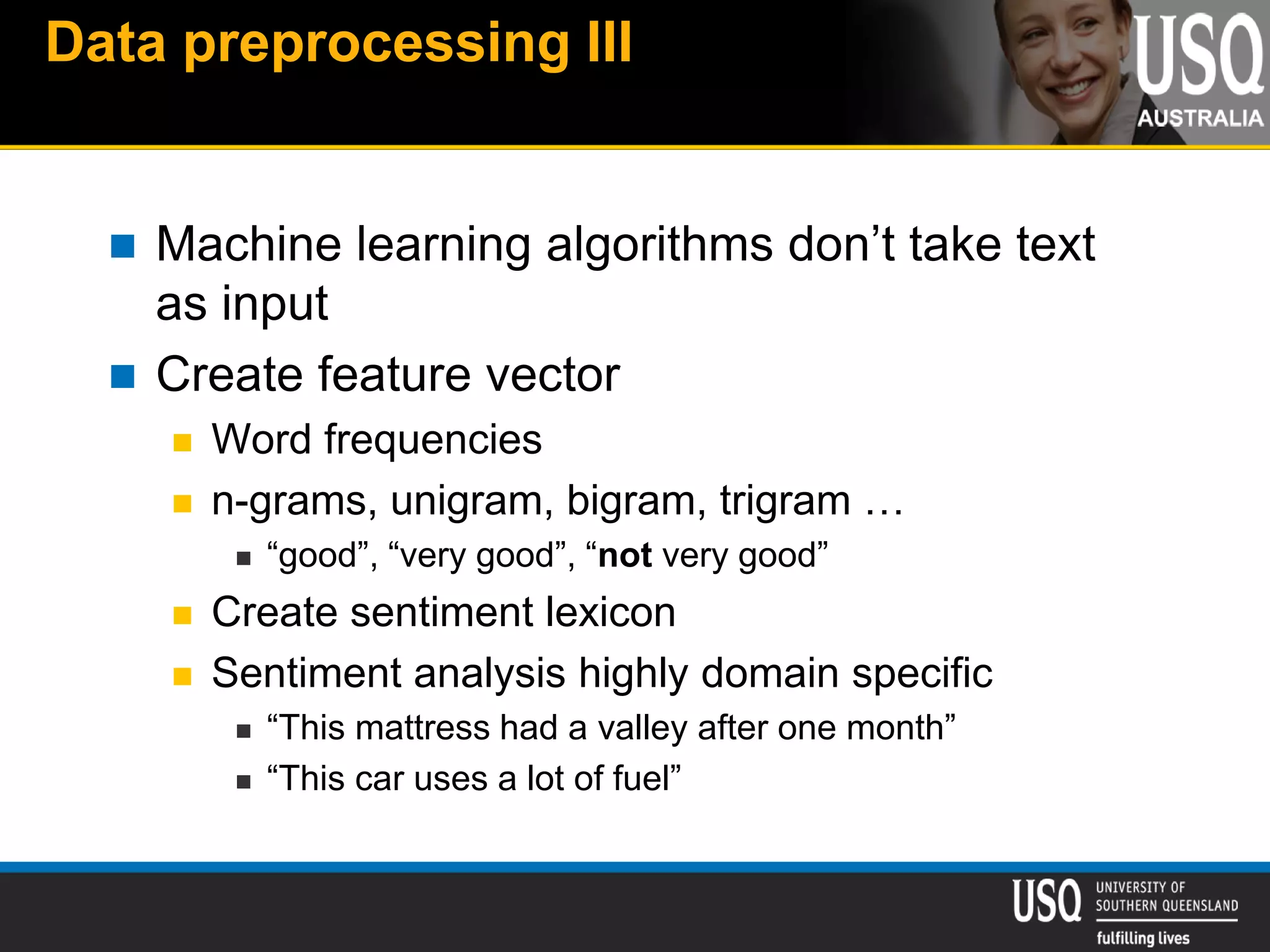

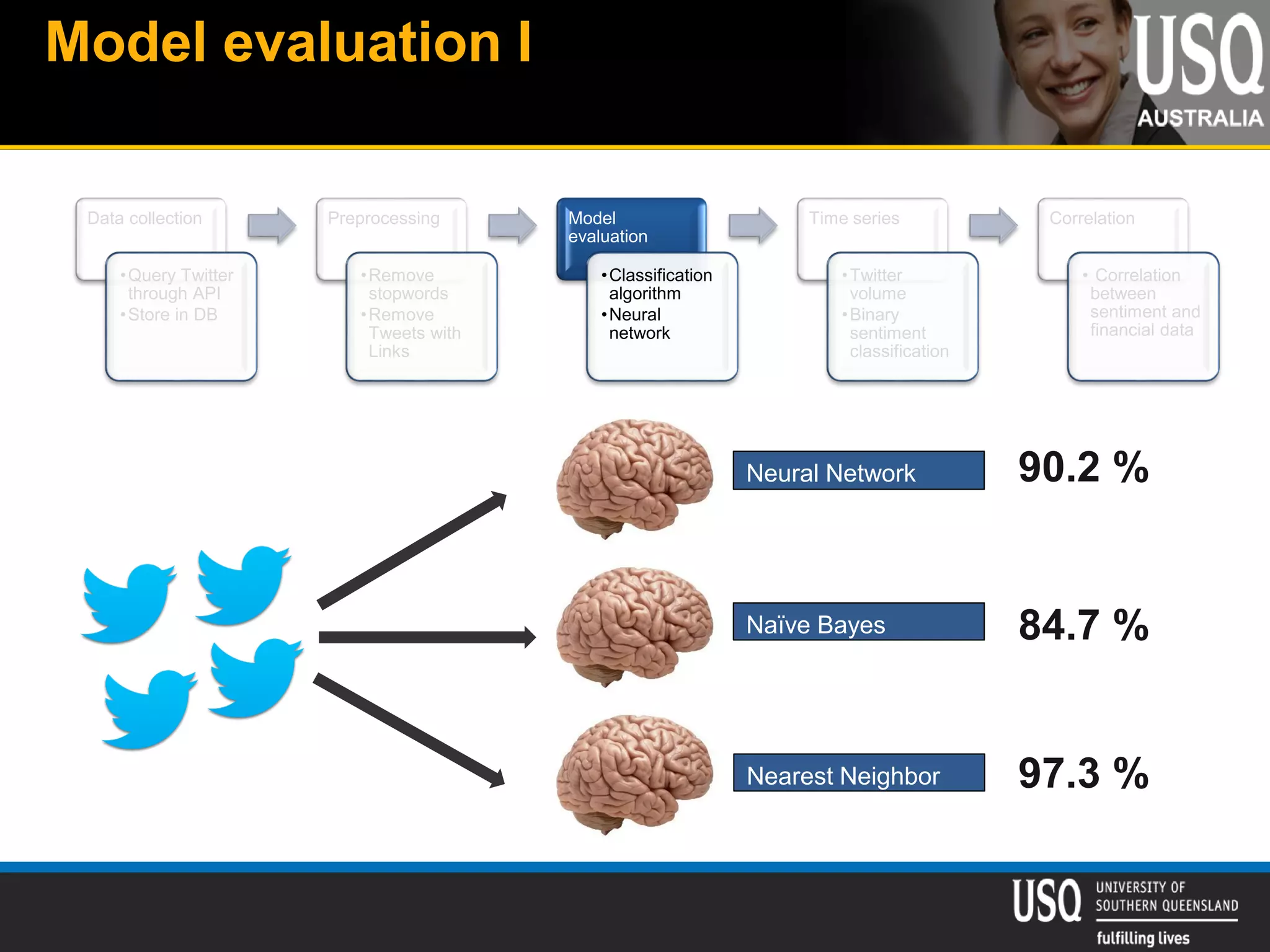

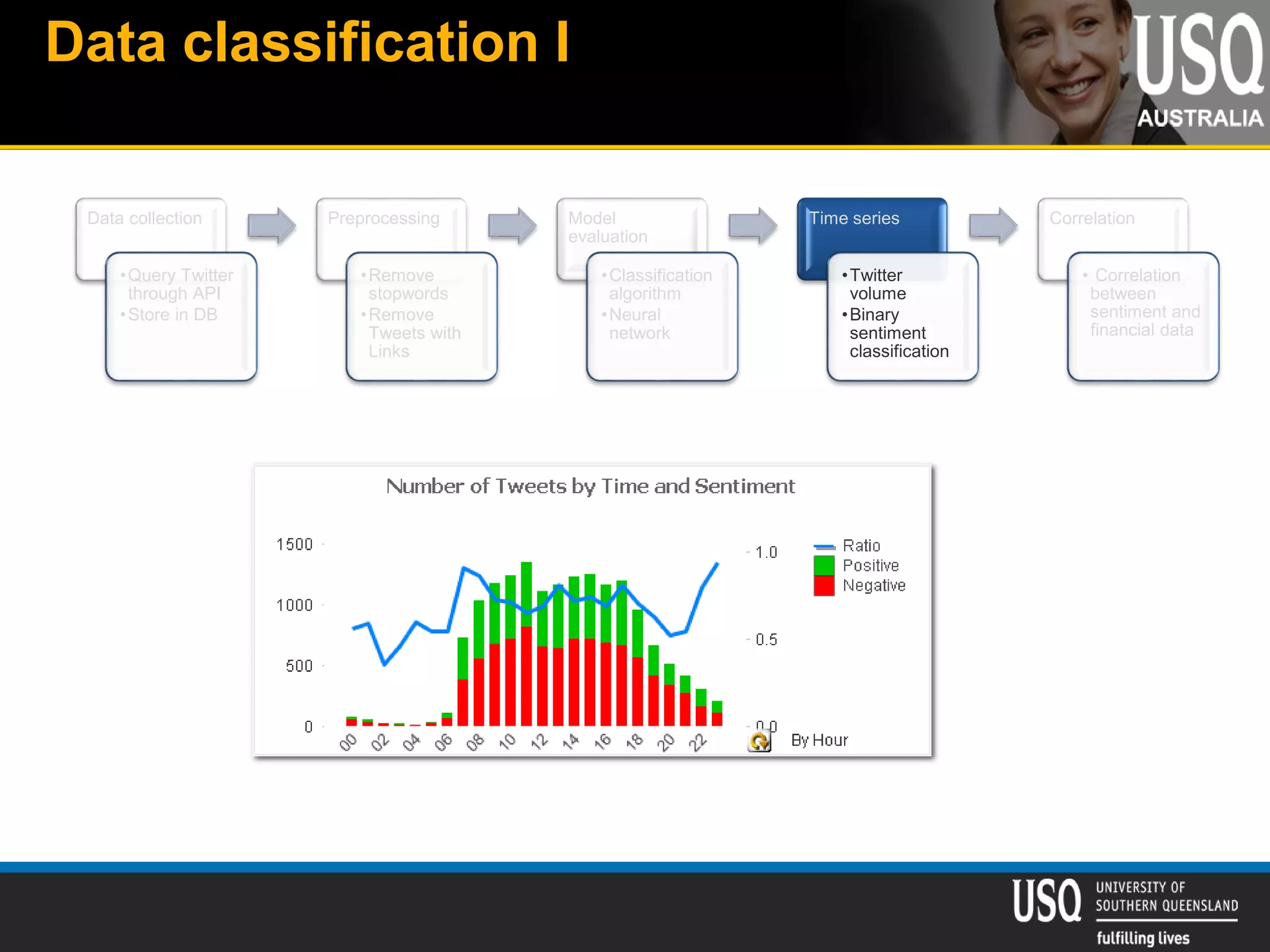

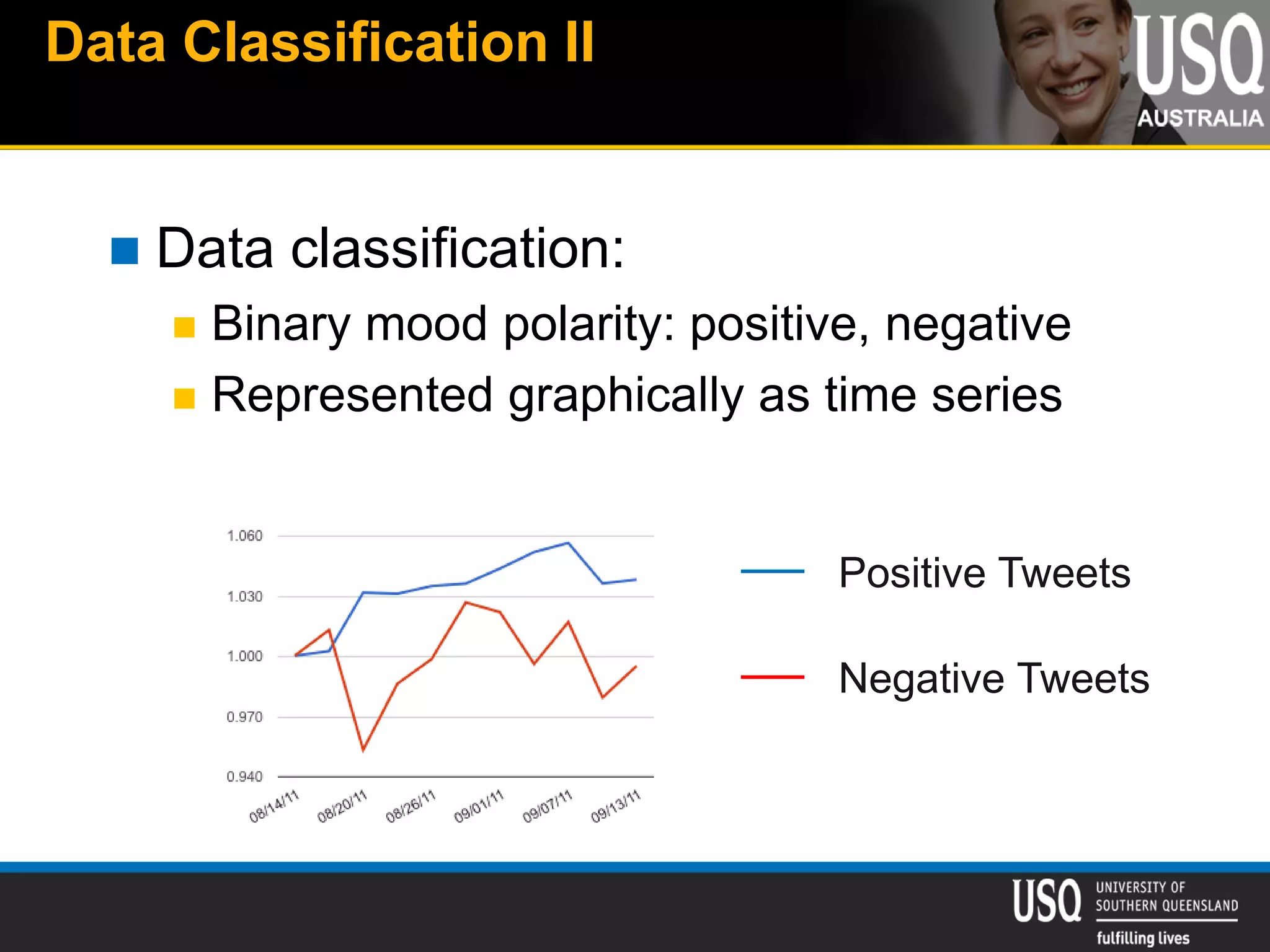

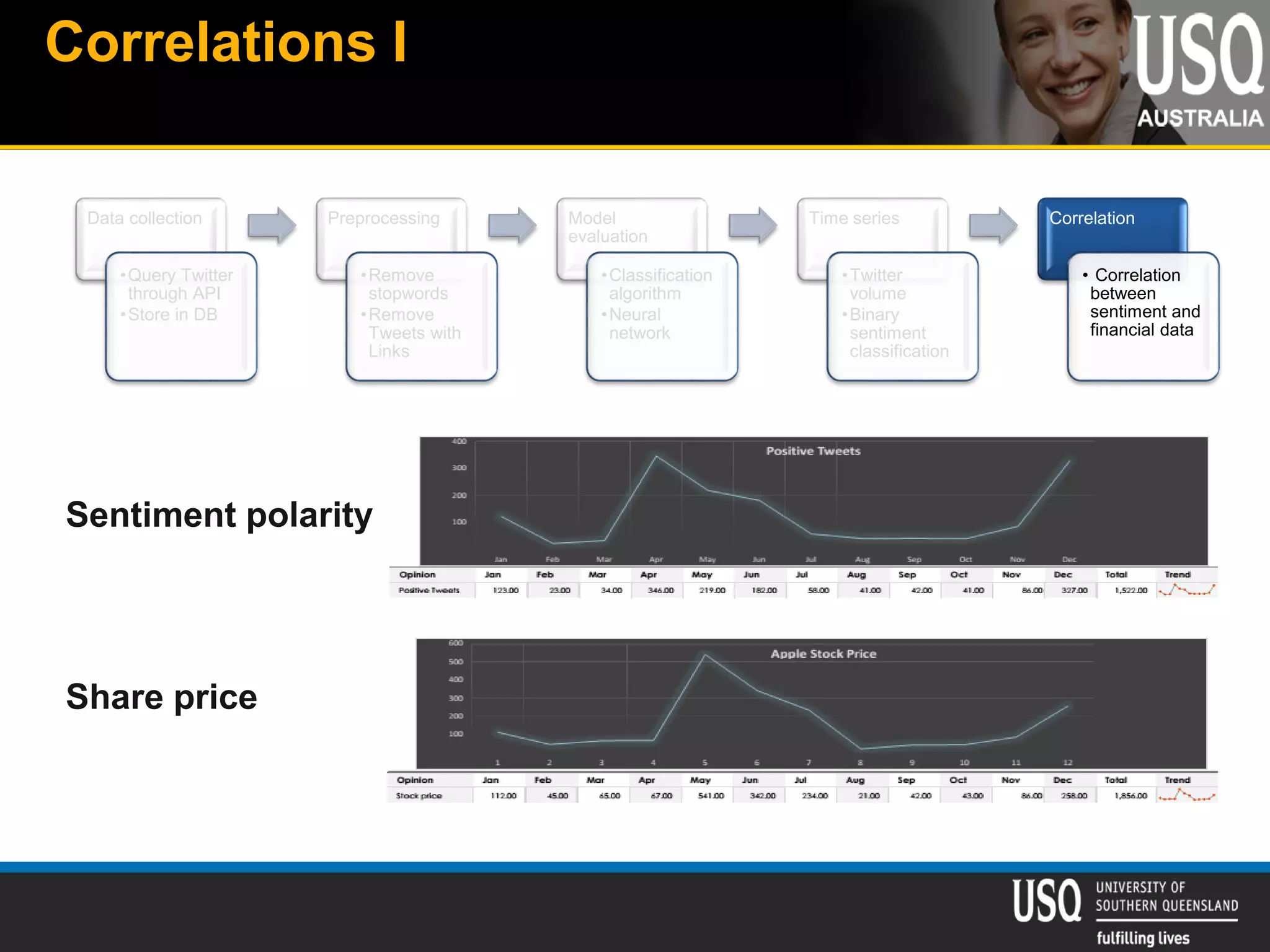

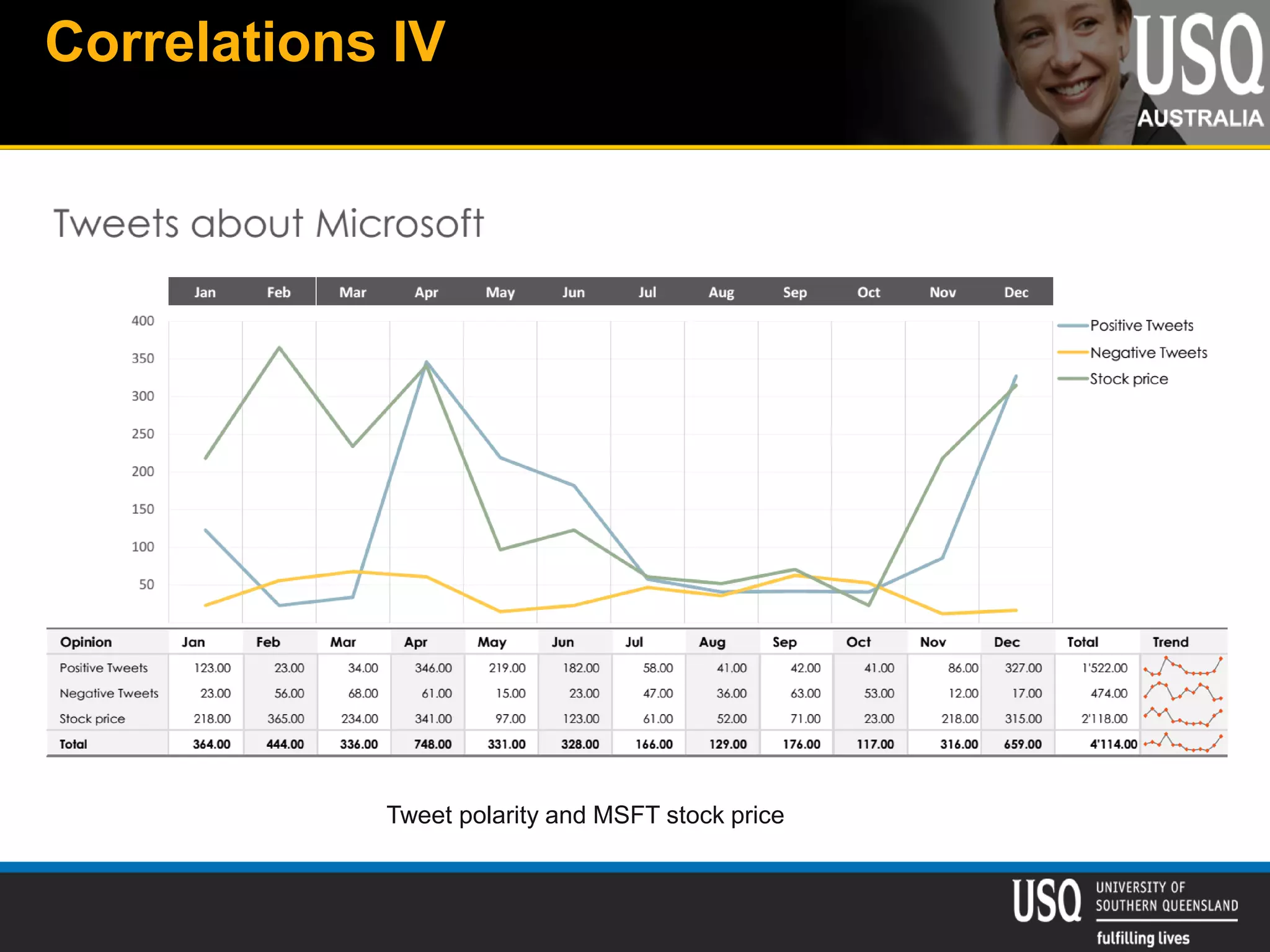

The document discusses the future of social media data analysis, focusing on predictive analytics with Twitter data. It outlines the shift from publisher-generated to user-generated content, highlights the challenges of data quality and analysis in social media, and emphasizes machine learning techniques for prediction. It also details methods for data collection, preprocessing, and model evaluation, underscoring the growing importance of computational social science for extracting insights from large datasets.
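The summary names preprocessing and model evaluation but does not spell out the steps. The following Python sketch is illustrative only and assumes common choices: lowercasing, stripping URLs and @mentions, keeping hashtag words, and scoring binary predictions with precision, recall, and F1. These specific steps and the hypothetical function names `preprocess_tweet` and `precision_recall_f1` are assumptions. They are not taken from the source slides.

```python
import re
from typing import List, Sequence, Tuple

# Assumed normalization rules (hypothetical, for illustration only).
URL_RE = re.compile(r"https?://\S+")
MENTION_RE = re.compile(r"@\w+")
TOKEN_RE = re.compile(r"[a-z0-9']+")


def preprocess_tweet(text: str) -> List[str]:
    """Normalize a tweet and split it into lowercase tokens."""
    text = text.lower()
    text = URL_RE.sub(" ", text)      # drop links
    text = MENTION_RE.sub(" ", text)  # drop @user mentions
    text = text.replace("#", " ")     # keep hashtag words, drop the '#'
    return TOKEN_RE.findall(text)


def precision_recall_f1(
    y_true: Sequence[int], y_pred: Sequence[int], positive: int = 1
) -> Tuple[float, float, float]:
    """Score binary predictions against gold labels for one positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


if __name__ == "__main__":
    print(preprocess_tweet("Loving the #Election2024 debate! @user https://t.co/abc"))
    # -> ['loving', 'the', 'election2024', 'debate']
    print(precision_recall_f1([1, 0, 1, 1, 0], [1, 0, 0, 1, 1]))
```

Precision, recall, and F1 are used here instead of plain accuracy because social media labels are often imbalanced. Whether the slides use these metrics is not stated in the summary.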