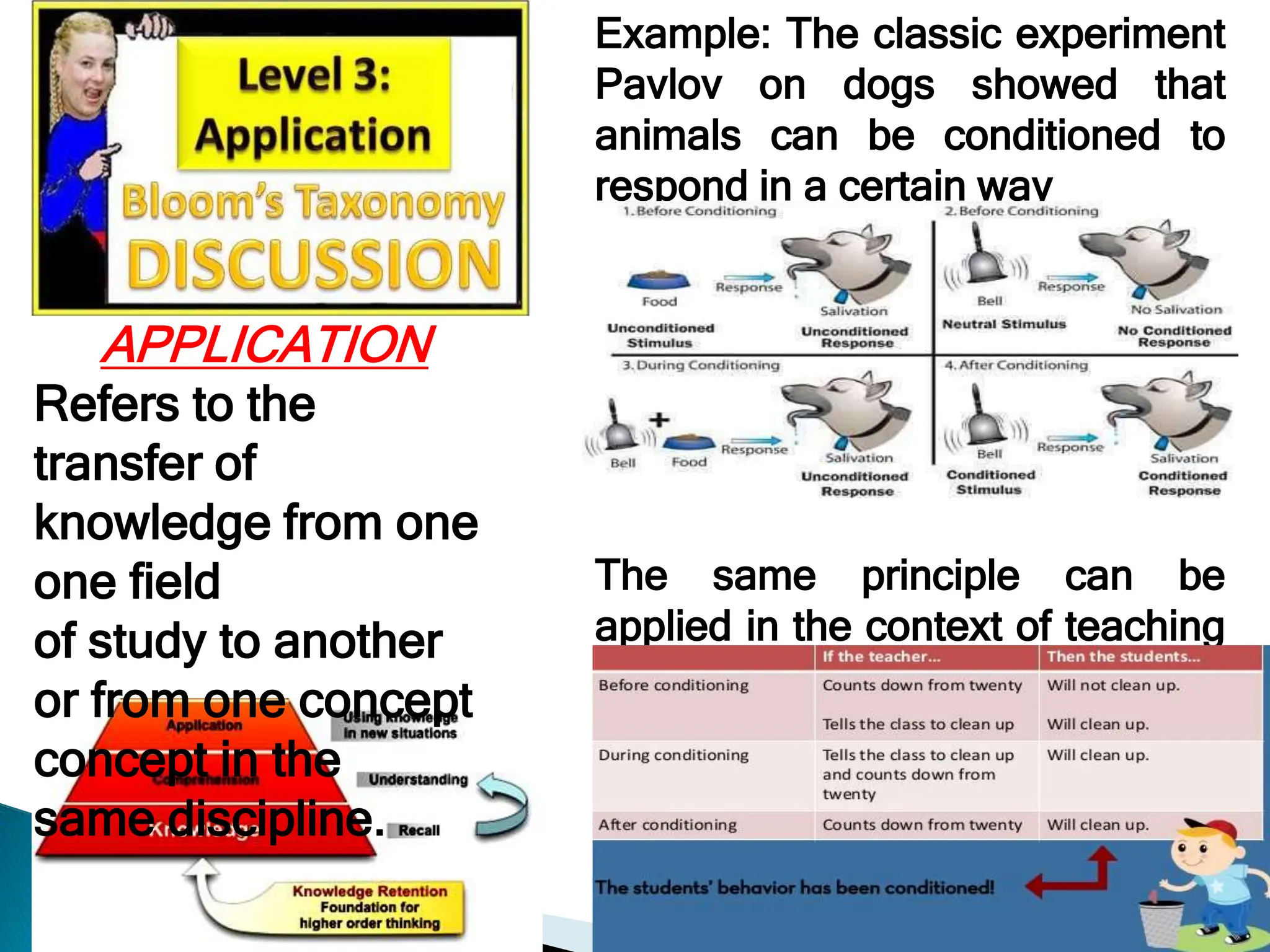

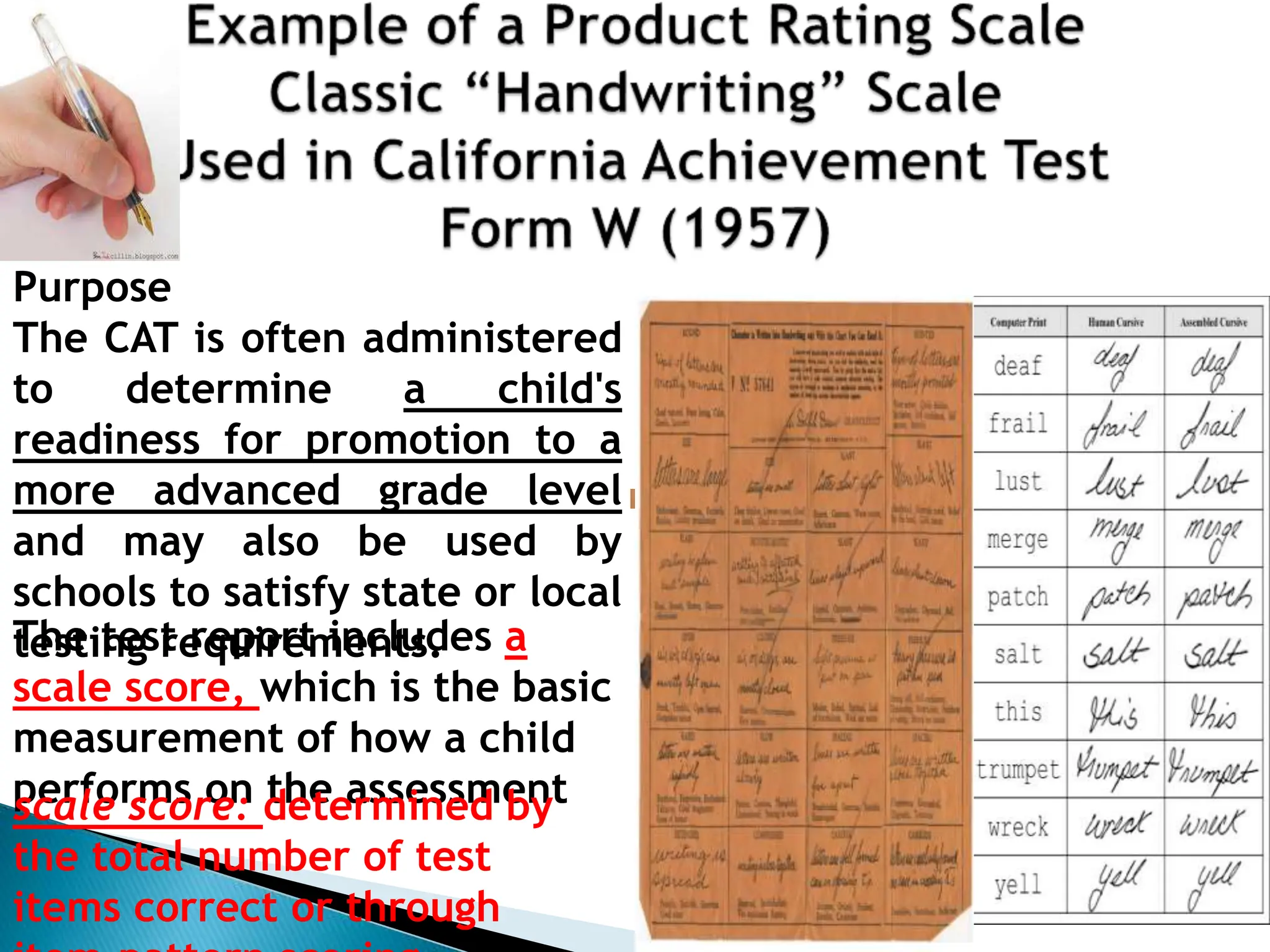

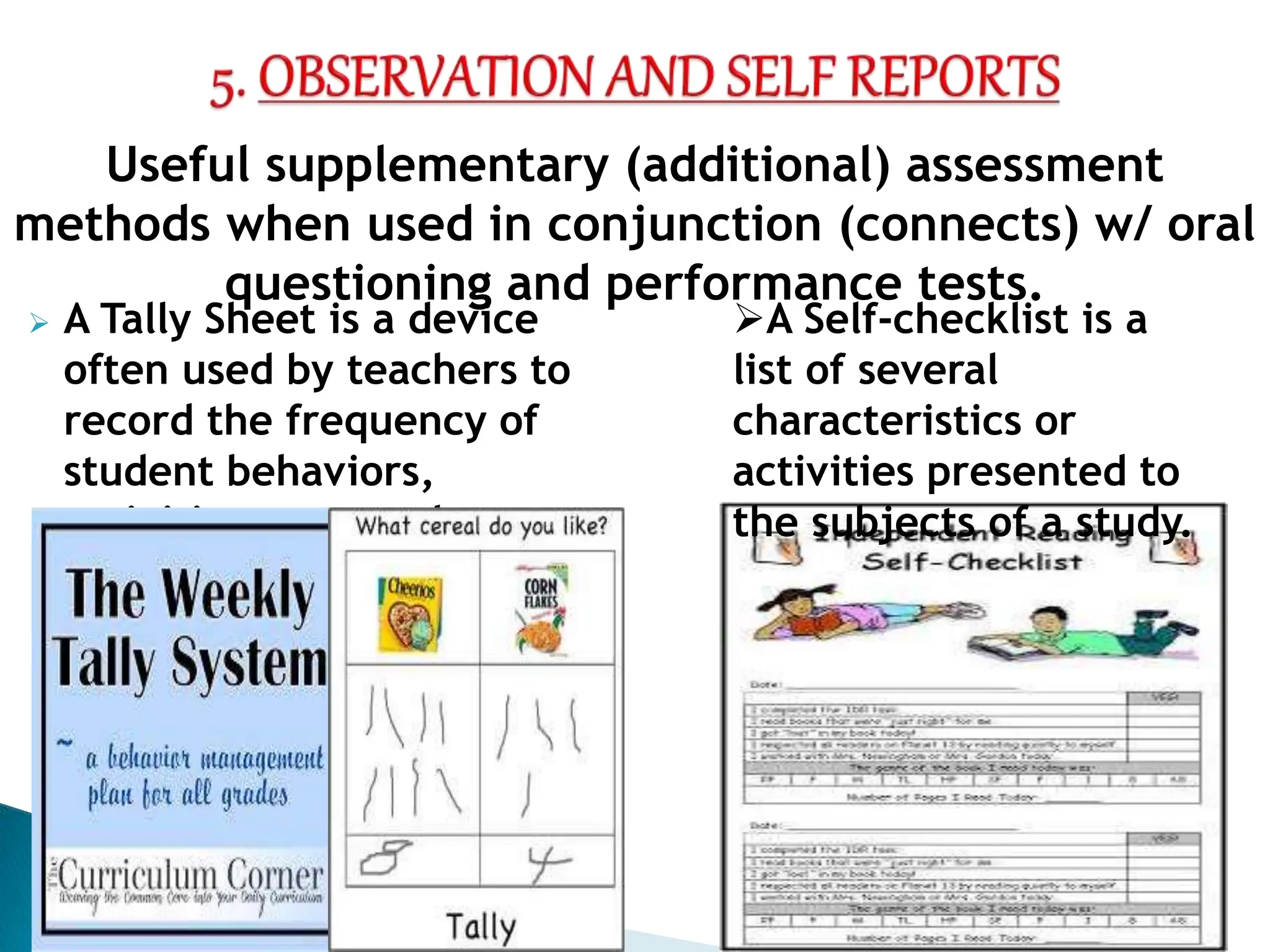

The document reviews the principles of high-quality assessment, emphasizing clear learning targets, appropriate assessment methods, and key properties of assessment such as validity, reliability, and fairness. It details the cognitive targets and skills students are expected to achieve, using Bloom’s taxonomy to illustrate the hierarchy of educational objectives from knowledge to evaluation. It also discusses the ethical implications of assessment practices and the need for assessments to be fair and transparent.