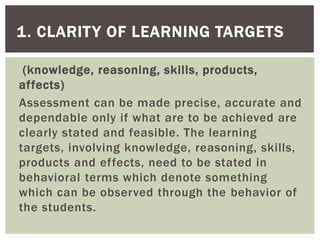

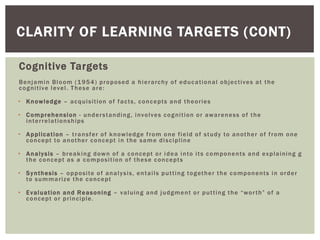

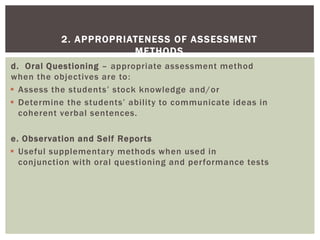

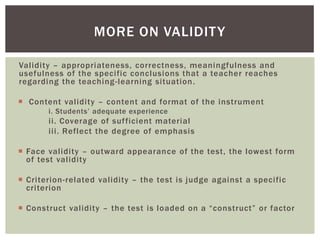

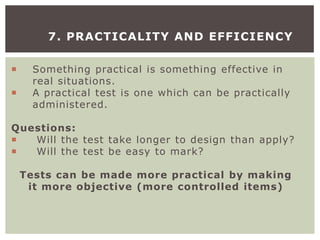

This document discusses the principles of high-quality assessment. It outlines the following principles: 1) clarity of learning targets, which should cover knowledge, reasoning, skills, products, and affects, 2) appropriateness of assessment methods, 3) validity, 4) reliability, 5) fairness, 6) positive consequences, 7) practicality and efficiency, and 8) ethics. It then elaborates on each principle with definitions and examples. The document emphasizes establishing clear learning goals and using assessment methods that are valid, reliable, fair, practical, and ethical.