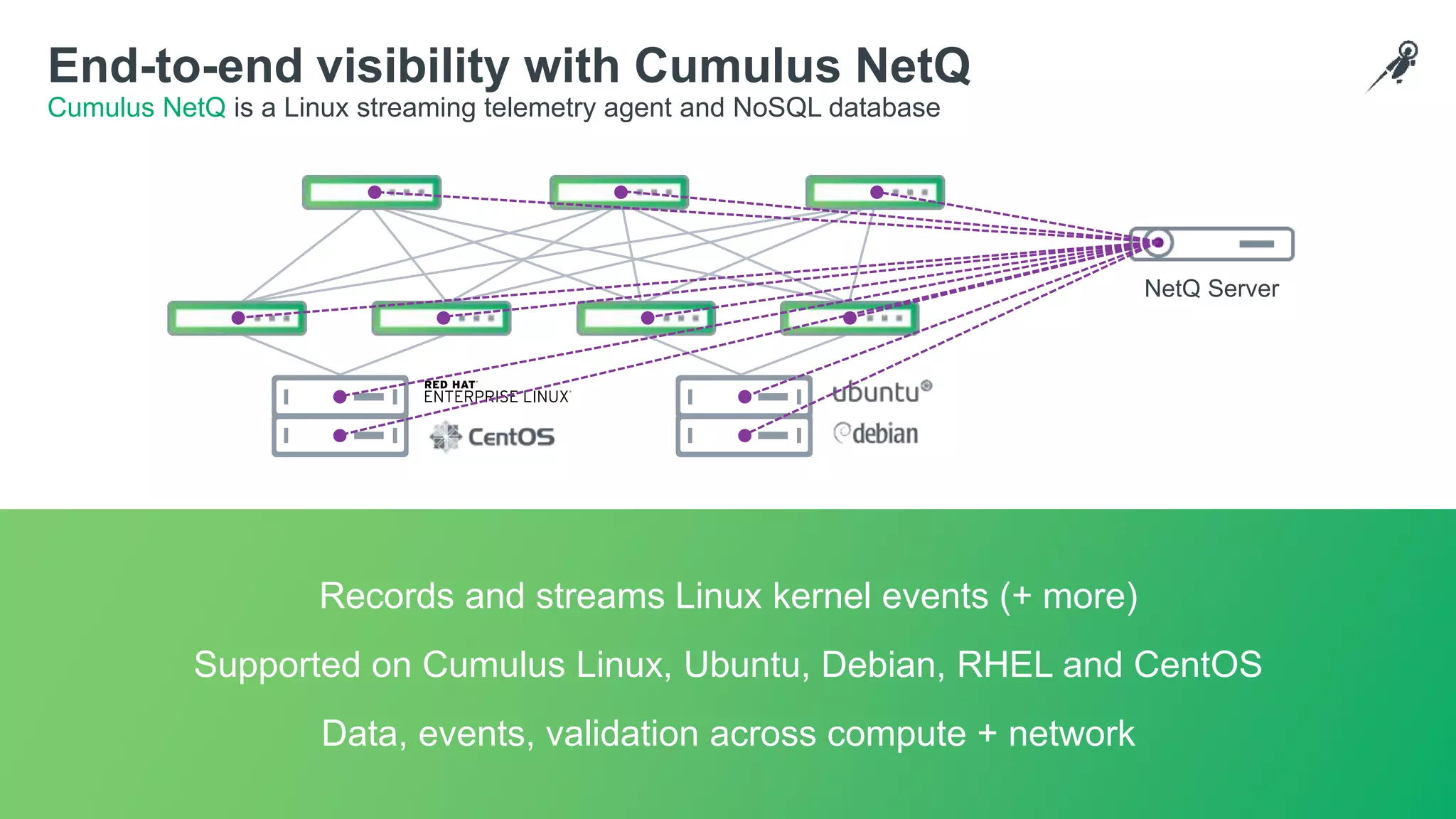

The document explains why continuous integration (CI) and continuous deployment (CD) matter in network management: they reduce human error and shorten slow change cycles. It details automated testing strategies and several infrastructure tools, notably Cumulus Linux and Cumulus NetQ, that improve network simulation and visibility. It also outlines a CI/CD pipeline that uses platforms such as GitLab to implement infrastructure as code.