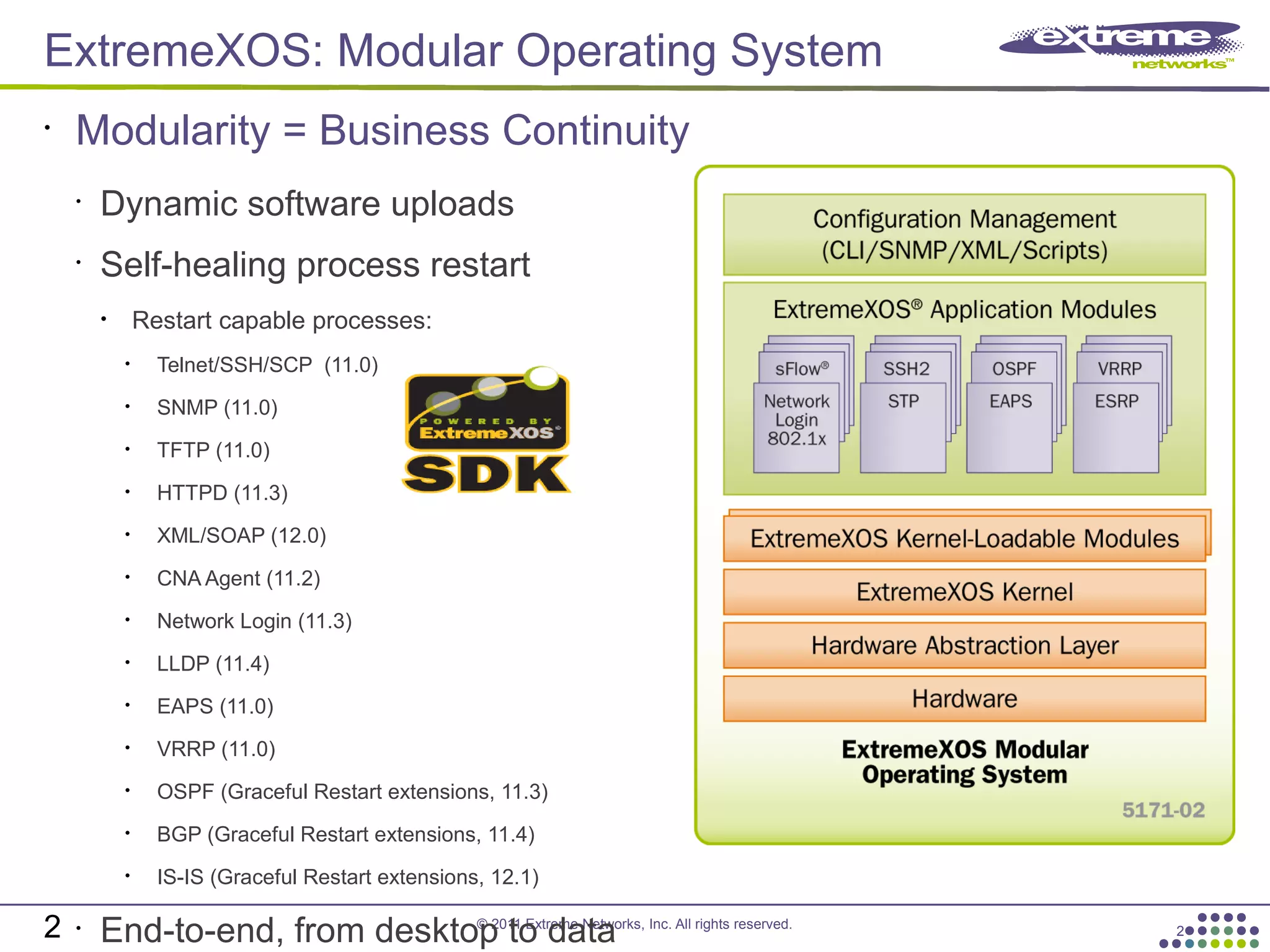

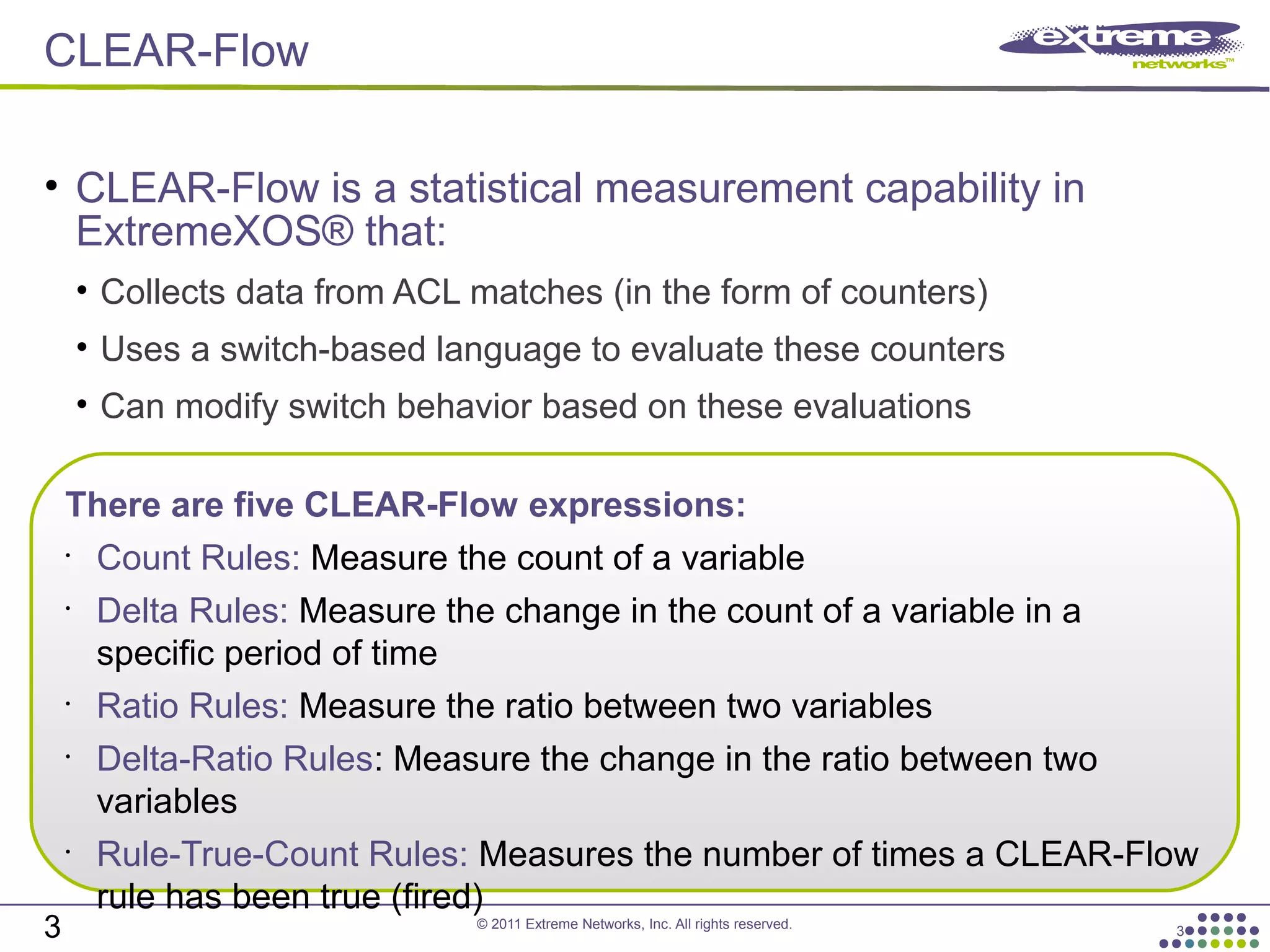

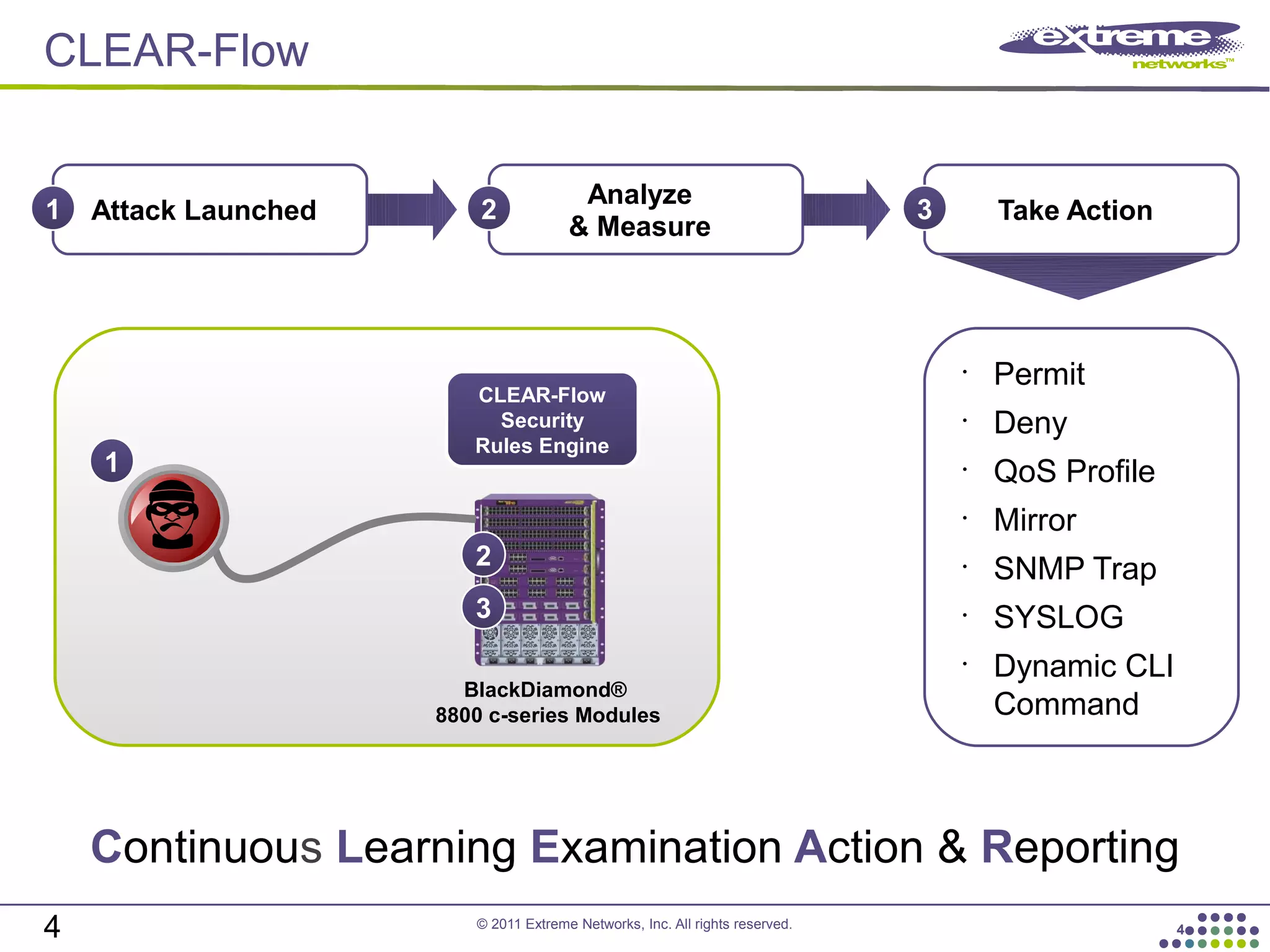

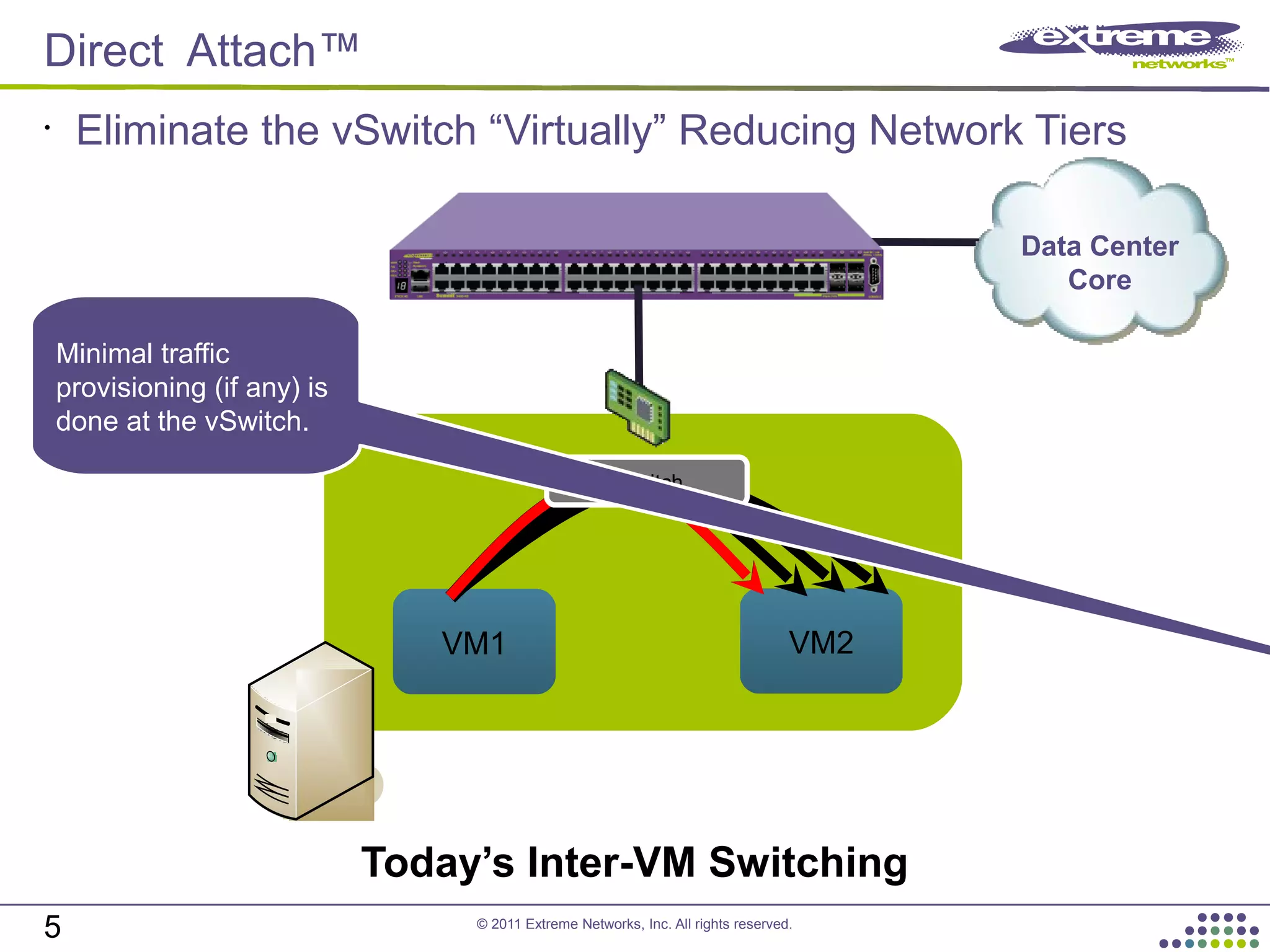

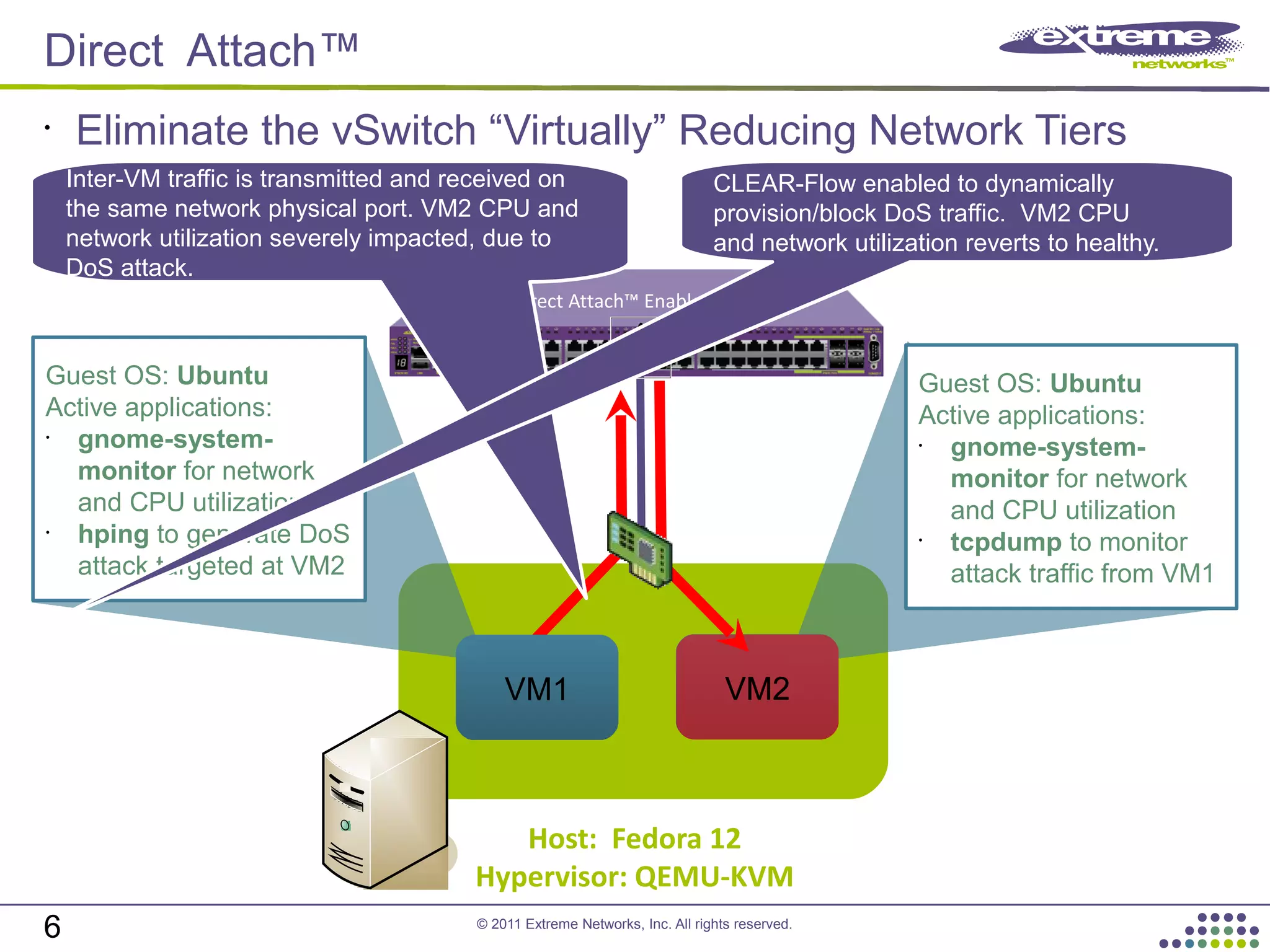

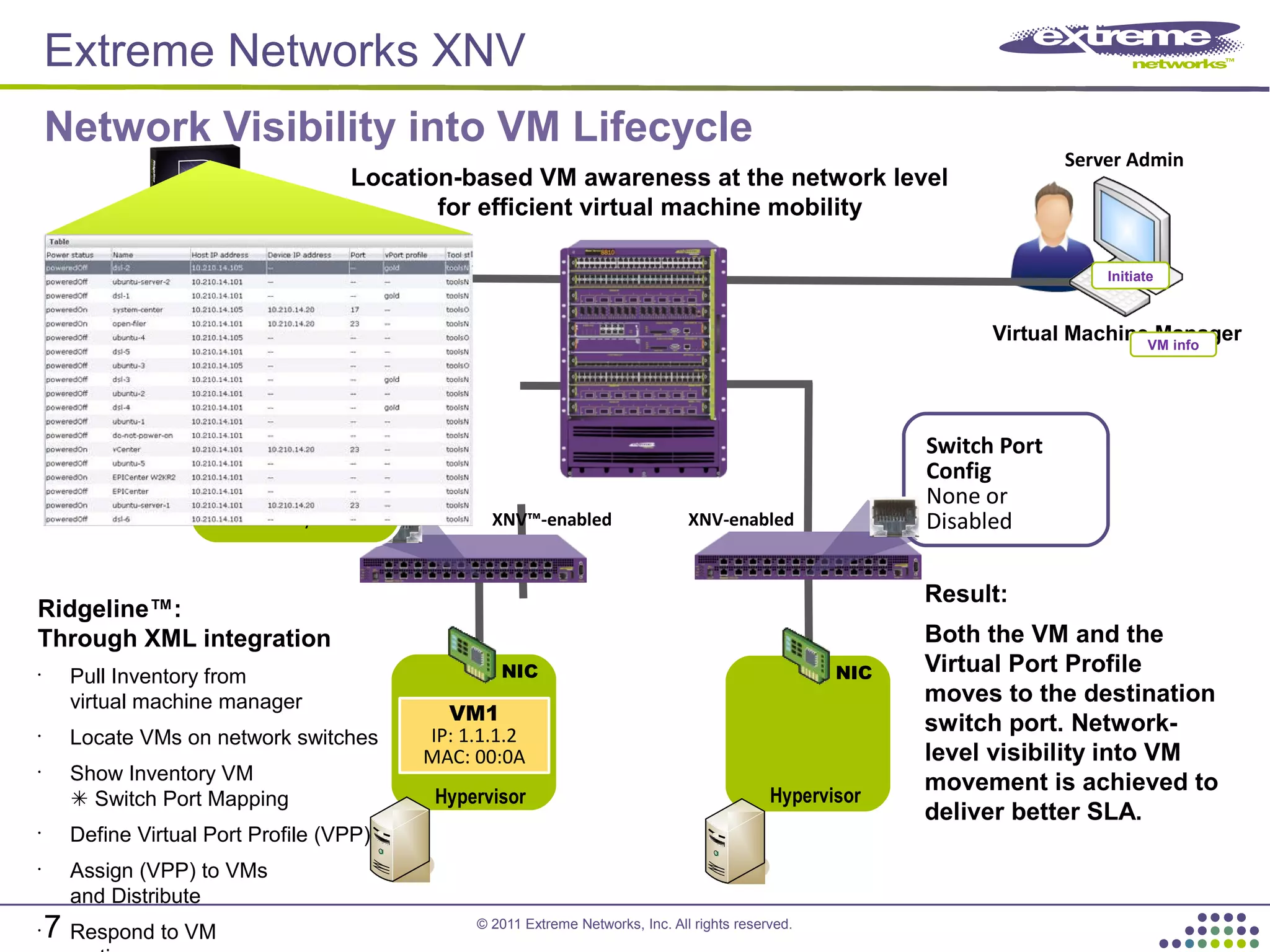

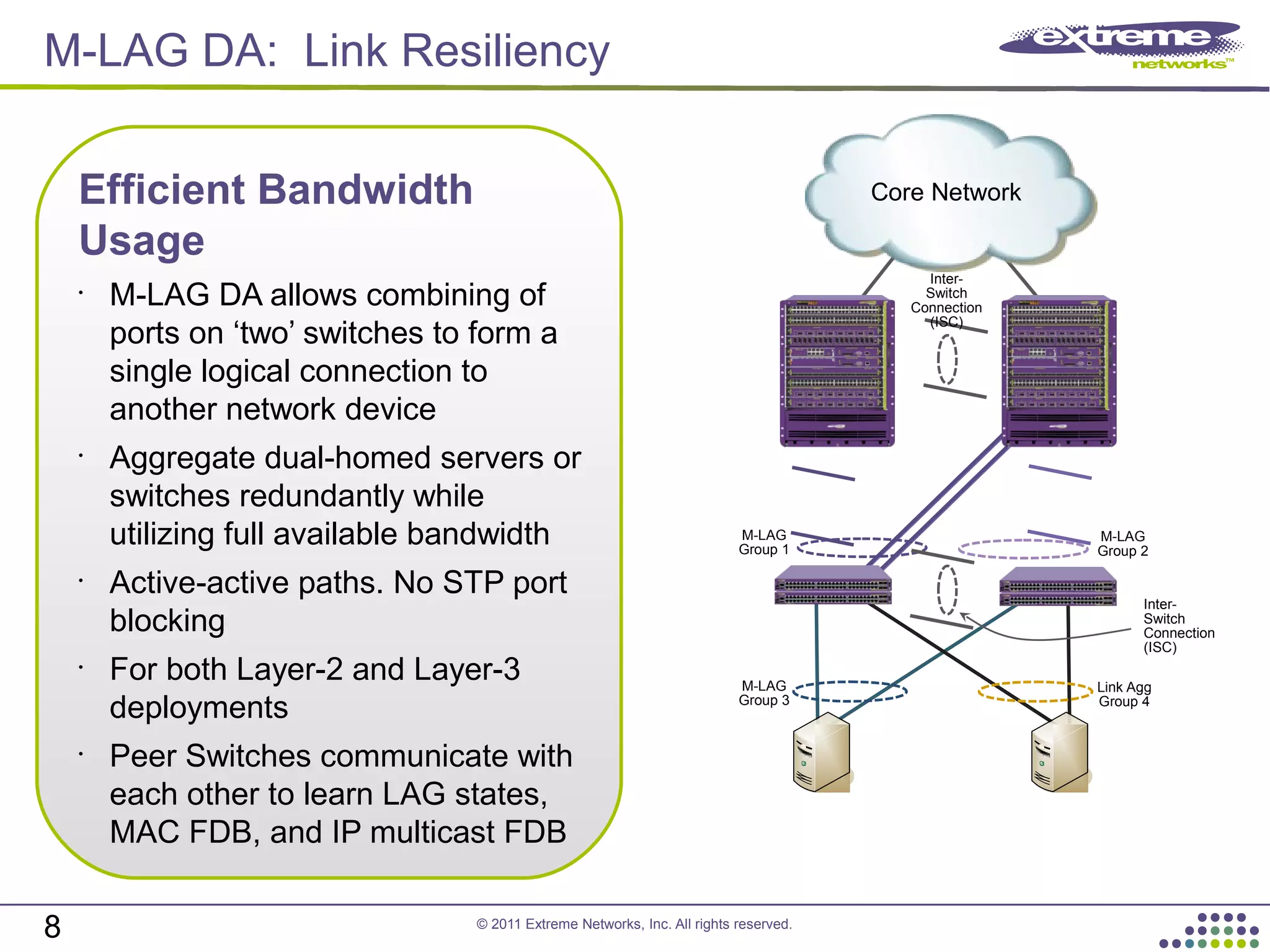

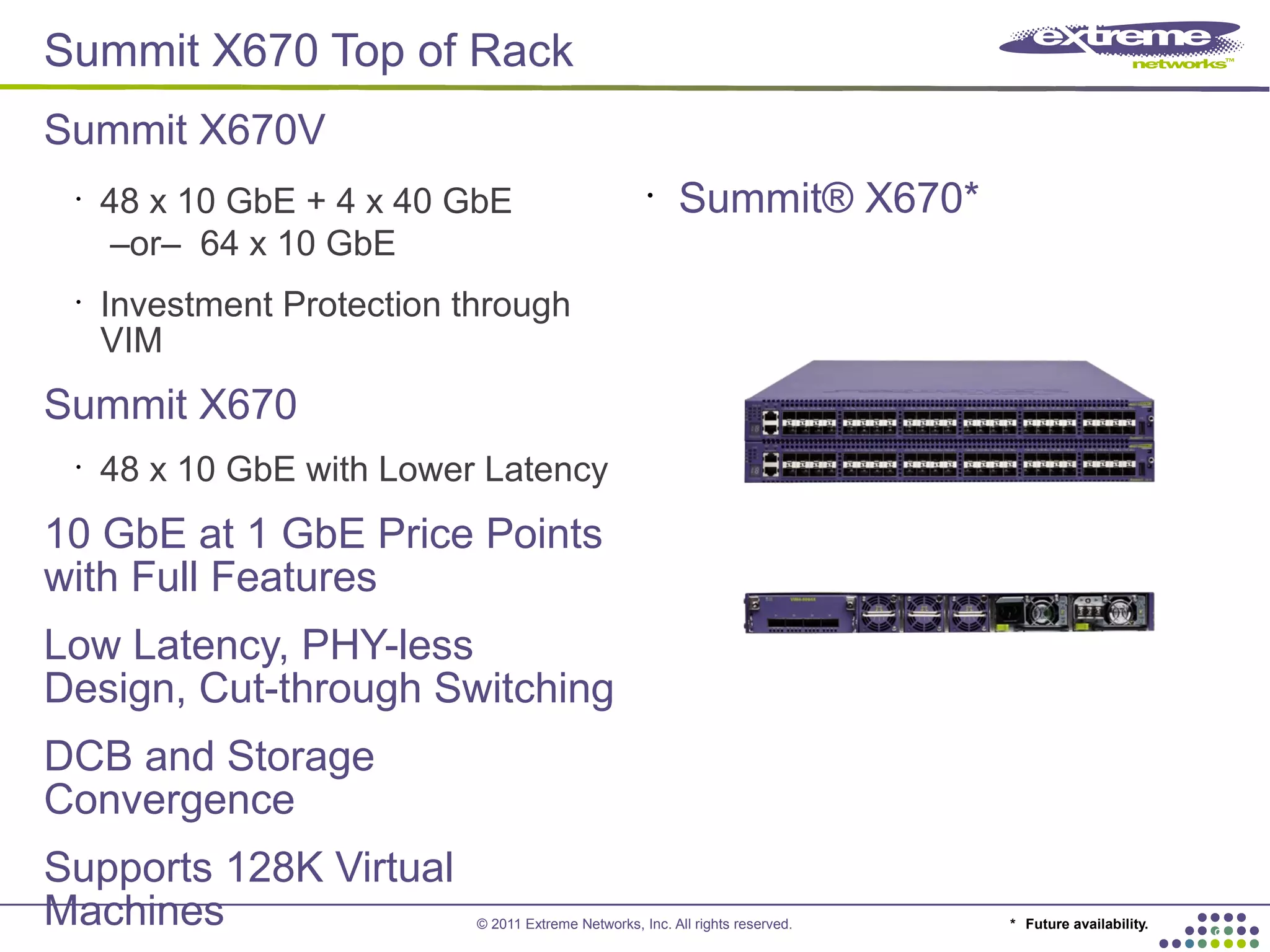

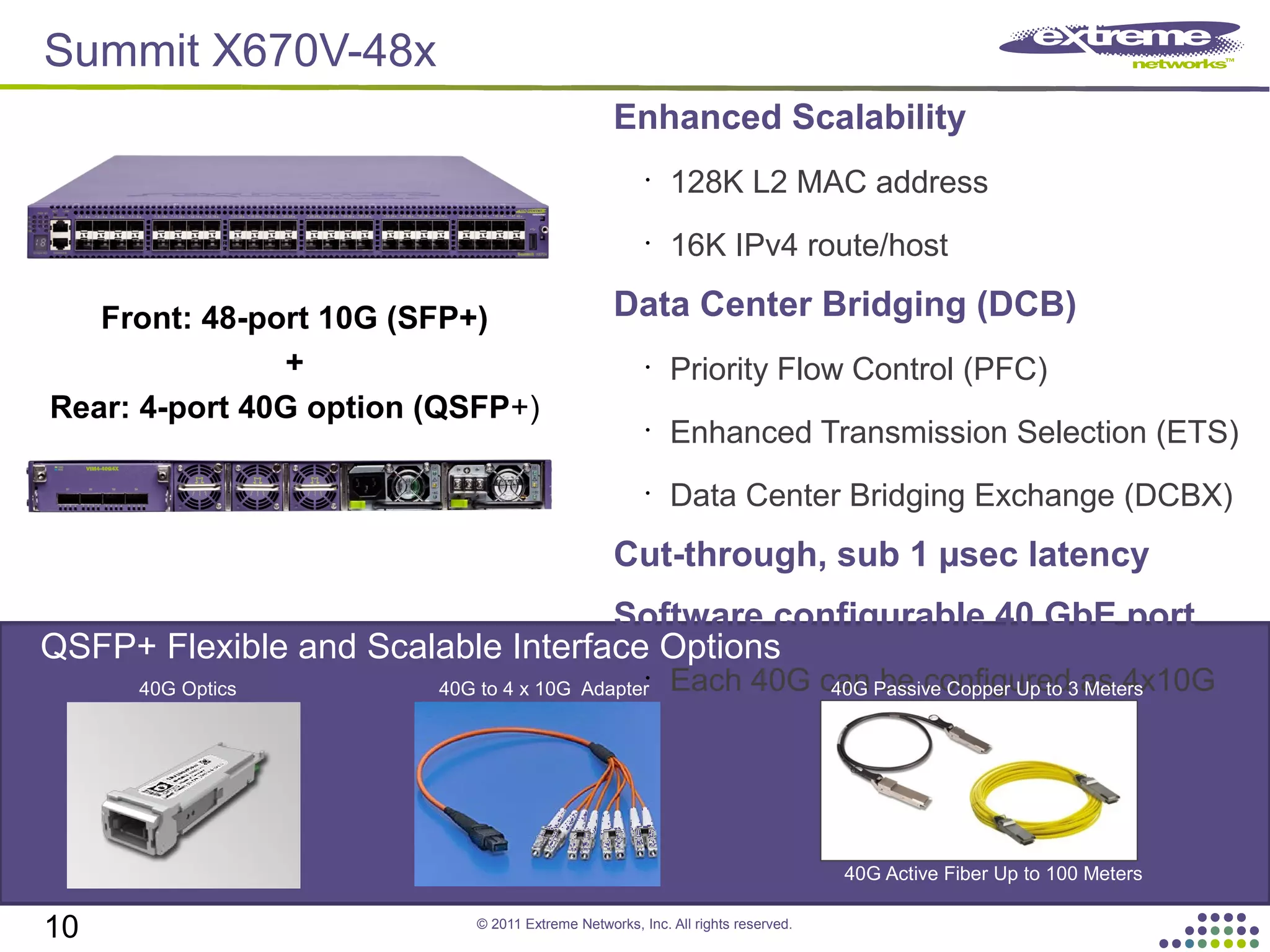

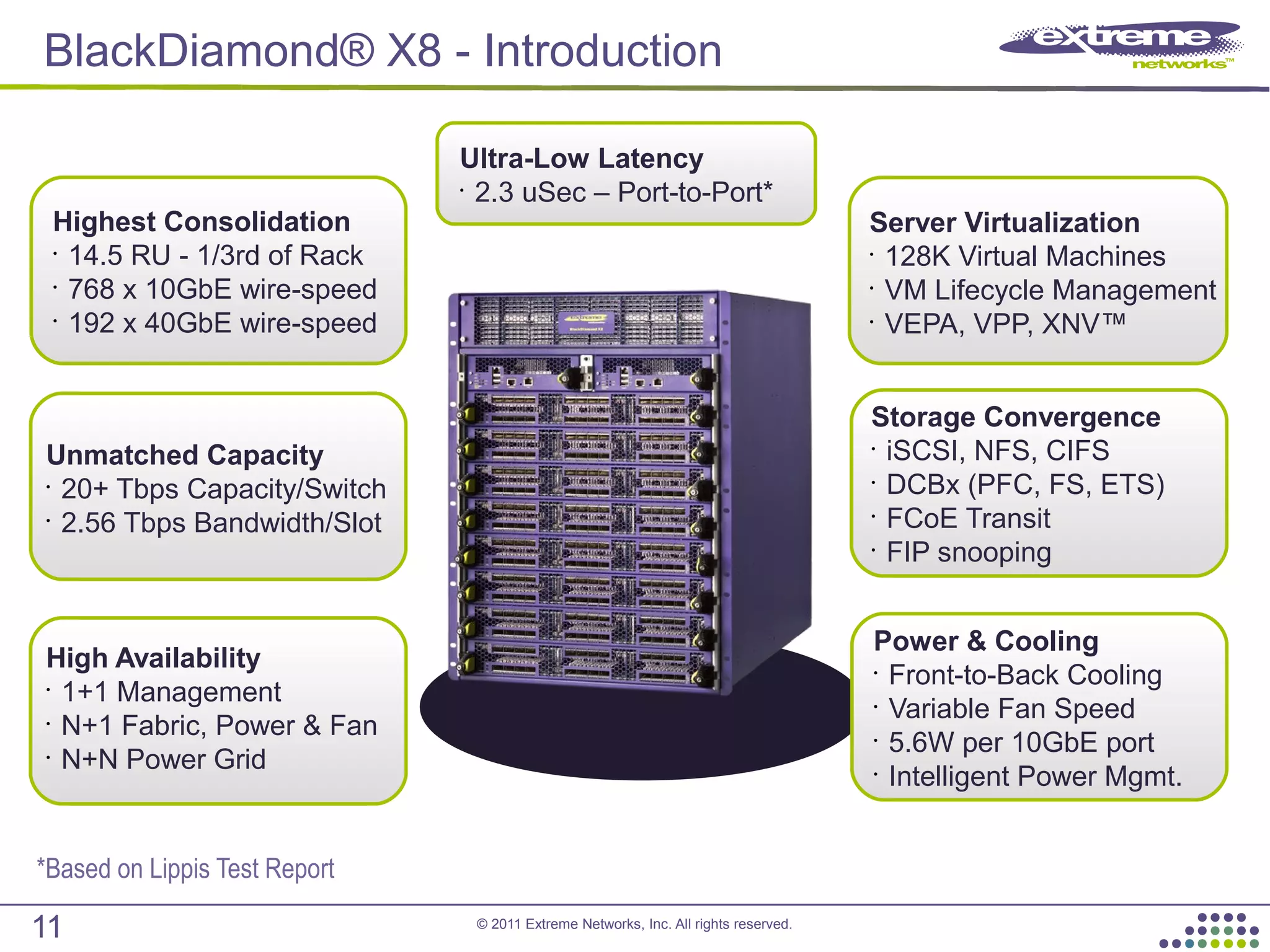

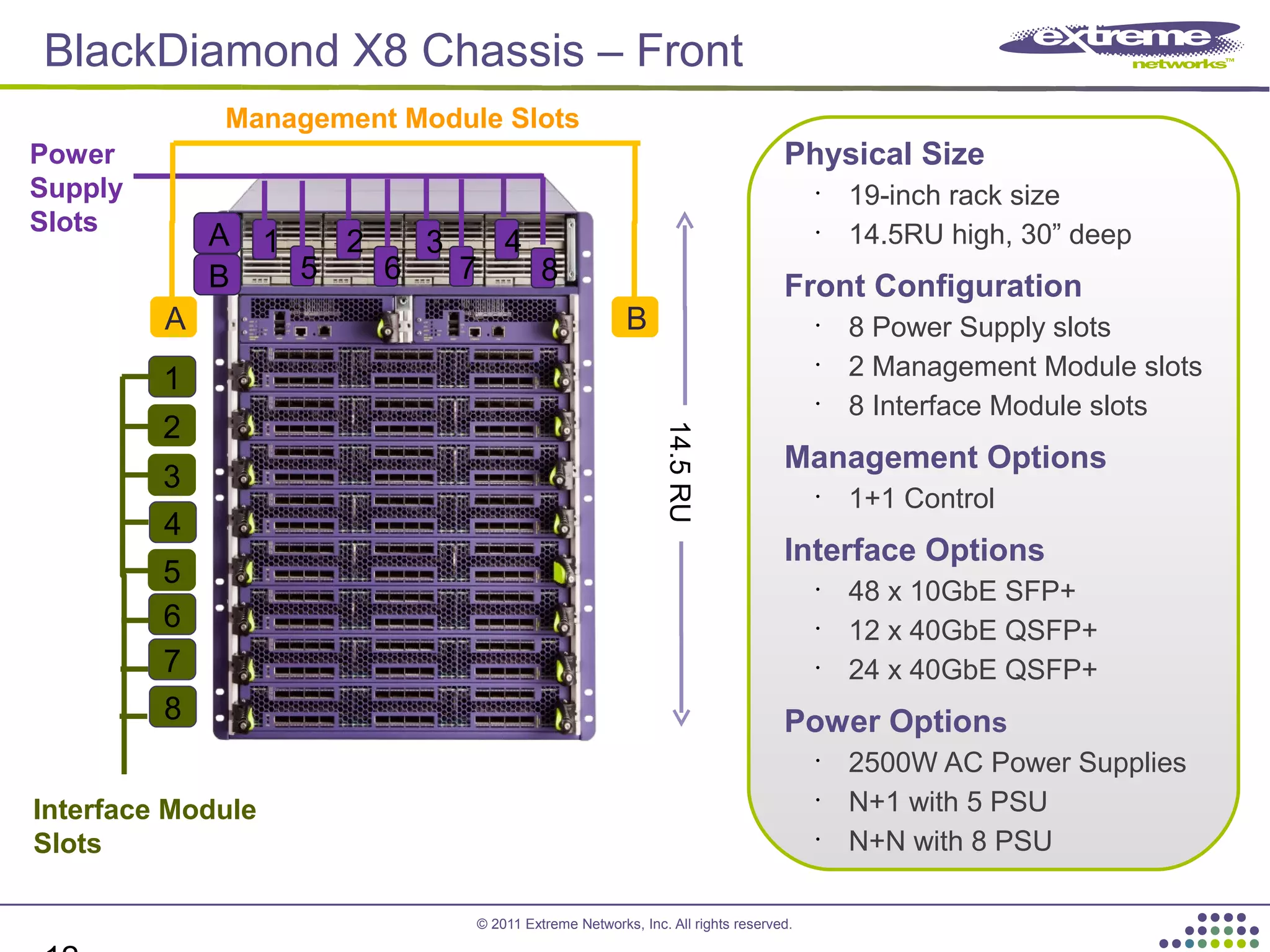

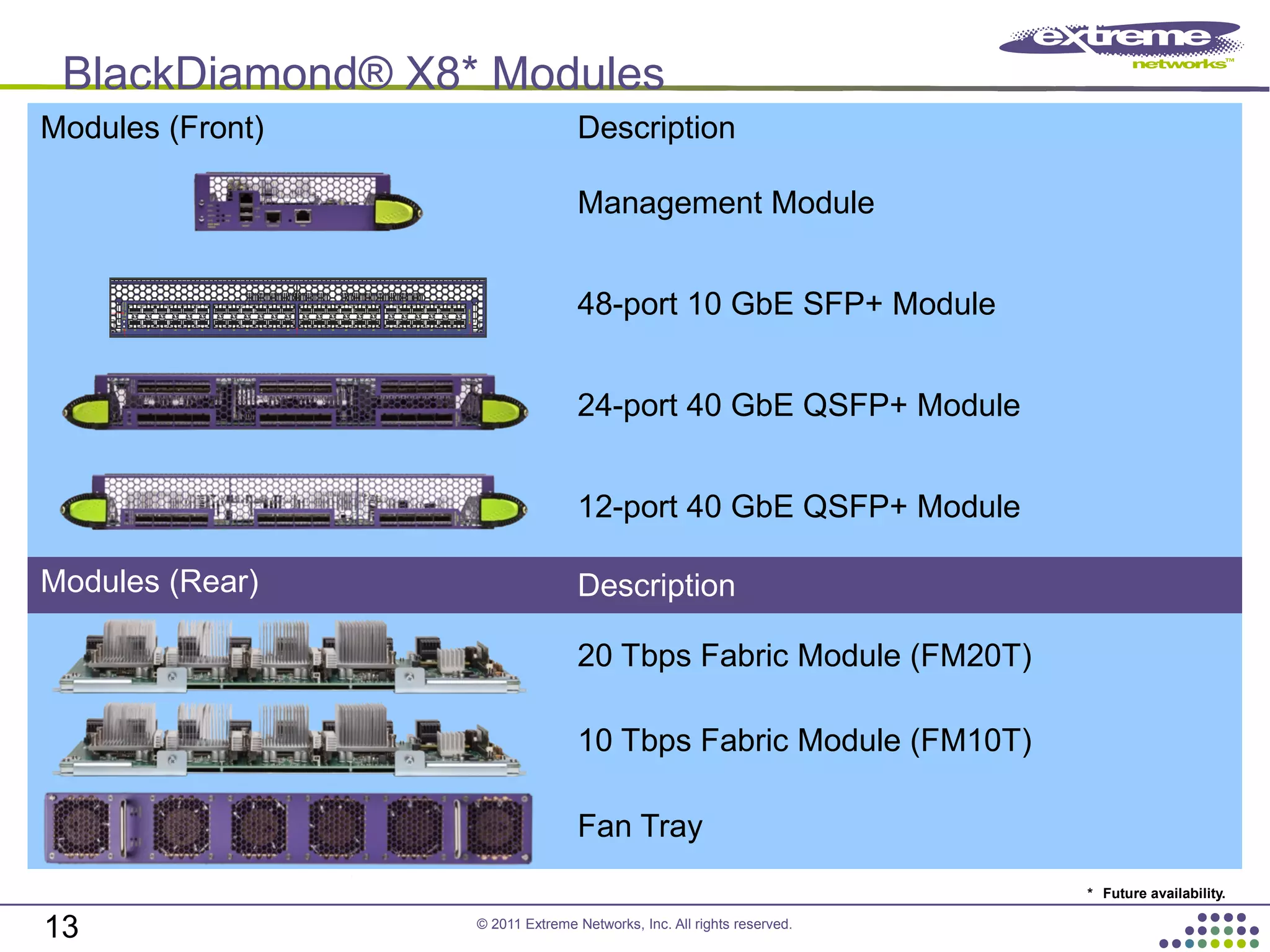

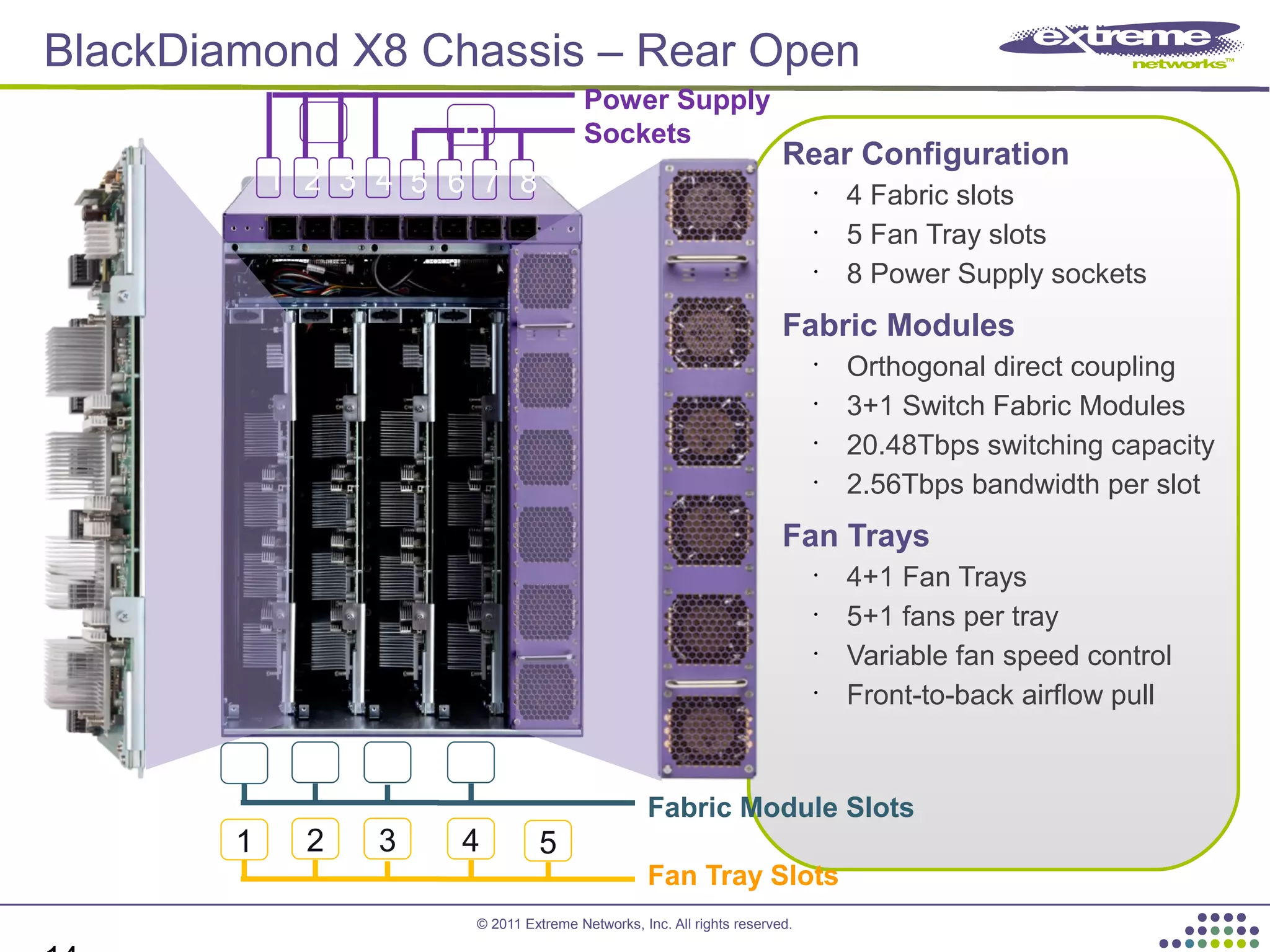

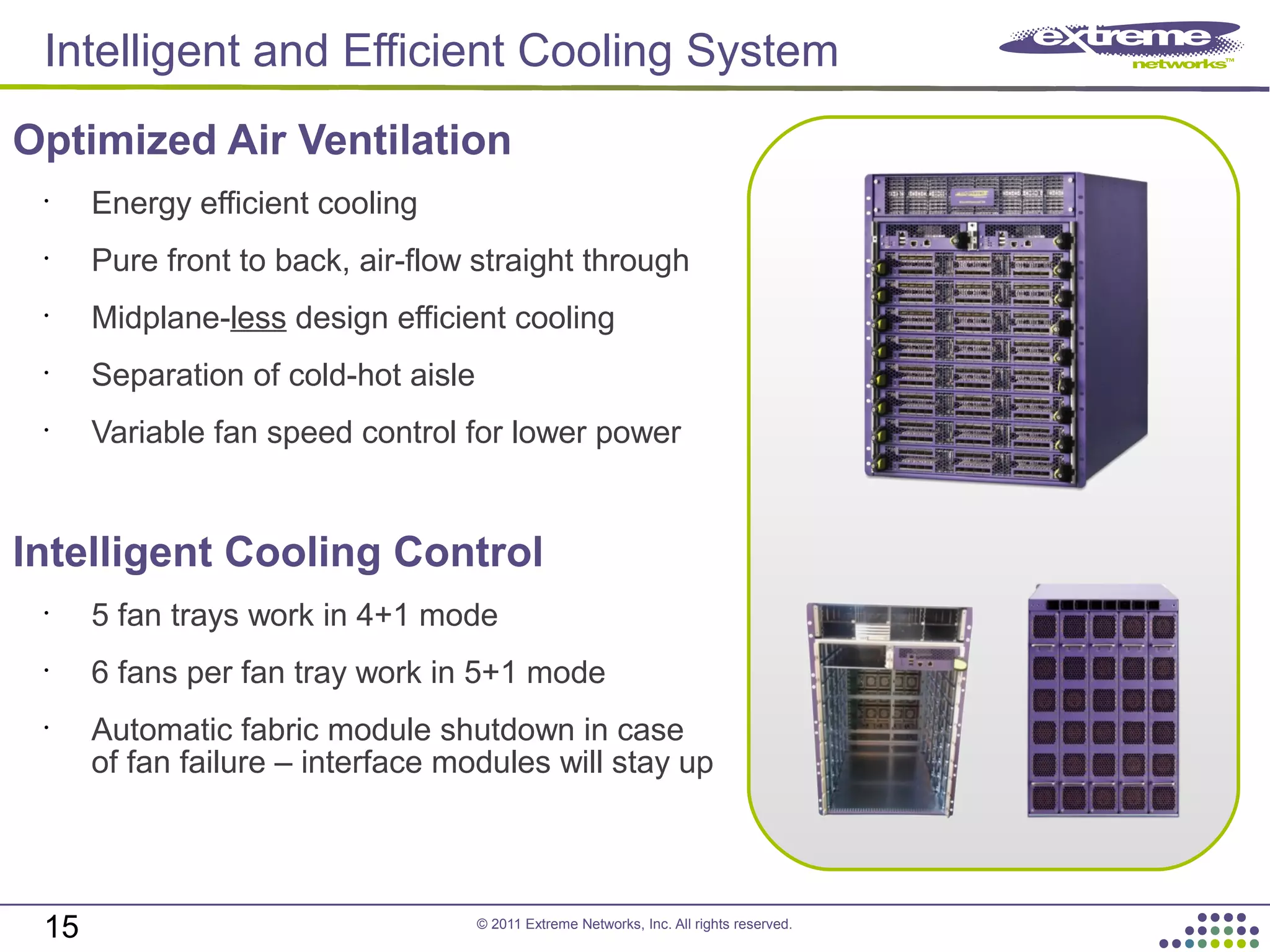

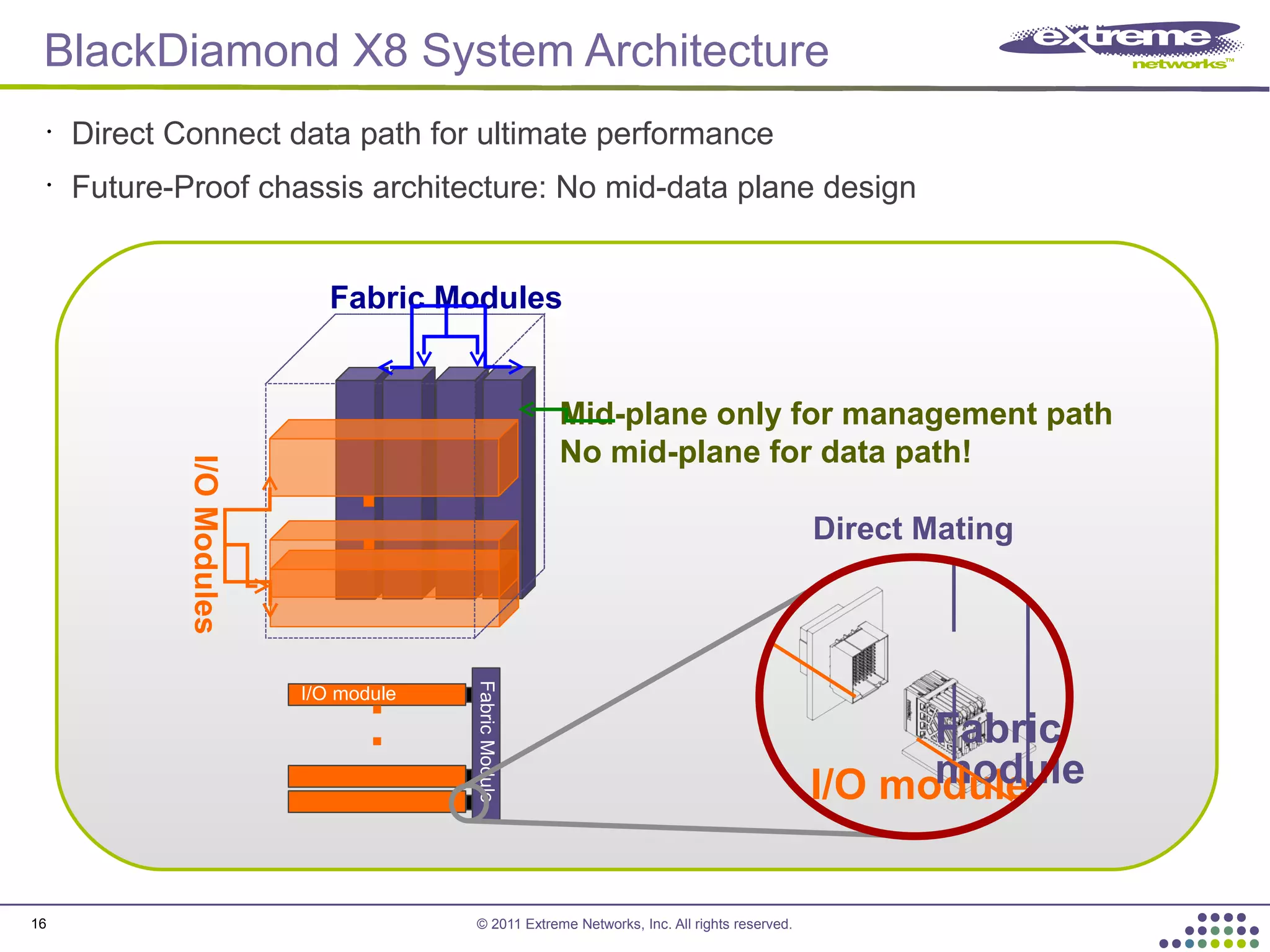

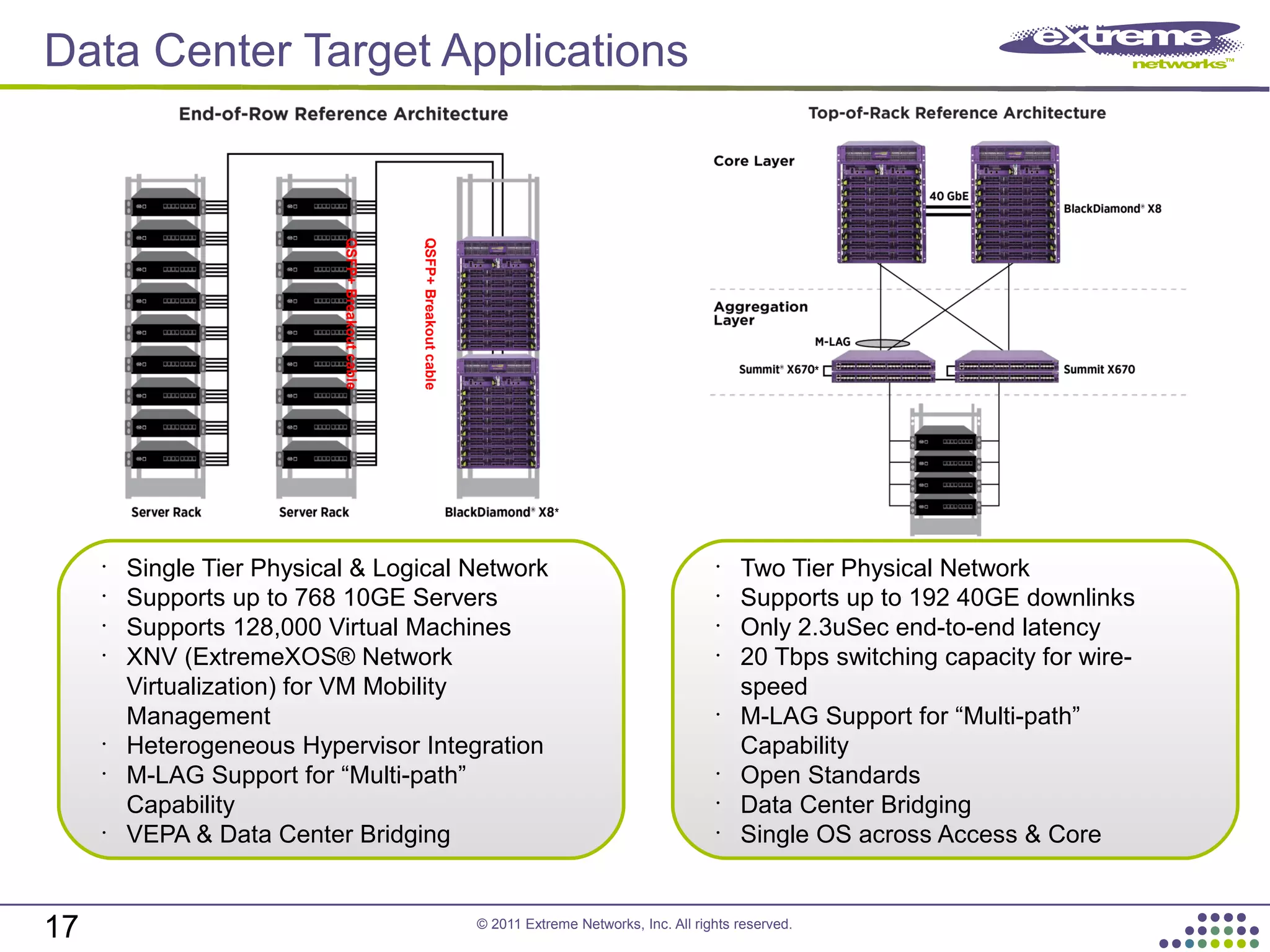

This document discusses Extreme Networks' networking solutions for data centers. It describes ExtremeXOS, a modular operating system that supports dynamic software uploads and self-healing processes. It also covers several other features and technologies:

- CLEAR-Flow, for statistical traffic measurement and security rules.
- Direct Attach, which eliminates the need for software virtual switches.
- Virtual machine management capabilities.
- M-LAG (Multi-switch Link Aggregation Group), for link resiliency.

Finally, it gives an overview of product lines such as the Summit X670 top-of-rack switch and the BlackDiamond X8 core switch, highlighting their performance, scalability, and virtualization support.