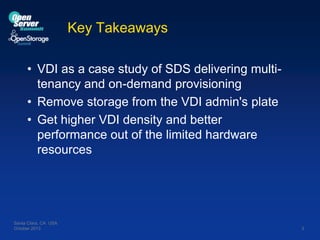

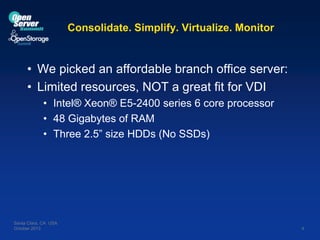

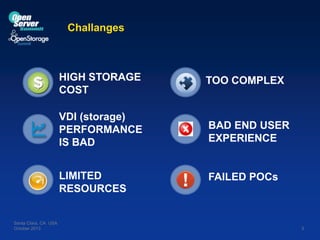

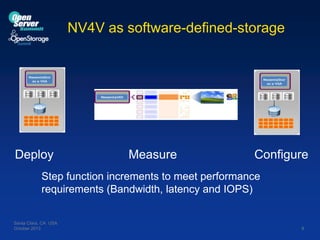

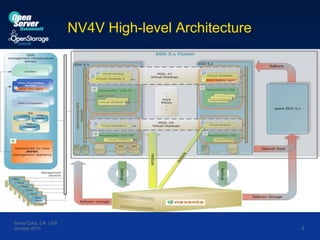

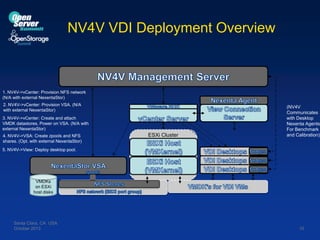

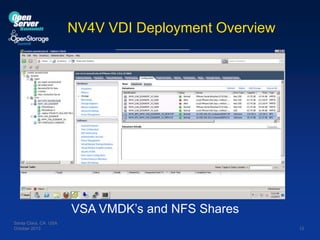

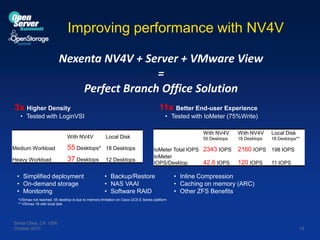

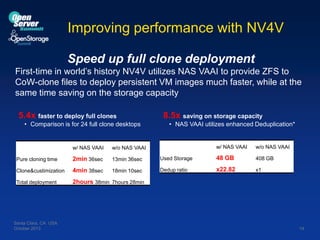

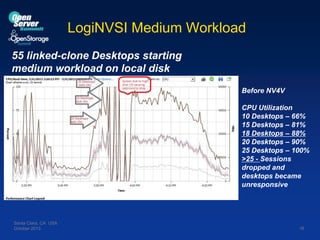

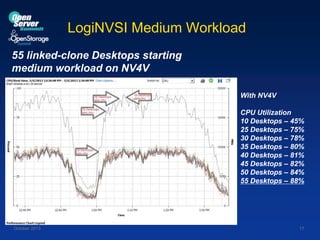

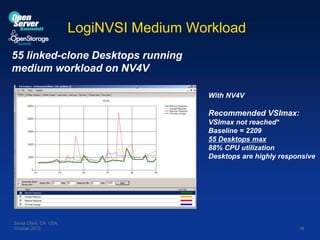

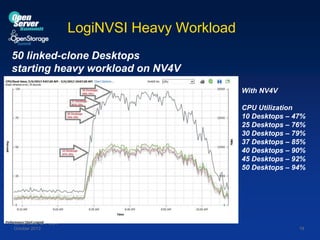

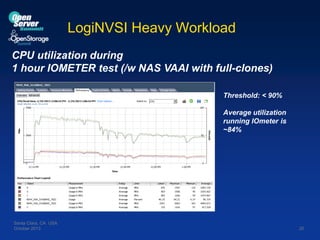

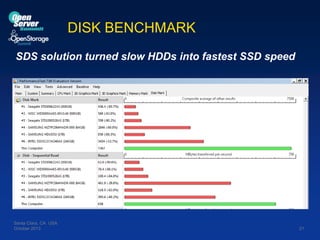

The document discusses implementing software-defined storage (SDS) with Nexenta's NV4V in a virtual desktop infrastructure (VDI) environment. It highlights advantages such as improved performance, reduced storage complexity, and better resource utilization. Key takeaways include optimizations that speed up deployment and allow more virtual desktops to run on limited hardware. Real-world testing and deployment results show significant improvements in user experience and operational efficiency.