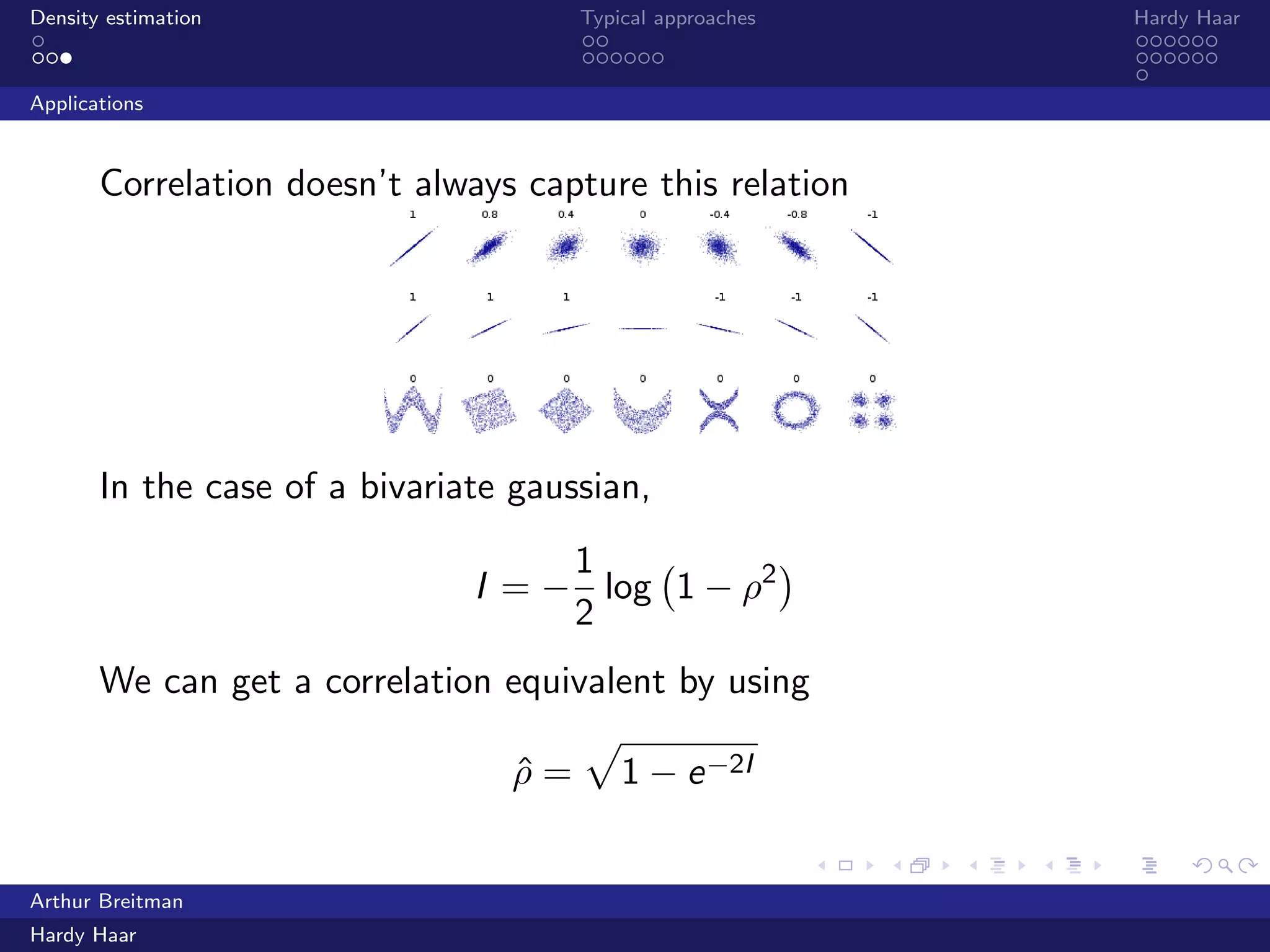

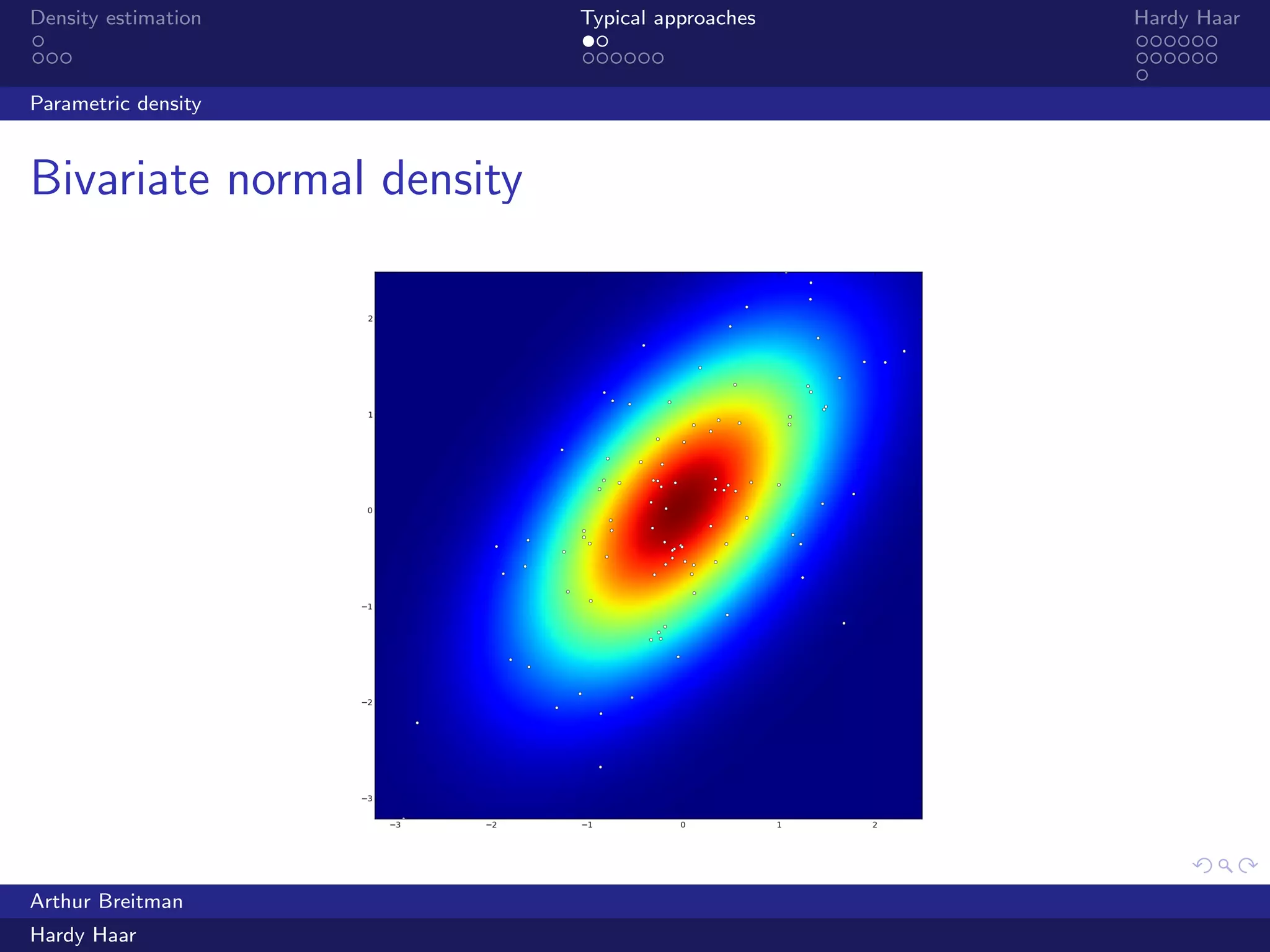

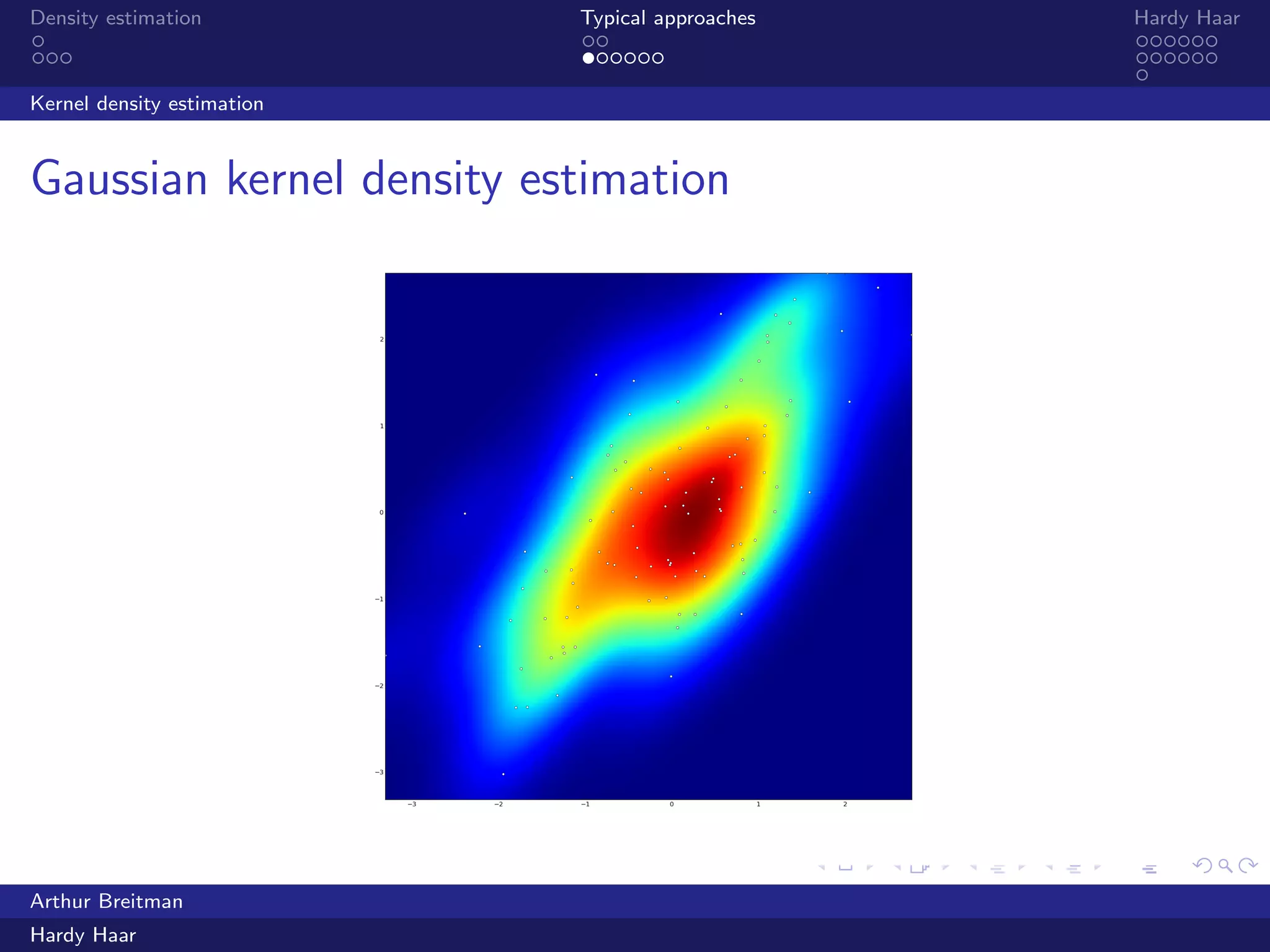

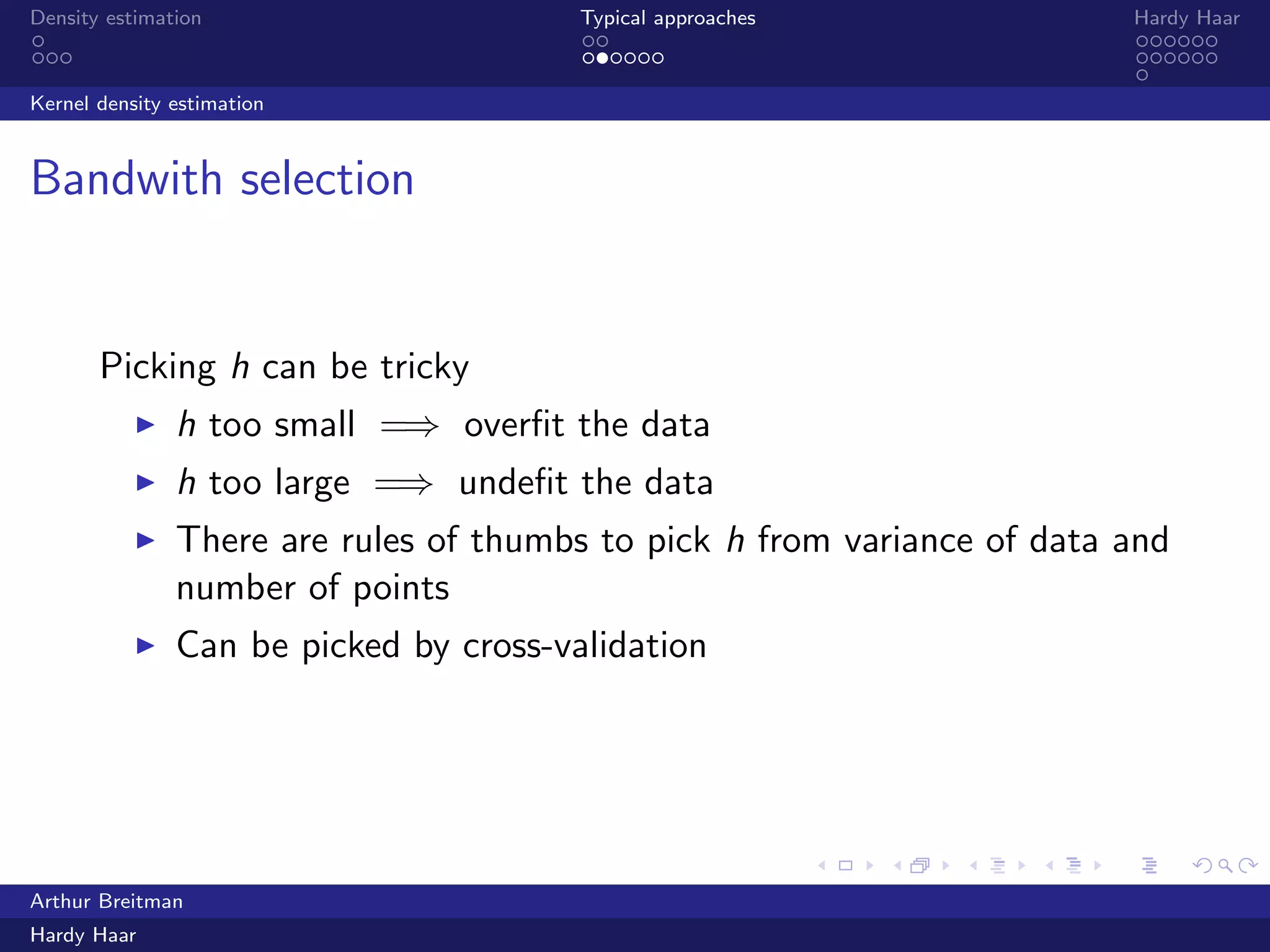

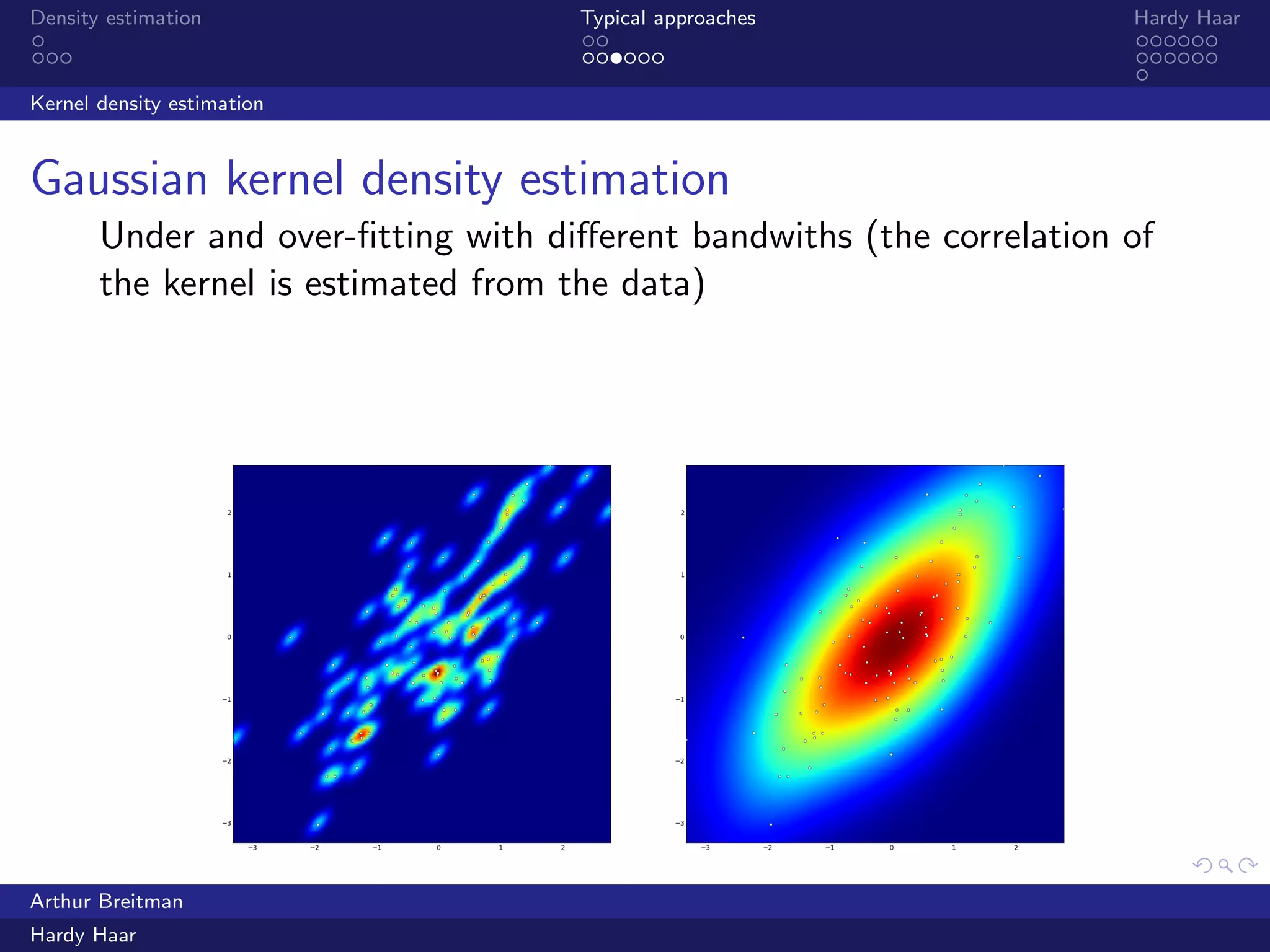

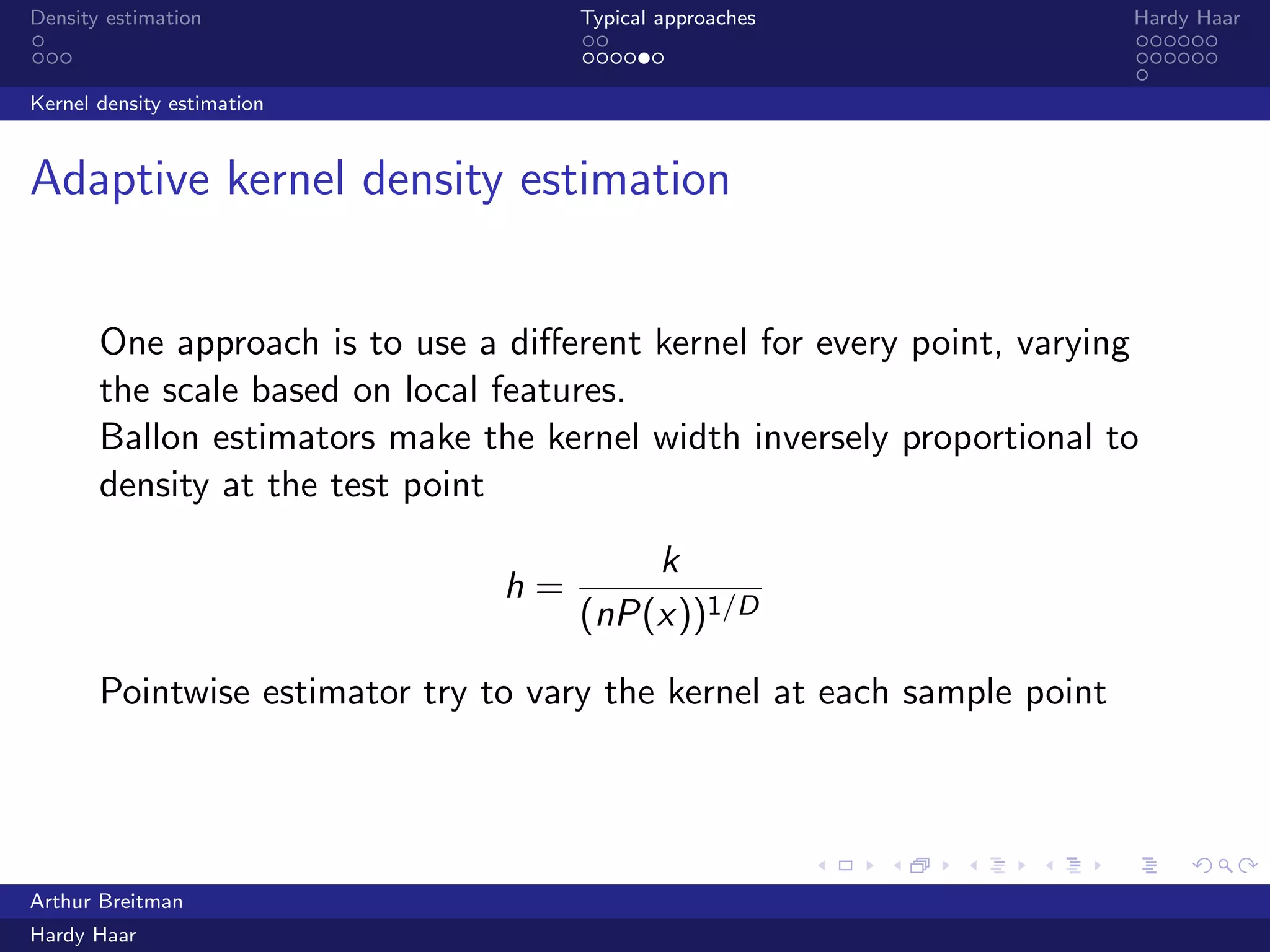

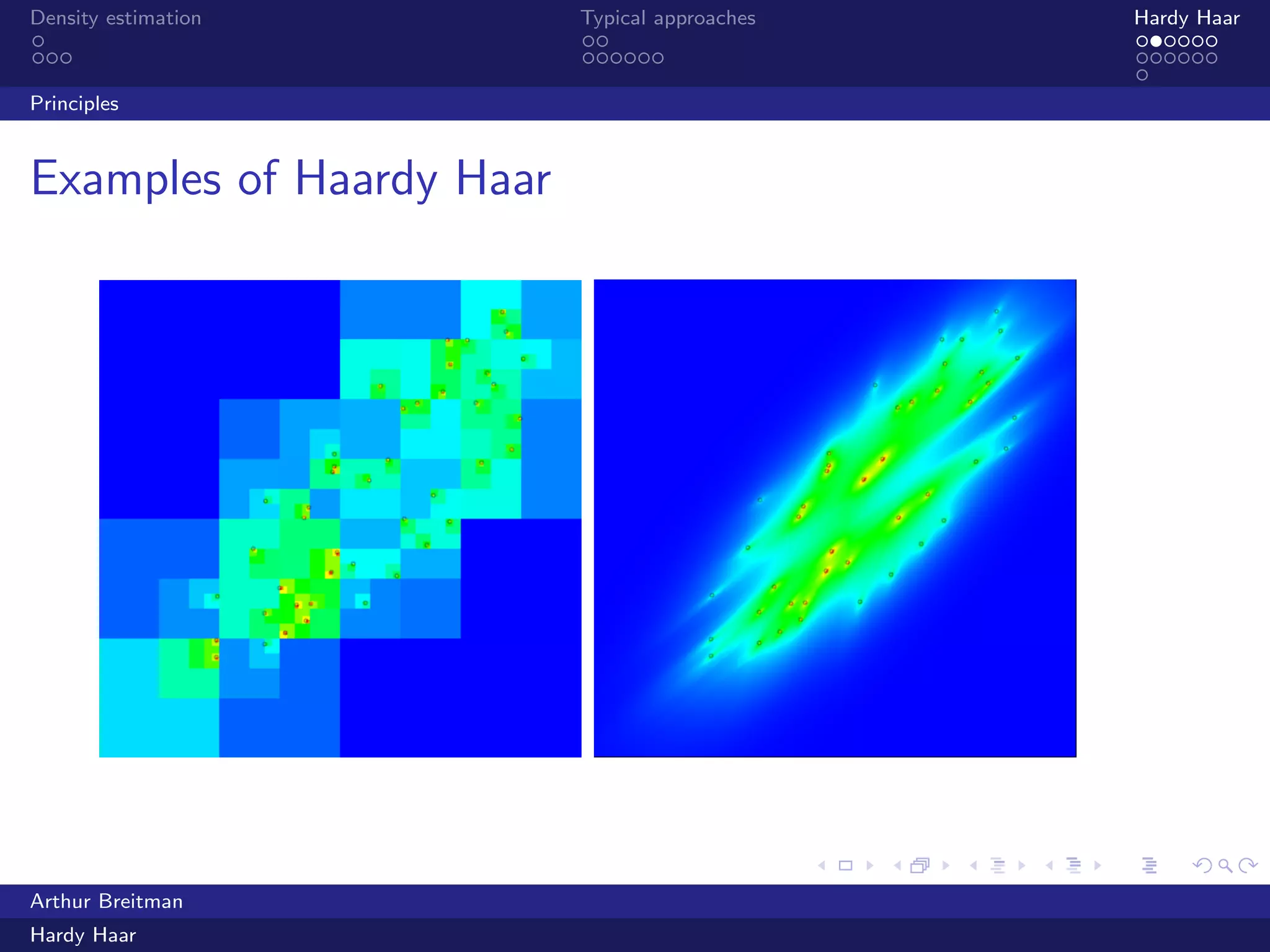

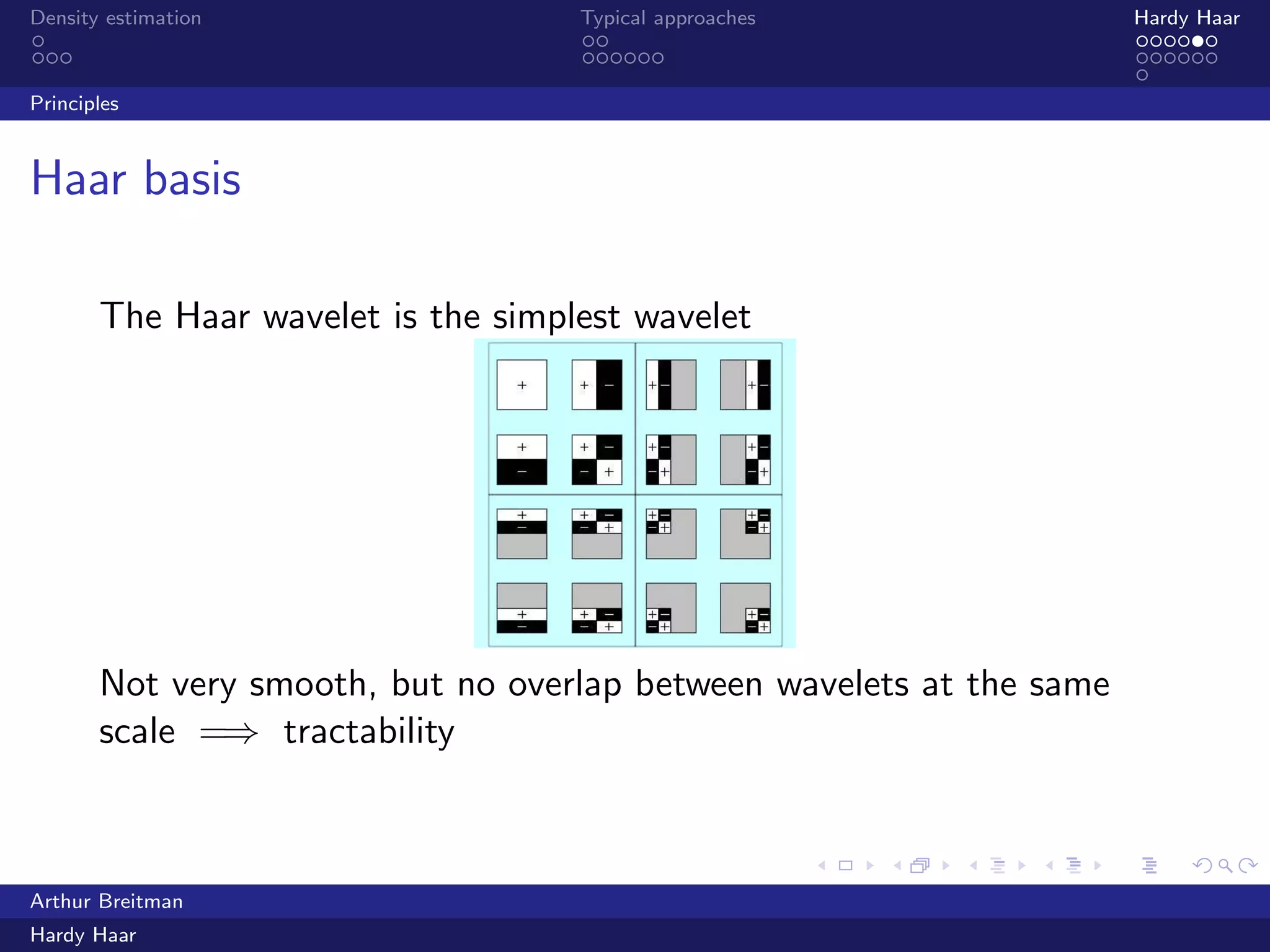

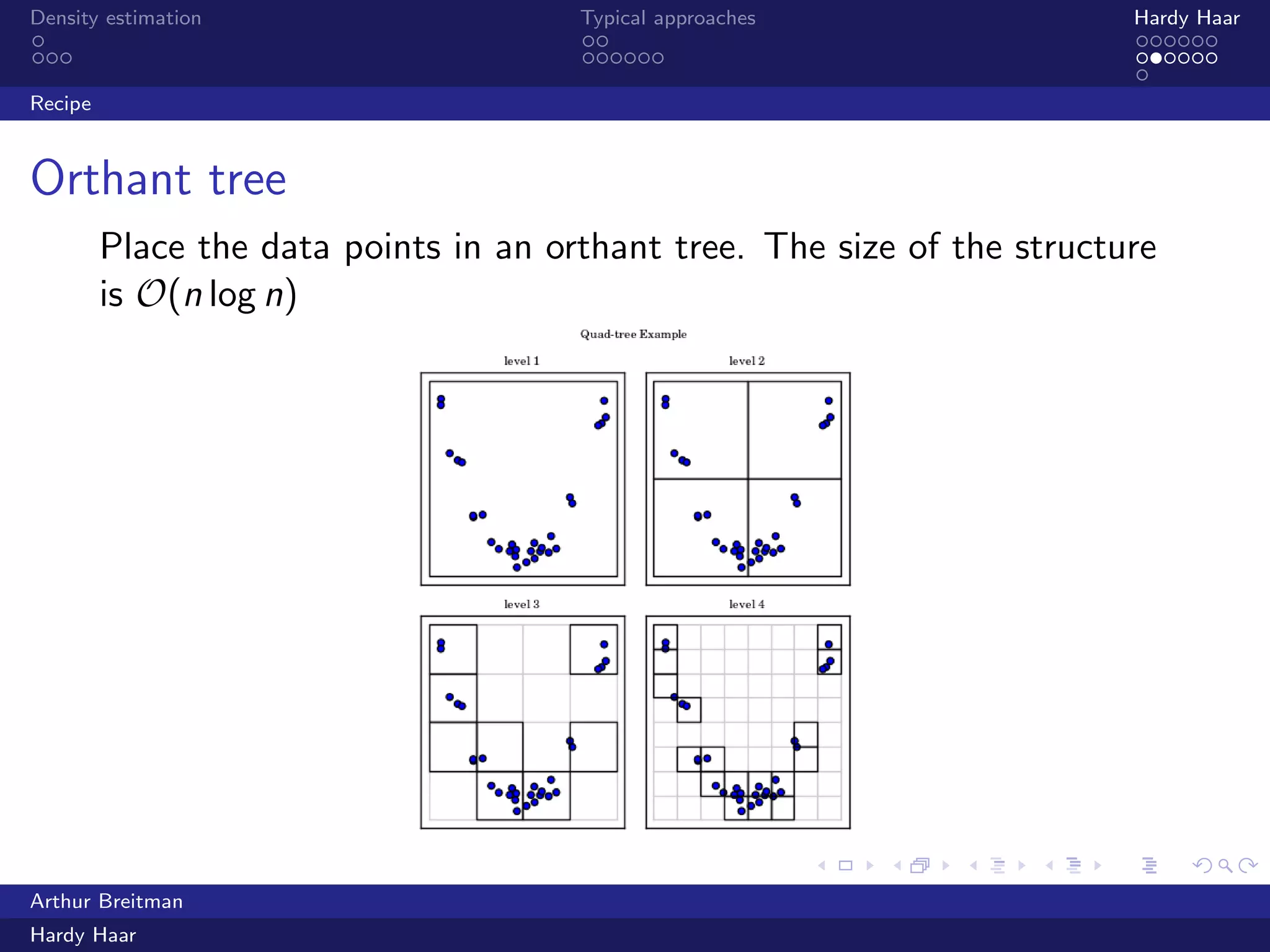

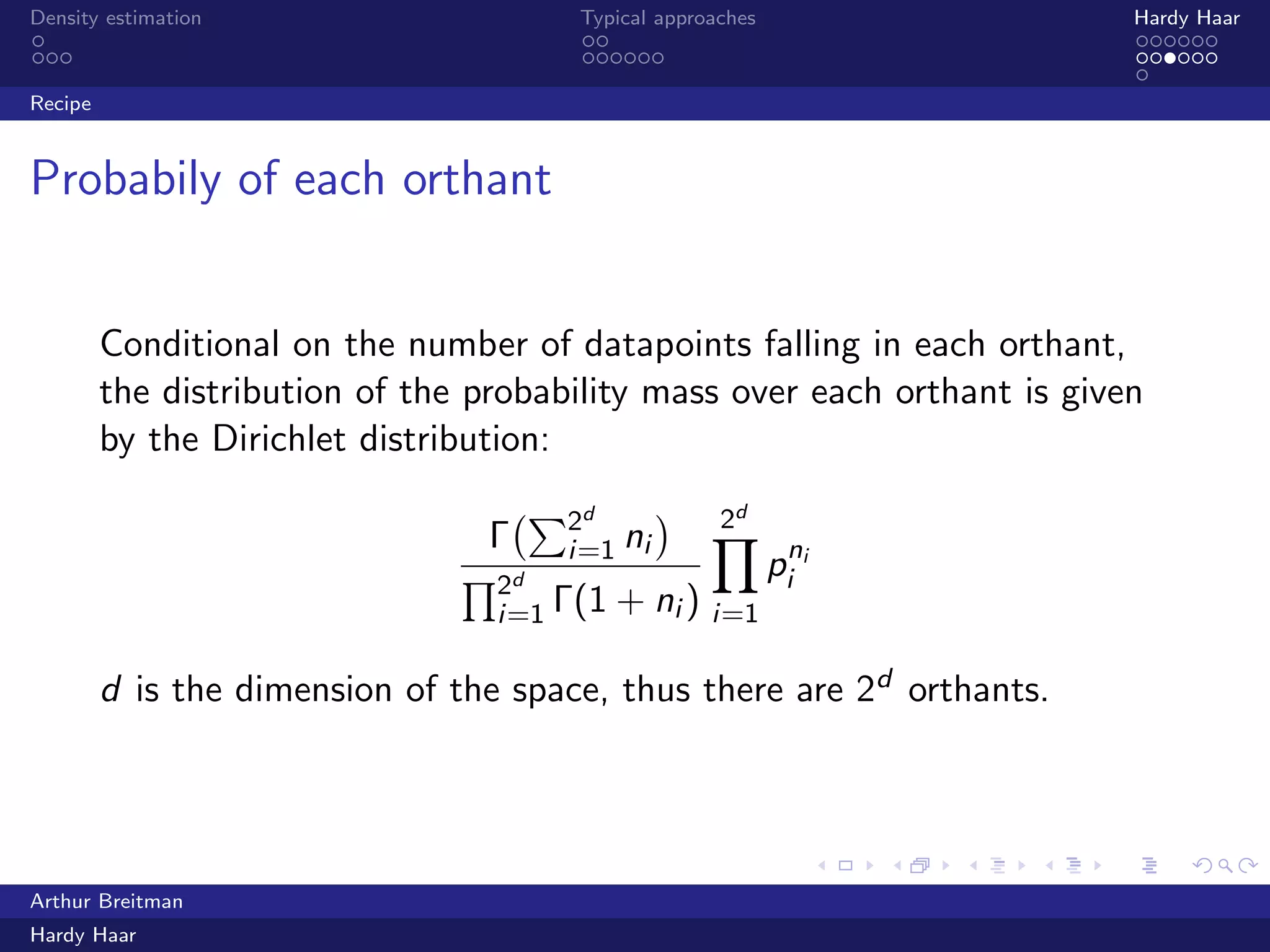

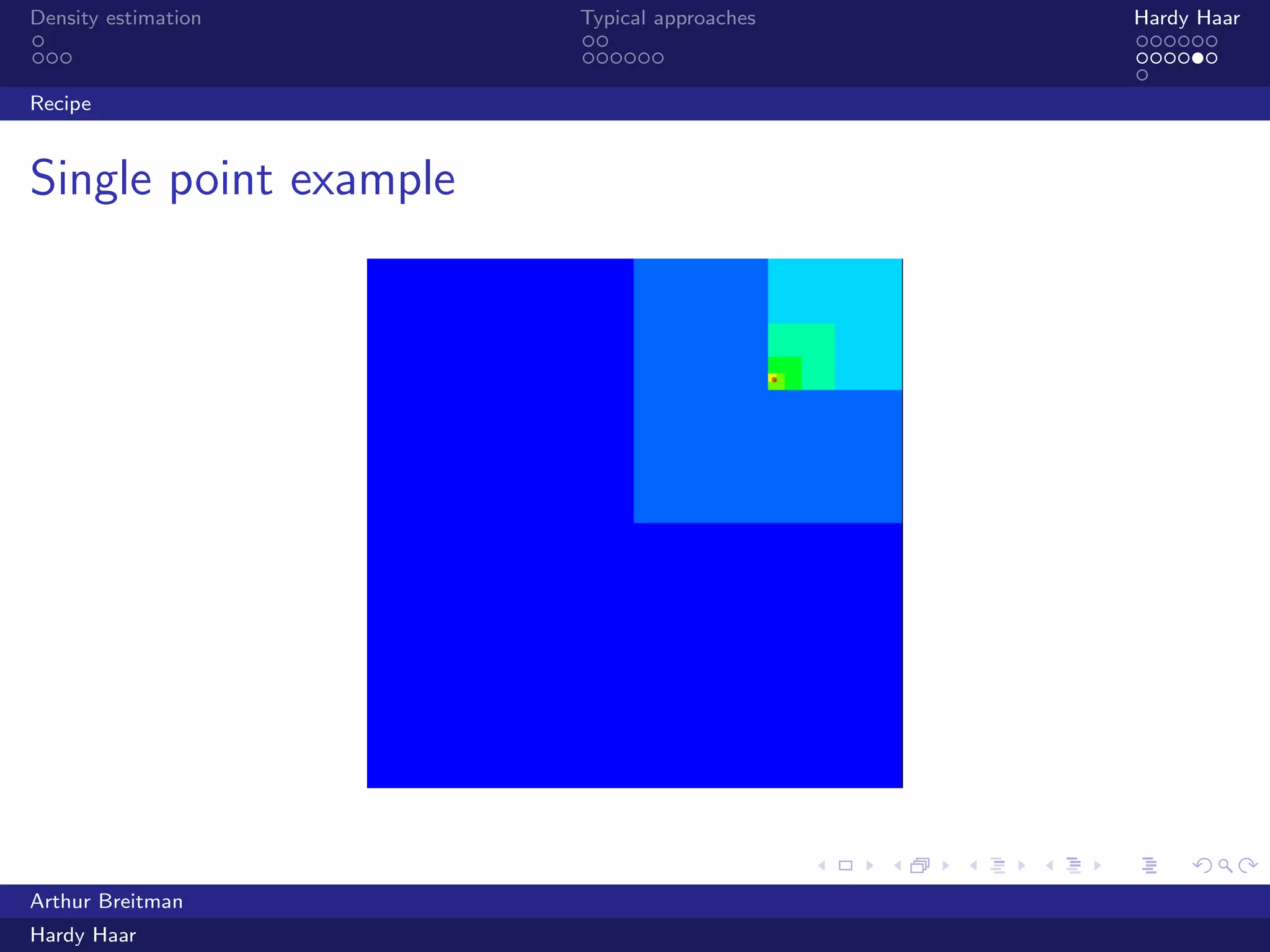

The document discusses density estimation, focusing on the Hardy Haar method, which uses a Bayesian treatment to address shortcomings of traditional approaches. It covers applications in both unsupervised and supervised learning, with mutual information as the key quantity for measuring relationships between variables. It also outlines the technical details of kernel density estimation and the use of wavelet decomposition to build spatial coherence into prior distributions.
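
To make the two baseline ideas mentioned above concrete, the following is a minimal sketch of a conventional Gaussian kernel density estimate and a histogram-based plug-in estimate of mutual information. It is not the Hardy Haar method itself, whose details are not given here. The function names `gaussian_kde_1d` and `histogram_mutual_information`, the bandwidth, and the bin count are all illustrative assumptions.

```python
import numpy as np


def gaussian_kde_1d(samples, grid, bandwidth):
    """Evaluate a 1D Gaussian kernel density estimate on `grid`.

    Hypothetical helper for illustration; `bandwidth` is chosen by hand.
    """
    samples = np.asarray(samples, dtype=float)
    grid = np.asarray(grid, dtype=float)
    # Standardized distances between every grid point and every sample,
    # shape (len(grid), len(samples)).
    z = (grid[:, None] - samples[None, :]) / bandwidth
    kernel = np.exp(-0.5 * z**2) / np.sqrt(2.0 * np.pi)
    # Average the kernels and rescale so the estimate integrates to one.
    return kernel.mean(axis=1) / bandwidth


def histogram_mutual_information(x, y, bins=8):
    """Plug-in mutual information estimate (in nats) from a 2D histogram.

    Hypothetical helper for illustration; the estimate is biased upward
    for small samples and depends on the assumed bin count.
    """
    counts, _, _ = np.histogram2d(x, y, bins=bins)
    pxy = counts / counts.sum()            # joint cell probabilities
    px = pxy.sum(axis=1, keepdims=True)    # marginal of x, shape (bins, 1)
    py = pxy.sum(axis=0, keepdims=True)    # marginal of y, shape (1, bins)
    mask = pxy > 0                         # skip empty cells (0 * log 0 = 0)
    return float(np.sum(pxy[mask] * np.log(pxy[mask] / (px @ py)[mask])))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    x = rng.normal(size=2000)
    noise = rng.normal(size=2000)
    grid = np.linspace(-6.0, 6.0, 601)
    density = gaussian_kde_1d(x, grid, bandwidth=0.3)
    print("KDE integral:", density.sum() * (grid[1] - grid[0]))
    print("MI(x, x):", histogram_mutual_information(x, x))
    print("MI(x, noise):", histogram_mutual_information(x, noise))
```

In this sketch, a variable paired with itself should yield a much larger mutual information than the same variable paired with independent noise. Both estimators depend on a hand-picked smoothing parameter (bandwidth or bin count), which is one commonly cited limitation of such approaches.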