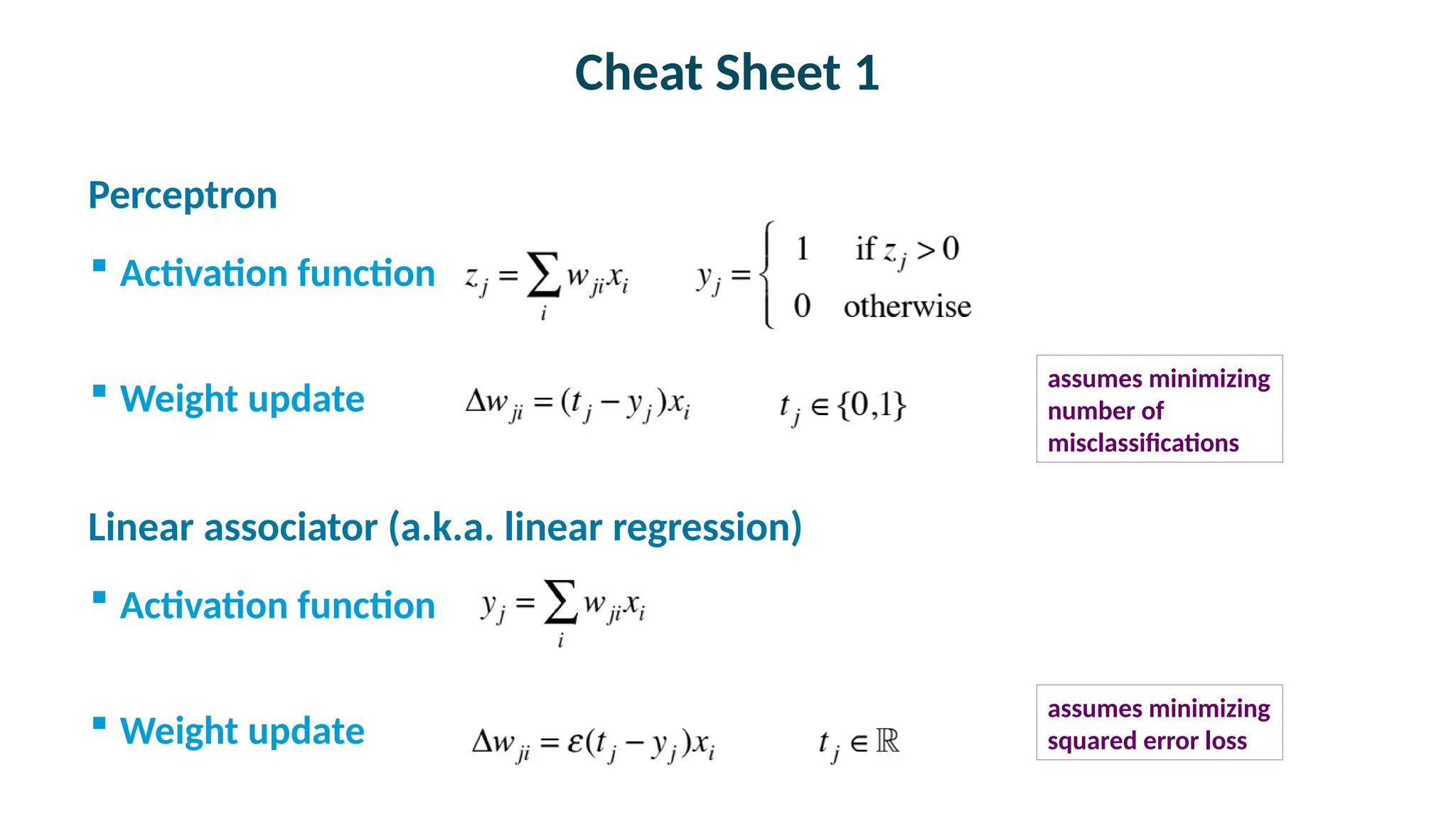

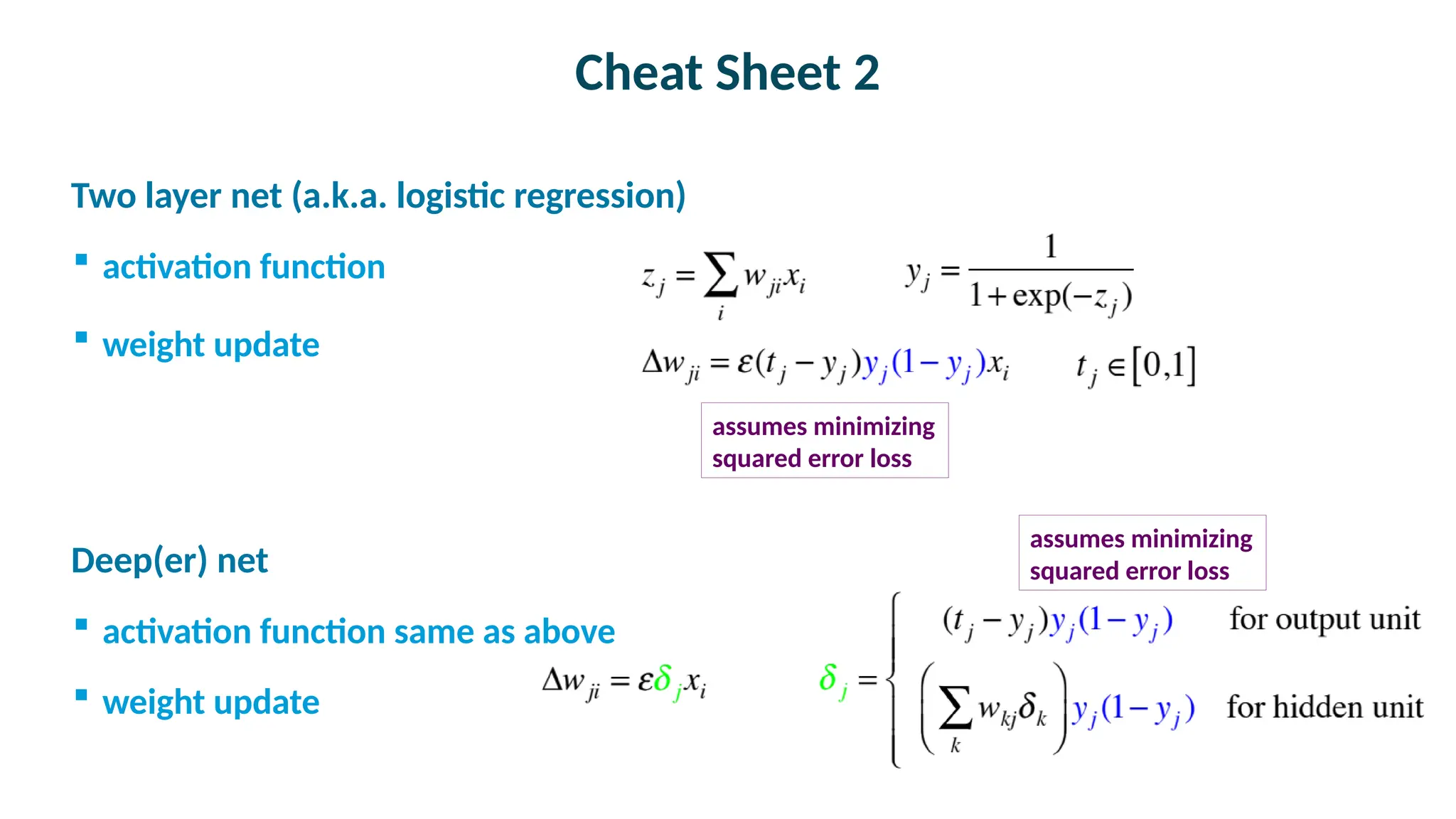

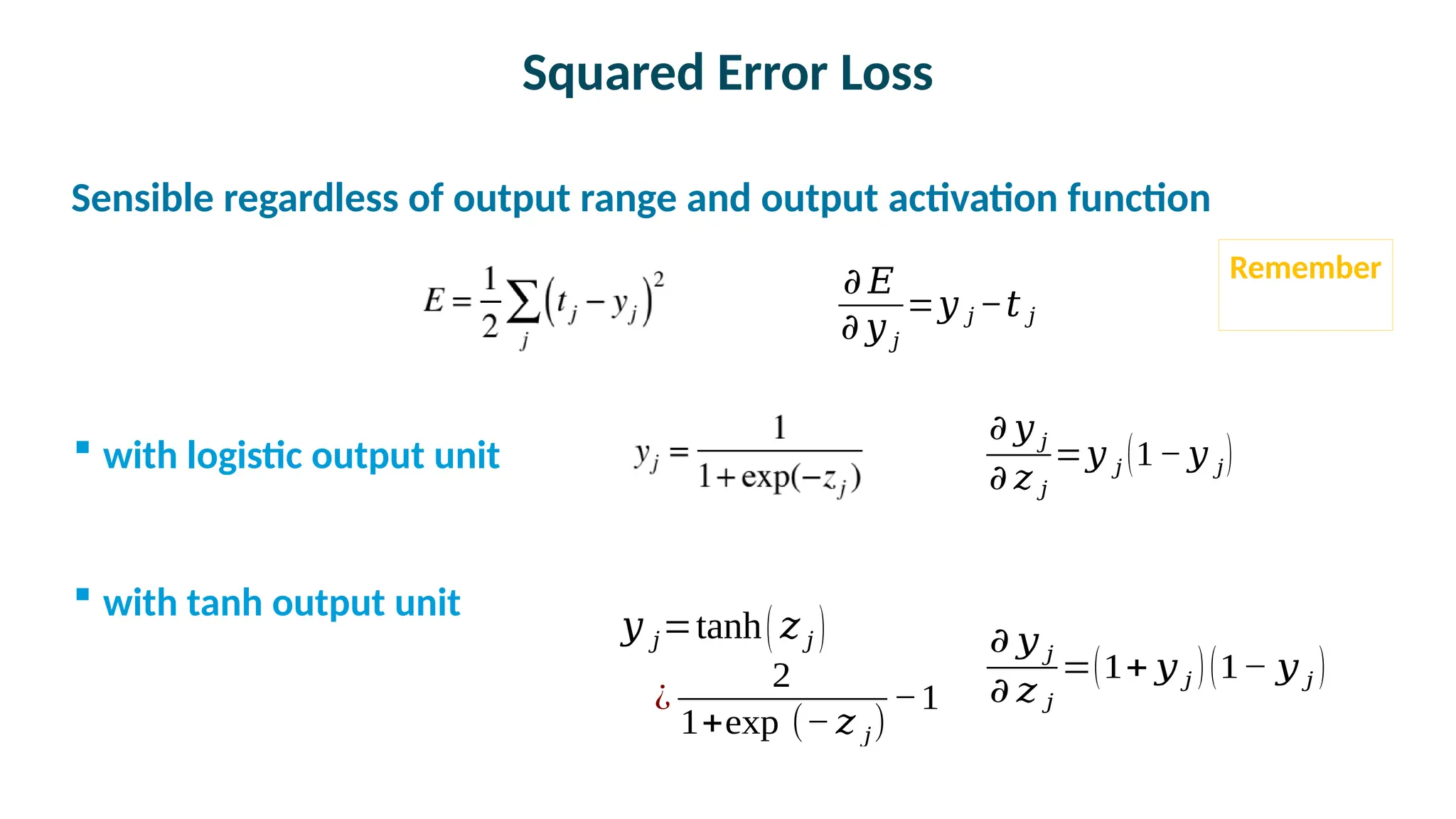

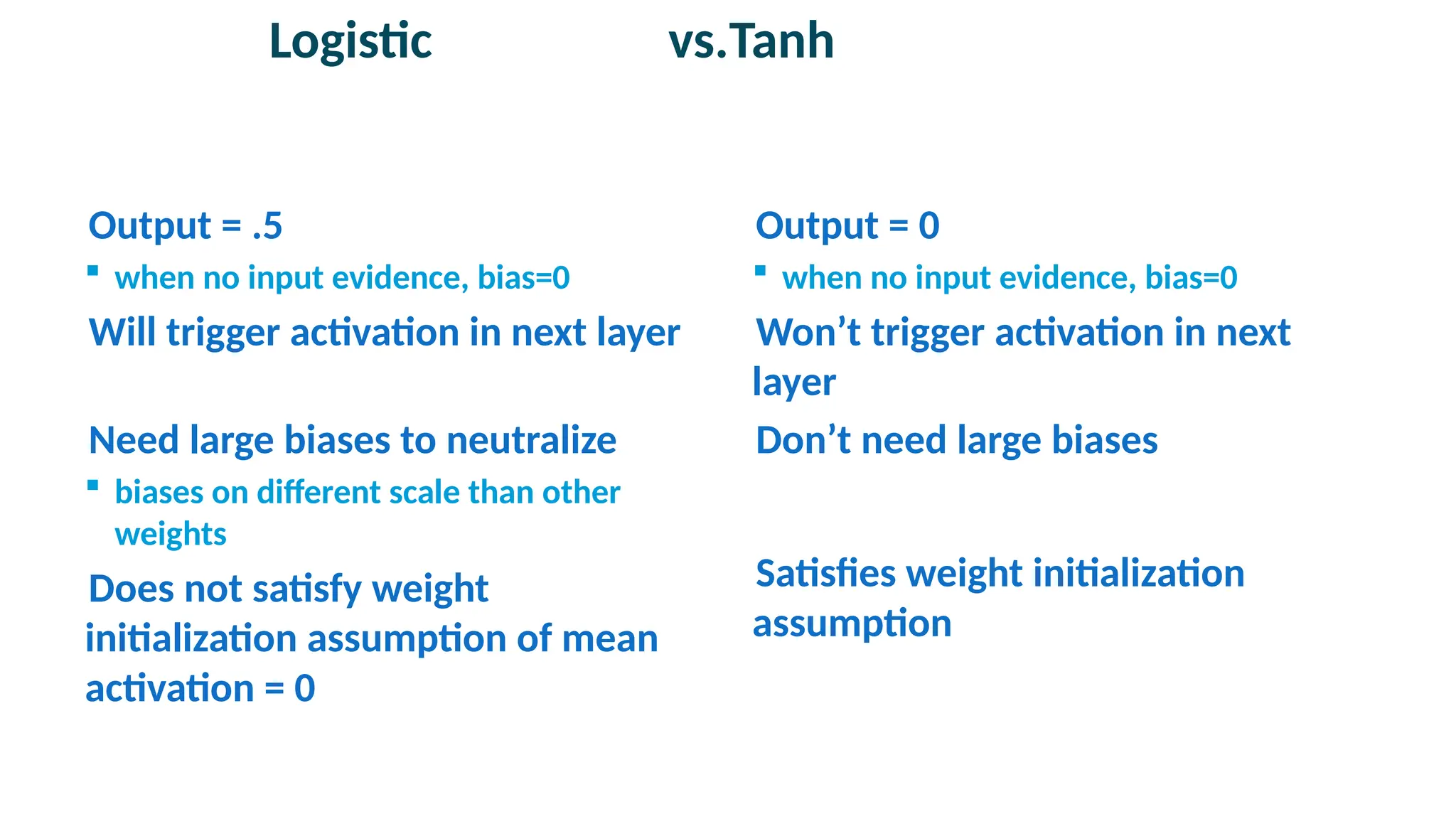

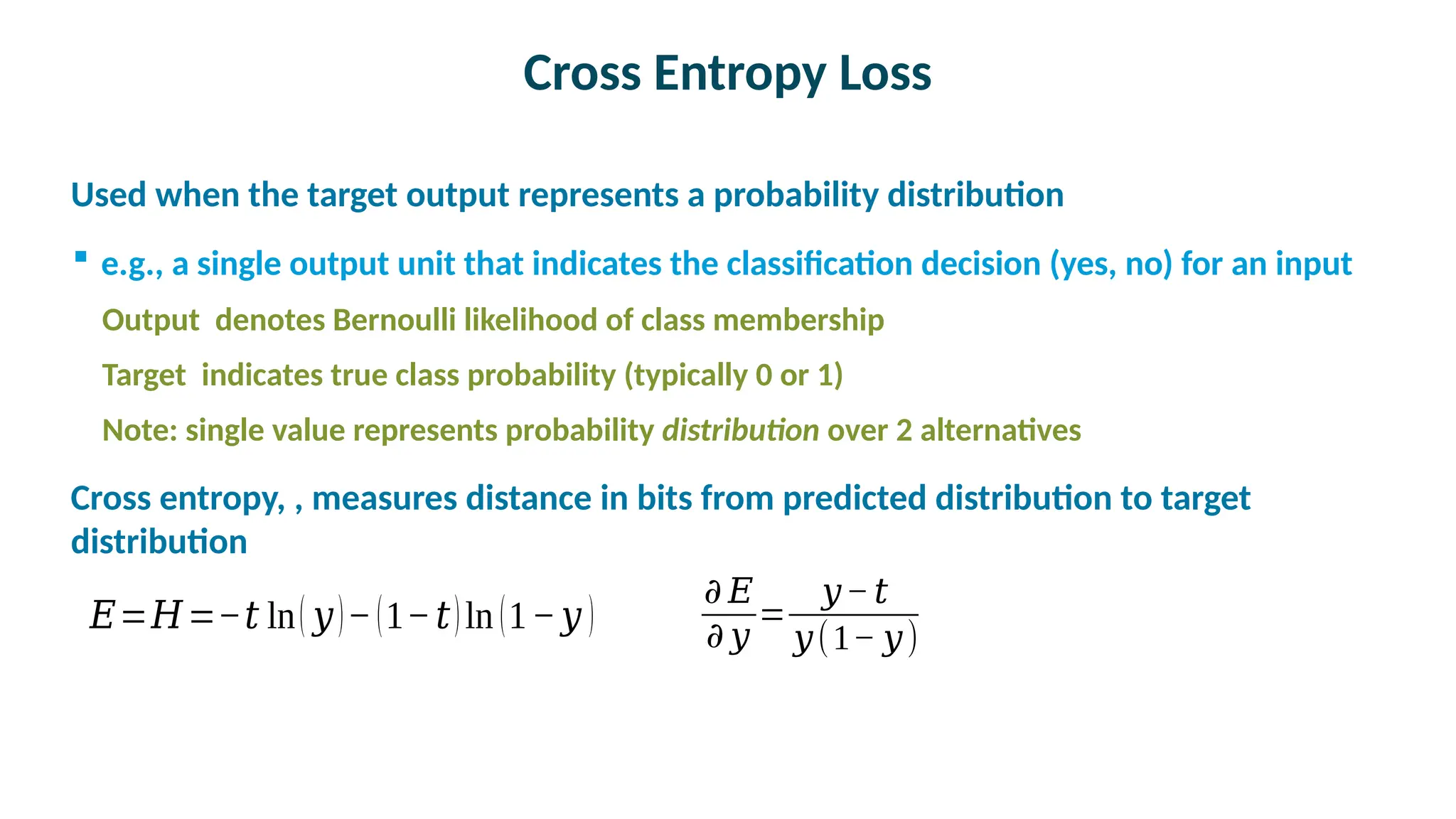

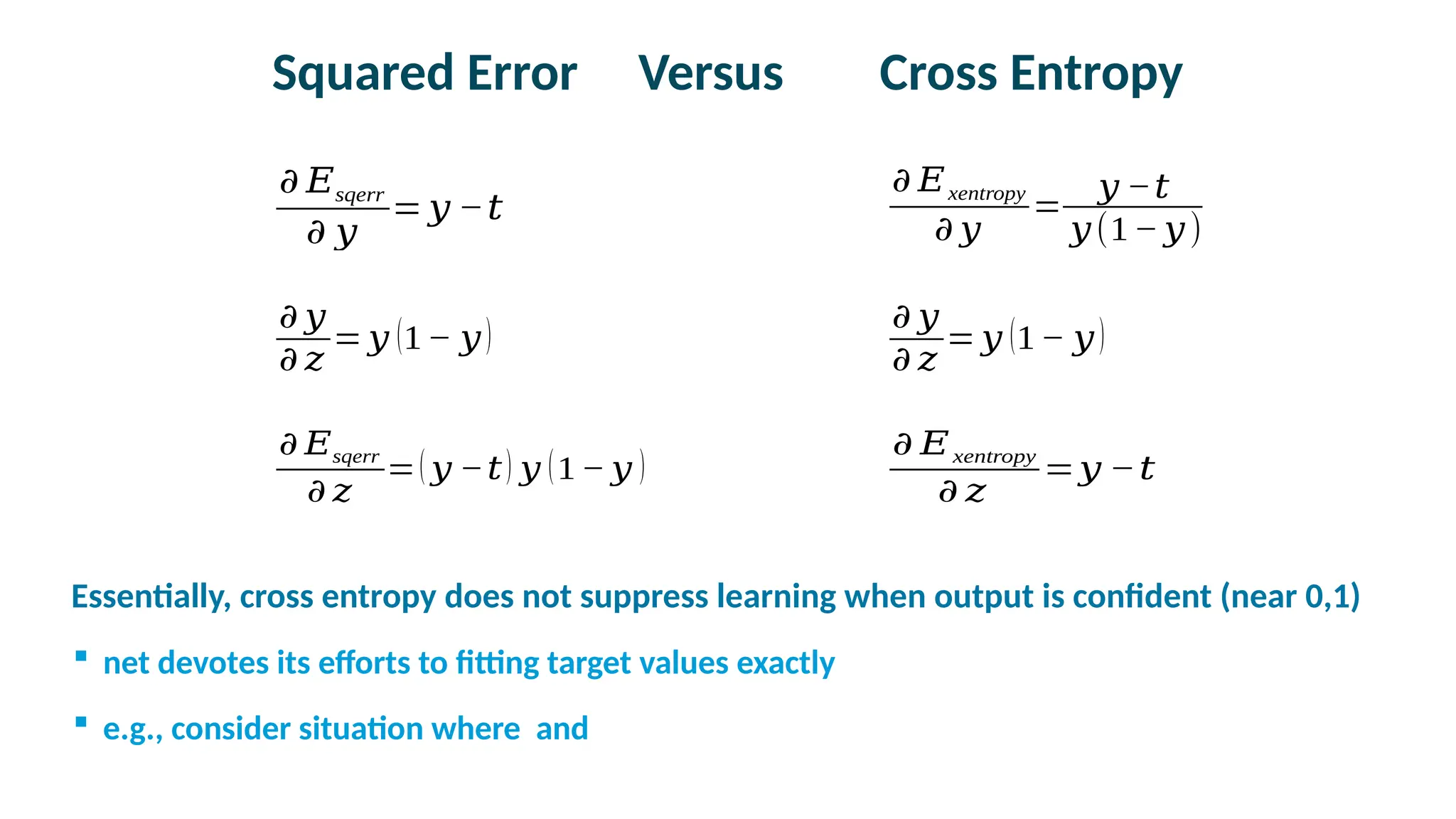

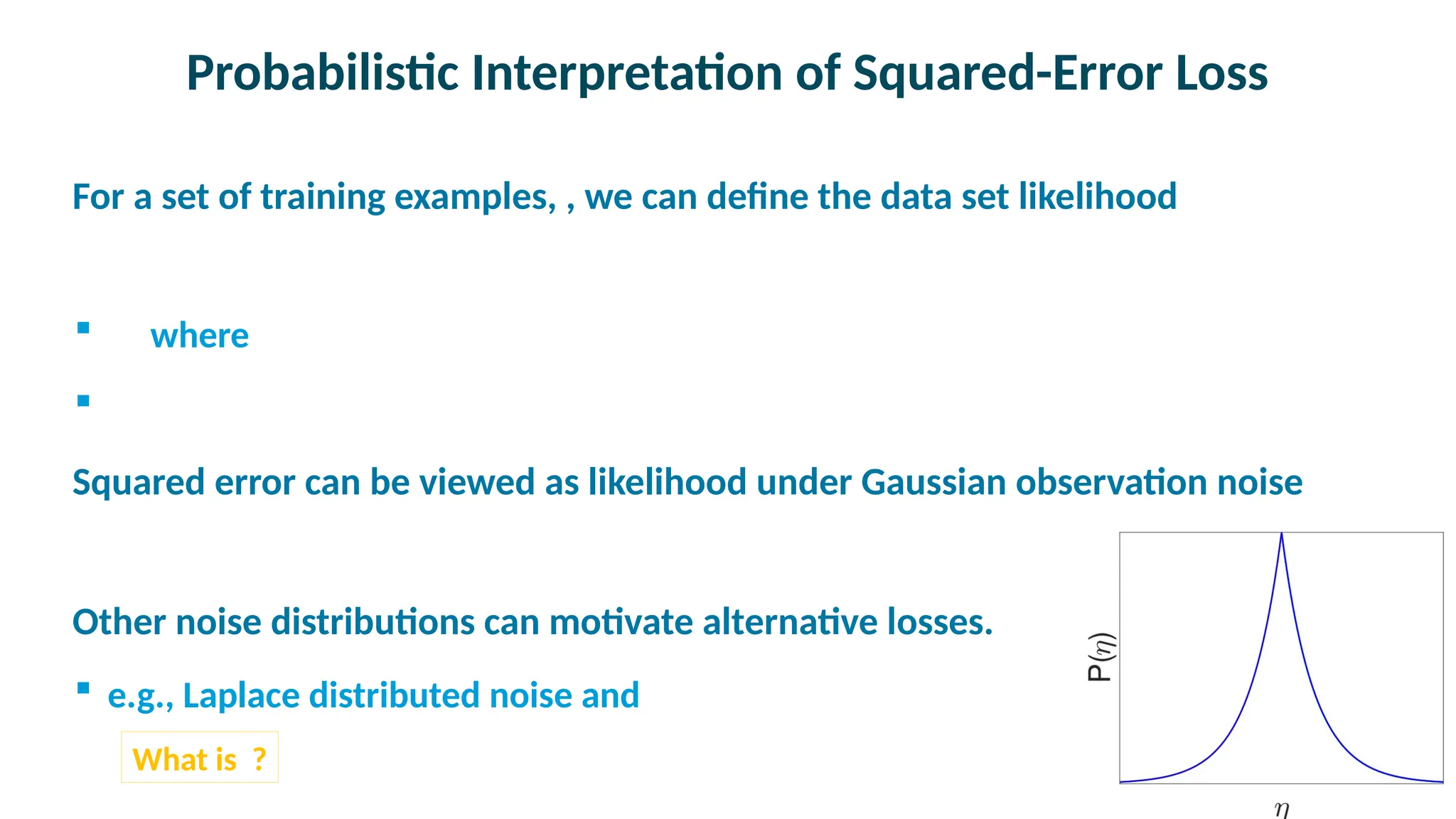

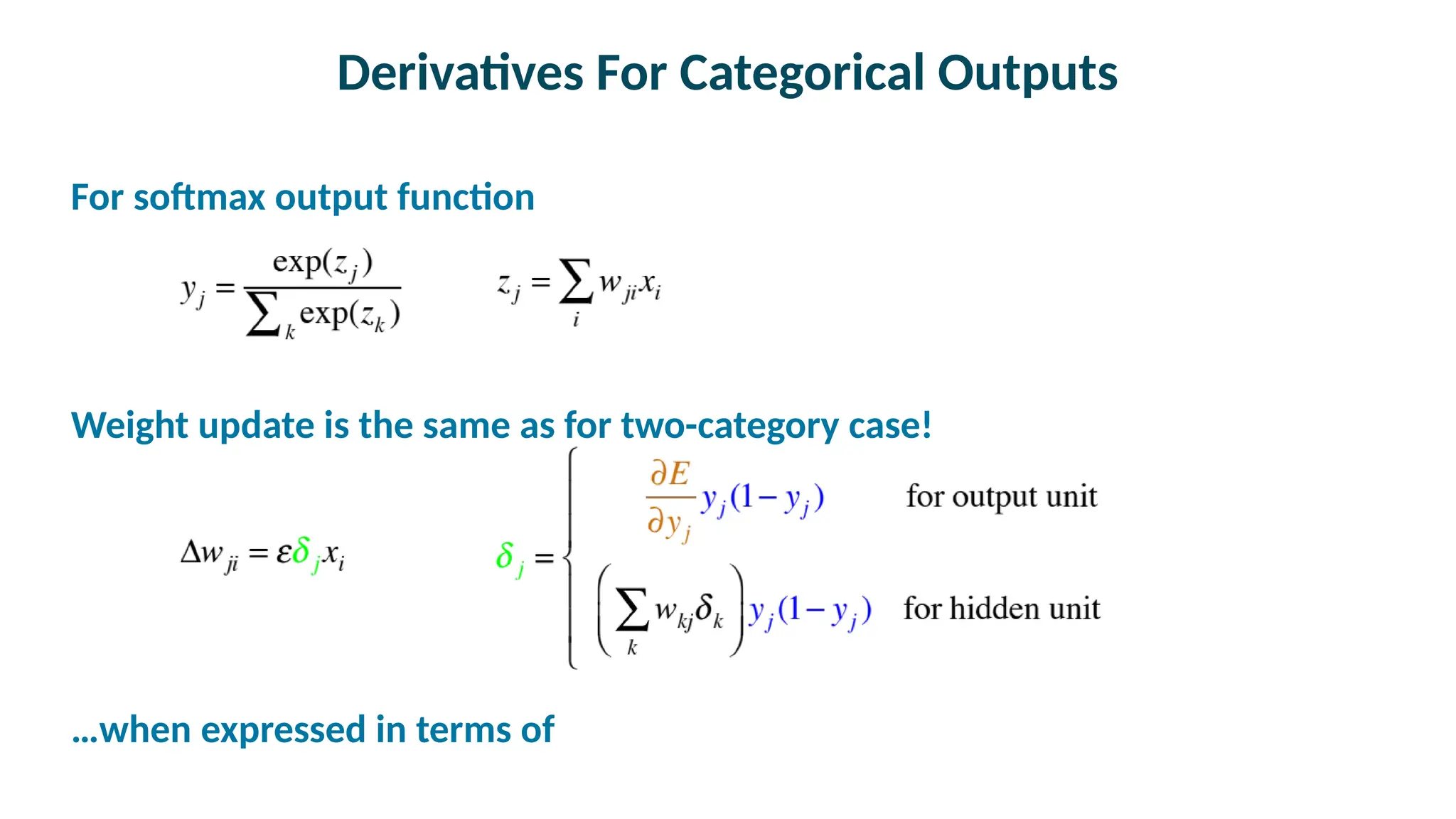

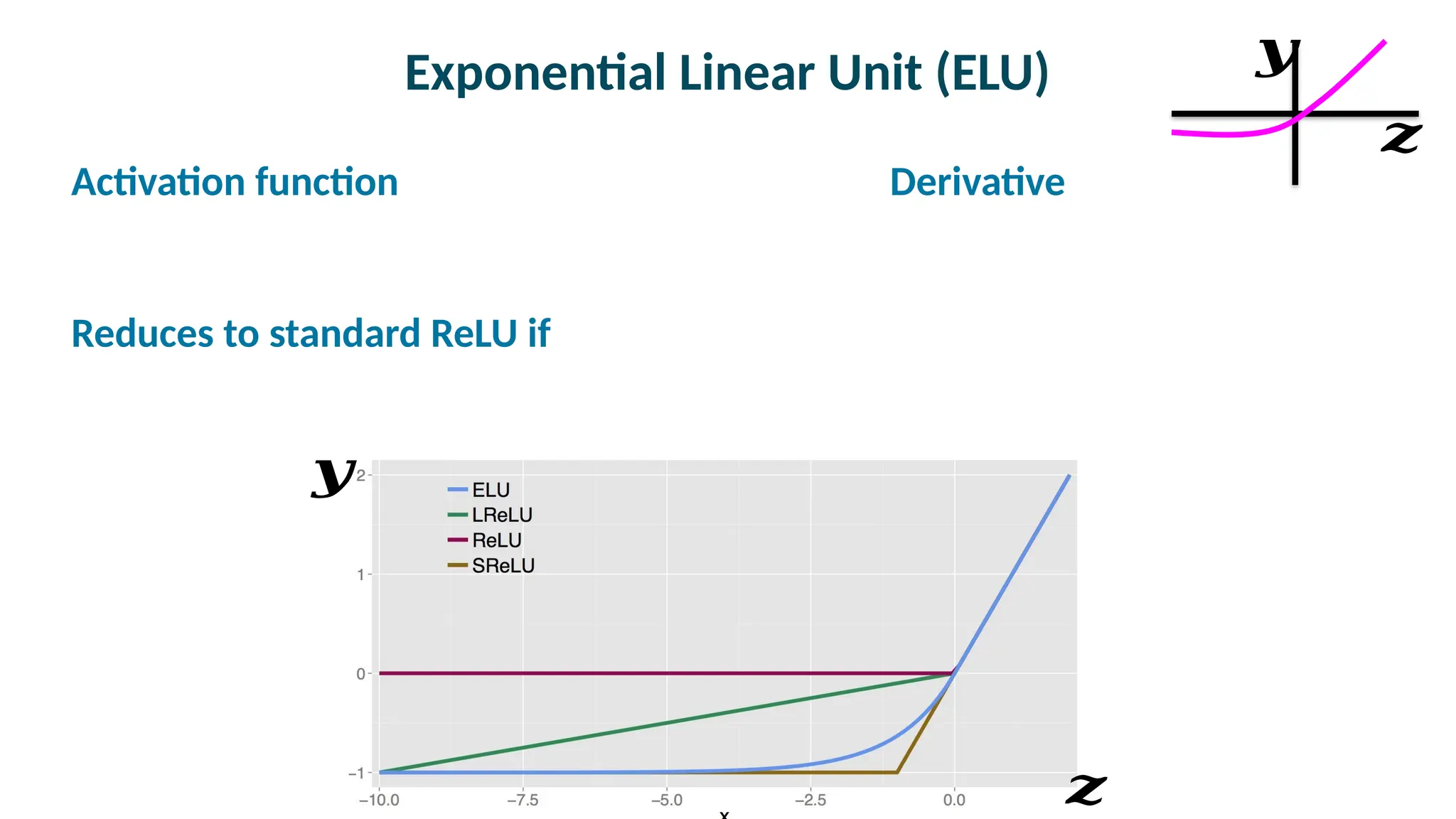

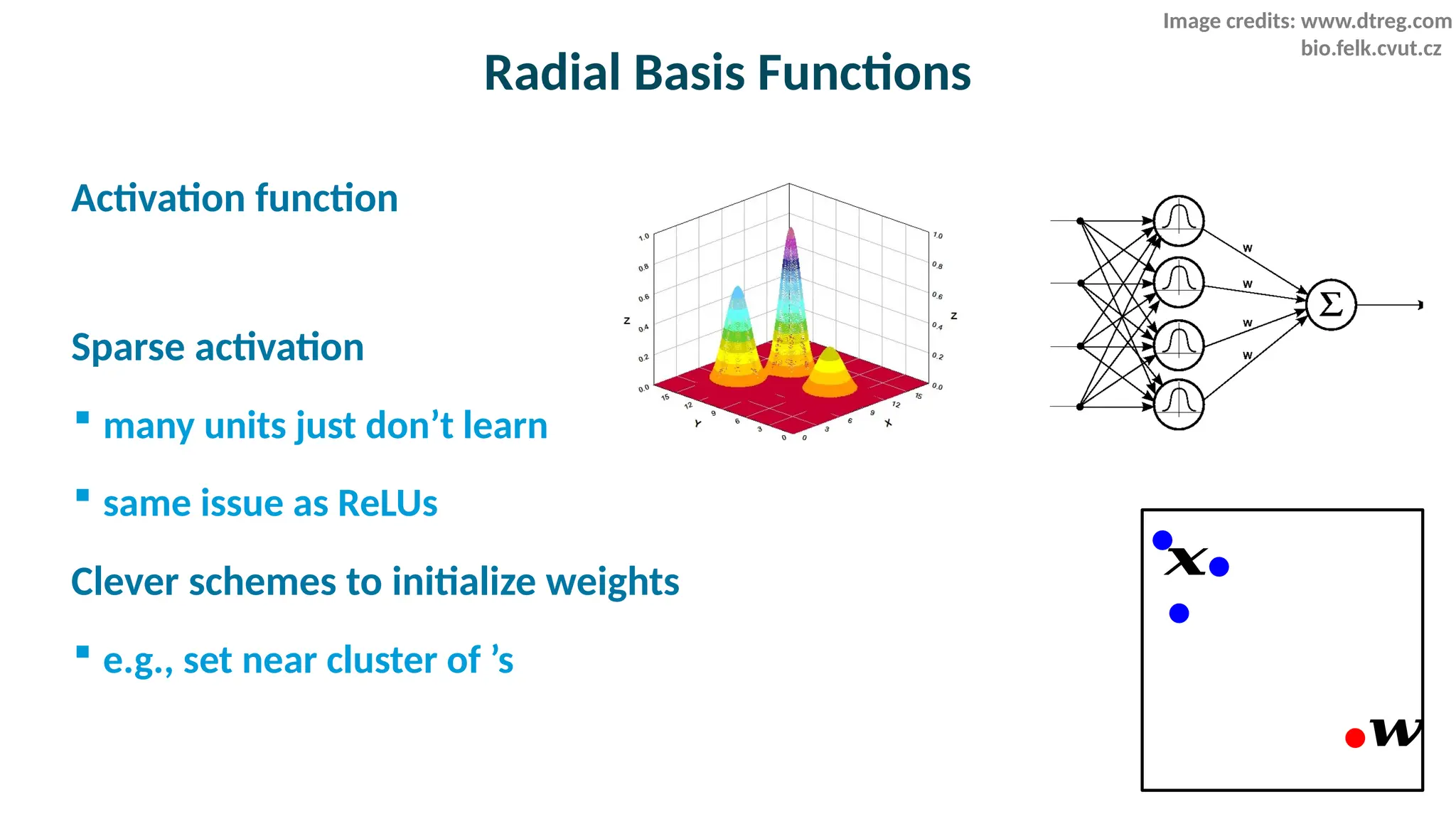

The document gives an overview of activation and loss functions in neural networks, covering models such as the perceptron, the linear associator, and logistic regression. It compares squared error loss with cross-entropy loss, explaining where each applies and how they differ, particularly in terms of output types and biases. It also covers categorical outputs, activation functions such as softmax and ReLU, and issues such as gradient behavior and the efficiency of unit usage.
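As a rough illustration of the functions named in this summary, the following is a minimal sketch in plain Python, not the slides' own code. All function names are hypothetical, and the gradient comparison at the end assumes a single sigmoid output unit, which is one common setting for contrasting the two losses; the slides may frame it differently.

```python
import math

def sigmoid(z):
    """Logistic function, the output nonlinearity of logistic regression."""
    return 1.0 / (1.0 + math.exp(-z))

def relu(z):
    """Rectified linear unit: passes positive inputs, zeroes the rest."""
    return max(0.0, z)

def softmax(logits):
    """Map a list of real scores to probabilities for categorical outputs."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

def squared_error(y, t):
    """Squared error loss for one output y and target t."""
    return 0.5 * (y - t) ** 2

def binary_cross_entropy(y, t):
    """Cross-entropy loss for a probability y in (0, 1) and target t in {0, 1}."""
    return -(t * math.log(y) + (1 - t) * math.log(1 - y))

def categorical_cross_entropy(probs, target_index):
    """Cross-entropy loss for softmax probabilities and a one-hot target."""
    return -math.log(probs[target_index])

# Gradients of each loss with respect to the pre-activation z,
# assuming (for illustration) a sigmoid output y = sigmoid(z).
def grad_squared_error_wrt_z(z, t):
    y = sigmoid(z)
    return (y - t) * y * (1.0 - y)  # chain rule: dL/dy * dy/dz

def grad_cross_entropy_wrt_z(z, t):
    return sigmoid(z) - t  # the y * (1 - y) factor cancels

if __name__ == "__main__":
    z, t = -5.0, 1.0  # a confidently wrong prediction
    print("squared error gradient:", grad_squared_error_wrt_z(z, t))
    print("cross-entropy gradient:", grad_cross_entropy_wrt_z(z, t))
    print("softmax probabilities:", softmax([1.0, 2.0, 3.0]))
```

Under these assumptions, the squared error gradient carries an extra factor of `y * (1 - y)`, which shrinks toward zero when the sigmoid saturates, so a confidently wrong unit learns slowly. The cross-entropy gradient reduces to `y - t` and stays large in that case.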