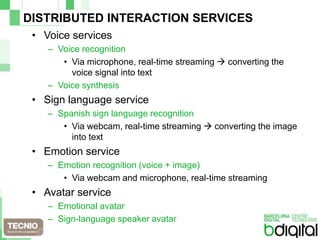

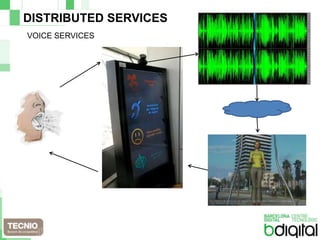

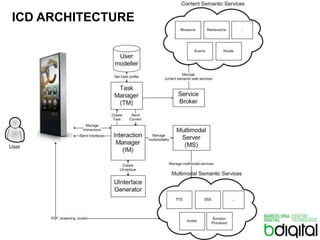

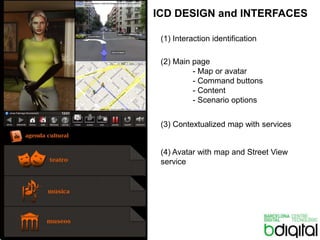

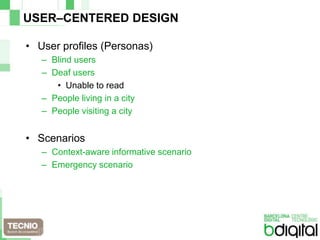

This presentation describes a research project that developed an Interactive Community Display (ICD) for multimodal interaction in distributed and ubiquitous computing environments. Through a web interface, the ICD gives users access to distributed services such as voice recognition, sign language recognition, and emotion recognition. These services can be combined to support context-aware and emergency scenarios. An implementation of the ICD architecture was tested with users and received positive feedback. Future work will focus on improving real-time interaction and mobile usability.