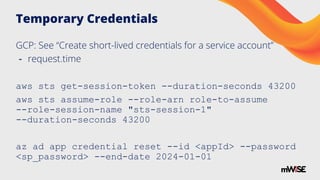

This document is a practical guide to minimizing permissions for cloud forensics and incident response. It covers strategies such as establishing dedicated forensics accounts, using temporary credentials, and implementing tag-based access control, so that investigators can reach the data they need without compromising security. It also outlines specific challenges and solutions for forensic investigations across AWS, Azure, and Google Cloud.

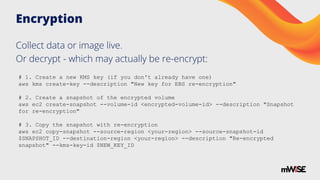

![Controlling Access with Tags

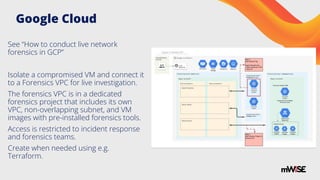

GCP

expression: > resource.matchTag('tagKeys/ForensicsEnabled', '*')

AWS

Condition: StringLike: aws:ResourceTag/Name: ForensicsEnabled

Condition: StringLike: ssm:resourceTag/SSMEnabled: True

Azure

"Condition": "StringLike(Resource[Microsoft.Resources/tags.example_key], '*')"](https://image.slidesharecdn.com/minimizingpermissionsforcloudforensicsapracticalguidefortighteningaccessinthecloud-240922191903-559c2349/85/Minimizing-Permissions-for-Cloud-Forensics_-A-Practical-Guide-for-Tightening-Access-in-the-Cloud-16-320.jpg)
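The tag conditions on this slide all follow the same idea: access is granted only when a resource carries a given tag whose value matches a pattern, with `*` standing for "any value". The sketch below is a local illustration of that matching logic in Python, not any cloud provider's actual policy evaluator. The helper names (`string_like`, `tag_allows`) and the sample inventory are hypothetical, and the wildcard rules assumed here are a case-sensitive match where `*` matches any run of characters and `?` matches exactly one.

```python
import re


def string_like(value: str, pattern: str) -> bool:
    """Case-sensitive wildcard match: * is any run of characters, ? is one character."""
    regex = "".join(
        ".*" if ch == "*" else "." if ch == "?" else re.escape(ch)
        for ch in pattern
    )
    return re.fullmatch(regex, value, flags=re.DOTALL) is not None


def tag_allows(tags: dict[str, str], key: str, pattern: str) -> bool:
    """True when the resource carries `key` and its value matches `pattern`."""
    return key in tags and string_like(tags[key], pattern)


# Hypothetical inventory: instance ID -> tags (illustrative values only).
instances = {
    "i-aaa": {"SSMEnabled": "True"},
    "i-bbb": {"SSMEnabled": "False"},
    "i-ccc": {"Name": "web"},
}

# Which instances would a condition like ssm:resourceTag/SSMEnabled = True cover?
in_scope = [
    instance_id
    for instance_id, tags in instances.items()
    if tag_allows(tags, "SSMEnabled", "True")
]
print(in_scope)  # ['i-aaa']
```

Running a check like this against an exported inventory is one way to preview which systems a tag-scoped role would reach before an investigation starts.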

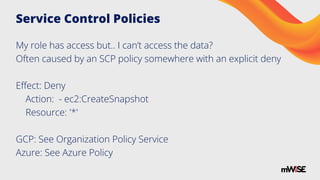

![Example: Pulling Live Data from EC2s](https://image.slidesharecdn.com/minimizingpermissionsforcloudforensicsapracticalguidefortighteningaccessinthecloud-240922191903-559c2349/85/Minimizing-Permissions-for-Cloud-Forensics_-A-Practical-Guide-for-Tightening-Access-in-the-Cloud-17-320.jpg)

    {
      "Sid": "ScopedSsmForensicTriage",
      "Effect": "Allow",
      "Action": [
        "ssm:SendCommand",
        "ssm:DescribeInstanceInformation",
        "ssm:StartSession",
        "ssm:TerminateSession"
      ],
      "Resource": ["arn:aws:ec2:*:*:instance/*"],
      "Condition": {"StringLike": {"ssm:resourceTag/SSMEnabled": ["True"]}}
    }

Pre-deploy the role - this can take more time.

Then tag systems as needed - this is generally easier for the cloud team.
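The "tag systems as needed" step can be sketched in Python as follows. This is a minimal illustration, not the presenter's tooling: the function names `enable_forensics` and `disable_forensics` are hypothetical, and the client argument is assumed to be a boto3 EC2 client (for example `boto3.client("ec2")`), whose `create_tags` and `delete_tags` calls are used here. The tag key and value default to the `SSMEnabled` / `True` pair from the policy on this slide.

```python
from typing import Any


def enable_forensics(
    ec2_client: Any,
    instance_ids: list[str],
    key: str = "SSMEnabled",
    value: str = "True",
) -> None:
    """Tag instances so the pre-deployed, tag-scoped role can reach them."""
    ec2_client.create_tags(
        Resources=instance_ids,
        Tags=[{"Key": key, "Value": value}],
    )


def disable_forensics(
    ec2_client: Any,
    instance_ids: list[str],
    key: str = "SSMEnabled",
) -> None:
    """Remove the tag once triage is finished, taking the instances out of scope."""
    ec2_client.delete_tags(
        Resources=instance_ids,
        Tags=[{"Key": key}],
    )


# Assumed usage (requires boto3 and credentials allowed to tag instances):
#   import boto3
#   enable_forensics(boto3.client("ec2"), ["i-0123456789abcdef0"])
```

Passing the client in as an argument keeps the functions easy to exercise with a stub, and it leaves the choice of credentials, region, and account to the caller.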