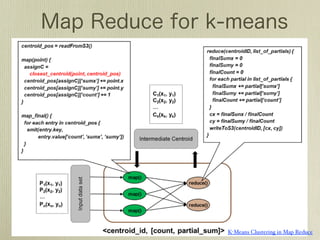

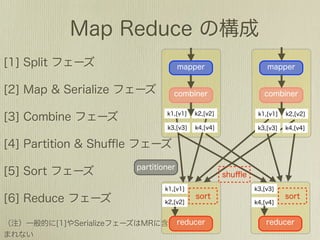

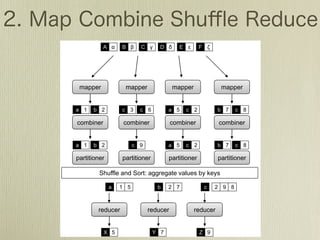

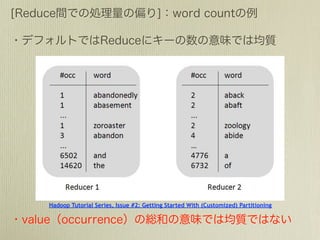

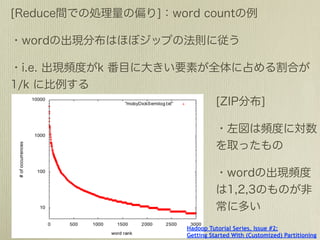

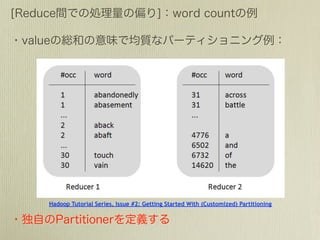

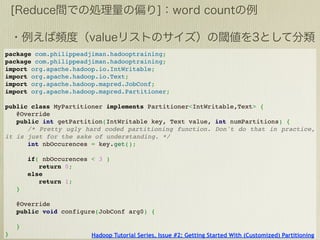

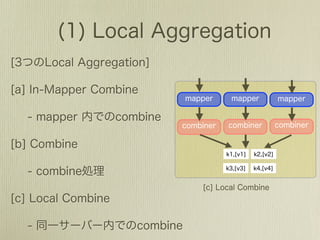

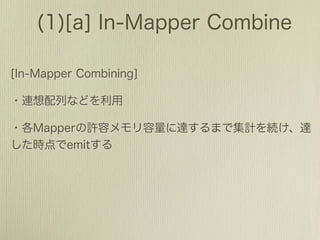

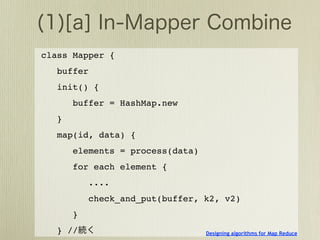

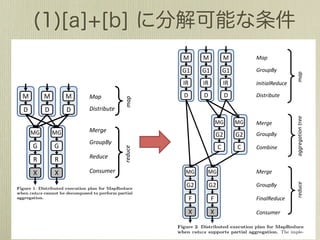

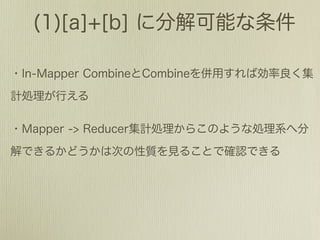

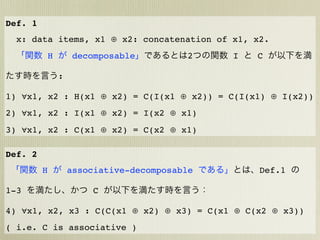

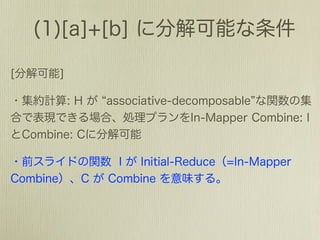

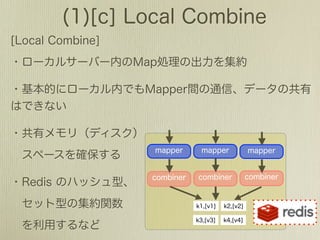

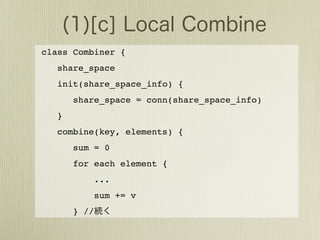

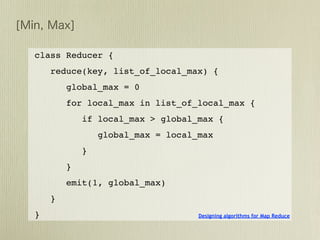

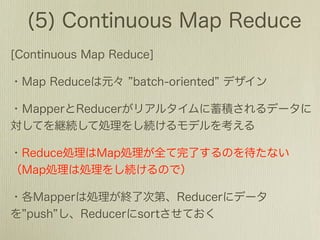

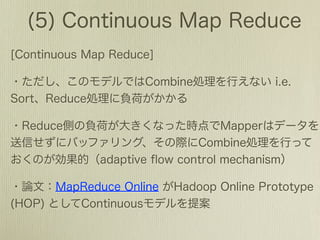

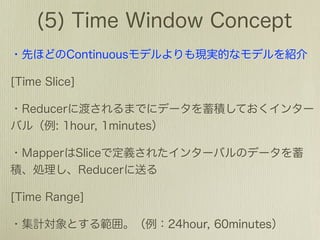

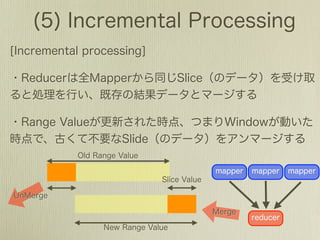

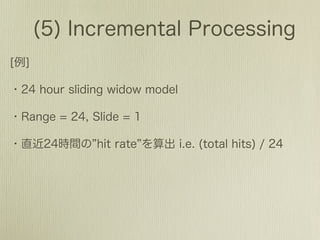

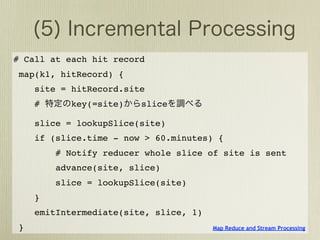

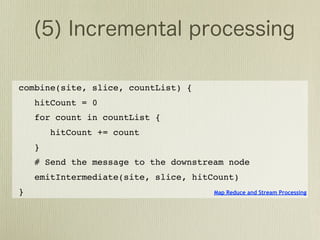

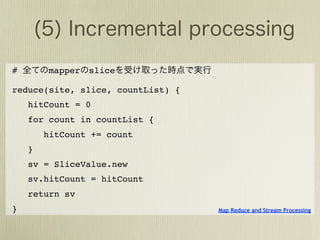

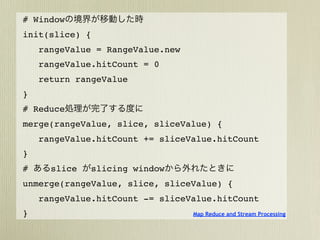

This document discusses using sliding windows to aggregate streaming data in MapReduce. It proposes buffering input tuples in mappers until a window is full, then emitting the aggregate. Combiners and reducers combine partial aggregates across windows. Window ranges are initialized and updated during merging to remove outdated data and handle late arrivals. This approach allows streaming aggregation queries to be executed with MapReduce.
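A minimal Ruby sketch of the buffering approach described above, assuming in-order integer timestamps and a MAX aggregate. `BufferedWindowMax`, `put`, and the window-boundary convention are hypothetical and not taken from the slides. Every raw tuple stays in the buffer until its last window closes, so each one is re-read once per window it belongs to.

```ruby
# Hypothetical sketch: naive sliding-window MAX that buffers raw tuples.
# Assumes integer timestamps that arrive in order, and windows
# [window_end - range, window_end) whose ends are multiples of slide.
class BufferedWindowMax
  def initialize(range, slide, &emit)
    @range = range
    @slide = slide
    @emit = emit          # called as emit.(window_start, window_end, max)
    @buffer = []          # raw [timestamp, value] tuples still needed
    @window_end = slide   # exclusive end of the next window to close
  end

  # Close every window that ends at or before this tuple, then buffer it.
  def put(timestamp, value)
    close_window while timestamp >= @window_end
    @buffer << [timestamp, value]
  end

  private

  def close_window
    start = @window_end - @range
    # Drop tuples older than this window; no later window needs them either.
    @buffer.reject! { |ts, _| ts < start }
    values = @buffer.map { |_, v| v }
    @emit.call(start, @window_end, values.max) unless values.empty?
    @window_end += @slide
  end
end

results = []
w = BufferedWindowMax.new(4, 1) { |s, e, m| results << [s, e, m] }
[[0, 5], [1, 3], [2, 7], [5, 1]].each { |ts, v| w.put(ts, v) }
p results # => [[-3, 1, 5], [-2, 2, 5], [-1, 3, 7], [0, 4, 7], [1, 5, 7]]
```

With RANGE 4 and SLIDE 1, the tuple at timestamp 2 is read by four different windows; that repeated per-tuple work is what pane-based evaluation aims to remove.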

![map: (k1, v1) → [(k2, v2)] // []

//word count

class Mapper

method Map(docid a, doc d)

for all term t ∈ doc d do

Emit(term t, count 1)](https://image.slidesharecdn.com/mapreduce1-110618220620-phpapp01/85/Map-Reduce-22-320.jpg)
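The mapper pseudocode translates almost line for line into Ruby. In this sketch, `word_count_map` is a hypothetical name, the block stands in for `Emit`, and splitting on whitespace is an assumed tokenizer.

```ruby
# Word-count mapper sketch mirroring the pseudocode: for every term in the
# document, emit (term, 1). The block plays the role of Emit.
# Splitting on whitespace is a simplifying assumption about tokenization.
def word_count_map(_docid, doc)
  doc.split.each { |term| yield term, 1 }
end

pairs = []
word_count_map(1, "a b a") { |term, count| pairs << [term, count] }
p pairs # => [["a", 1], ["b", 1], ["a", 1]]
```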

![> require 'msgpack'

> msg = [1,2,3].to_msgpack

#=> "\x93\x01\x02\x03"

> MessagePack.unpack(msg) #=> [1,2,3]](https://image.slidesharecdn.com/mapreduce1-110618220620-phpapp01/85/Map-Reduce-23-320.jpg)
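The bytes shown above follow the MessagePack format: `0x93` is a fixarray header (`0x90` plus the element count 3), and each small integer is a one-byte positive fixint. As a sketch that needs no gem, the hypothetical helpers below encode and decode only that subset (arrays of at most 15 integers in 0..127); anything larger needs the other MessagePack types, which the `msgpack` gem handles.

```ruby
# Hypothetical helpers covering only fixarray (<= 15 elements) of
# positive fixints (0..127); not a general MessagePack implementation.
def pack_small_int_array(ints)
  raise ArgumentError, "fixarray holds at most 15 elements" if ints.size > 15
  unless ints.all? { |i| i.between?(0, 127) }
    raise ArgumentError, "positive fixint must be in 0..127"
  end
  # Header byte 0x90 | count, then one byte per integer.
  ([0x90 | ints.size] + ints).pack("C*")
end

def unpack_small_int_array(bytes)
  raise ArgumentError, "empty input" if bytes.empty?
  header, *rest = bytes.unpack("C*")
  raise ArgumentError, "not a fixarray" unless (header & 0xF0) == 0x90
  rest.first(header & 0x0F) # low 4 bits of the header hold the count
end

msg = pack_small_int_array([1, 2, 3])
puts msg.unpack1("H*")            # prints 93010203
p unpack_small_int_array(msg)     # => [1, 2, 3]
```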

![// word count

class Combiner

method Combine(string t, counts [c1, c2, . . .])

sum ← 0

for all count c ∈ counts [c1, c2, . . .] do

sum ← sum + c

Emit(string t, count sum)](https://image.slidesharecdn.com/mapreduce1-110618220620-phpapp01/85/Map-Reduce-24-320.jpg)
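A Ruby sketch of the combiner, assuming one map task's output is available as `[term, count]` pairs; the name `word_count_combine` and the sample data are hypothetical. Because the combiner emits the same `(term, count)` type it receives, the framework may apply it zero, one, or several times without changing the final totals.

```ruby
# Combiner sketch: sums the partial counts one mapper produced for a term,
# so fewer [term, count] pairs cross the network during the shuffle.
def word_count_combine(term, counts)
  yield term, counts.sum
end

# Hypothetical local output of one mapper.
mapper_output = [["a", 1], ["b", 1], ["a", 1]]
combined = []
mapper_output.group_by(&:first).each do |term, pairs|
  word_count_combine(term, pairs.map(&:last)) { |t, s| combined << [t, s] }
end
p combined # => [["a", 2], ["b", 1]]
```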

![reduce: (k2, [v2]) → [(k3, v3)]

//word count

class Reducer

method Reduce(term t, counts [c1, c2, . . .])

sum ← 0

for all count c ∈ counts [c1,c2,...] do

sum ← sum + c

Emit(term t, count sum)](https://image.slidesharecdn.com/mapreduce1-110618220620-phpapp01/85/Map-Reduce-27-320.jpg)
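To see mapper, shuffle, and reducer together, here is a single-process Ruby simulation of the word-count job. It is only a sketch: the helper names are hypothetical, and `shuffle_phase` stands in for the framework's grouping and sorting of intermediate keys.

```ruby
# Map: every document becomes a list of [term, 1] pairs.
def map_phase(docs)
  docs.flat_map { |_docid, doc| doc.split.map { |t| [t, 1] } }
end

# Shuffle: group values by key and order keys, as the framework would.
def shuffle_phase(pairs)
  pairs.group_by(&:first).transform_values { |ps| ps.map(&:last) }.sort.to_h
end

# Reduce: sum all counts for one term.
def word_count_reduce(term, counts)
  [term, counts.sum]
end

docs = { 1 => "a b a", 2 => "b c" }
result = shuffle_phase(map_phase(docs)).map { |t, cs| word_count_reduce(t, cs) }
p result # => [["a", 2], ["b", 2], ["c", 1]]
```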

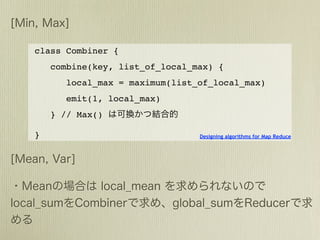

![check_and_put(buffer, k2, v2) {

if buffer.full {

for each k in buffer.keys {

emit(k, buffer[k])

}

buffer.clear() // start a fresh buffer after flushing

}

buffer.incrby(k2, v2) // H[k2]+=v2; also runs after a flush, so (k2, v2) is never dropped

}

close() {

for each k2 in buffer.keys {

emit(k2, buffer[k2])

}

}

Designing algorithms for Map Reduce](https://image.slidesharecdn.com/mapreduce1-110618220620-phpapp01/85/Map-Reduce-43-320.jpg)
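A Ruby sketch of the in-mapper combining buffer: flush everything when the buffer is full, clear it, and then add the incoming pair so it is not lost. `BufferedCombiner` and its capacity (counted in distinct keys) are assumptions; as a small refinement over the slide, it skips the flush when the incoming key is already buffered, since incrementing an existing entry does not grow memory.

```ruby
# Hypothetical in-mapper combiner with a bounded buffer of partial sums.
class BufferedCombiner
  def initialize(capacity, &emit)
    @capacity = capacity   # maximum number of distinct keys held at once
    @emit = emit           # called as emit.(key, partial_sum)
    @buffer = Hash.new(0)  # key -> partial sum (H on the slide)
  end

  def check_and_put(key, value)
    # Flush only when a new key arrives and the buffer is already full.
    flush if @buffer.size >= @capacity && !@buffer.key?(key)
    @buffer[key] += value  # H[key] += value, also right after a flush
  end

  # Called once when the map task ends, so no partial sums are lost.
  def close
    flush
  end

  private

  def flush
    @buffer.each { |k, v| @emit.call(k, v) }
    @buffer.clear
  end
end

out = []
combiner = BufferedCombiner.new(2) { |k, v| out << [k, v] }
%w[a b a c a].each { |word| combiner.check_and_put(word, 1) }
combiner.close
p out # => [["a", 2], ["b", 1], ["c", 1], ["a", 1]]
```

The reducer still sums `a` to 3; the buffer only bounds memory, at the cost of sometimes emitting more than one partial sum per key.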

![(t1, m1, r80521), (t1, m2, r14209), (t1, m3, r76042),

(t2, m1, r21823), (t2, m2, r66508), (t2, m3, r98347),...

map: m1 → (t1, r80521) //

// t1,t2,t3,...

(m1) → [(t1, r80521), (t3, r146925), (t2, r21823)]

(m2) → [(t2, r66508), (t1, r14209), (t3, r14720)]](https://image.slidesharecdn.com/mapreduce1-110618220620-phpapp01/85/Map-Reduce-52-320.jpg)
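Keying on the sensor id alone means the reducer receives each sensor's `(t, r)` values in no guaranteed order and must buffer and sort them itself. A Ruby sketch using the readings from the slide (t1, t2, t3 written as 1, 2, 3; the helper names are hypothetical):

```ruby
# (timestamp, sensor, reading) tuples from the slide.
readings = [
  [1, "m1", "r80521"], [1, "m2", "r14209"], [1, "m3", "r76042"],
  [2, "m1", "r21823"], [2, "m2", "r66508"], [2, "m3", "r98347"],
  [3, "m1", "r146925"], [3, "m2", "r14720"]
]

# map: key on the sensor id, carry [timestamp, reading] as the value.
def map_reading(t, m, r)
  [m, [t, r]]
end

# reduce: values arrive in no particular order, so buffer them all in
# memory and sort by timestamp.
def reduce_sensor(m, values)
  [m, values.sort_by(&:first)]
end

# Shuffle with a fixed seed to mimic the framework's arbitrary value order.
pairs = readings.map { |t, m, r| map_reading(t, m, r) }.shuffle(random: Random.new(42))
groups = pairs.group_by(&:first).transform_values { |ps| ps.map(&:last) }
result = groups.sort.map { |m, values| reduce_sensor(m, values) }
p result.first # => ["m1", [[1, "r80521"], [2, "r21823"], [3, "r146925"]]]
```

The in-memory `sort_by` in `reduce_sensor` is the weak point: a sensor with more readings than fit in reducer memory breaks this design.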

![map: (m1, t1) → r80521

(m1, t1) → [(r80521)] // t1,t2,t3,...

(m1, t2) → [(r21823)]

(m1, t3) → [(r146925)]](https://image.slidesharecdn.com/mapreduce1-110618220620-phpapp01/85/Map-Reduce-53-320.jpg)
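Moving the timestamp into the key (value-to-key conversion) lets the framework's sort do the ordering. A sketch in Ruby, assuming two reducers and a hypothetical `partition` function that routes on the sensor id only; in Hadoop this role is played by a custom `Partitioner`, usually together with a grouping comparator.

```ruby
readings = [
  [1, "m1", "r80521"], [1, "m2", "r14209"], [1, "m3", "r76042"],
  [2, "m1", "r21823"], [2, "m2", "r66508"], [2, "m3", "r98347"],
  [3, "m1", "r146925"], [3, "m2", "r14720"]
]

# map: the composite key [m, t] carries the timestamp; the value is r.
def map_reading_composite(t, m, r)
  [[m, t], r]
end

# Partition on the sensor id alone (the natural key) so every composite key
# for a sensor reaches the same reducer. Summing bytes keeps it deterministic.
def partition(composite_key, num_reducers)
  composite_key.first.bytes.sum % num_reducers
end

num_reducers = 2
partitions = Array.new(num_reducers) { [] }
readings.map { |t, m, r| map_reading_composite(t, m, r) }
        .shuffle(random: Random.new(7))
        .each { |key, r| partitions[partition(key, num_reducers)] << [key, r] }

# The framework sorts each reducer's input by the full composite key, so a
# sensor's readings stream past in time order without in-memory buffering.
reducer_inputs = partitions.map { |part| part.sort_by(&:first) }

reducer_inputs.each_with_index do |input, i|
  input.each { |(m, t), r| puts "reducer #{i}: #{m} t#{t} #{r}" }
end
```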

![…computes sub-aggregates over each pane, and "rolls up" the pane-aggregates to compute window-aggregates. Our experimental study shows that using panes has significant performance benefits.

Many applications need to process streams, for example, financial data analysis, network traffic monitoring, and telecommunication monitoring. Several database research groups are building Data Stream Management Systems (DSMS) so that applications can issue queries to get timely information from streams. Managing and processing streams gives rise to challenges that have been extensively discussed and recognized […].

An important class of queries over data streams is sliding-window aggregate queries. Consider an online auction system in which bids on auction items are streamed into a central auction processing system. The schema of each bid is: <item-id, bid-price, timestamp>. For ease of presentation, we assume that bids arrive in order on their timestamp attribute. (We are actively investigating processing disordered data streams.) Query 1 shows an example of a sliding-window aggregate query.

Query 1: "Find the maximum bid price for the past 4 minutes and update the result every 1 minute."

SELECT max(bid-price)

FROM bids[WATTR timestamp

RANGE 4 minutes

SLIDE 1 minute]

In the query above, we introduce a window specification with three parameters: RANGE specifies the window size, SLIDE specifies how the window moves, and WATTR specifies the windowing attribute on which the RANGE and SLIDE parameters are defined. The window specification of Query 1 breaks the bid stream into overlapping 4-minute sub-streams that start every minute, with respect to the timestamp attribute. These overlapping sub-streams are called sliding windows. Query 1 calculates the …

… a flexible way to monitor streaming data. Current proposals for evaluating sliding-window aggregate queries buffer each input tuple until it is no longer needed [1]. Since each input tuple belongs to multiple windows, such approaches buffer a tuple until it is processed for the aggregate over the last window to which it belongs. Each input tuple is accessed multiple times, once for each window that it participates in.

We see two problems with such approaches. First, the buffer size required is unbounded: at any time instant, all tuples contained in the current window are in the buffer, and so the size of the required buffers is determined by the window range and the data arrival rate. Second, processing each input tuple multiple times leads to a high computation cost. For example, in Query 1, each input tuple is processed four times. As the ratio of RANGE over SLIDE increases, so does the number of times each tuple is processed. Considering the large volume and fast arrival rate of streaming data, reducing the amount of required buffer space (ideally to a constant bound) and computation time is an important …

No Pane, No Gain: Efficient Evaluation of Sliding-Window

Aggregates over Data Streams](https://image.slidesharecdn.com/mapreduce1-110618220620-phpapp01/85/Map-Reduce-70-320.jpg)
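The paper's idea of panes can be sketched in Ruby for Query 1's MAX aggregate. The sketch assumes integer timestamps, a pane size of gcd(RANGE, SLIDE), and windows ending on multiples of SLIDE; the function name and boundary convention are hypothetical. Each tuple updates exactly one pane aggregate, and each window then combines RANGE/pane pane aggregates instead of revisiting raw tuples.

```ruby
# Pane-based sliding-window MAX: a sketch of the pane idea, not the paper's code.
# Assumes integer timestamps >= 0 and windows [window_end - range, window_end)
# whose ends are multiples of slide.
def pane_window_max(tuples, range, slide)
  return [] if tuples.empty?

  pane = range.gcd(slide) # pane size divides both RANGE and SLIDE

  # Step 1: a single pass; each tuple updates exactly one pane aggregate.
  pane_max = {}
  tuples.each do |ts, value|
    start = ts - ts % pane
    pane_max[start] = [pane_max.fetch(start, value), value].max
  end

  # Step 2: each window rolls up the pane aggregates it covers.
  min_ts, max_ts = tuples.map(&:first).minmax
  first_end = (min_ts / slide + 1) * slide
  last_end = (max_ts / slide + 1) * slide
  windows = (first_end..last_end).step(slide).map do |win_end|
    maxes = (win_end - range).step(win_end - pane, pane).map { |s| pane_max[s] }.compact
    [win_end - range, win_end, maxes.max] unless maxes.empty?
  end
  windows.compact
end

p pane_window_max([[0, 5], [1, 3], [2, 7], [5, 1]], 4, 2)
# => [[-2, 2, 5], [0, 4, 7], [2, 6, 7]]
```

This roll-up is valid for MAX because the maximum of per-pane maxima is the window maximum; SUM and COUNT decompose the same way, whereas a holistic aggregate such as the median does not.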