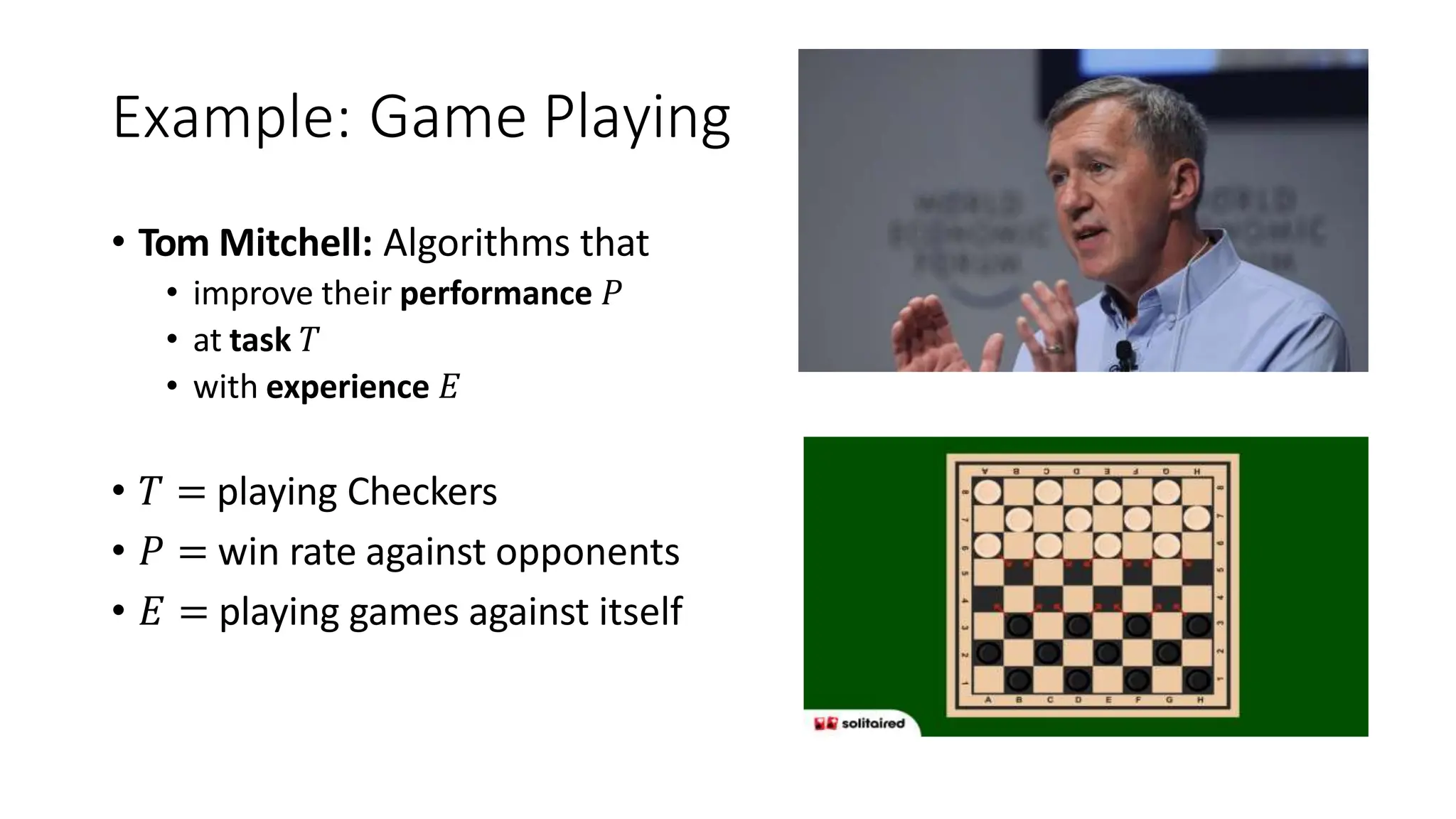

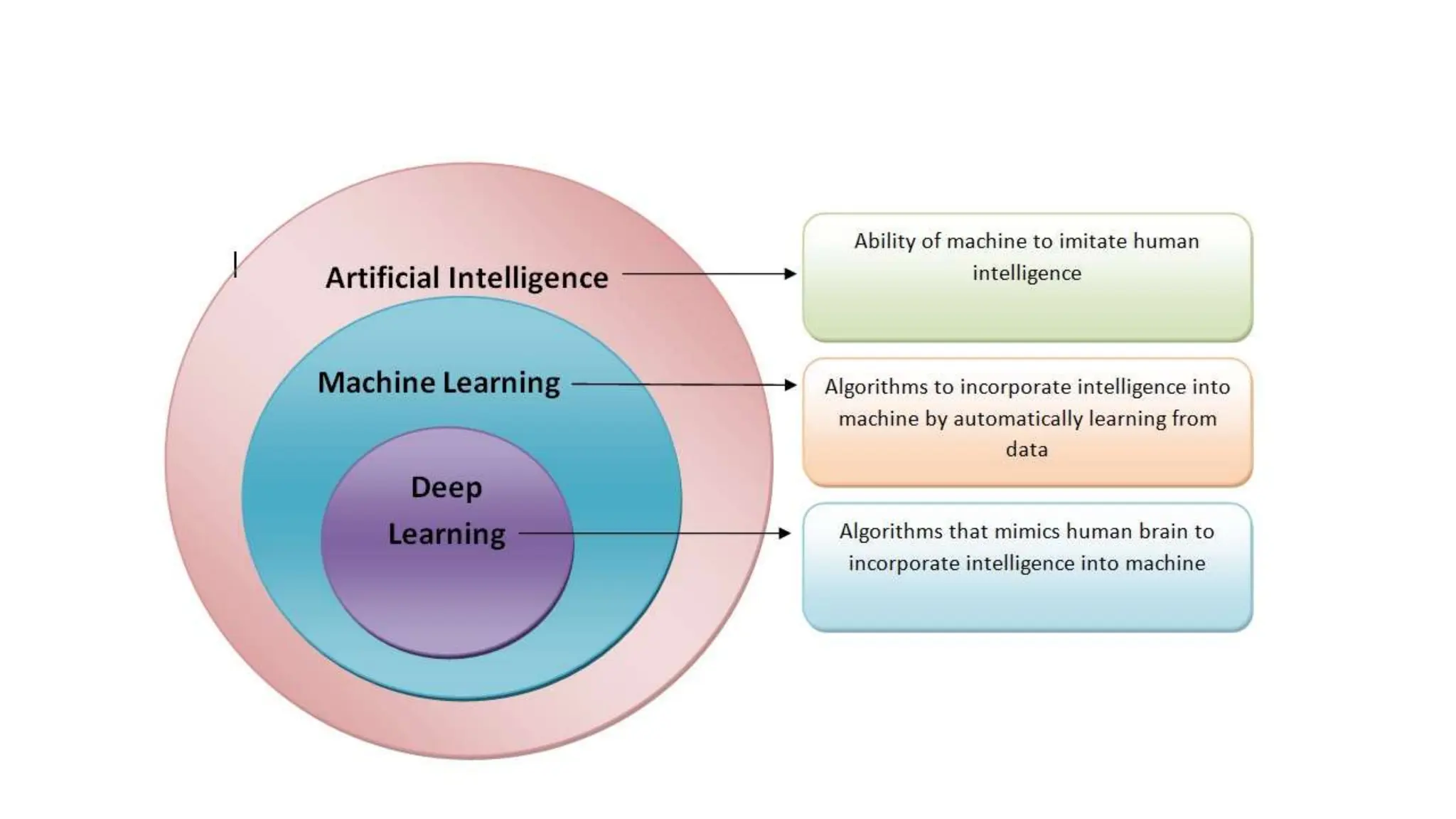

This document provides an overview of machine learning concepts including supervised learning, unsupervised learning, and reinforcement learning. It discusses common machine learning applications and challenges. Key topics covered include linear regression, classification, clustering, neural networks, bias-variance tradeoff, and model selection. Evaluation techniques like training error, validation error, and test error are also summarized.

![Tower property

Let's consider a sequence of random variables X, Y, and

Z. The tower property states:

E[E[X∣Y,Z]]=E[E[X∣Y]],

Tower property says that the expectation of the

conditional expectation of X given both Y and Z is equal

to the expectation of the conditional expectation of X

given only Y.](https://image.slidesharecdn.com/mlpptunit-1-240208163221-bd94da6b/75/Machine-learning-ppt-unit-one-syllabuspptx-55-2048.jpg)