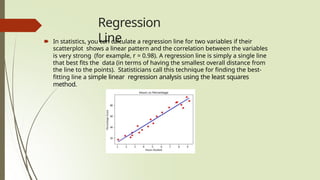

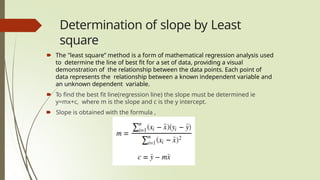

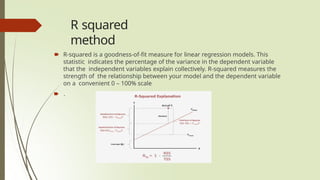

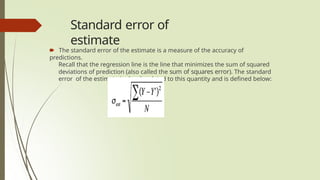

Linear regression is a supervised machine learning technique that predicts a continuous quantity by modeling the relationship between one or more independent variables and a dependent variable. It is commonly applied in prediction, forecasting, and financial analysis, and it typically uses methods such as least squares to find the best-fit line. While it is easy to implement and interpret, it has limitations: it is sensitive to outliers, it assumes a linear relationship between the variables, and it can overfit, particularly when there are many features relative to the number of observations.
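As an illustration of the least-squares idea mentioned above, here is a minimal sketch for the single-predictor case, written in plain Python with no libraries. It uses the closed-form ordinary least squares solution: the slope is the sum of cross-deviations of x and y divided by the sum of squared deviations of x, and the intercept makes the line pass through the point of means. The function names `fit_simple_linear_regression` and `predict` are hypothetical helpers introduced only for this example.

```python
def fit_simple_linear_regression(xs, ys):
    """Fit y = b0 + b1 * x by ordinary least squares (one predictor only)."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need at least two paired observations")
    n = len(xs)
    x_mean = sum(xs) / n
    y_mean = sum(ys) / n
    # Sum of cross-deviations (x, y) and sum of squared deviations of x
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
    sxx = sum((x - x_mean) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("x values must not all be equal")
    slope = sxy / sxx
    # The least-squares line always passes through (x_mean, y_mean)
    intercept = y_mean - slope * x_mean
    return intercept, slope


def predict(intercept, slope, x):
    """Evaluate the fitted line at x."""
    return intercept + slope * x


if __name__ == "__main__":
    # Points that lie exactly on y = 1 + 2x
    b0, b1 = fit_simple_linear_regression([1, 2, 3, 4, 5], [3, 5, 7, 9, 11])
    print(b0, b1)                # 1.0 2.0
    print(predict(b0, b1, 6))    # 13.0

    # Replacing the last point with an outlier pulls the slope sharply upward
    _, b1_out = fit_simple_linear_regression([1, 2, 3, 4, 5], [3, 5, 7, 9, 40])
    print(b1_out)
```

The second fit shows the outlier sensitivity noted above: changing a single observation from 11 to 40 moves the slope from 2 to nearly 8, because squared residuals give large errors a disproportionate influence on the fit.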