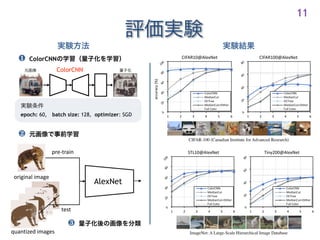

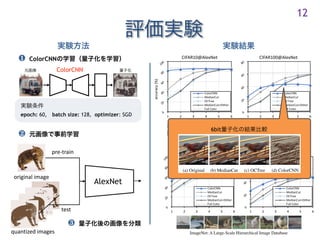

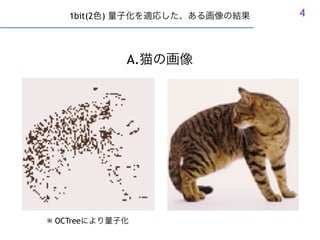

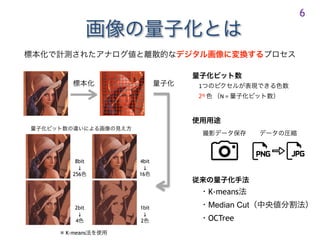

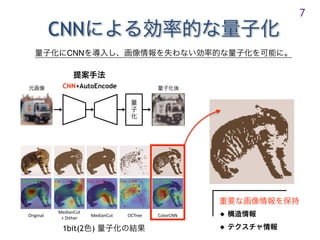

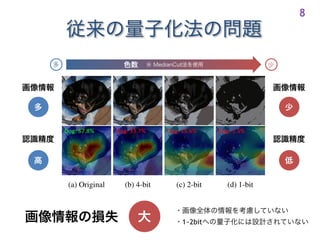

This document summarizes the research paper "Learning to Structure an Image with Few Colors," which introduces ColorCNN, a convolutional neural network that learns to represent an image with a small number of colors. ColorCNN maps each pixel of an input image to one entry of a small color palette (a color codebook). It then reconstructs an approximation of the original image using only the palette colors. The network is trained end-to-end to minimize reconstruction loss on datasets such as ImageNet and CIFAR-100. The authors demonstrate that ColorCNN can represent images using only 1-2 bits per pixel while retaining good visual quality.
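The "1-2 bits per pixel" figure follows from palette indexing. With a palette of C colors, each pixel stores only an index of ⌈log₂ C⌉ bits, so 2 colors need 1 bit and 4 colors need 2 bits. The palette itself is stored once per image. The sketch below works through that arithmetic. The function name `palette_storage_bits` and the assumption of an uncompressed palette with 8-bit RGB entries are illustrative choices, not details from the paper.

```python
import math


def palette_storage_bits(height: int, width: int, num_colors: int,
                         bits_per_channel: int = 8, channels: int = 3) -> dict:
    """Estimate the storage of an image quantized to `num_colors` colors.

    Assumption (illustrative only): an uncompressed index map plus a palette
    of `num_colors` entries, each with `channels` values of `bits_per_channel` bits.
    """
    if num_colors < 2:
        raise ValueError("need at least 2 colors")
    # Each pixel stores an index into the palette: ceil(log2 C) bits.
    index_bits = math.ceil(math.log2(num_colors))
    index_map_bits = height * width * index_bits
    # The palette stores each color once for the whole image.
    palette_bits = num_colors * channels * bits_per_channel
    return {
        "bits_per_pixel": index_bits,
        "index_map_bits": index_map_bits,
        "palette_bits": palette_bits,
        "total_bits": index_map_bits + palette_bits,
    }


# A 32x32 RGB image (CIFAR size) with a 4-color (2-bit) palette,
# compared with 24 bits per pixel for the unquantized image.
print(palette_storage_bits(32, 32, 4))
print(32 * 32 * 24)
```

For a 32x32 image the palette overhead (96 bits for 4 colors) is small next to the index map (2,048 bits), which is why the per-pixel index width dominates the cost.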

![ColorCNN

9

ColorCNN

activation

[ ⋅ ]i : i

∙ :

1,2bit

C-channel

one pixel

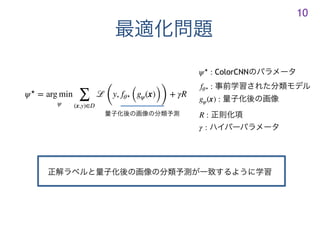

0.2

0.0

0.7

0.1

{c = 4

activation

❶

[t(x)]c =

∑(u,v)

[x]u,v ⋅ [m(x)]u,v,c

∑(u,v)

[m(x)]u,v,c

❷

˜x =

∑

c

[t(x)]c ⋅ [m(x)]c

❸

Umap](https://image.slidesharecdn.com/colornet-201107082740/85/Learning-to-Structure-an-Image-with-Few-Colors-9-320.jpg)
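The three steps of the ColorCNN slide (❶ a per-pixel activation over C channels gives the index map m(x); ❷ each palette color t(x)_c is the m-weighted mean of the pixel colors; ❸ the output is x̃ = Σ_c t(x)_c · m(x)_c) can be sketched in NumPy. This is a minimal illustration of those equations, not the authors' code. The network that produces the per-pixel scores is omitted (random `logits` stand in for it), the activation is assumed to be a softmax, and the function name `color_quantize`, the `hard` one-hot option, and the `1e-8` guard are hypothetical additions.

```python
import numpy as np


def softmax(logits: np.ndarray, axis: int = -1) -> np.ndarray:
    # Subtract the max for numerical stability before exponentiating.
    z = logits - logits.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


def color_quantize(x: np.ndarray, logits: np.ndarray, hard: bool = False):
    """Sketch of steps 1-3 for a single image.

    x:      (H, W, 3) input image.
    logits: (H, W, C) per-pixel scores (hypothetical stand-in for the network output).
    hard:   assumed variant that replaces soft weights with a one-hot argmax.
    Returns (m, t, x_tilde) with shapes (H, W, C), (C, 3), (H, W, 3).
    """
    # Step 1: activation over the C channels -> color index map m(x).
    m = softmax(logits, axis=-1)  # each pixel's weights sum to 1
    if hard:
        # Assumed variant: each pixel picks exactly one palette color.
        m = np.eye(logits.shape[-1])[logits.argmax(axis=-1)]
    # Step 2: palette t(x), the m-weighted mean of pixel colors per channel c.
    num = np.einsum("hwk,hwc->ck", x, m)  # sum_{u,v} x_{u,v} * m_{u,v,c}
    den = m.sum(axis=(0, 1))[:, None]     # sum_{u,v} m_{u,v,c}
    t = num / np.maximum(den, 1e-8)       # guard against unused colors
    # Step 3: x_tilde = sum_c t_c * m_c.
    x_tilde = np.einsum("hwc,ck->hwk", m, t)
    return m, t, x_tilde


rng = np.random.default_rng(0)
x = rng.random((8, 8, 3))
logits = rng.normal(size=(8, 8, 4))
m, t, x_tilde = color_quantize(x, logits, hard=True)
# With hard=True every output pixel is one palette color, so at most 4 distinct colors.
print(len(np.unique(x_tilde.reshape(-1, 3), axis=0)))
```

The soft version keeps every step differentiable, which is what allows end-to-end training. The one-hot variant shows what a strict C-color image looks like, at the cost of a non-differentiable argmax.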