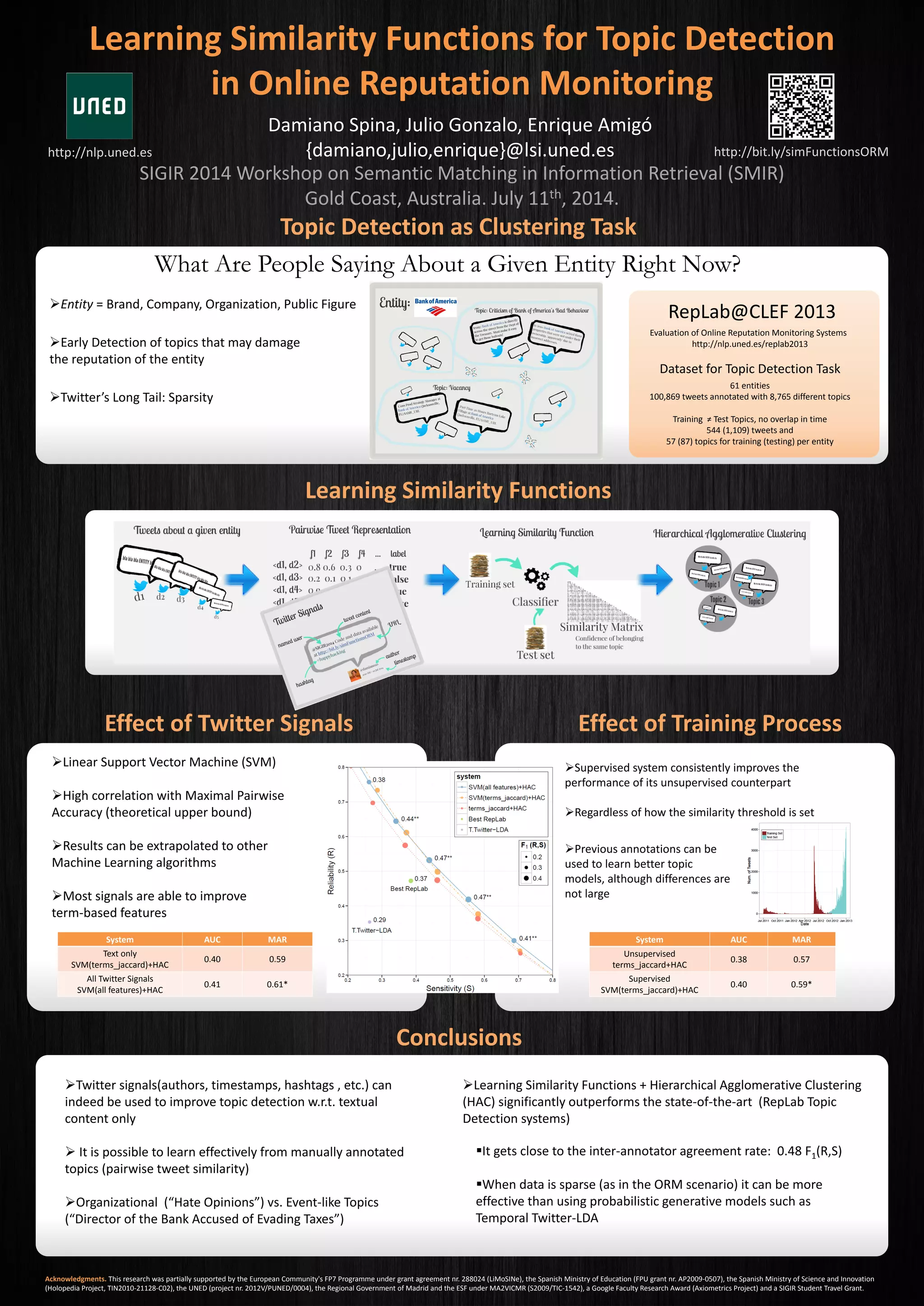

The document summarizes research on using supervised machine learning algorithms and Twitter signals to improve topic detection performance for online reputation monitoring. Key points:

- Linear Support Vector Machines showed high correlation with theoretical upper bounds and results can apply to other machine learning algorithms.

- Most Twitter signals, like authors, timestamps, hashtags, were able to improve topic detection based on text alone.

- A supervised system that learns similarity functions from previous annotations consistently outperforms unsupervised counterparts and is effective even with sparse data, like in online reputation monitoring.