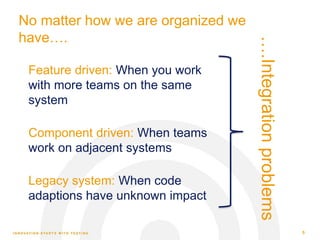

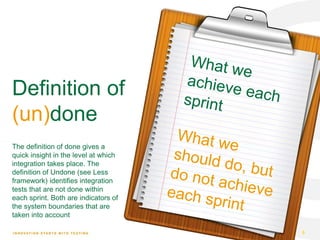

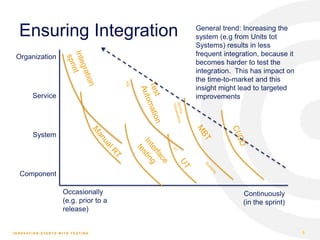

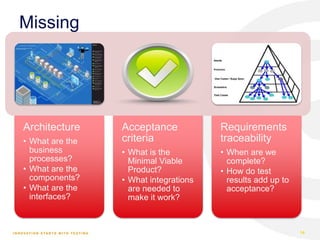

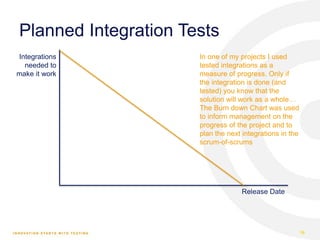

The talk discusses the importance of integration testing in large-scale agile projects, with an emphasis on the relationship between time-to-market and the level of integration. It highlights the need for continuous integration, clear acceptance criteria, and frequent product releases, and addresses the challenges posed by organizational structure and system complexity. It concludes that effective communication with management helps to manage expectations and to identify where the integration process can be improved.

![Integration testing at large scaled agile projects. The relation between time-to-market and the level of integration. Derk-Jan de Grood, [SC]2, 25 May 2016](https://image.slidesharecdn.com/sc2integrationtestingv02-160527080605/85/Integration-testing-in-Scaled-agile-projects-1-320.jpg)