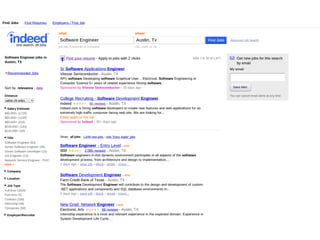

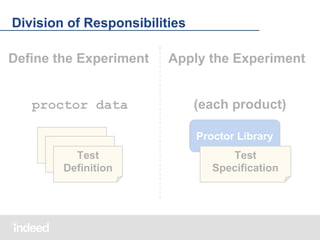

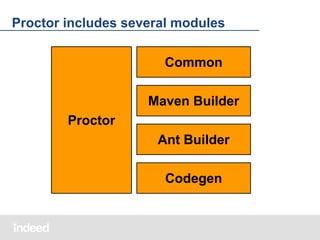

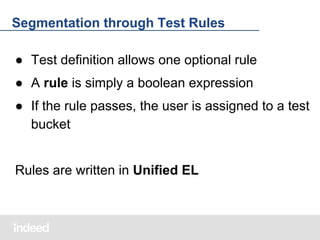

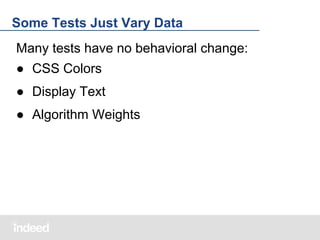

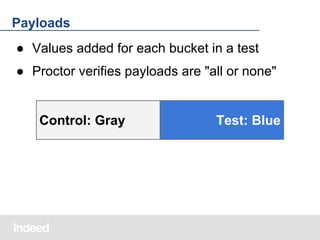

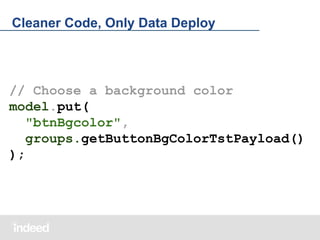

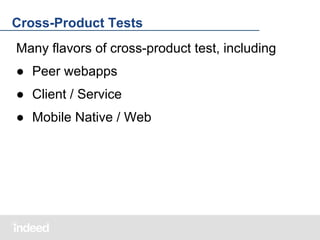

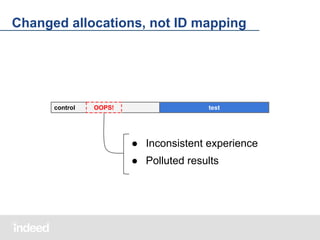

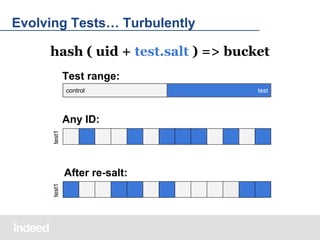

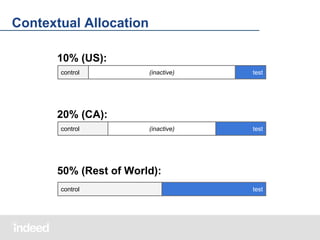

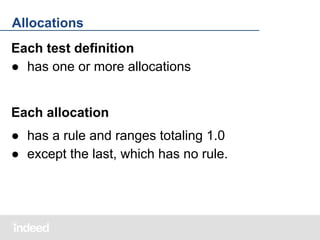

This presentation covers Proctor, Indeed's open-source Java framework for managing A/B tests in web applications. It walks through the framework's architecture: designing experiments, assigning users to groups, implementing test behaviors, and logging results, with an emphasis on unbiased, independent sampling. It also covers advanced features such as rule-based test segmentation, bucket payloads, and coordinating tests across multiple products and environments.

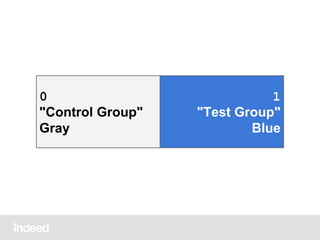

![Buckets in the Test Definition: "buckets": [{"id": 0, "name": "gray", "description": "Control group"}, {"id": 1, "name": "blue", "description": "Test group"}]](https://image.slidesharecdn.com/indeedeng2013-10-02-proctor-131010161930-phpapp01/85/IndeedEng-Managing-Experiments-and-Behavior-Dynamically-with-Proctor-86-320.jpg)
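For illustration, the bucket list above can be mirrored in plain Java. The nested `Bucket` record and the `byName` helper are hypothetical names for this sketch, not Proctor's API; the sketch only shows that bucket names and ids form a one-to-one lookup.

```java
import java.util.List;
import java.util.Map;
import java.util.function.Function;
import java.util.stream.Collectors;

final class BucketsDemo {
    // Hypothetical in-memory model of one entry in the "buckets" array.
    record Bucket(int id, String name, String description) {}

    static final List<Bucket> BUCKETS = List.of(
            new Bucket(0, "gray", "Control group"),
            new Bucket(1, "blue", "Test group"));

    // Index buckets by name; Collectors.toMap throws IllegalStateException on a duplicate name.
    static Map<String, Bucket> byName(List<Bucket> buckets) {
        return buckets.stream().collect(Collectors.toMap(Bucket::name, Function.identity()));
    }

    public static void main(String[] args) {
        Map<String, Bucket> index = byName(BUCKETS);
        System.out.println(index.get("blue").id()); // prints 1
    }
}
```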

![Mapping Buckets to Ranges: "ranges": [{"bucketValue": 0, "length": 0.5}, {"bucketValue": 1, "length": 0.5}]](https://image.slidesharecdn.com/indeedeng2013-10-02-proctor-131010161930-phpapp01/85/IndeedEng-Managing-Experiments-and-Behavior-Dynamically-with-Proctor-87-320.jpg)
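One natural reading of these ranges is that they are laid end to end over the interval [0, 1), so bucket 0 covers [0, 0.5) and bucket 1 covers [0.5, 1). A minimal sketch of that lookup, assuming each user has already been mapped to a number `x` in [0, 1); `RangesDemo.pick` is a hypothetical helper, not Proctor's API.

```java
import java.util.List;

final class RangesDemo {
    // Hypothetical model of one entry in the "ranges" array.
    record Range(int bucketValue, double length) {}

    // Walk the ranges in order, accumulating lengths, and return the bucket whose
    // slice of [0, 1) contains x. Assumes the lengths sum to 1.0.
    static int pick(List<Range> ranges, double x) {
        double upper = 0.0;
        for (Range r : ranges) {
            upper += r.length();
            if (x < upper) {
                return r.bucketValue();
            }
        }
        // Guard against floating-point rounding leaving x just above the final sum.
        return ranges.get(ranges.size() - 1).bucketValue();
    }

    public static void main(String[] args) {
        List<Range> ranges = List.of(new Range(0, 0.5), new Range(1, 0.5));
        System.out.println(pick(ranges, 0.25)); // prints 0
        System.out.println(pick(ranges, 0.75)); // prints 1
    }
}
```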

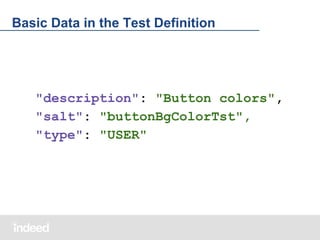

![Complete Test Definition: {"description": "Button colors", "type": "USER", "salt": "buttonBgColorTst", "buckets": […], "allocations": [{"ranges": […]}]}](https://image.slidesharecdn.com/indeedeng2013-10-02-proctor-131010161930-phpapp01/85/IndeedEng-Managing-Experiments-and-Behavior-Dynamically-with-Proctor-88-320.jpg)
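With `"type": "USER"`, assignment is keyed on a user identifier, and the per-test `salt` is mixed into the hash, presumably so that different tests split the same users independently. The sketch below assumes SHA-256 over `salt + "." + userId` and takes the top 53 bits of the digest as a fraction in [0, 1); Proctor's actual hash function and input format may differ, and the class and method names are hypothetical.

```java
import java.nio.charset.StandardCharsets;
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;

final class SaltedHashDemo {
    // Map (salt, userId) deterministically to a double in [0, 1).
    // SHA-256 and the "." separator are assumptions; Proctor's own hashing may differ.
    static double unitValue(String salt, String userId) {
        try {
            MessageDigest md = MessageDigest.getInstance("SHA-256");
            byte[] digest = md.digest((salt + "." + userId).getBytes(StandardCharsets.UTF_8));
            long bits = 0L;
            for (int i = 0; i < 8; i++) {
                bits = (bits << 8) | (digest[i] & 0xFFL); // first 8 digest bytes, big-endian
            }
            // Keep the top 53 bits so the result is an exactly representable double in [0, 1).
            return (bits >>> 11) * 0x1.0p-53;
        } catch (NoSuchAlgorithmException e) {
            throw new IllegalStateException("SHA-256 must be available on every Java platform", e);
        }
    }

    // Two 50% ranges: bucket 0 ("gray") below 0.5, bucket 1 ("blue") otherwise.
    static int bucketFor(String salt, String userId) {
        return unitValue(salt, userId) < 0.5 ? 0 : 1;
    }

    public static void main(String[] args) {
        String salt = "buttonBgColorTst";
        for (String user : new String[] {"user-1", "user-2", "user-3"}) {
            System.out.println(user + " -> bucket " + bucketFor(salt, user));
        }
    }
}
```

Because the value depends only on the salt and the user id, a returning user lands in the same bucket on every request without any stored assignment.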

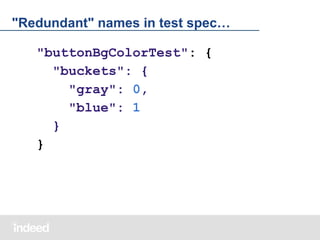

![Product Test Specification lists active tests. References into the global pool: "tests": [{"buttonBgcolorTest": {"buckets": {"gray": 0, "blue": 1}}}]](https://image.slidesharecdn.com/indeedeng2013-10-02-proctor-131010161930-phpapp01/85/IndeedEng-Managing-Experiments-and-Behavior-Dynamically-with-Proctor-91-320.jpg)
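The product's specification refers to a test in the global pool by name and restates the bucket names and values it expects. A hypothetical consistency check between that map and the definition's buckets might look like this; `SpecCheckDemo.mismatches` is illustrative only and is not Proctor's validation code.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;

final class SpecCheckDemo {
    // Compare the bucket name -> value map declared in a product's test specification
    // against the buckets in the shared test definition; return human-readable problems.
    static List<String> mismatches(Map<String, Integer> specBuckets, Map<String, Integer> definitionBuckets) {
        List<String> problems = new ArrayList<>();
        for (Map.Entry<String, Integer> e : specBuckets.entrySet()) {
            Integer actual = definitionBuckets.get(e.getKey());
            if (actual == null) {
                problems.add("bucket '" + e.getKey() + "' missing from definition");
            } else if (!actual.equals(e.getValue())) {
                problems.add("bucket '" + e.getKey() + "' is " + actual
                        + " in definition but " + e.getValue() + " in spec");
            }
        }
        return problems;
    }

    public static void main(String[] args) {
        Map<String, Integer> spec = Map.of("gray", 0, "blue", 1);
        Map<String, Integer> definition = Map.of("gray", 0, "blue", 1);
        System.out.println(mismatches(spec, definition)); // prints []
    }
}
```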

![Simple Things are Simple. No deployment needed; changes live within minutes. {"description": "Button colors", "rule": "country == 'CA'", "buckets": […]}](https://image.slidesharecdn.com/indeedeng2013-10-02-proctor-131010161930-phpapp01/85/IndeedEng-Managing-Experiments-and-Behavior-Dynamically-with-Proctor-114-320.jpg)
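A rule acts as an eligibility filter: only requests whose context satisfies `country == 'CA'` take part in the test. Proctor evaluates the rule string with Commons EL; this sketch substitutes a plain `java.util.function.Predicate` and assumes, for illustration only, that ineligible users receive an inactive value of -1. `RuleDemo` and its members are hypothetical.

```java
import java.util.Map;
import java.util.function.Predicate;

final class RuleDemo {
    // Stand-in for the compiled EL rule "country == 'CA'"; this sketch does not use Commons EL.
    static final Predicate<Map<String, Object>> CANADA_ONLY = ctx -> "CA".equals(ctx.get("country"));

    // Hypothetical convention for this sketch: -1 means "not in the test".
    static final int INACTIVE = -1;

    // Return the bucket only when the context satisfies the rule.
    static int assign(Map<String, Object> context, Predicate<Map<String, Object>> rule, int bucketIfEligible) {
        return rule.test(context) ? bucketIfEligible : INACTIVE;
    }

    public static void main(String[] args) {
        System.out.println(assign(Map.of("country", "CA"), CANADA_ONLY, 1)); // prints 1
        System.out.println(assign(Map.of("country", "US"), CANADA_ONLY, 1)); // prints -1
    }
}
```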

![Commons EL is Easily Extended. JSTL standard functions: "rule": "fn:endsWith(account.email, '@indeed.com')". Custom code: "rule": "proctor:contains(['US', 'CA'], country)"](https://image.slidesharecdn.com/indeedeng2013-10-02-proctor-131010161930-phpapp01/85/IndeedEng-Managing-Experiments-and-Behavior-Dynamically-with-Proctor-116-320.jpg)
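In the EL model, a prefixed function such as `proctor:contains` is backed by a public static Java method that a function mapper binds to the name. The class below is a hypothetical backing implementation, not Proctor's own, and registering it with an EL `FunctionMapper` is omitted. It also shows the plain-Java equivalent of the JSTL `fn:endsWith` rule.

```java
import java.util.Collection;
import java.util.List;

final class CustomElFunctions {
    // Hypothetical backing method for an EL function like proctor:contains(list, value).
    // EL functions must be public static so a function mapper can resolve them.
    // A null value never matches (List.of collections throw on contains(null)).
    public static boolean contains(Collection<?> values, Object value) {
        return values != null && value != null && values.contains(value);
    }

    // Plain-Java equivalent of the rule fn:endsWith(account.email, '@indeed.com').
    static boolean isIndeedEmail(String email) {
        return email != null && email.endsWith("@indeed.com");
    }

    public static void main(String[] args) {
        System.out.println(contains(List.of("US", "CA"), "CA"));  // prints true
        System.out.println(isIndeedEmail("someone@example.com")); // prints false
    }
}
```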

![Context Defined in Test Specification. The test spec declares the available context variables; this is a contract to provide values at runtime. {"tests": […], "providedContext": {"country": "String", "language": "String", "userAgent": "com.indeed.web.UserAgent"}}](https://image.slidesharecdn.com/indeedeng2013-10-02-proctor-131010161930-phpapp01/85/IndeedEng-Managing-Experiments-and-Behavior-Dynamically-with-Proctor-119-320.jpg)
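Because `providedContext` is a contract, an application could check at runtime that it actually supplies each declared variable with the declared type. This is a sketch of such a check with hypothetical names; declared types are given as `Class` objects, and the Indeed-internal `com.indeed.web.UserAgent` type is left out so the example compiles on its own.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;

final class ContextContractDemo {
    // Check that a runtime context honors the declared "providedContext" contract:
    // every declared variable is present and is an instance of its declared type.
    static List<String> violations(Map<String, Class<?>> declared, Map<String, Object> provided) {
        List<String> problems = new ArrayList<>();
        declared.forEach((name, type) -> {
            Object value = provided.get(name);
            if (value == null) {
                problems.add("missing context variable '" + name + "'");
            } else if (!type.isInstance(value)) {
                problems.add("'" + name + "' should be " + type.getSimpleName()
                        + " but was " + value.getClass().getSimpleName());
            }
        });
        return problems;
    }

    public static void main(String[] args) {
        // com.indeed.web.UserAgent is omitted: it is not available outside Indeed's codebase.
        Map<String, Class<?>> declared = Map.of("country", String.class, "language", String.class);
        System.out.println(violations(declared, Map.of("country", "CA", "language", "fr"))); // prints []
        System.out.println(violations(declared, Map.of("country", "CA")).size());             // prints 1
    }
}
```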

![Part of Test Definition. No deployment needed; changes live within minutes. "buckets": [{"id": 0, "name": "gray", "description": "Control group", "payload": {"stringValue": "#ccc"}}, …]](https://image.slidesharecdn.com/indeedeng2013-10-02-proctor-131010161930-phpapp01/85/IndeedEng-Managing-Experiments-and-Behavior-Dynamically-with-Proctor-126-320.jpg)
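Payloads let the test definition, rather than application code, carry the value each bucket should use, which is why changing it needs no deployment. A minimal sketch of reading a `stringValue` payload for the assigned bucket; the `#00f` value for the blue bucket and the -1 inactive value are assumptions, since the slide shows only the gray payload, and `PayloadDemo` is hypothetical.

```java
import java.util.Map;

final class PayloadDemo {
    // Hypothetical model: bucket value -> stringValue payload.
    // Only the gray payload "#ccc" appears on the slide; "#00f" for blue is an assumption.
    static final Map<Integer, String> BG_COLOR_PAYLOADS = Map.of(0, "#ccc", 1, "#00f");

    // Return the payload for the assigned bucket, or a caller-supplied default when the
    // user is in no bucket (for example, an assumed inactive value of -1).
    static String backgroundColor(int bucketValue, String defaultColor) {
        return BG_COLOR_PAYLOADS.getOrDefault(bucketValue, defaultColor);
    }

    public static void main(String[] args) {
        System.out.println(backgroundColor(1, "#ccc"));  // prints #00f
        System.out.println(backgroundColor(-1, "#ccc")); // prints #ccc
    }
}
```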

![Declared in Project Test Specification. Type definition only; must match the test definition. "buttonBgColorTst": {"buckets": […], "payload": {"type": "stringValue"}}](https://image.slidesharecdn.com/indeedeng2013-10-02-proctor-131010161930-phpapp01/85/IndeedEng-Managing-Experiments-and-Behavior-Dynamically-with-Proctor-127-320.jpg)
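The specification declares only the payload type, and each bucket's payload in the definition has to use that same field. A hypothetical check of that rule, modeling each payload as a one-entry map such as `{"stringValue": "#ccc"}`; `PayloadTypeCheckDemo` is illustrative, not Proctor's loader logic.

```java
import java.util.List;
import java.util.Map;

final class PayloadTypeCheckDemo {
    // Each bucket payload from the definition is modeled as a one-entry map such as
    // {"stringValue": "#ccc"}; the spec supplies only the expected key ("type").
    static boolean payloadsMatch(String declaredType, List<Map<String, Object>> bucketPayloads) {
        for (Map<String, Object> payload : bucketPayloads) {
            if (payload.size() != 1 || !payload.containsKey(declaredType)) {
                return false;
            }
        }
        return true;
    }

    public static void main(String[] args) {
        List<Map<String, Object>> payloads = List.of(Map.of("stringValue", "#ccc"), Map.of("stringValue", "#00f"));
        System.out.println(payloadsMatch("stringValue", payloads)); // prints true
        System.out.println(payloadsMatch("longValue", payloads));   // prints false (a different declared type)
    }
}
```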

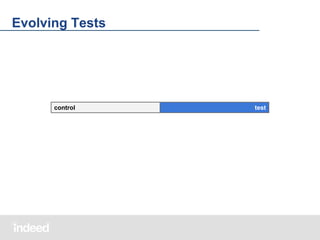

![Evolving Tests Smoothly: control, test, (inactive); ranges [10%, 10%] and [10%, 10%, 80%]](https://image.slidesharecdn.com/indeedeng2013-10-02-proctor-131010161930-phpapp01/85/IndeedEng-Managing-Experiments-and-Behavior-Dynamically-with-Proctor-137-320.jpg)
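The percentages describe range lengths: 10% control, 10% test, and the remaining 80% held in an explicit inactive range so the lengths add up to 100%. A minimal sketch of that padding step; the `withInactive` helper and the use of -1 as the inactive bucket value are assumptions for this illustration.

```java
import java.util.ArrayList;
import java.util.List;

final class InactivePaddingDemo {
    record Range(int bucketValue, double length) {}

    // Assumed marker for "user is not in the test" in this sketch.
    static final int INACTIVE = -1;

    // Append an inactive range covering whatever share of [0, 1) the active ranges leave unused.
    static List<Range> withInactive(List<Range> active) {
        double used = active.stream().mapToDouble(Range::length).sum();
        if (used > 1.0 + 1e-9) {
            throw new IllegalArgumentException("ranges exceed 100%: " + used);
        }
        List<Range> padded = new ArrayList<>(active);
        if (used < 1.0 - 1e-9) {
            padded.add(new Range(INACTIVE, 1.0 - used));
        }
        return padded;
    }

    public static void main(String[] args) {
        List<Range> padded = withInactive(List.of(new Range(0, 0.1), new Range(1, 0.1)));
        System.out.println(padded.size());                 // prints 3
        System.out.println(padded.get(2).bucketValue());   // prints -1
    }
}
```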

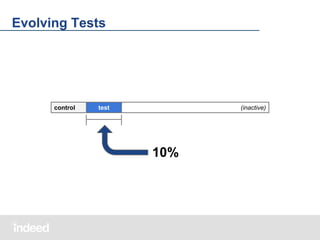

![Evolving Tests Smoothly: control, test, (inactive) [10%, 10%, 80%]; control, test, control, test [10%, 10%, 40%, 40%]](https://image.slidesharecdn.com/indeedeng2013-10-02-proctor-131010161930-phpapp01/85/IndeedEng-Managing-Experiments-and-Behavior-Dynamically-with-Proctor-138-320.jpg)

![Evolving Tests Smoothly: control, (inactive), test [10%, 80%, 10%]; control, test [50%, 50%]](https://image.slidesharecdn.com/indeedeng2013-10-02-proctor-131010161930-phpapp01/85/IndeedEng-Managing-Experiments-and-Behavior-Dynamically-with-Proctor-139-320.jpg)
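The layouts on these slides grow a 10%/10% test to 50%/50% without moving anyone already in control or test: the new traffic comes only from the inactive range. The sketch below estimates, on an evenly spaced grid over [0, 1), what share of previously active users would switch buckets, and compares both layouts from the slides with a naive rewrite to `[50%, 50%]`. The class, its helpers, and the -1 inactive marker are hypothetical.

```java
import java.util.List;

final class ReallocationCheck {
    record Range(int bucketValue, double length) {}

    static final int INACTIVE = -1; // assumed marker for the inactive range

    // Bucket whose slice of [0, 1) contains x, walking ranges in order.
    static int pick(List<Range> ranges, double x) {
        double upper = 0.0;
        for (Range r : ranges) {
            upper += r.length();
            if (x < upper) {
                return r.bucketValue();
            }
        }
        return ranges.get(ranges.size() - 1).bucketValue();
    }

    // Share of previously active users (control or test), sampled on an evenly
    // spaced grid, whose bucket changes under the new ranges.
    static double movedShare(List<Range> before, List<Range> after, int samples) {
        int active = 0;
        int moved = 0;
        for (int i = 0; i < samples; i++) {
            double x = (i + 0.5) / samples;
            int old = pick(before, x);
            if (old == INACTIVE) {
                continue;
            }
            active++;
            if (pick(after, x) != old) {
                moved++;
            }
        }
        return active == 0 ? 0.0 : (double) moved / active;
    }

    public static void main(String[] args) {
        List<Range> before = List.of(new Range(0, 0.1), new Range(1, 0.1), new Range(INACTIVE, 0.8));
        List<Range> grow = List.of(new Range(0, 0.1), new Range(1, 0.1), new Range(0, 0.4), new Range(1, 0.4));
        List<Range> naive = List.of(new Range(0, 0.5), new Range(1, 0.5));
        System.out.println(movedShare(before, grow, 10_000));  // prints 0.0
        System.out.println(movedShare(before, naive, 10_000)); // prints 0.5

        List<Range> before2 = List.of(new Range(0, 0.1), new Range(INACTIVE, 0.8), new Range(1, 0.1));
        System.out.println(movedShare(before2, naive, 10_000)); // prints 0.0
    }
}
```

The naive rewrite reports 0.5 because every former test user falls inside the new control range, which would mix previously exposed users into the control group. Placing the inactive range between control and test, as in the last slide, lets a plain `[50%, 50%]` layout grow both groups in place.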

![Allocations in the Test Definition: {"description": "Button colors", "type": "USER", "salt": "buttonBgColorTst", "buckets": [ … ], "allocations": [{"rule": "country == 'US'", "ranges": [ … ]}, {"ranges": [ … ]}]}](https://image.slidesharecdn.com/indeedeng2013-10-02-proctor-131010161930-phpapp01/85/IndeedEng-Managing-Experiments-and-Behavior-Dynamically-with-Proctor-145-320.jpg)
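With several allocations, the rule on each one selects which set of ranges applies: US traffic gets the first allocation, and everyone else falls through to the allocation without a rule. A sketch assuming allocations are checked in order and the first matching rule wins; rules are modeled as `Predicate`s instead of EL strings, the class and record names are hypothetical, and the range values are invented because the slide elides them.

```java
import java.util.List;
import java.util.Map;
import java.util.Optional;
import java.util.function.Predicate;

final class AllocationDemo {
    record Range(int bucketValue, double length) {}

    // One entry of "allocations"; the Predicate stands in for the EL "rule" string.
    record Allocation(String label, Predicate<Map<String, Object>> rule, List<Range> ranges) {}

    // Matches every context, standing in for an allocation with no "rule".
    static final Predicate<Map<String, Object>> NO_RULE = ctx -> true;

    // Range values are hypothetical (the slide elides them); -1 is an assumed inactive marker.
    static final List<Allocation> ALLOCATIONS = List.of(
            new Allocation("US", ctx -> "US".equals(ctx.get("country")),
                    List.of(new Range(0, 0.5), new Range(1, 0.5))),
            new Allocation("everyone else", NO_RULE,
                    List.of(new Range(0, 0.1), new Range(1, 0.1), new Range(-1, 0.8))));

    // Assumed semantics: allocations are checked in order and the first matching rule wins.
    static Optional<Allocation> select(List<Allocation> allocations, Map<String, Object> context) {
        return allocations.stream().filter(a -> a.rule().test(context)).findFirst();
    }

    public static void main(String[] args) {
        System.out.println(select(ALLOCATIONS, Map.of("country", "US")).map(Allocation::label).orElse("none")); // prints US
        System.out.println(select(ALLOCATIONS, Map.of("country", "CA")).map(Allocation::label).orElse("none")); // prints everyone else
    }
}
```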