This white paper discusses methods for handling data corruption in Elasticsearch, detailing how to recover data from corrupted indices and re-index it into a new index. It provides insights into Elasticsearch's architecture, data replication for high availability, and the steps required for data recovery, including identifying corrupted shards and reading data files. The paper also highlights the significance of maintaining stable index states and presents code samples for the data recovery and re-indexing processes.

![© 2014 Impetus Technologies, Inc.

All rights reserved. Product and

company names mentioned herein

may be trademarks of their

respective companies.

August 2014

Impetus is a Software Solutions and Services Company with deep technical

maturity that brings you thought leadership, proactive innovation, and a

track record of success. Our Services and Solutions portfolio includes

Carrier grade large systems, Big Data, Cloud, Enterprise Mobility, and Test

and Performance Engineering.

Visit www.impetus.com or write to us at inquiry@impetus.com

About Impetus

Conclusion

As the data volume is increasing rapidly, it is a challenge for organizations to

replicate the data due to storage cost. Elasticsearch addresses this challenge

effectively and helps organizations recover data from corrupted Elasticsearch

index.

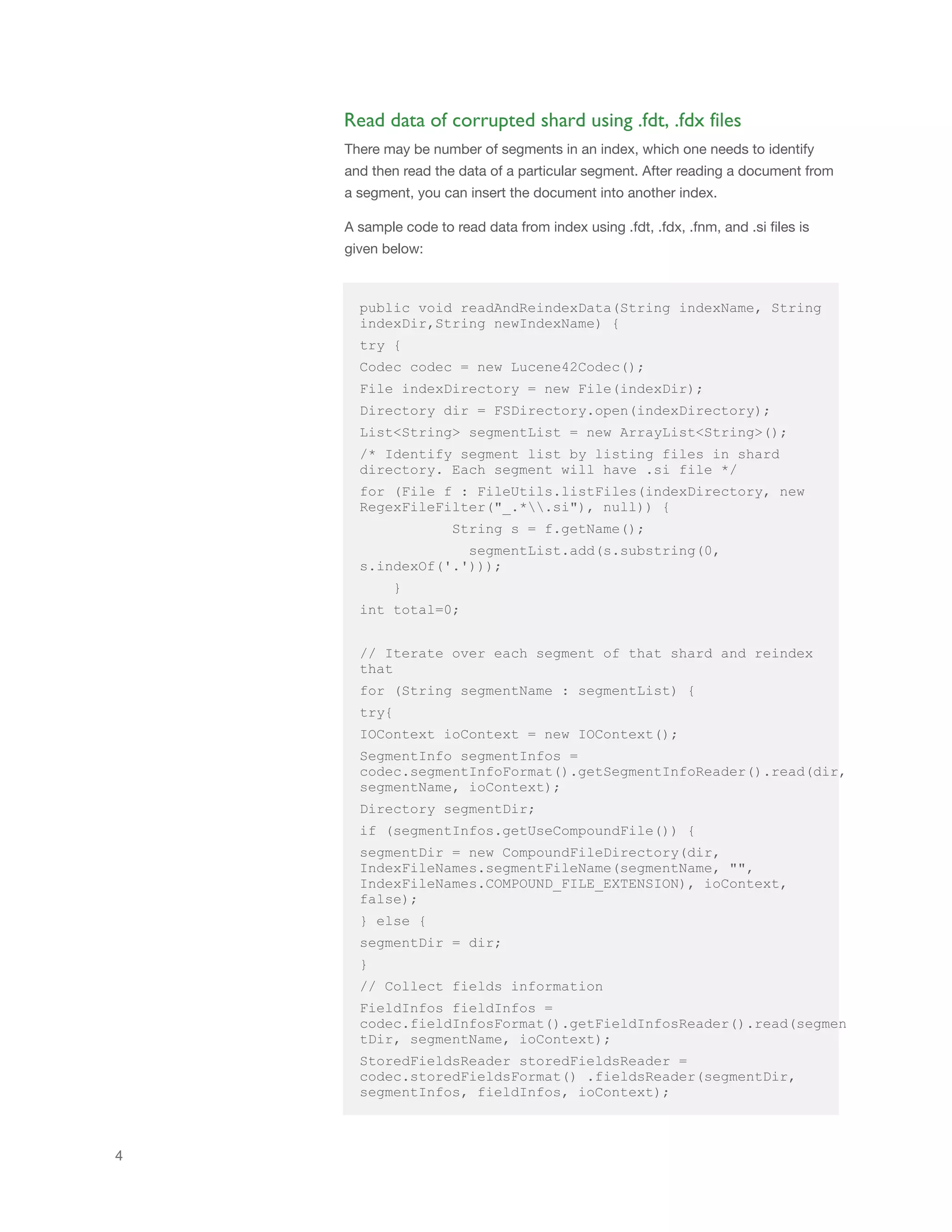

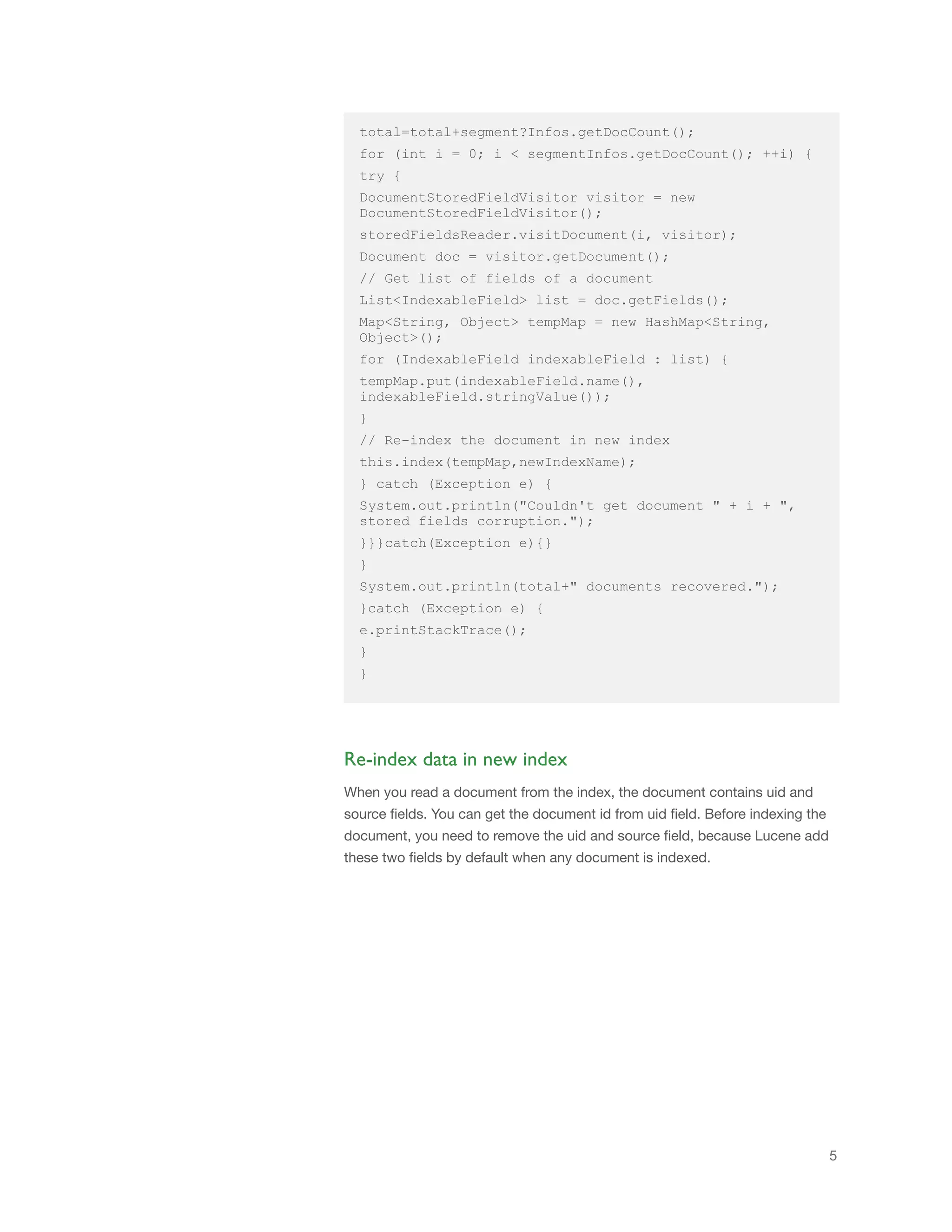

// Re-index the document in new index

private void index(Map<String, Object> record,String

newIndexName){

String docId=((String) record.get("_uid")).split("#")[1];

String mappingType=((String)

record.get("_uid")).split("#")[0];

record.remove("_uid");

record.remove("_source");

IndexRequest indexRequest = new IndexRequest(newIndexName,

mappingType, docId);

indexRequest.source(record);

BulkRequestBuilder bulkRequestBuilder =

client.prepareBulk();

bulkRequestBuilder.add(indexRequest);

bulkRequestBuilder.execute().actionGet();

}

Testing Environment:

Elasticsearch- 0.90.5

Java - 1.6.45

Operating System- RHEL

A sample code to re-index the documents using same document ids is given

below:](https://image.slidesharecdn.com/datarecoveryinelasticsearch-150106081202-conversion-gate01/75/Impetus-White-Paper-Handling-Data-Corruption-in-Elasticsearch-6-2048.jpg)