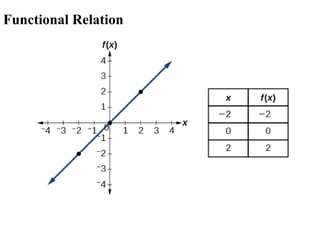

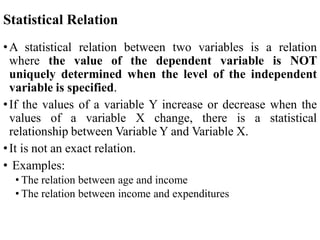

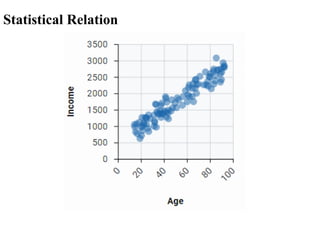

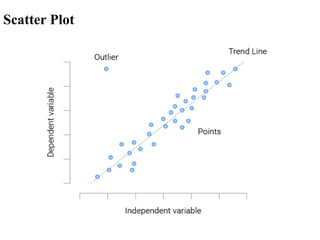

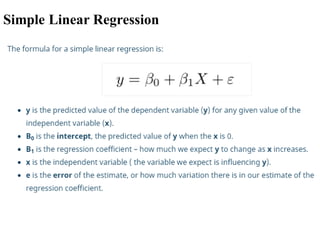

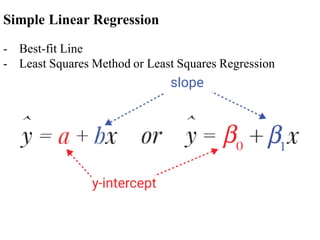

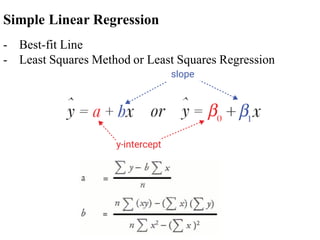

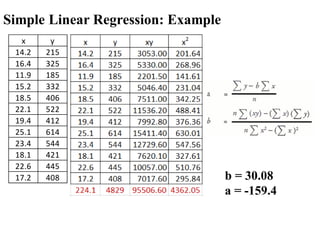

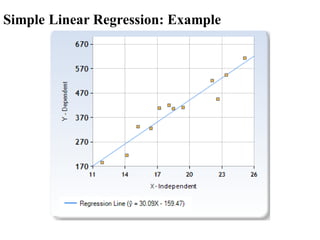

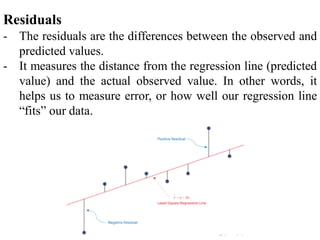

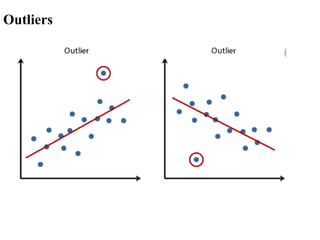

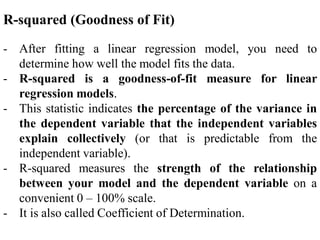

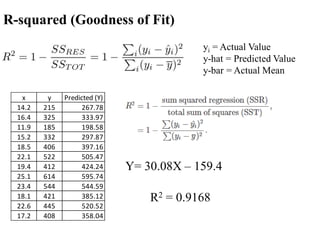

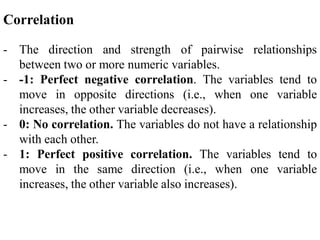

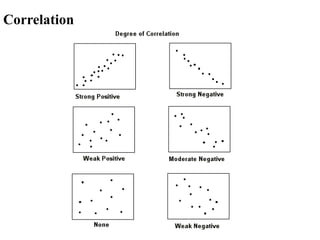

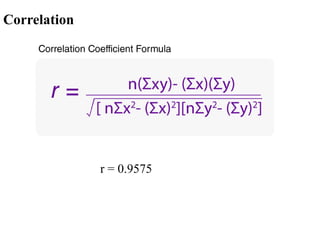

The document introduces data science through linear regression analysis and the relationships between variables. It distinguishes functional relations from statistical relations, explains the use of scatter plots, and defines the roles of regressors and regressands in regression models. It also covers how to evaluate model fit with R-squared and outlines the limitations of linear regression.
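The ideas summarized above can be made concrete with a small sketch. The following is a minimal illustration, not the method used in the slides: it assumes ordinary least squares with a single regressor, and the function names and data values are hypothetical, chosen only for demonstration. It fits a line to an exact (functional) relation, to a noisy (statistical) relation, and to a curved relation where a straight line fails.

```python
def fit_line(x, y):
    """Least-squares fit of y = intercept + slope * x for one regressor x."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("x and y must have the same length, at least 2")
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    # Sum of squared deviations of x, and of cross-deviations of x and y.
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    if sxx == 0:
        raise ValueError("x must not be constant")
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def r_squared(x, y, slope, intercept):
    """Share of the variation in the regressand y explained by the fitted line."""
    mean_y = sum(y) / len(y)
    ss_tot = sum((yi - mean_y) ** 2 for yi in y)
    if ss_tot == 0:
        raise ValueError("y must not be constant")
    ss_res = sum((yi - (intercept + slope * xi)) ** 2 for xi, yi in zip(x, y))
    return 1 - ss_res / ss_tot


# Hypothetical data, for illustration only.
x = [1, 2, 3, 4, 5]
y_functional = [2 * xi + 1 for xi in x]      # exact relation: every point on the line
y_statistical = [2.9, 5.2, 6.8, 9.1, 11.0]   # same trend, with scatter around the line
y_curved = [(xi - 3) ** 2 for xi in x]       # strong relation, but not a linear one

if __name__ == "__main__":
    for name, y in [("functional", y_functional),
                    ("statistical", y_statistical),
                    ("curved", y_curved)]:
        slope, intercept = fit_line(x, y)
        print(name, slope, intercept, r_squared(x, y, slope, intercept))
```

In this sketch the functional relation gives an R-squared of 1, the statistical relation gives a value slightly below 1, and the curved relation gives an R-squared of 0 even though y is fully determined by x. The last case illustrates one limitation of linear regression: a low R-squared rules out a good linear fit, not a relationship.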