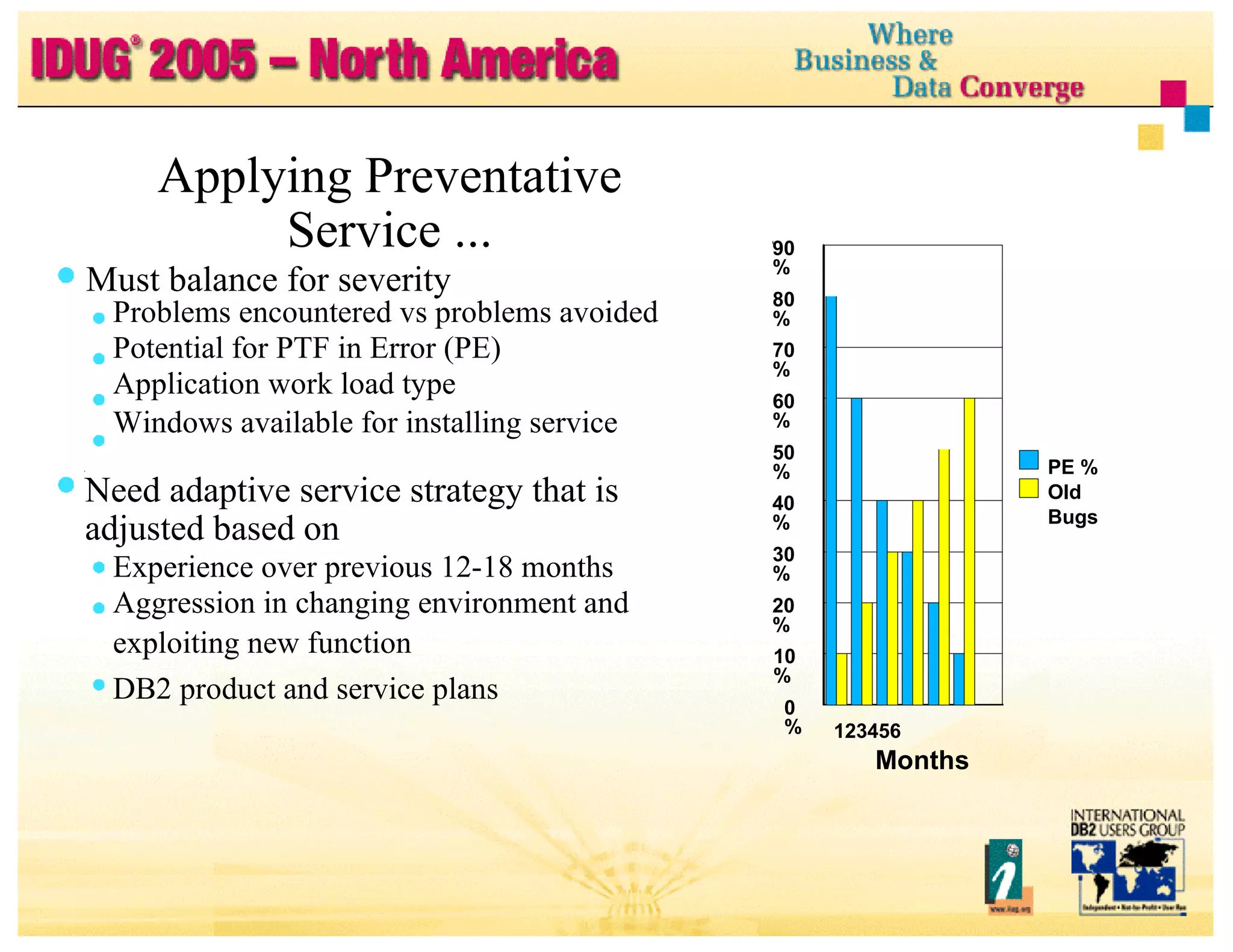

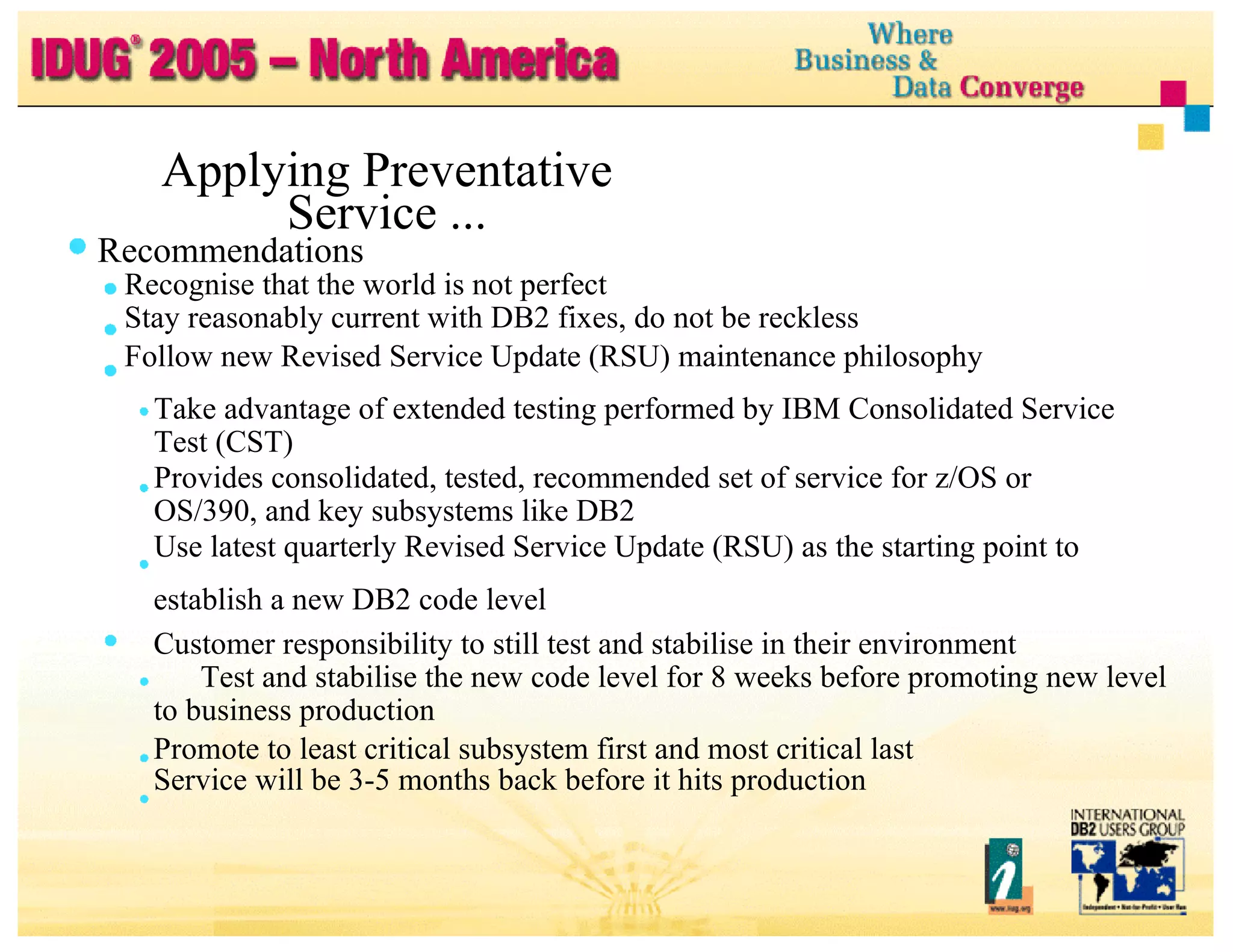

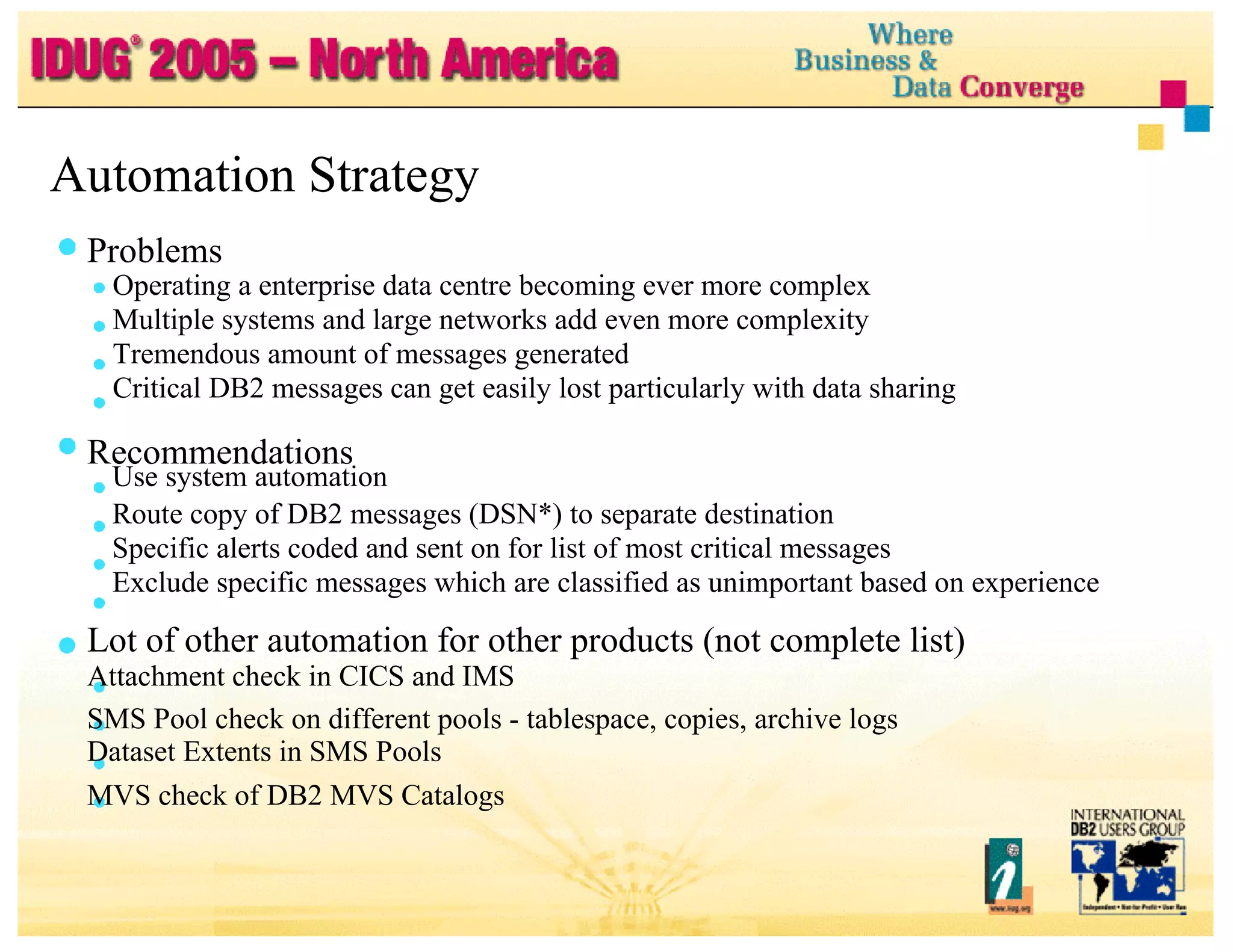

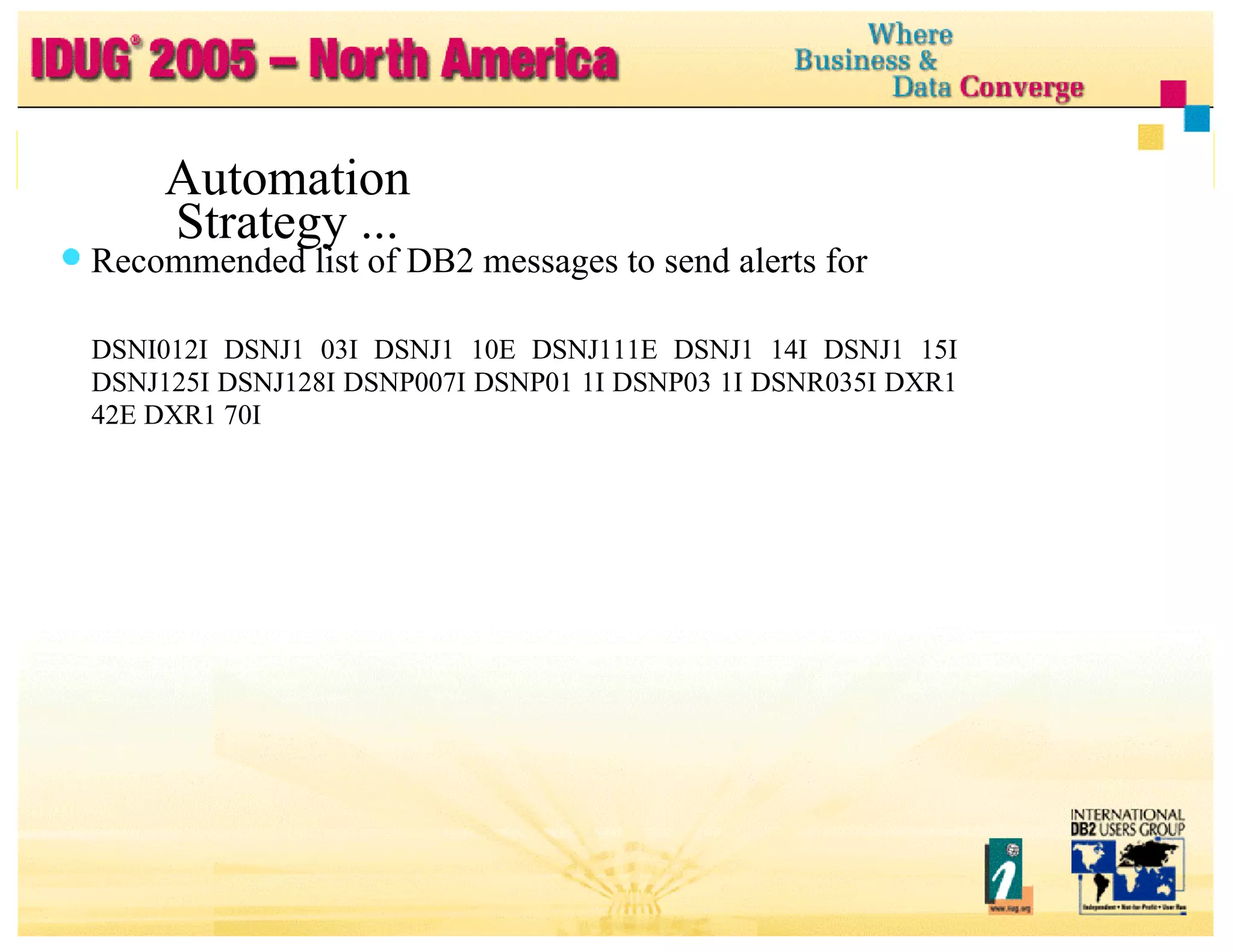

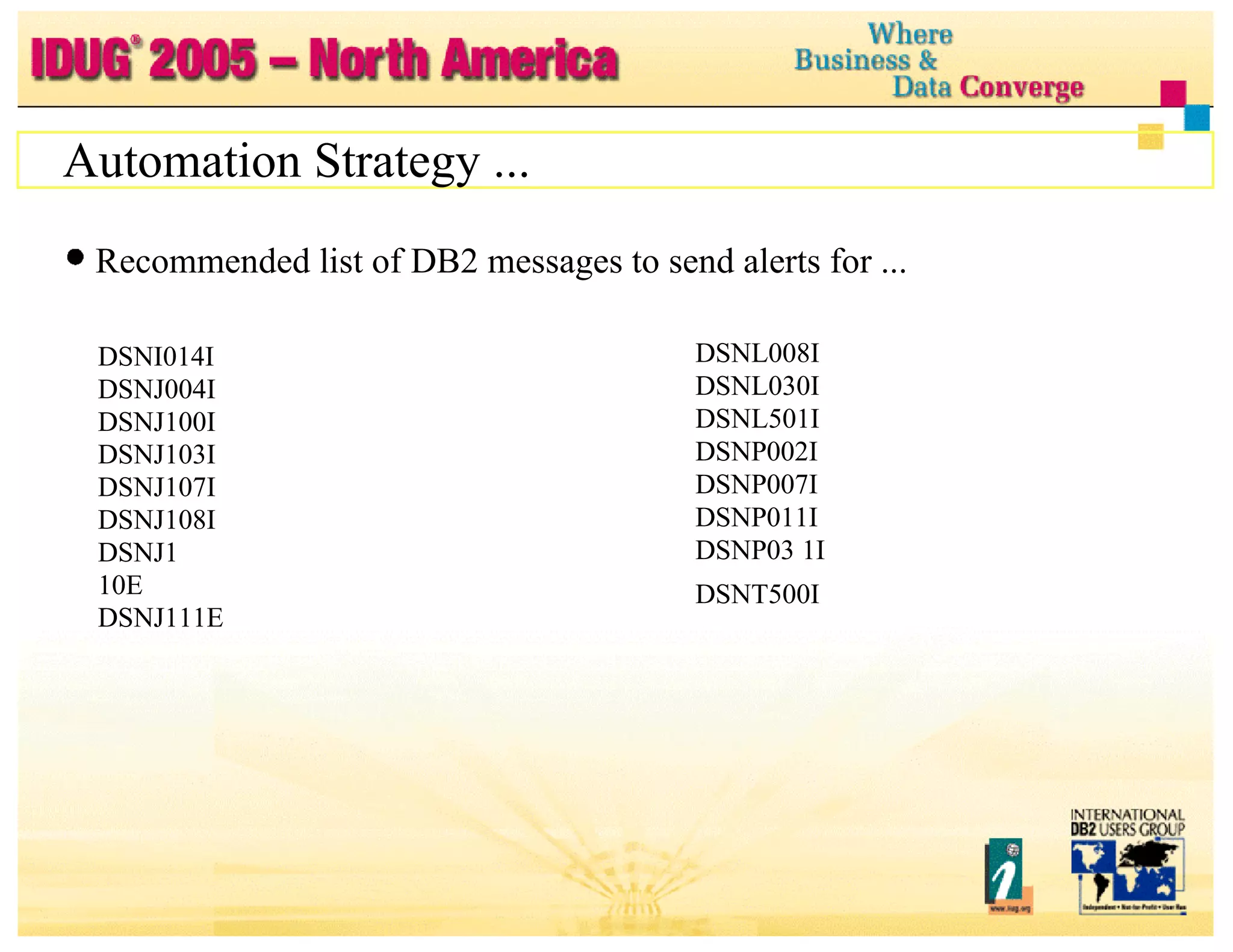

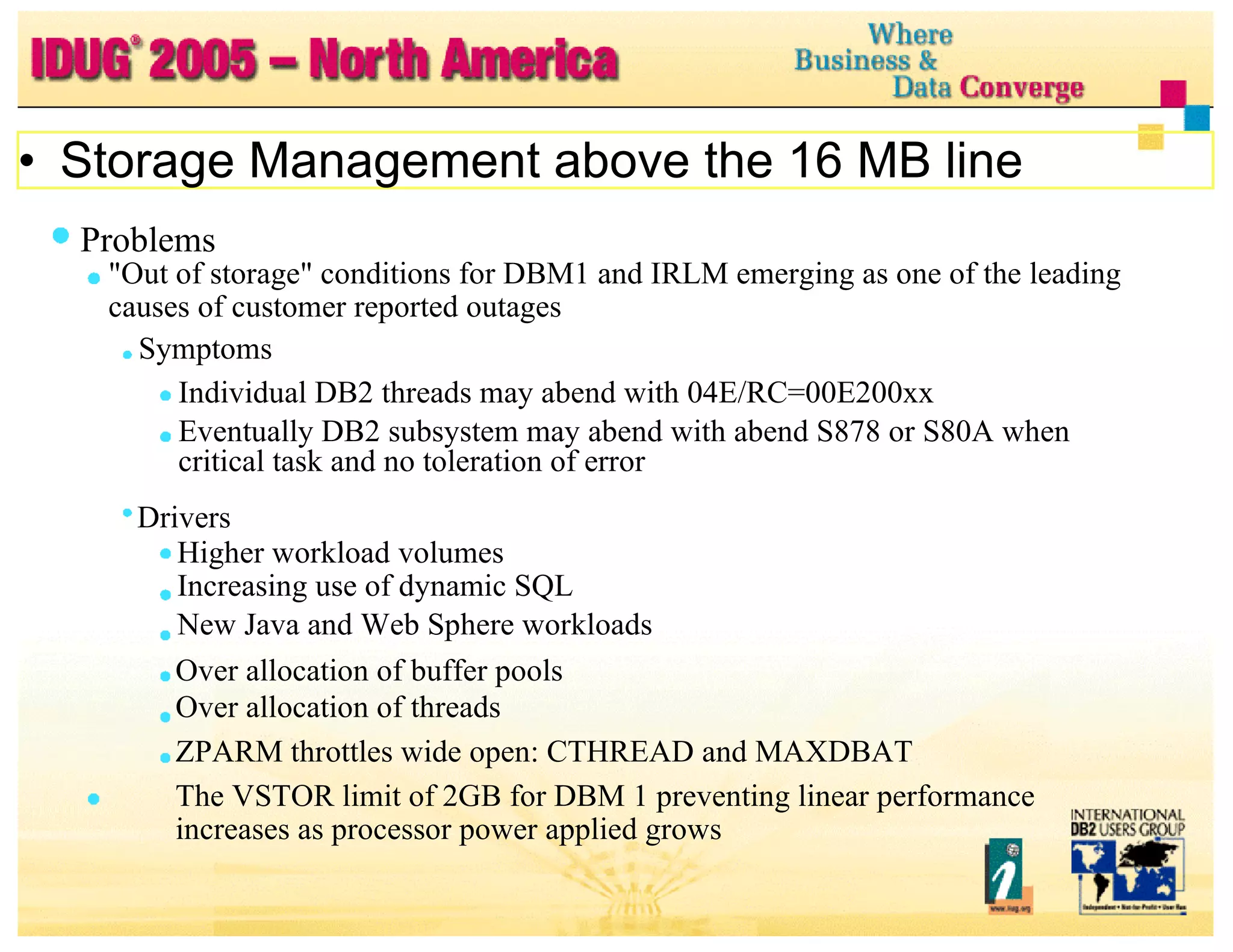

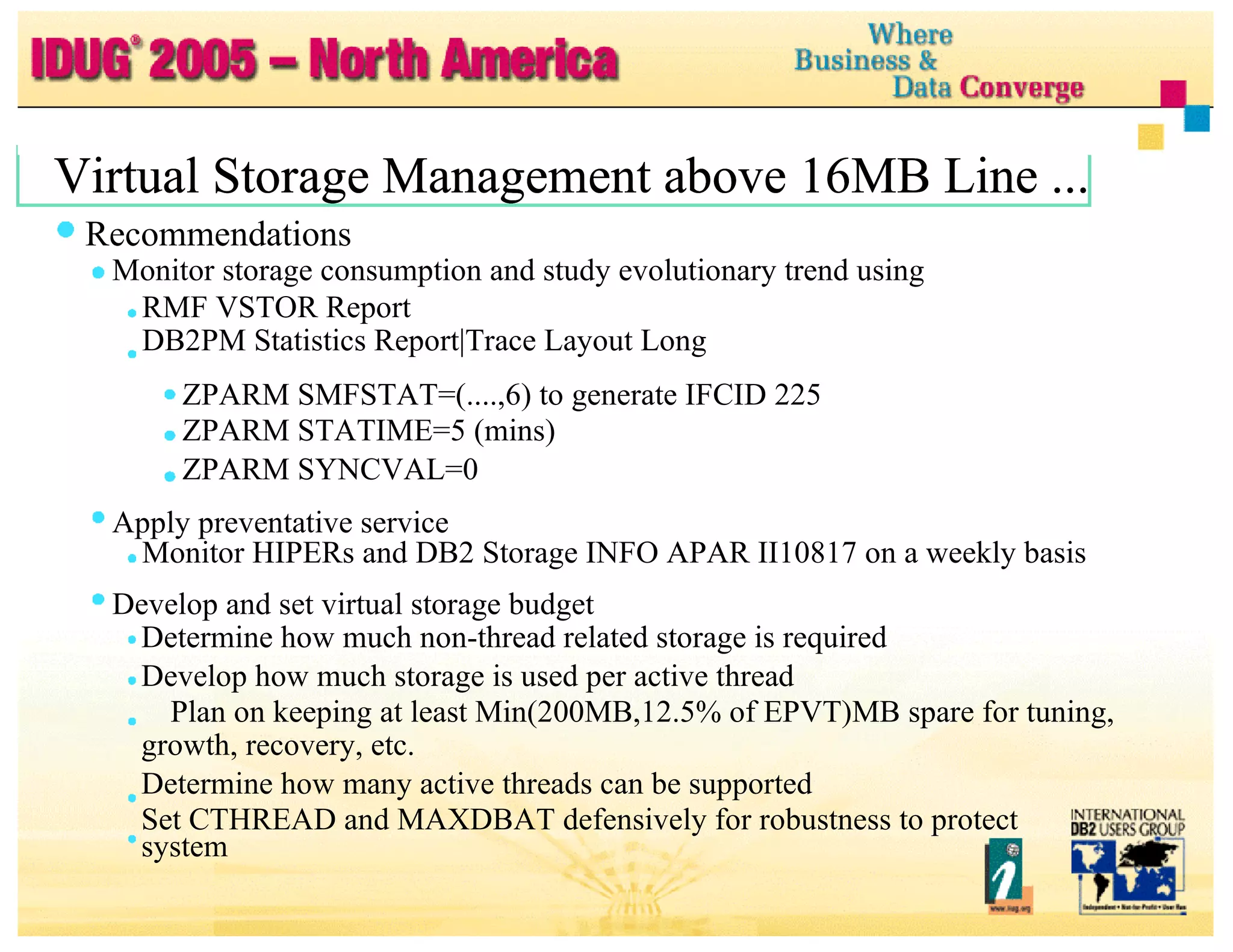

The document discusses recommendations for improving DB2 system availability and performance. It recommends automating the monitoring of critical DB2 messages, applying preventative service regularly, designing applications for high availability and parallelism, managing virtual storage above 16MB efficiently, and performing high performance object recovery through techniques like using DASD and parallel jobs.

![Data Sharing Tuning ... Recommendations ... Exploit Parallel Sysplex and promote active DB2 data sharing Replicate applications and distribute incoming workload CPU cost of data sharing offset by Higher utilisation of configuration Higher throughput Reduces possibility of retained locks at gross (object) level Avoids 'open dataset' performance problem on workload failover]](https://image.slidesharecdn.com/healthcheckyourdb2udbforzossystem-124602735529-phpapp02/75/Health-Check-Your-DB2-UDB-For-Z-OS-System-51-2048.jpg)

![Shelton Reese DB2 for z/OS Support [email_address] Health Check Your DB2 System Part 1 and 2 Session: R12 and R13](https://image.slidesharecdn.com/healthcheckyourdb2udbforzossystem-124602735529-phpapp02/75/Health-Check-Your-DB2-UDB-For-Z-OS-System-58-2048.jpg)