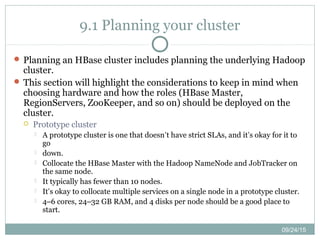

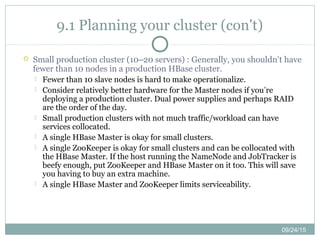

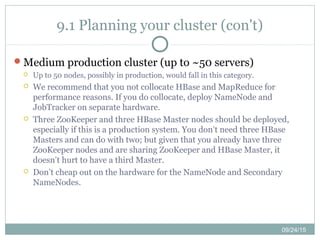

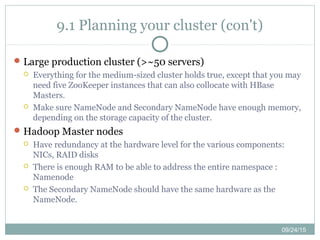

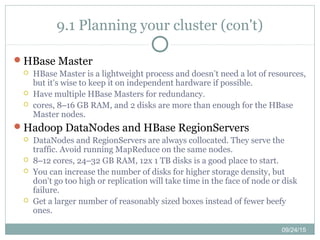

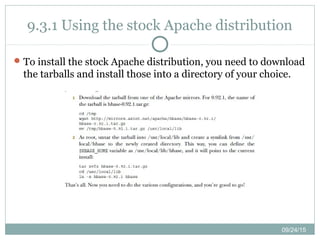

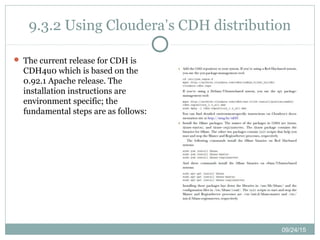

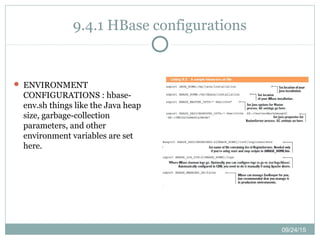

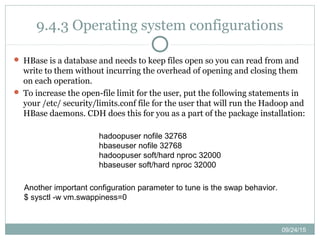

This document discusses the deployment of HBase clusters, detailing planning considerations, hardware requirements for various cluster sizes, and recommendations for software distributions like Apache and Cloudera's CDH. It emphasizes the importance of proper configuration and management of the daemons involved in the HBase setup, including the roles of HBase master, region servers, and Zookeeper. The chapter aims to prepare readers for deploying HBase in production environments, either on-premises or in the cloud.