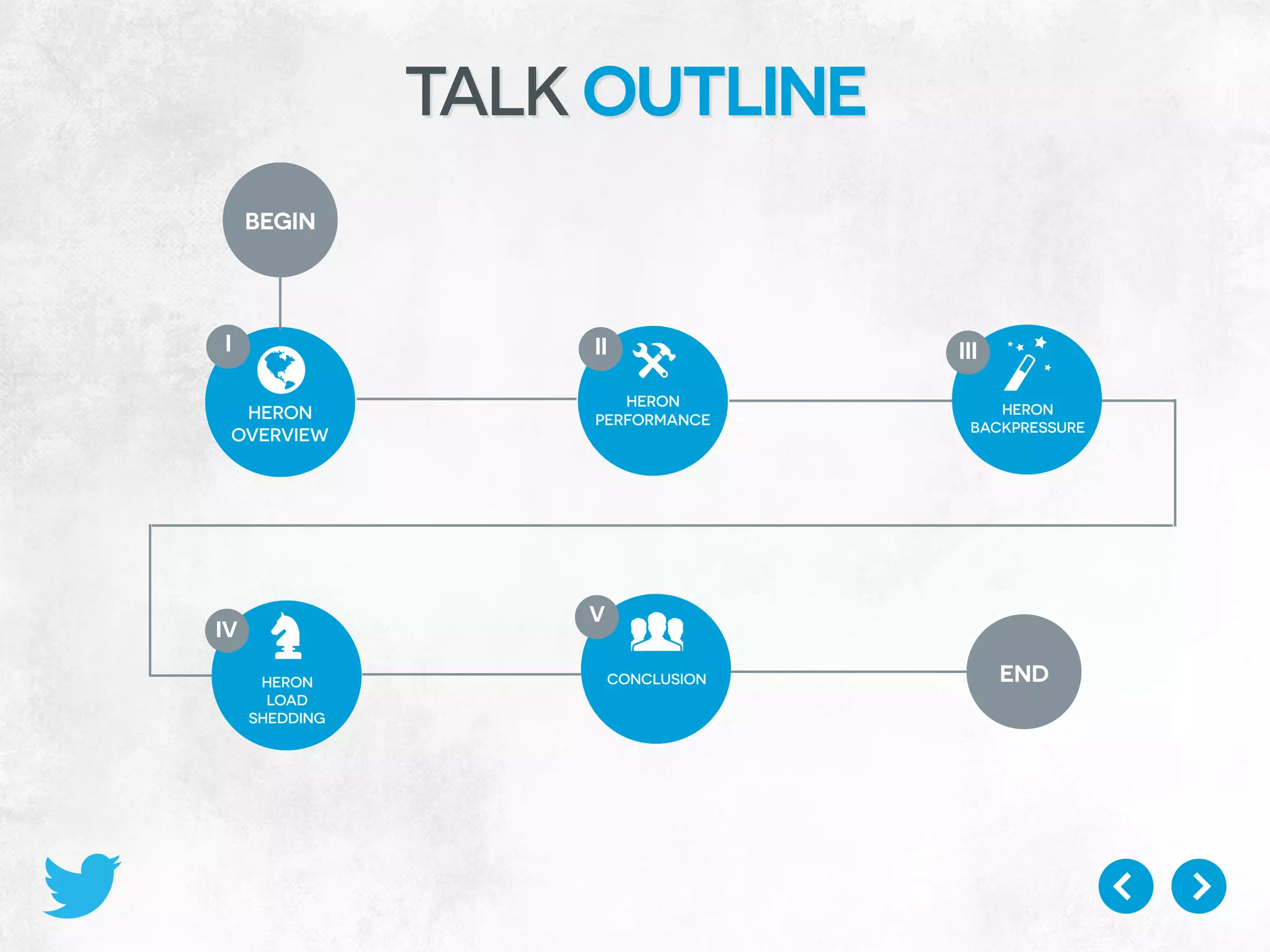

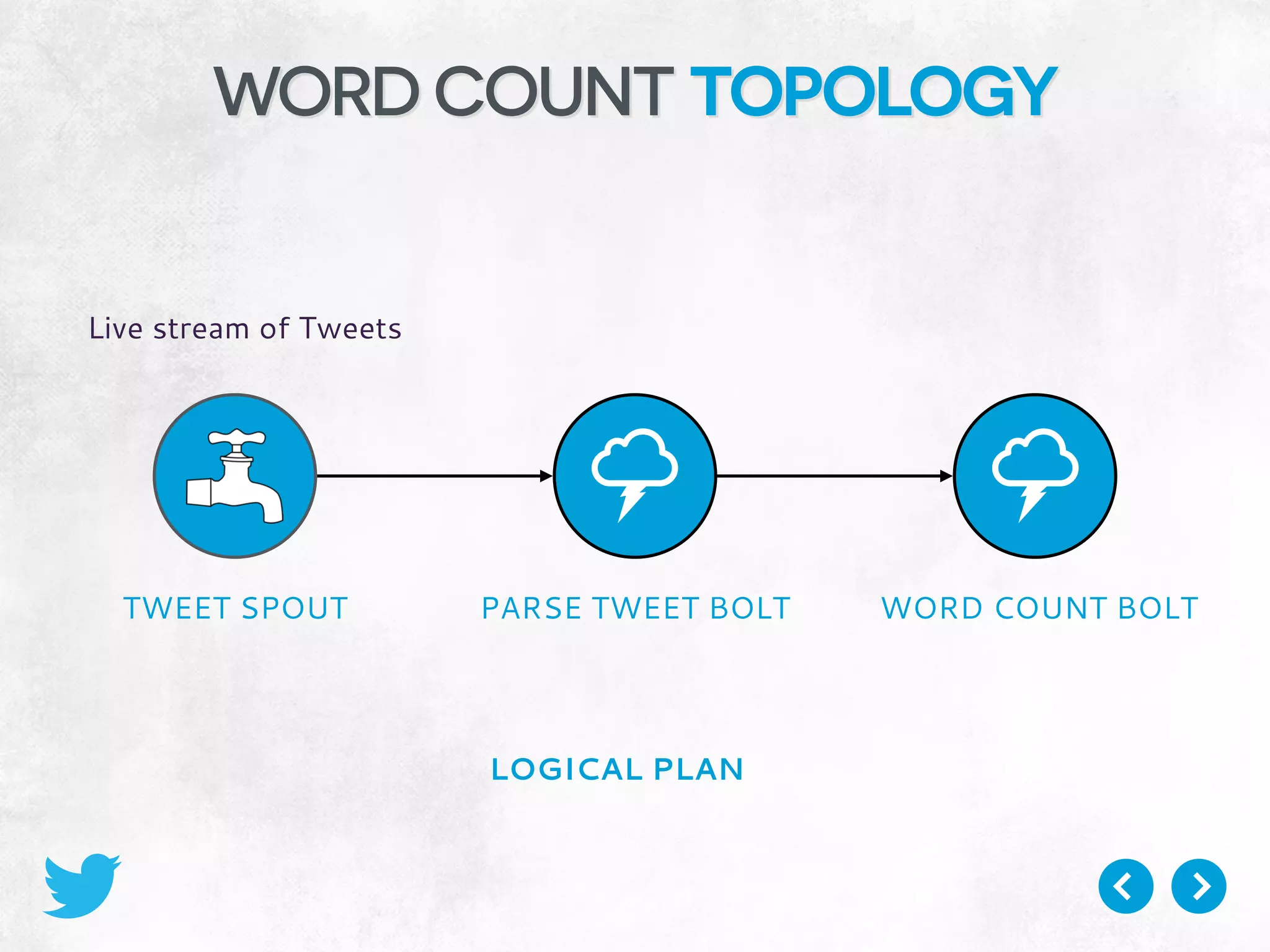

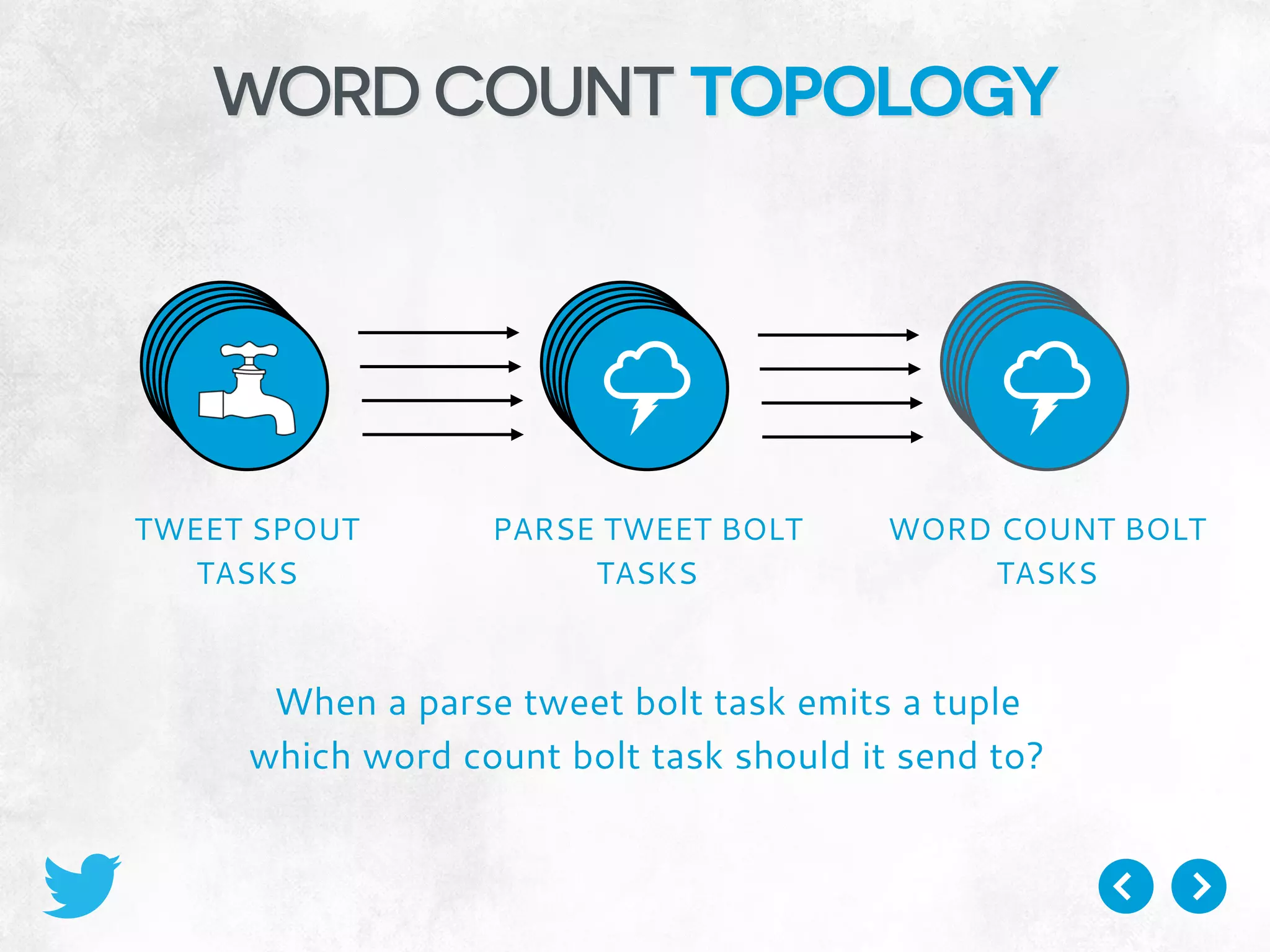

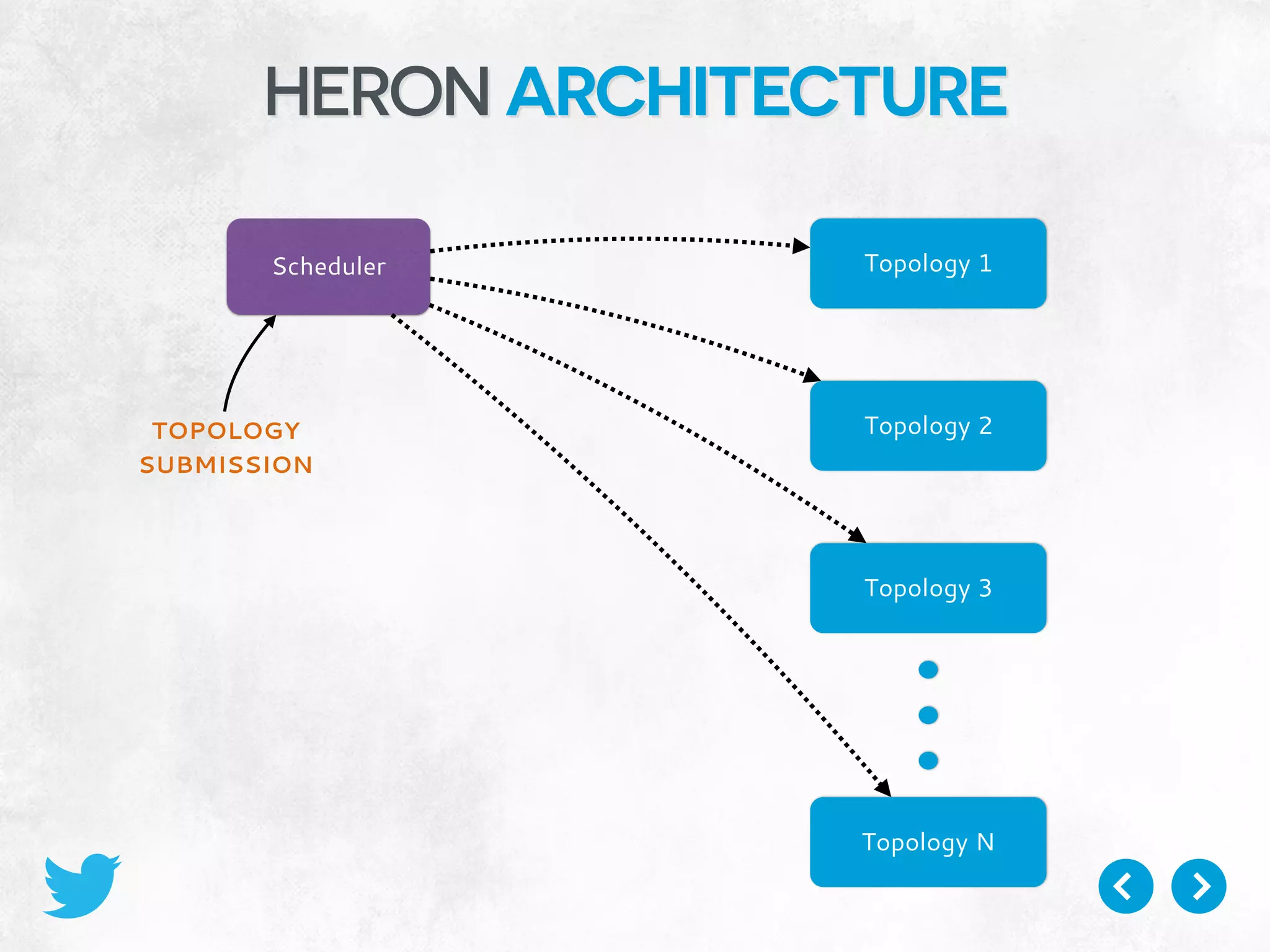

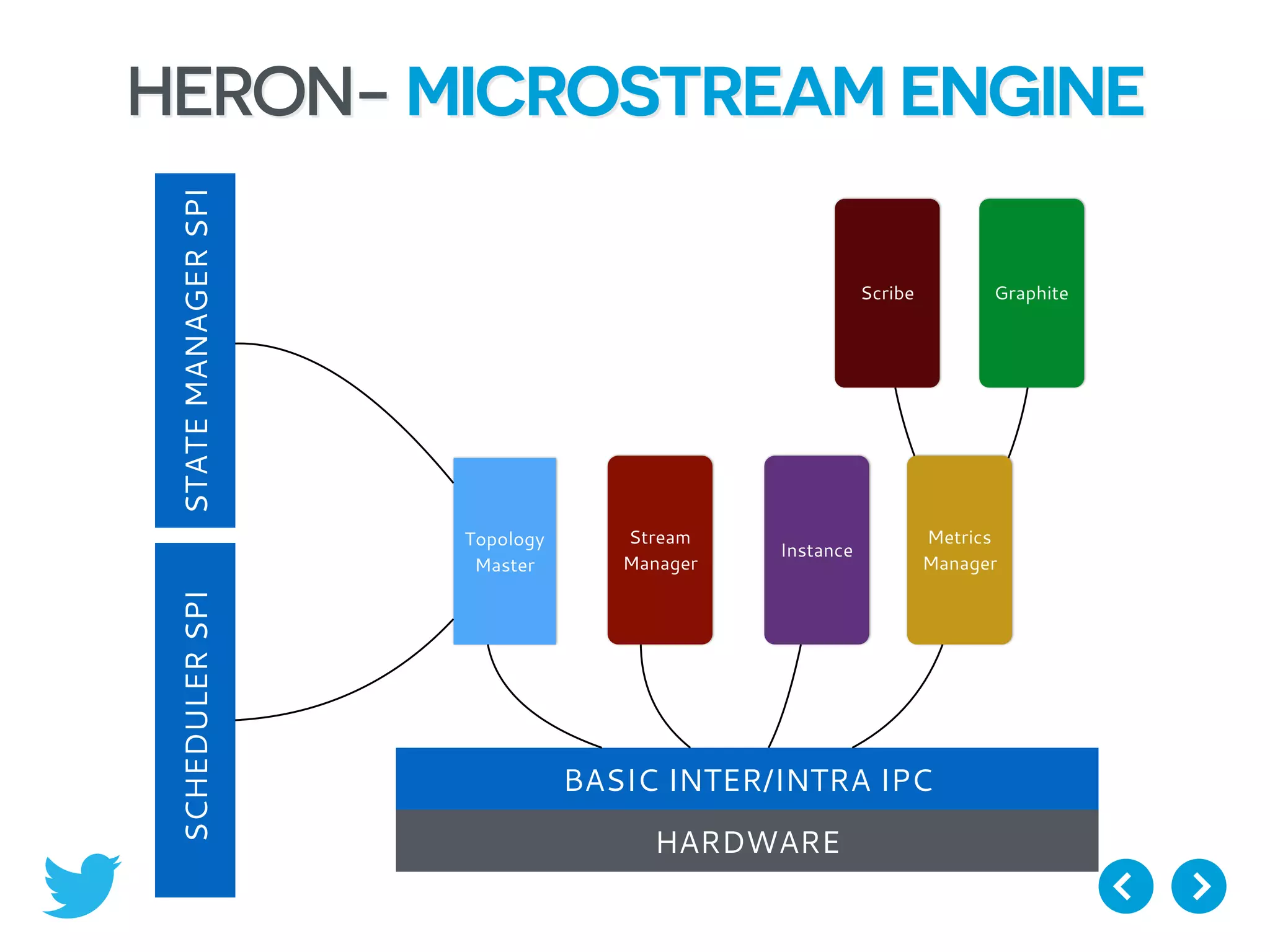

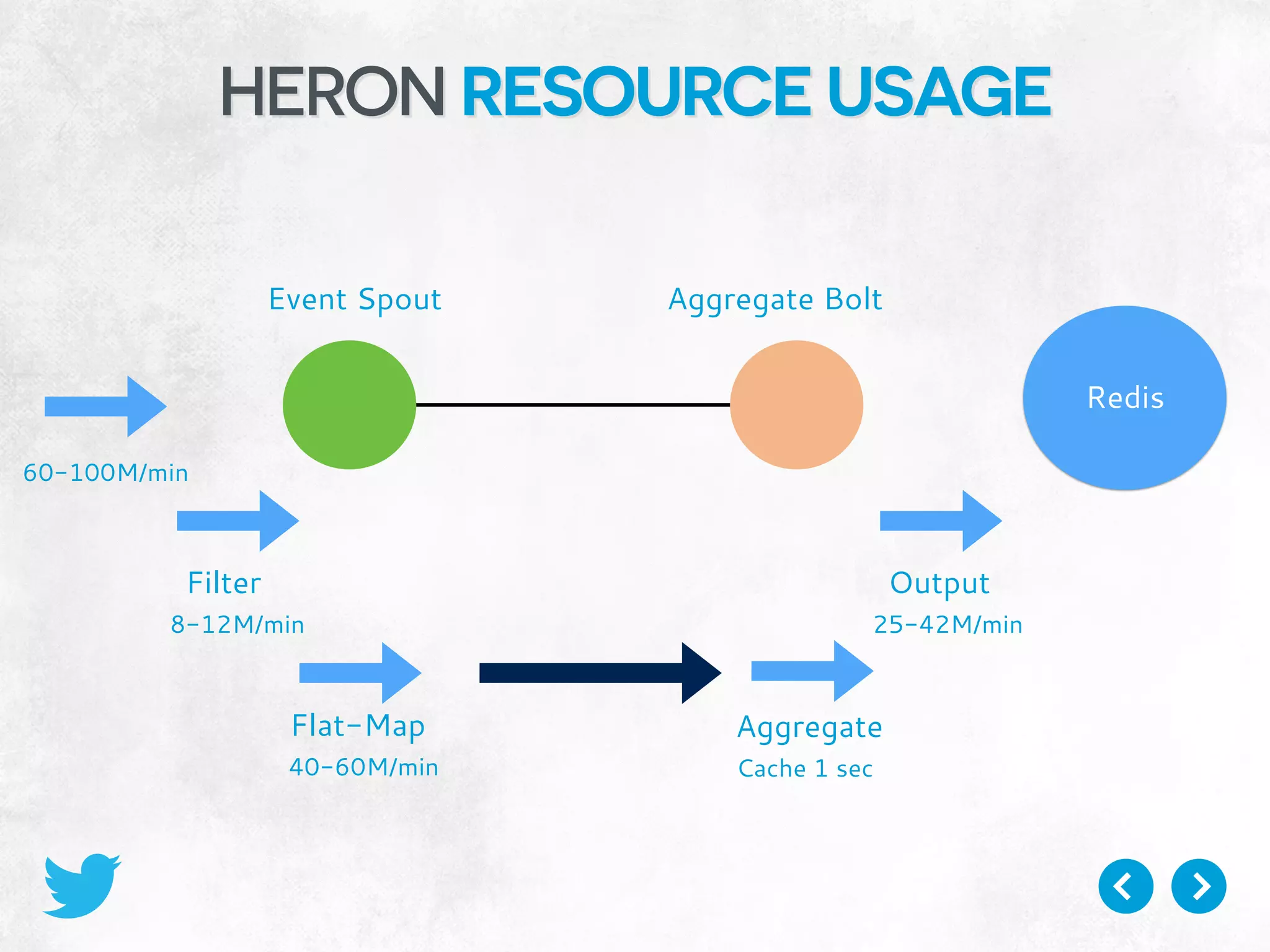

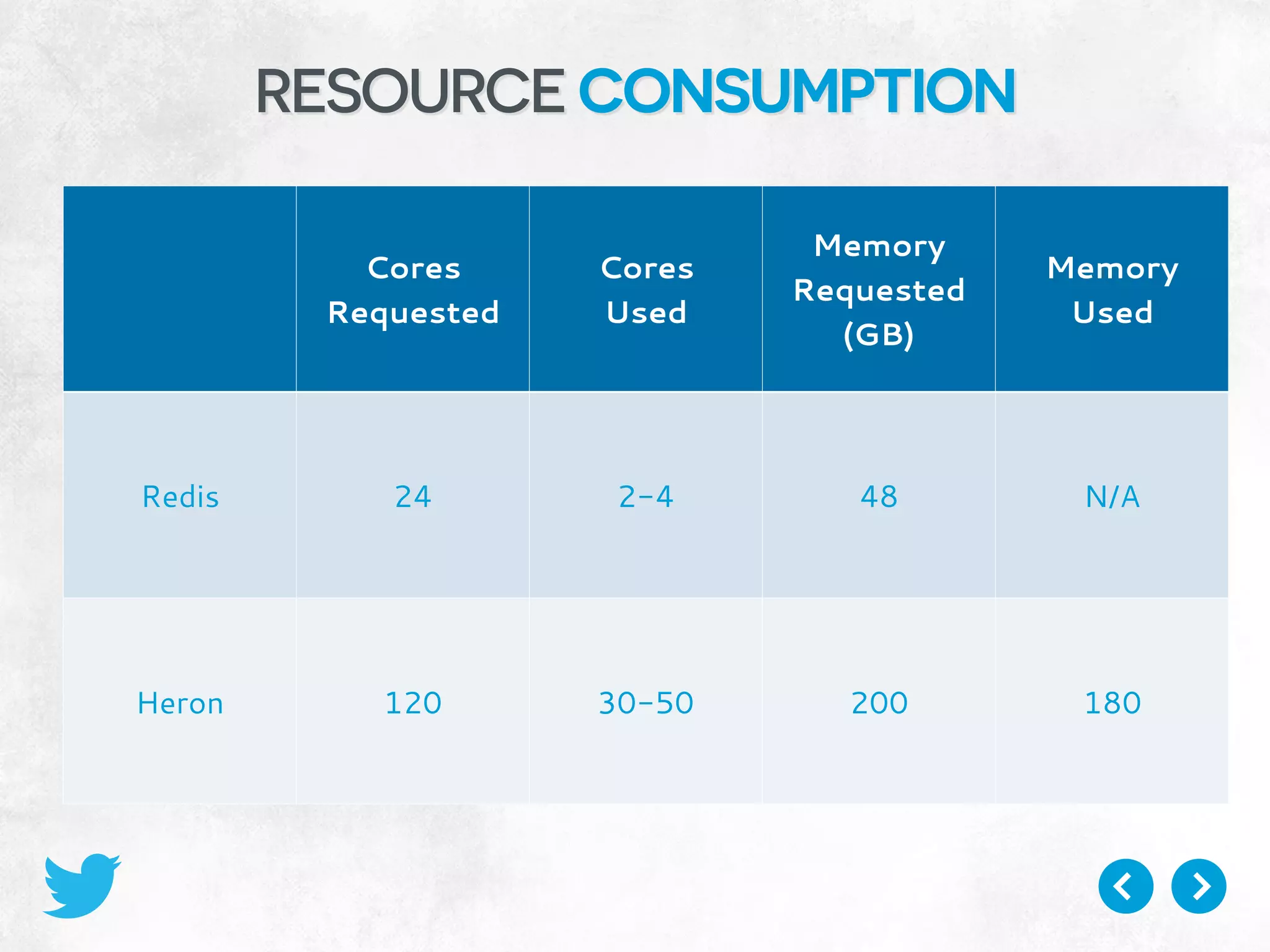

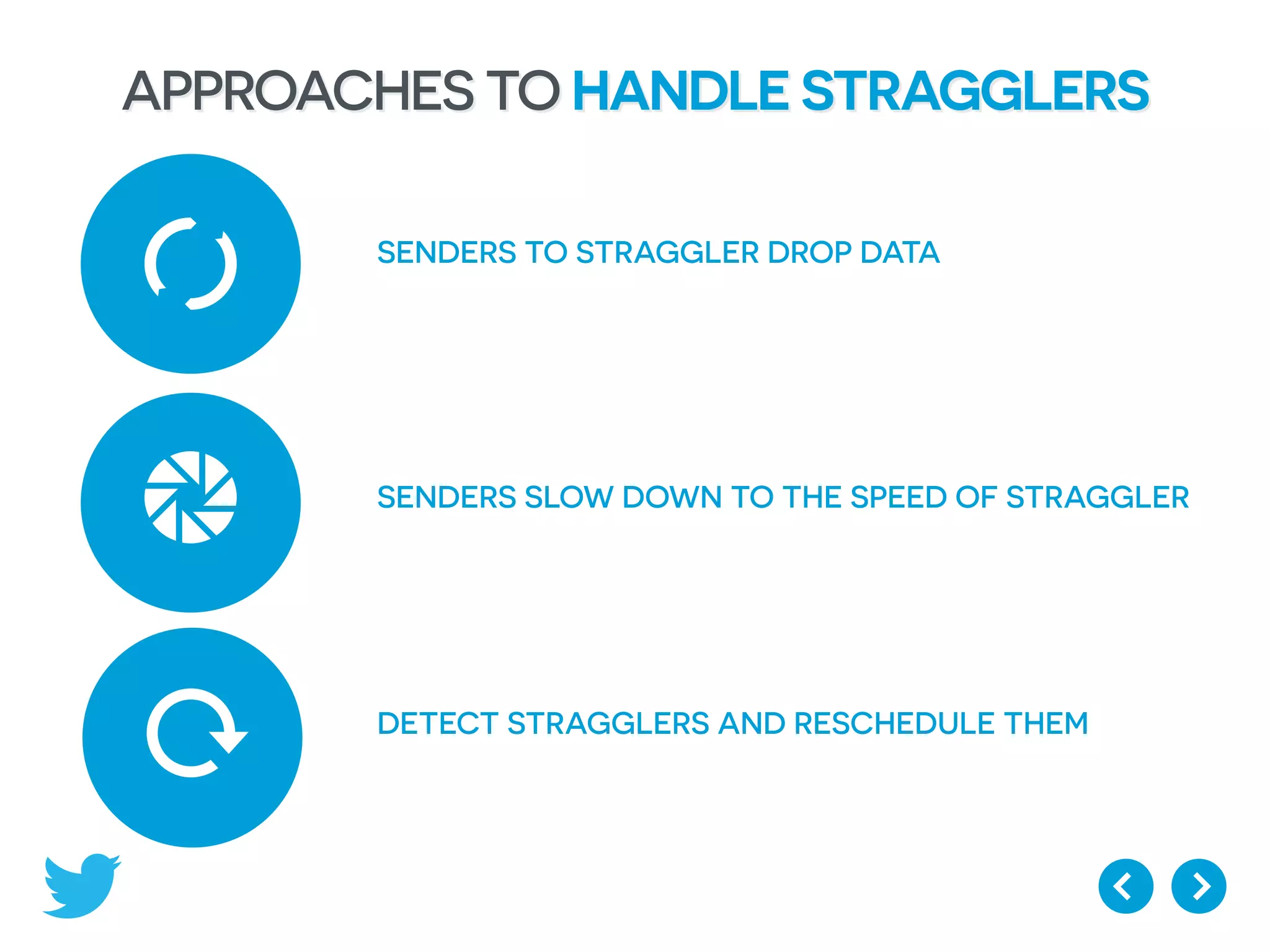

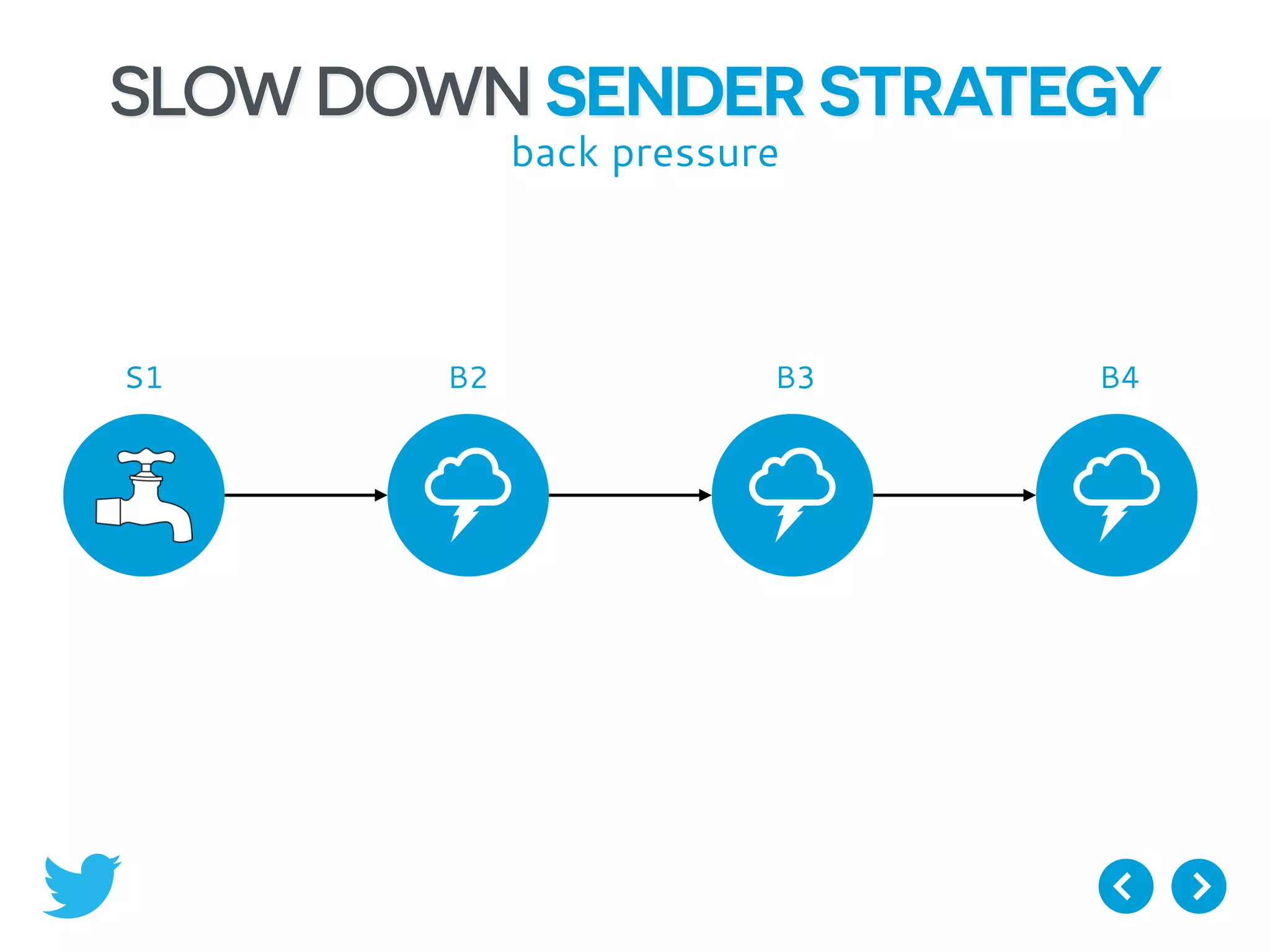

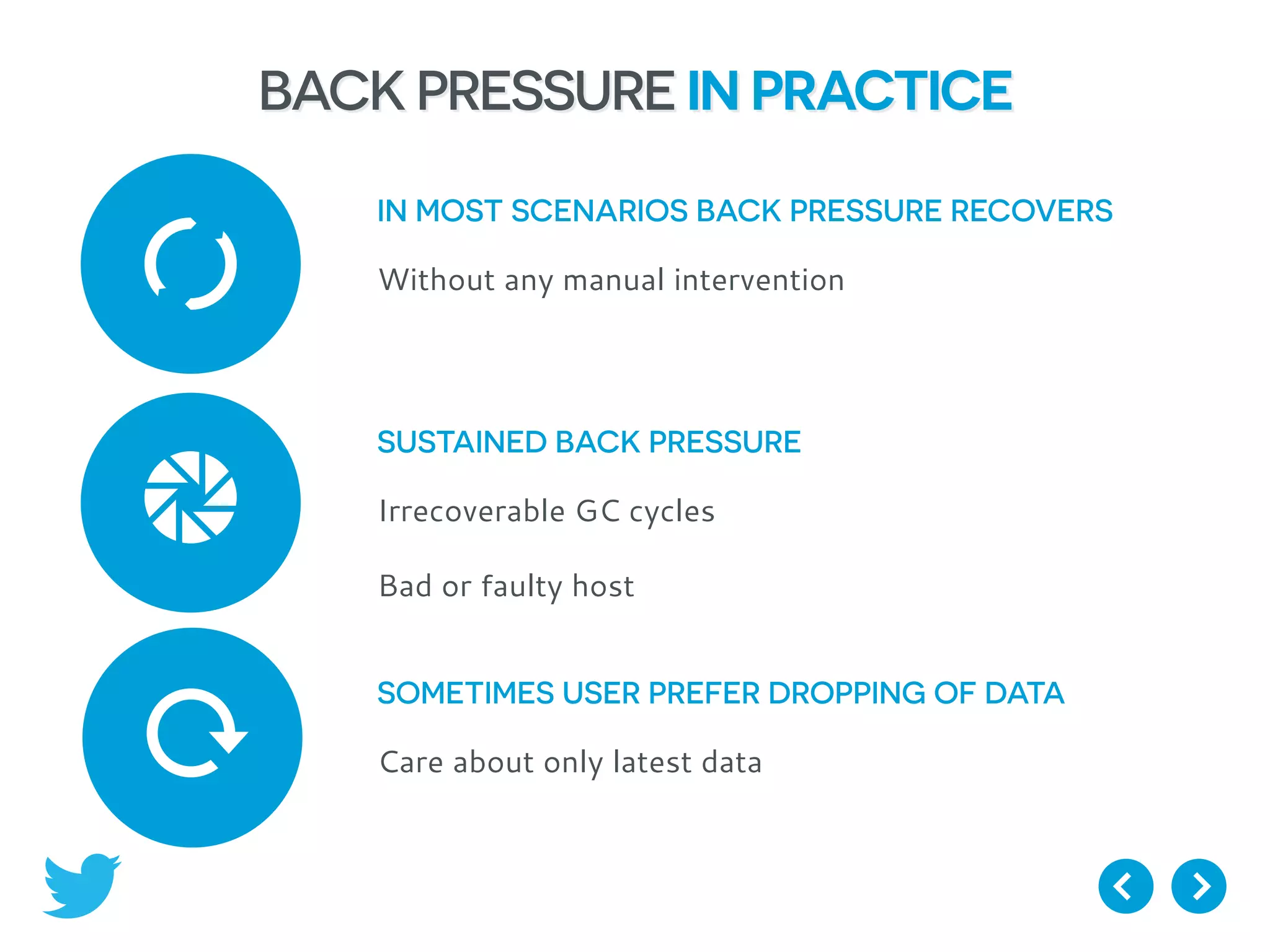

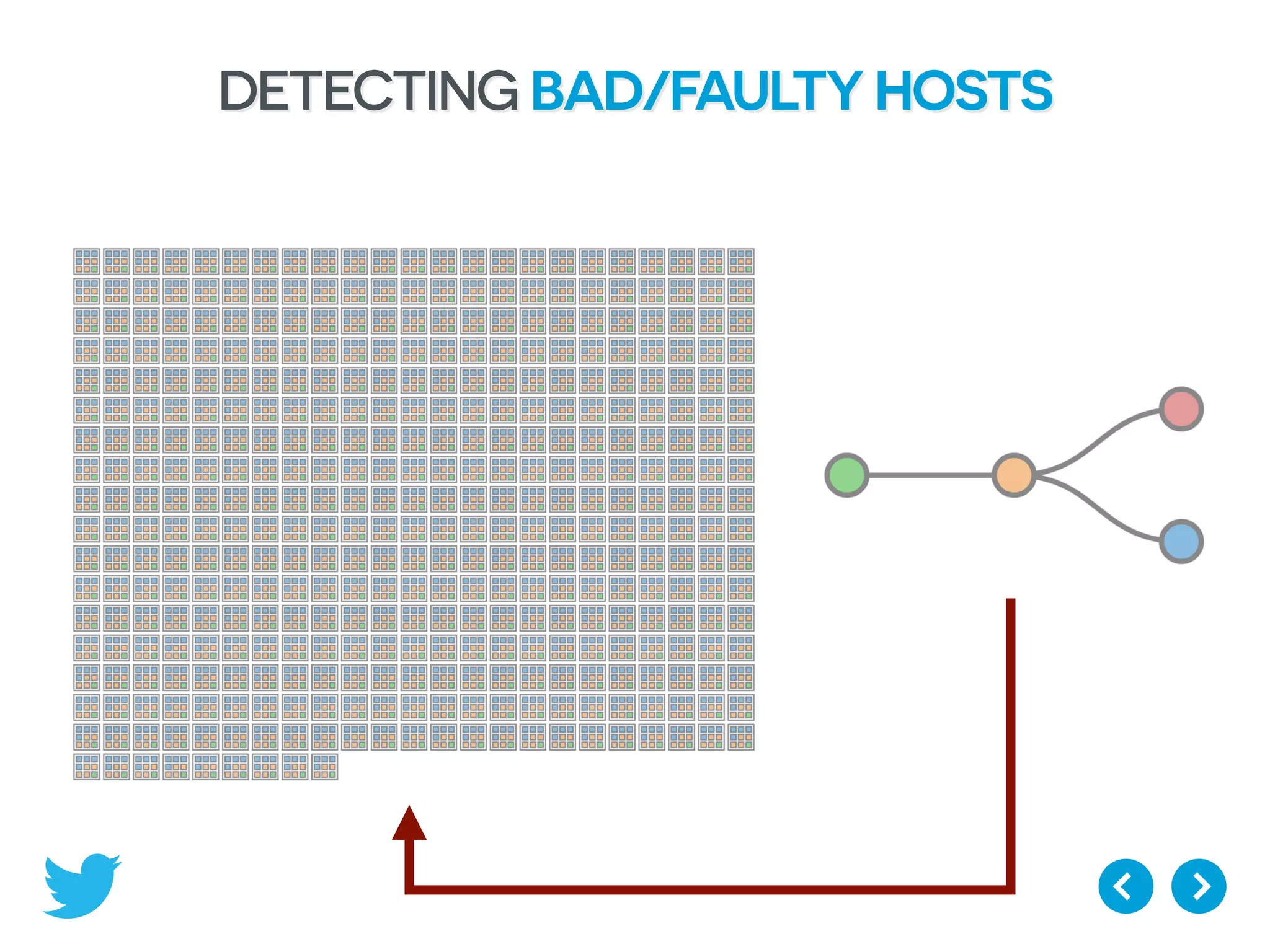

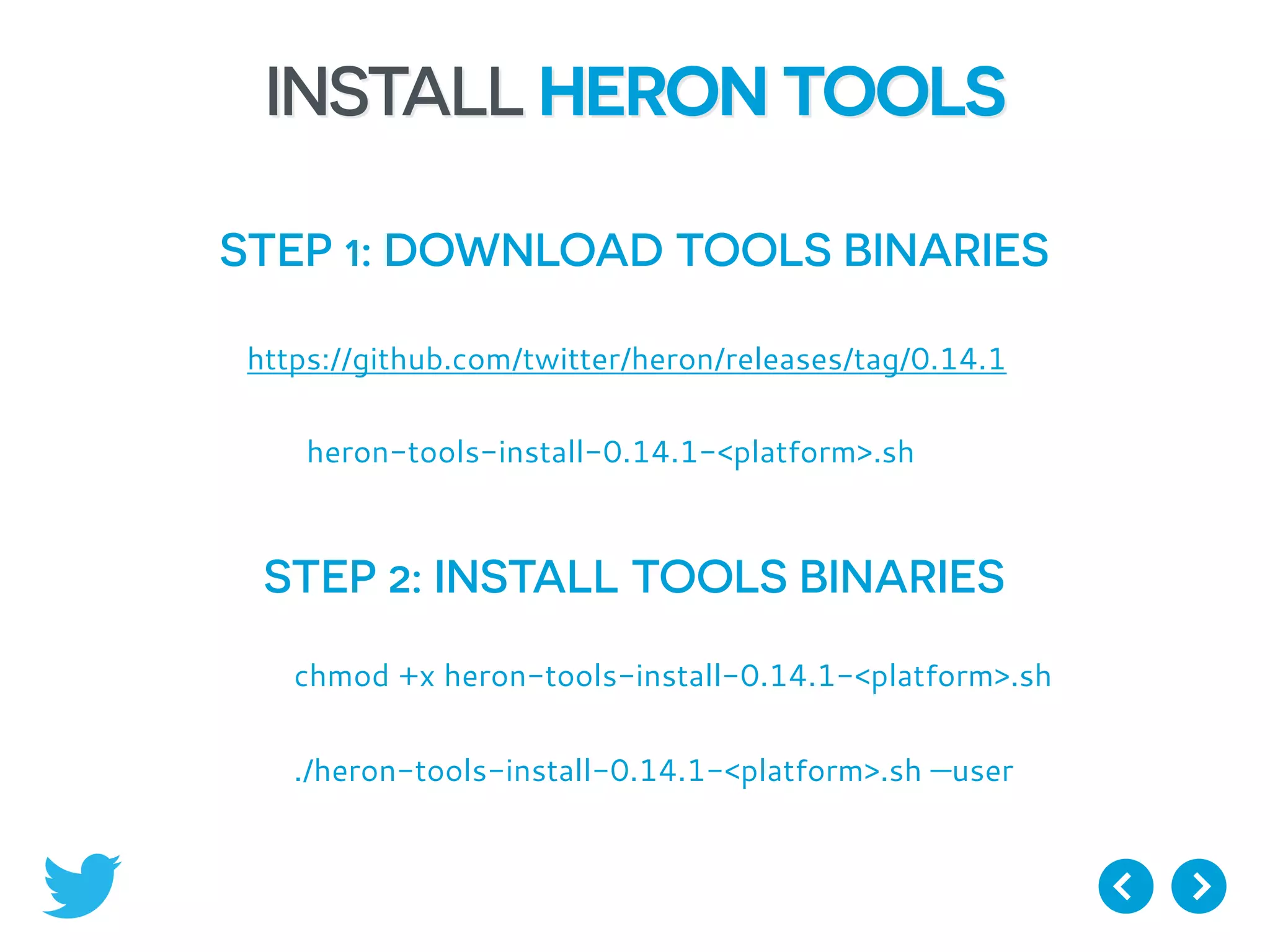

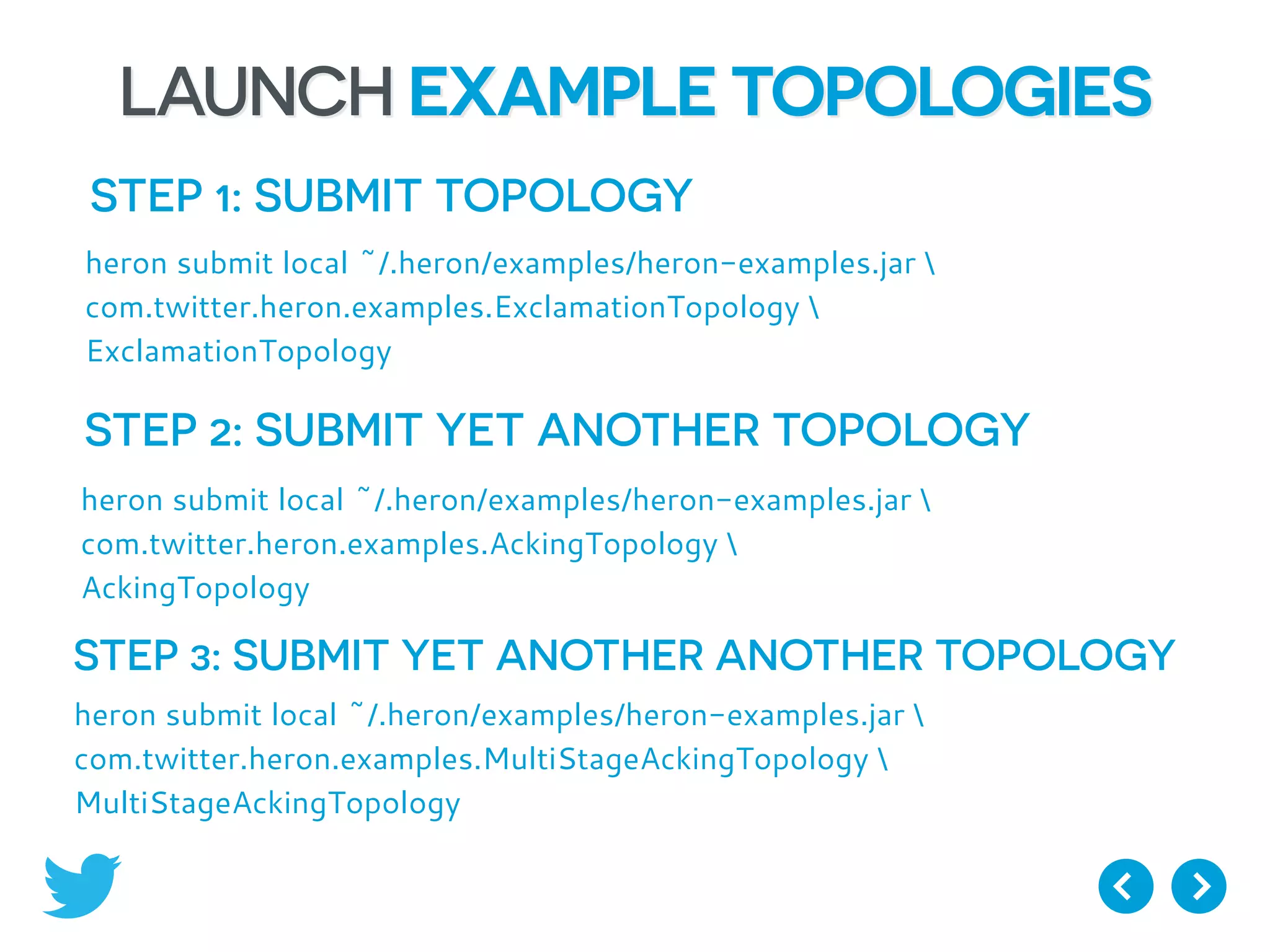

This document provides an overview of Twitter Heron, an open source real-time streaming engine that Twitter developed to replace Storm. It describes Heron's design goals: better performance, predictability, manageability, and developer productivity. It outlines the architecture, including task isolation, which makes debugging easier. It discusses back pressure as a way to handle stragglers and covers load shedding techniques such as sampling and dropping data. It also gives examples of installing Heron and exploring its UI.
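To make the two load shedding techniques named above more concrete, here is a minimal Python sketch. It is not Heron's API or implementation: the function names `shed_by_sampling` and `shed_by_dropping`, and the parameters `keep_ratio`, `capacity`, and `seed`, are hypothetical and chosen only for illustration. Sampling keeps each tuple with a fixed probability, while dropping keeps tuples up to a capacity limit and discards the rest.

```python
import random
from typing import Iterable, Iterator, TypeVar

T = TypeVar("T")


def shed_by_sampling(stream: Iterable[T], keep_ratio: float, seed: int = 0) -> Iterator[T]:
    """Keep each item independently with probability `keep_ratio` (hypothetical helper)."""
    if not 0.0 <= keep_ratio <= 1.0:
        raise ValueError("keep_ratio must be between 0.0 and 1.0")
    rng = random.Random(seed)  # seeded so the sketch is reproducible
    for item in stream:
        # random() returns a float in [0.0, 1.0), so a ratio of 1.0 keeps
        # everything and a ratio of 0.0 keeps nothing.
        if rng.random() < keep_ratio:
            yield item


def shed_by_dropping(stream: Iterable[T], capacity: int) -> Iterator[T]:
    """Keep the first `capacity` items and drop everything after (hypothetical helper)."""
    if capacity < 0:
        raise ValueError("capacity must be non-negative")
    for index, item in enumerate(stream):
        if index >= capacity:
            break  # over capacity: the remaining items are dropped
        yield item


if __name__ == "__main__":
    tuples = range(10_000)
    sampled = list(shed_by_sampling(tuples, keep_ratio=0.5))
    dropped = list(shed_by_dropping(tuples, capacity=3))
    print(f"sampling kept {len(sampled)} of {len(tuples)} tuples")
    print(f"dropping kept {dropped}")
```

Sampling preserves a representative slice of the whole stream at the cost of losing individual tuples throughout, whereas dropping keeps early tuples intact and loses everything beyond the limit. Which trade-off is acceptable depends on the application.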