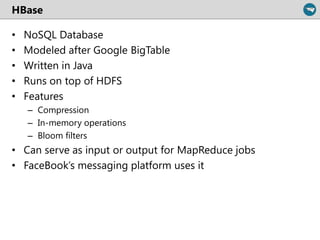

This document introduces Hadoop and big data, explaining the challenges of processing large datasets and why they call for distributed computing. It covers Hadoop's key components, HDFS and MapReduce, along with tools such as Pig and Hive that simplify data processing and analysis. It highlights Hadoop's advantages for handling very large volumes of data, and also discusses its limitations and the surrounding ecosystem.