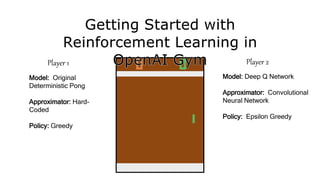

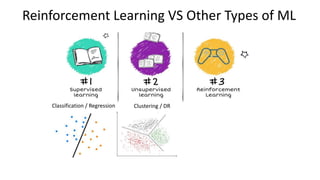

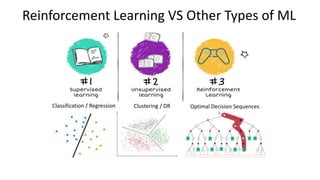

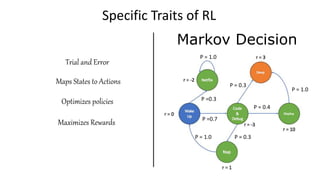

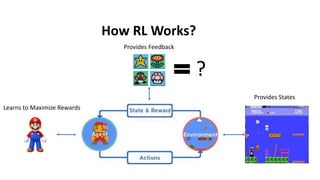

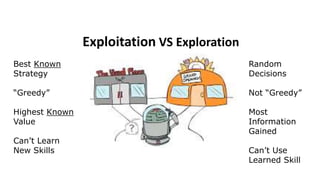

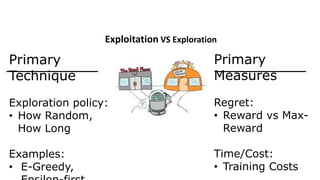

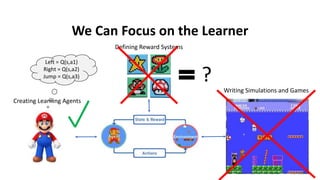

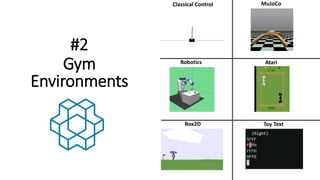

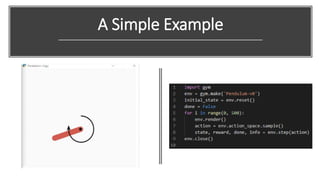

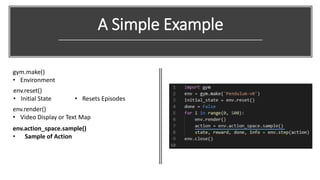

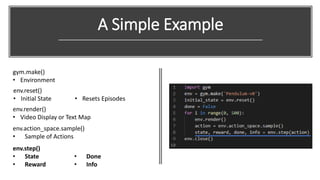

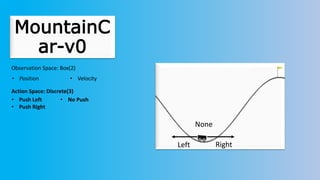

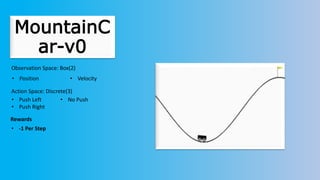

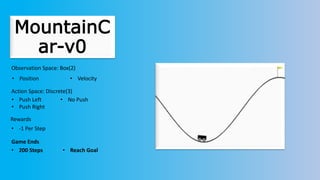

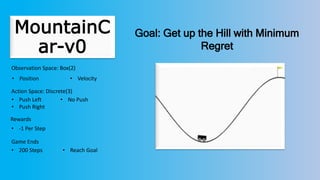

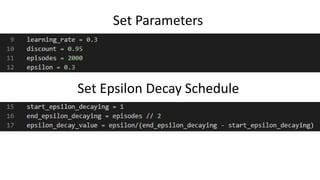

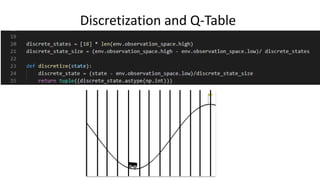

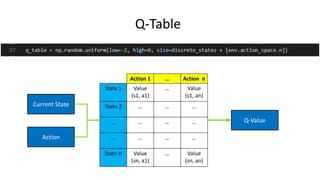

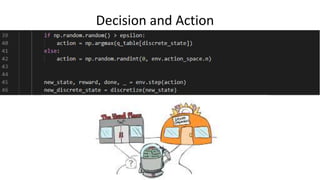

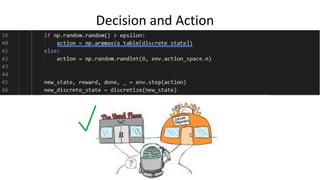

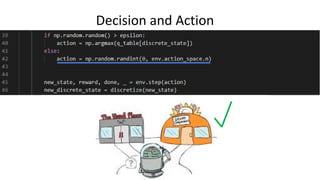

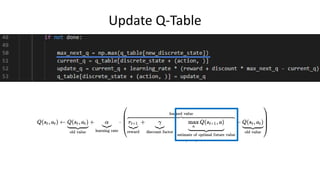

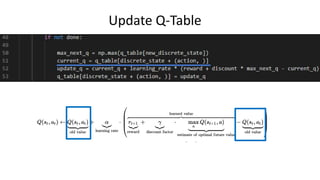

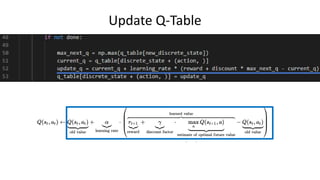

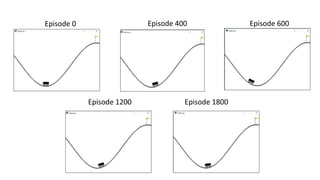

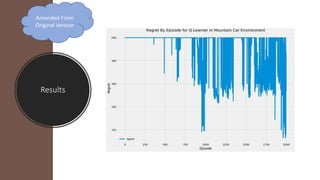

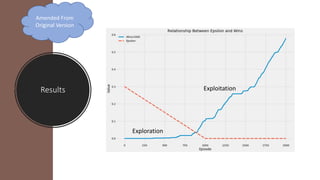

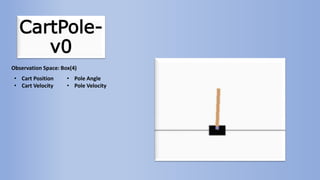

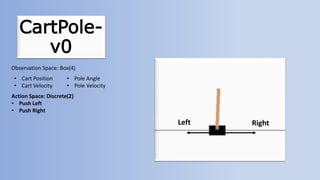

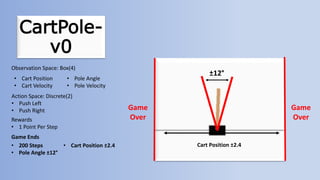

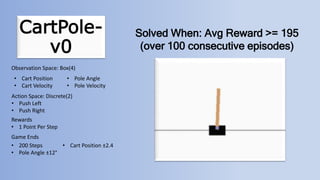

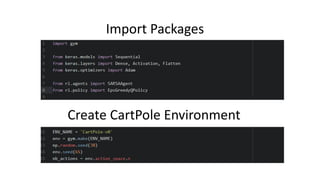

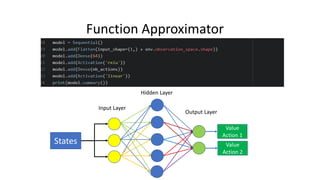

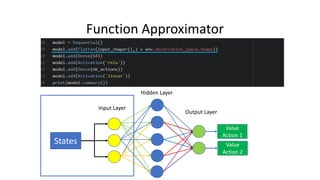

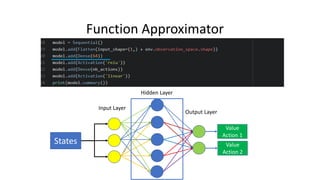

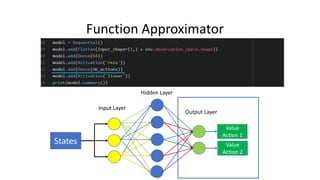

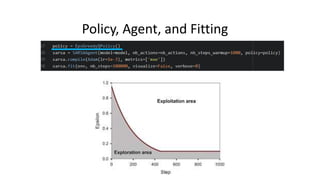

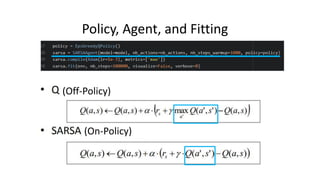

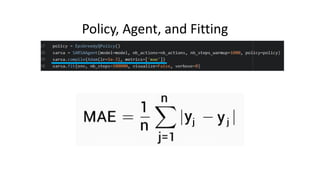

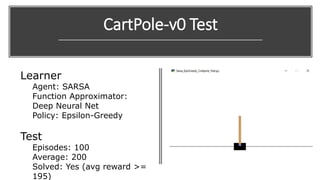

The document outlines reinforcement learning concepts and implementation strategies, focusing on deep Q-networks (DQN) and epsilon-greedy policies. It works through several OpenAI Gym environments, including `MountainCar-v0` and `CartPole-v0`, describing each one's observation and action spaces, reward structure, and objective. It also discusses the trade-off between exploration and exploitation during learning, illustrating it with learning algorithms and updates to state-action values (Q-values).
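The slides themselves are images, so the following is only a minimal sketch of the two ideas the summary names, not a reproduction of the author's code. It shows epsilon-greedy action selection and the tabular Q-learning update toward the target r + γ · max over a' of Q(s', a'). A DQN uses the same target but replaces the table with a neural network. The chain environment, the function names (`epsilon_greedy`, `q_update`, `step`, `train`), and the hyperparameters are all hypothetical choices for illustration; the sketch does not use the Gym API.

```python
import random
from collections import defaultdict

N_STATES = 6  # hypothetical 1-D chain: start at state 0, goal at state N_STATES - 1


def epsilon_greedy(q_values, epsilon, rng):
    """Explore with probability epsilon, otherwise exploit the best-known action."""
    if rng.random() < epsilon:
        return rng.randrange(len(q_values))  # explore: uniform random action
    best = max(q_values)
    # Exploit, breaking ties randomly among the maximizing actions.
    return rng.choice([a for a, q in enumerate(q_values) if q == best])


def q_update(Q, s, a, r, s_next, done, alpha, gamma):
    """One tabular Q-learning step: move Q[s][a] toward r + gamma * max_a' Q[s_next][a']."""
    target = r if done else r + gamma * max(Q[s_next])  # no bootstrap from terminal states
    Q[s][a] += alpha * (target - Q[s][a])
    return target


def step(state, action):
    """Hypothetical chain environment (not a Gym environment): 0 = left, 1 = right."""
    nxt = max(0, state - 1) if action == 0 else state + 1
    done = nxt == N_STATES - 1
    return nxt, (1.0 if done else 0.0), done  # reward only on reaching the goal


def train(episodes=500, alpha=0.1, gamma=0.9, epsilon=0.1, seed=0):
    """Run epsilon-greedy Q-learning on the chain and return the Q-table."""
    rng = random.Random(seed)
    Q = defaultdict(lambda: [0.0, 0.0])  # two actions per state, initialized to zero
    for _ in range(episodes):
        s = 0
        for _ in range(100):  # cap episode length
            a = epsilon_greedy(Q[s], epsilon, rng)
            s_next, r, done = step(s, a)
            q_update(Q, s, a, r, s_next, done, alpha, gamma)
            s = s_next
            if done:
                break
    return Q


if __name__ == "__main__":
    Q = train()
    policy = [max(range(2), key=lambda a: Q[s][a]) for s in range(N_STATES - 1)]
    print("greedy action per state (1 = right):", policy)
```

Raising `epsilon` makes the agent explore more at the cost of following its current best estimate less often; a common variant, not necessarily the one in the slides, decays `epsilon` over training so that early episodes explore and later ones exploit.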