deeplearning

- 1. Deep Learning Supervised by prof.asst. Dr.mohammad najem by: Huda hamdan ali

- 2. contents Introduction and overveiw Deep learning challenges Deep N.N Unsupervised Preprocessing Networks Deep Belief Networks Denoising auto encoder Stacked Auto Encoders Deep Boltzmann Machines CNN – Convolutional Neural Networks Recurrent N.N Long Short-Term Memory RNN (LSTM) Generative Adversarial Neural Deep Reinforcement Learning Applications. 2

- 3. introduction Deep learning (also known as deep structured learning or hierarchical learning) is part of a broader family of machine learning methods based on learning data representations, as opposed to task-specific algorithms. Learning can be supervised, semi- supervised or unsupervised. use a cascade of multiple layers of nonlinear processing units for feature extraction and transformation. Each successive layer uses the output from the previous layer as input Deep learning architectures such as deep neural networks, deep belief networks and recurrent neural networks have been applied to fields including computer vision, speech recognition, natural language processing,

- 4. Introduction cont.. Deep learning algorithms can be applied to unsupervised learning tasks. This is an important benefit because unlabeled data are more abundant than labeled data.

- 5. Inspired by the Brain The first hierarchy of neurons that receives information in the visual cortex are sensitive to specific edges while brain regions further down the visual pipeline are sensitive to more complex structures such as faces. Our brain has lots of neurons connected together and the strength of the connections between neurons represents long term knowledge.

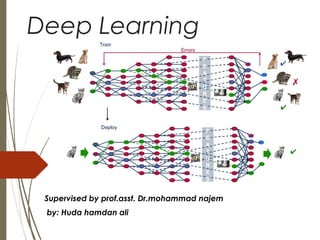

- 6. Deep Learning training Overview Train networks with many layers (Multiple layers work to build an improved feature space First layer learns 1st order features (e.g. edges…) 2nd layer learns higher order features (combinations of first layer features, combinations of edges, etc.) Some models learn in an unsupervised mode and discover general features of the input space – serving multiple tasks related to the unsupervised instances (image recognition, etc.) Final layer of transformed features are fed into supervised layer(s) And entire network is often subsequently tuned using supervised training of the entire net, using the initial weightings learned in the unsupervised phase

- 7. Deep Learning Architecture A deep neural network consists of a hierarchy of layers, whereby each layer transforms the input data into more abstract representations (e.g. edge -> nose -> face). The output layer combines those features to make predictions

- 8. What did it learn?

- 9. No more feature engineering

- 11. Problems with Back Propagation Gradient is progressively getting more dilute Below top few layers, correction signal is minimal Gets stuck in local minima Especially since they start out far from ‘good’ regions (i.e., random initialization)

- 12. DNN challenges As with ANNs, many issues can arise with naively trained DNNs. Two common issues are overfitting and computation time. DNNs are prone to overfitting because of the added layers of abstraction, which allow them to model rare dependencies in the training data. Regularization methods such as Ivakhnenko's unit pruning or weight decay (regularization) or sparsity (regularization) can be applied during training to combat overfitting. Alternatively dropout regularization randomly omits units from the hidden layers during training. This helps to exclude rare dependencies. Finally, data can be augmented via methods such as cropping and rotating such that smaller training sets can be increased in size to reduce the chances of overfitting.

- 13. Challenge cont.. DNNs must consider many training parameters, such as the size (number of layers and number of units per layer), the learning rate and initial weights. Sweeping through the parameter space for optimal parameters may not be feasible due to the cost in time and computational resources. Various tricks such as batching (computing the gradient on several training examples at once rather than individual examples) speed up computation. The large processing throughput of GPUs has produced significant speedups in training, because the matrix and vector computations required are well-suited for GPUs.

- 14. Challenge Cont.. Alternatively, we may need to look for other type of neural network which has straightforward and convergent training algorithm. CMAC (cerebellar model articulation controller) is such kind of neural network. For example, there is no need to adjust learning rates or randomize initial weights for CMAC. The training process can be guaranteed to converge in one step with a new batch of data, and the computational complexity of the training algorithm is linear with respect to the number of neurons involved

- 15. Greedy Layer-Wise Training 1. Train first layer using your data without the labels (unsupervised) Since there are no targets at this level, labels don't help. Then freeze the first layer parameters and start training the second layer using the output of the first layer as the unsupervised input to the second layer 1. Repeat this for as many layers as desired This builds the set of robust features 1. Use the outputs of the final layer as inputs to a supervised layer/model and train the last supervised layer (s) (leave early weights frozen) 2. Unfreeze all weights and fine tune the full network by training with a supervised approach, given the pre-training weight settings 15

- 16. David Corne, and Nick Taylor, Heriot-Watt University - dwcorne@gmail.com These slides and related resources: http://www.macs.hw.ac.uk/~dwcorne/Teaching/dmml.html

- 17. Deep Belief Networks(DBNs) Unsupervised pre-learning provides a good initialization of the network Probabilistic generative model Deep architecture – multiple layers Supervised fine-tuning Generative: Up-down algorithm Discriminative: backpropagation

- 19. DBN Greedy training First step: Construct an RBM with an input layer v and a hidden layer h Train the RBM A restricted Boltzmann machine (RBM) is: a generative stochastic artificial neural network that can learn a probability distribution over its set of inputs.

- 22. Auto-Encoders A type of unsupervised learning, An autoencoder is typically a feedforward neural network which aims to learn a compressed, distributed representation (encoding) of a dataset. Conceptually, the network is trained to “recreate” the input, i.e., the input and the target data are the same. In other words: you’re trying to output the same thing you were input, but compressed in some way. In effect, we want a few small nodes in the middle to really learn the data at a conceptual level, producing a compact representation that in some way captures the core features of our input. 22

- 23. David Corne, and Nick Taylor, Heriot-Watt University - dwcorne@gmail.com These slides and related resources: http://www.macs.hw.ac.uk/~dwcorne/Teaching/dmml.html

- 26. David Corne, and Nick Taylor, Heriot-Watt University - dwcorne@gmail.com These slides and related resources: http://www.macs.hw.ac.uk/~dwcorne/Teaching/dmml.html

- 28. DBMs vs. DBNs

- 30. Convolutional Neural Nets (CNN) Convolution layers a feature detector that automagically learns to filter out not needed information from an input by using convolution kernel. Pooling layers compute the max or average value of a particular feature over a region of the input data (downsizing of input images).Also helps to detect objects in some unusual places and reduces memory size.

- 31. CNN High accuracy for image applications – Breaking all records and doing it using just raw pixel features. Special purpose net – Just for images or problems with strong grid-like local spatial/temporal correlation Once trained on one problem (e.g. vision) could use same net (often tuned) for a new similar problem – general creator of vision features Unlike traditional nets, handles variable sized inputs Same filters and weights, just convolve across different sized image and dynamically scale size of pooling regions (not # of nodes), to normalize Different sized images, different length speech segments, etc. Lots of hand crafting and CV tuning to find the right recipe of receptive fields, layer interconnections, etc. Lots more Hyperparameters than standard nets, and even than other deep networks, since the structures of CNNs are more handcrafted CNNs getting wider and deeper with speed-up techniques (e.g. GPU, ReLU, etc.) and lots of current research, excitement, and success 31

- 32. Recurrent Neural Nets (RNN)

- 33. Long Short-Term Memory RNN (LSTM)

- 37. Deep Learning in Computer Vision Image Segmentation

- 38. Deep Learning in Computer Vision Image Captioning

- 39. Deep Learning in Computer Vision Image Compression

- 40. Deep Learning in Computer Vision Image Localization

- 41. Deep Learning in Computer Vision Image Transformation –Adding features

- 42. Deep Learning in Computer Vision Image Colorization

- 44. Style Transfer –morph images into paintings

- 45. Deep Learning in Audio Processing Sound Generation

- 46. Deep Learning in NLP Syntax Parsing

- 47. Deep Learning in NLP Generating Text

- 48. Deep Learning in Medicine Skin Cancer Diagnoses

- 49. Deep Learning in Medicine Detection of diabetic eye disease

- 50. Deep Learning in Science Saving Energy

- 51. Deep Learning in Cryptography Learning to encrypt and decrypt communication

- 52. Autonomous drone navigation with deep learning

- 54. Finally .. That’s the basic idea.. There are many types of deep learning, different kinds of autoencoder, variations on architectures and training algorithms, etc… Very fast growing area …

- 55. Thanks for attention 2017 //H u d a

Editor's Notes

- Pre-Traiining

- If do full supervised, we may not bet the benefits of building up the incrementally abstracted feature space Steps 1-4 called pre-training as it gets the weights close enough so that standard training in step 5 can be effective Do fine tuning for sure if lots of labeled data, if little labeled data, not as helpful.

- Though Deep Nets done first, start with auto-encoders because they are simpler Mention Zipser auotencoder with reverse engineering, then Cottrell compression where unable to reverse engineer If h is smaller than x then “undercomplete” autoencoding – also would use “regularized” autoencoding Can use just new features in the new training set or concatenate both original and new

- Dynamic size – Pooling region just sums/maxes over an area with one final weight so no hard changes when we adjust pool region size Simard 2003, Simple consistent CNN structure 5x5 with 2x2 subsampling with number of features 5 in first C-layer, 50 in next, until too small. Don’t actually used pool layer as instead just connect every other node which samples rather than max/average. Each layer reduces feature size by (n-3)/2. Just two layers for mnist. They also use elastic distortions which is a type of jitter to get increased data. 99.6% - best at the time, Distortions also help a lot with standard MLP Thus an approach with less Hyperparameter fiddling Ciresan and Schmidhuber 2012, Multi column DNN. CNN with depth 6-10 (deeper if initial input image is bigger), and wider on fields, 1-2 hidden layers in MLP, columns are CNNs (an ensemble with different parameters, features, etc.) where their output is averaged, jitter inputs, multi-day GPU training, annealed LR (.001 dropping to .00003) 99.76% mnist