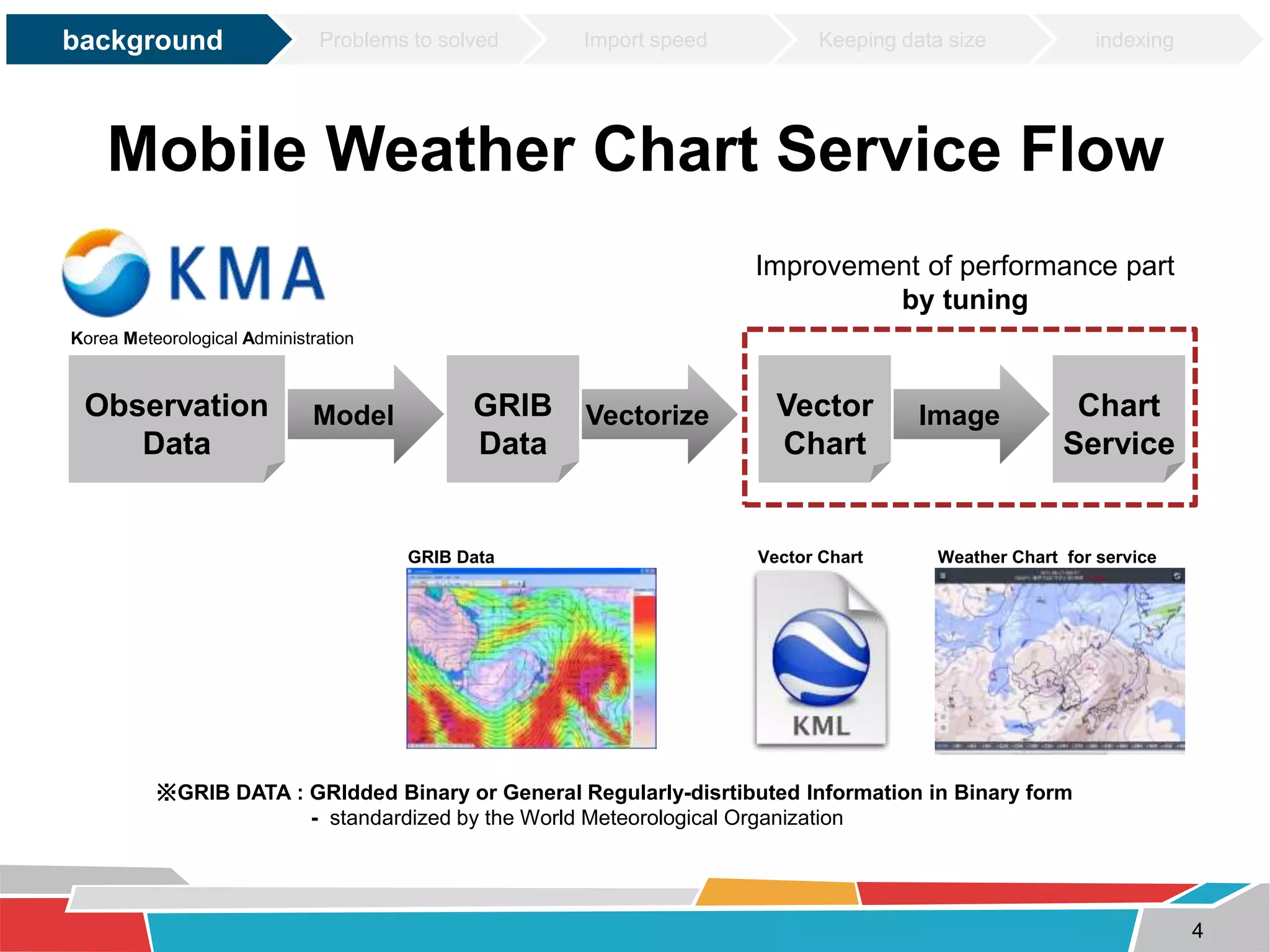

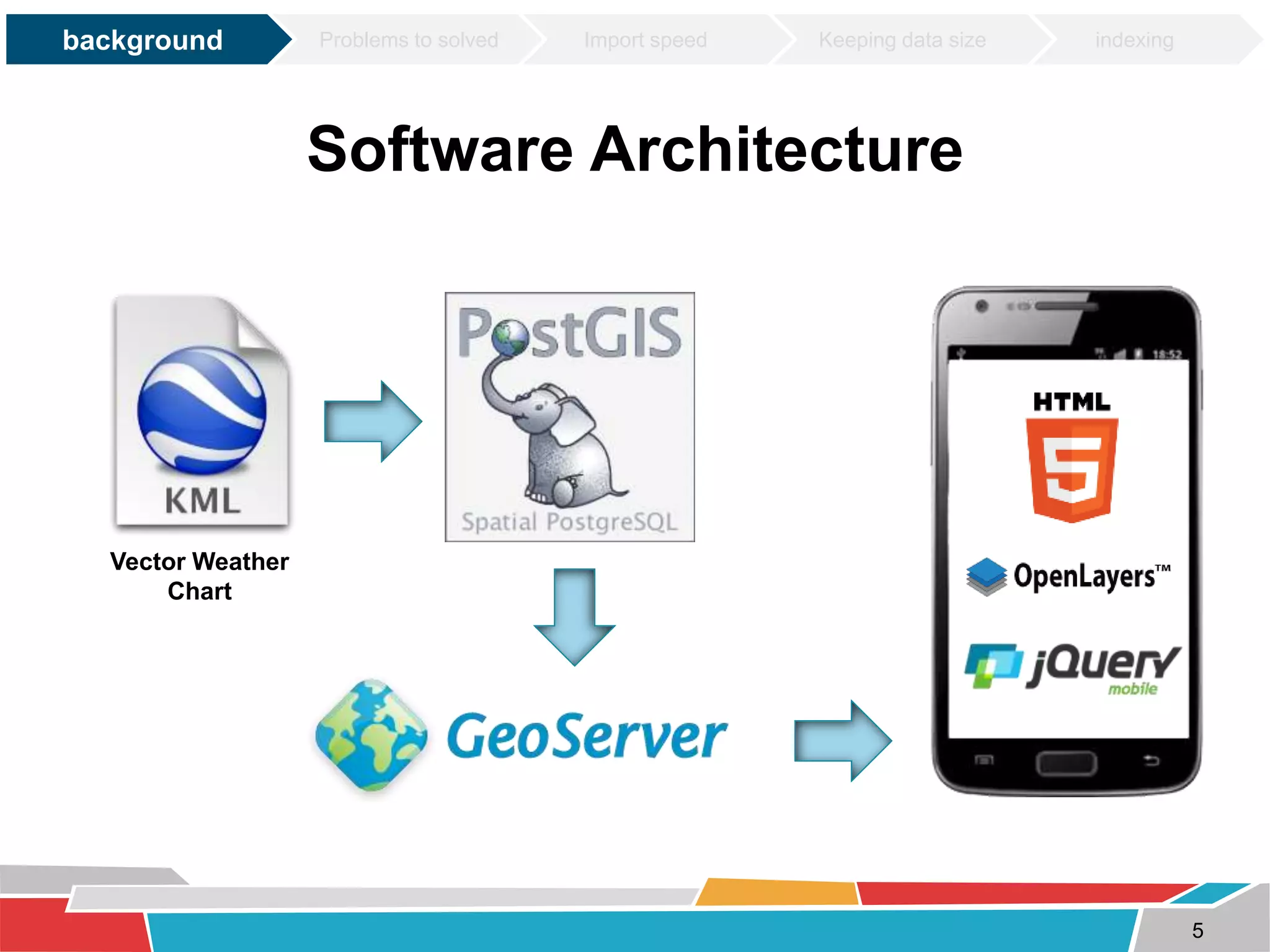

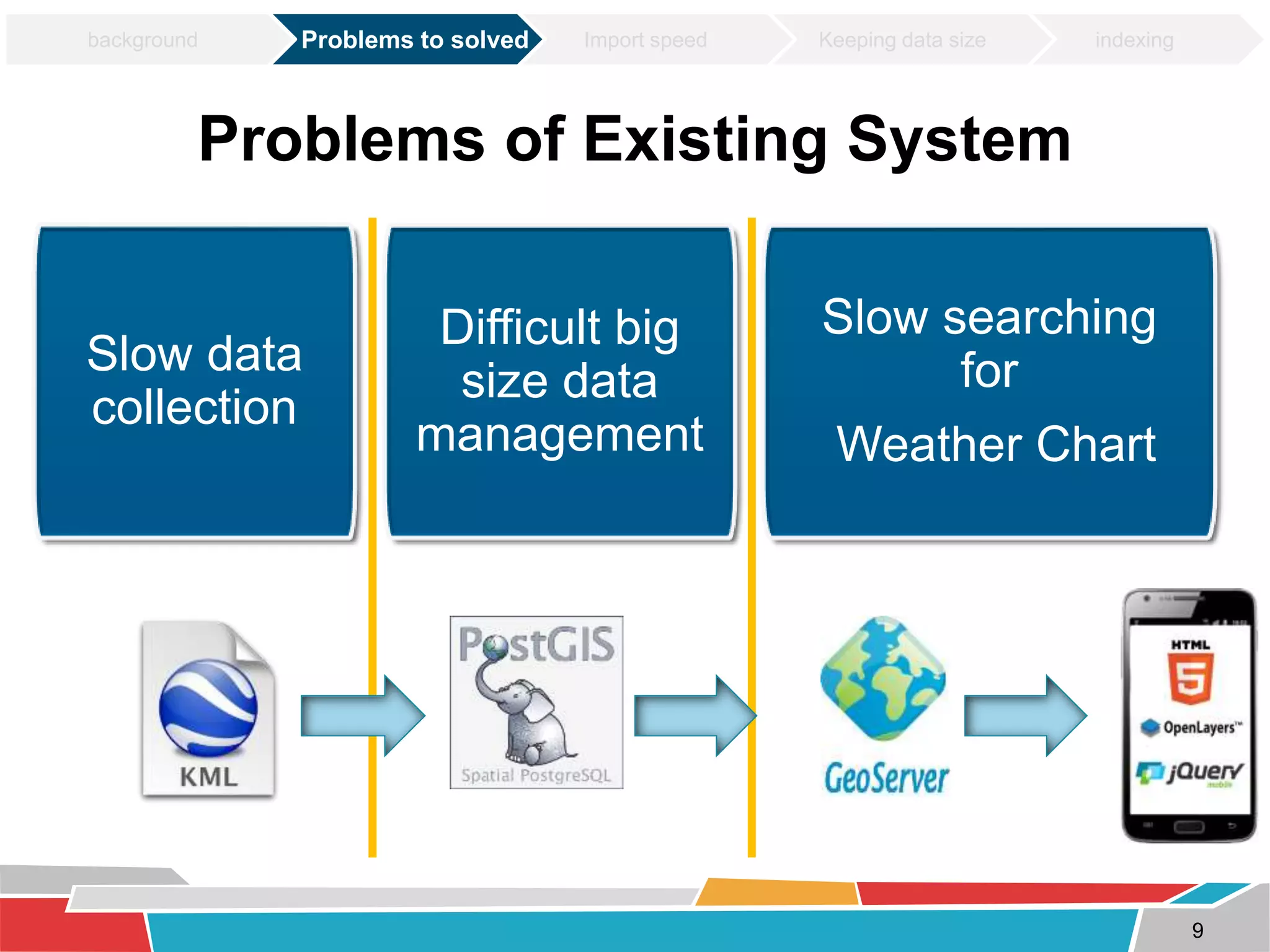

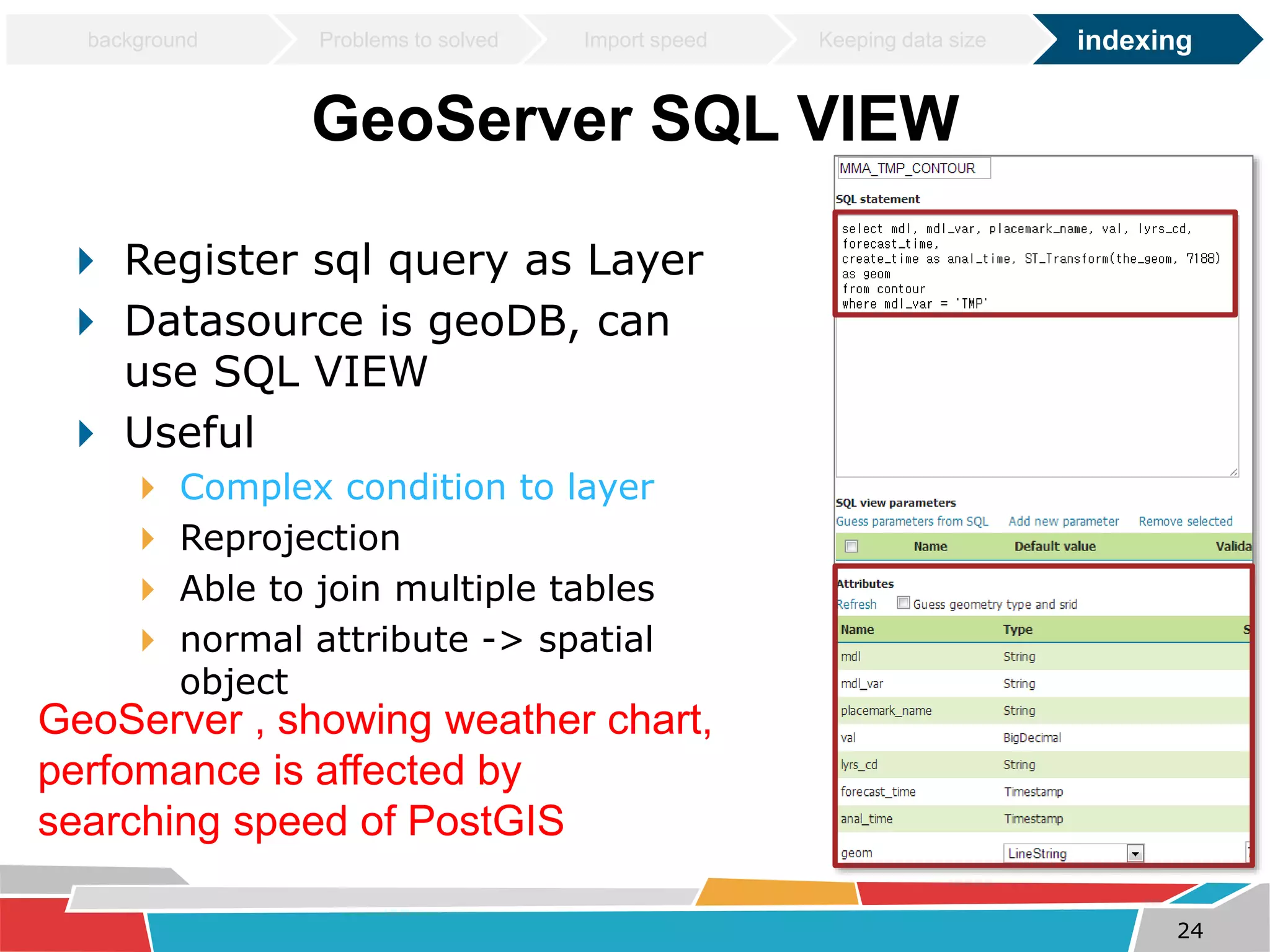

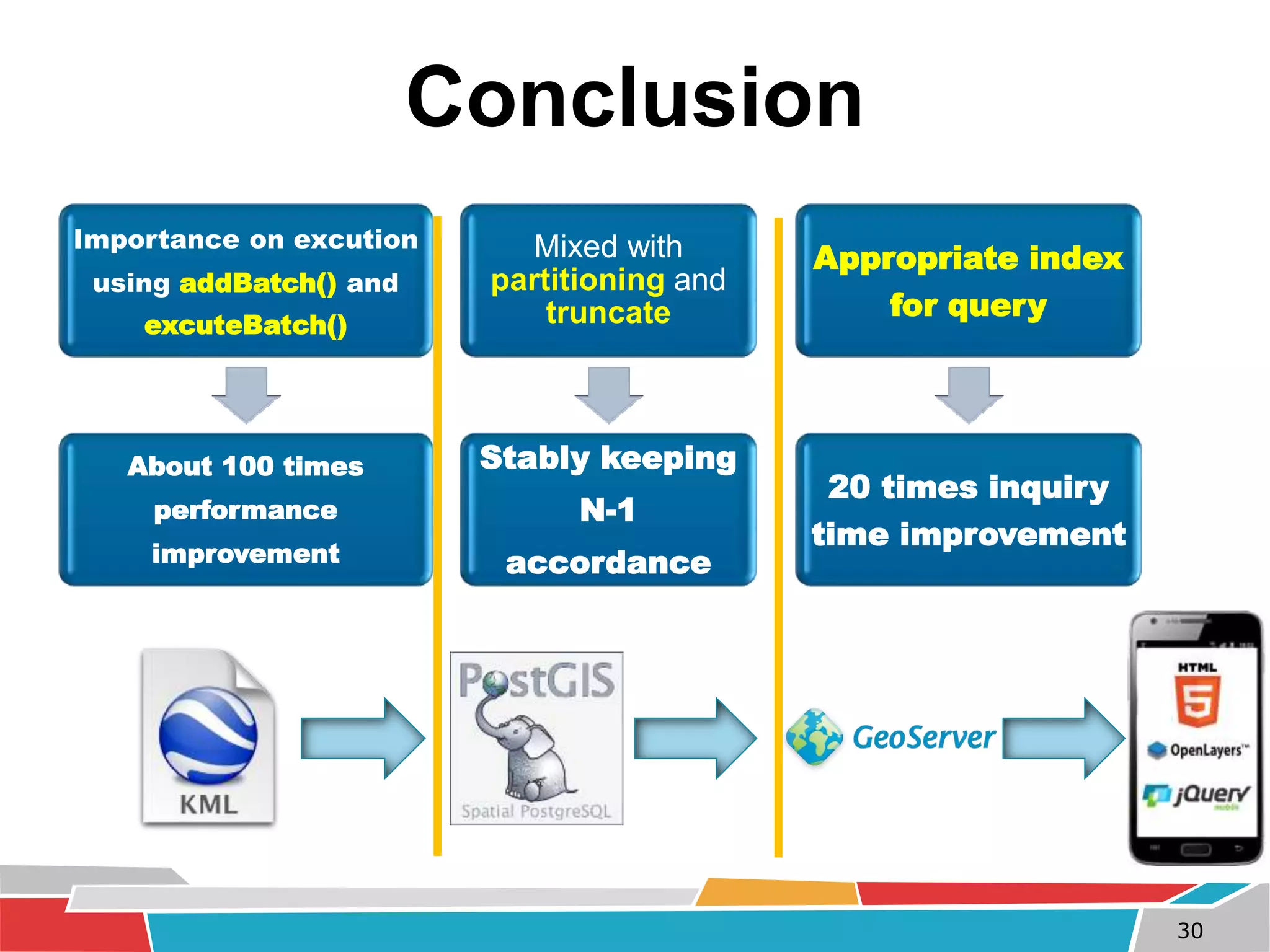

This document describes tuning techniques used to speed up a mobile weather chart service built on PostGIS and GeoServer. The initial system was slow because it had not been tuned. The techniques included:

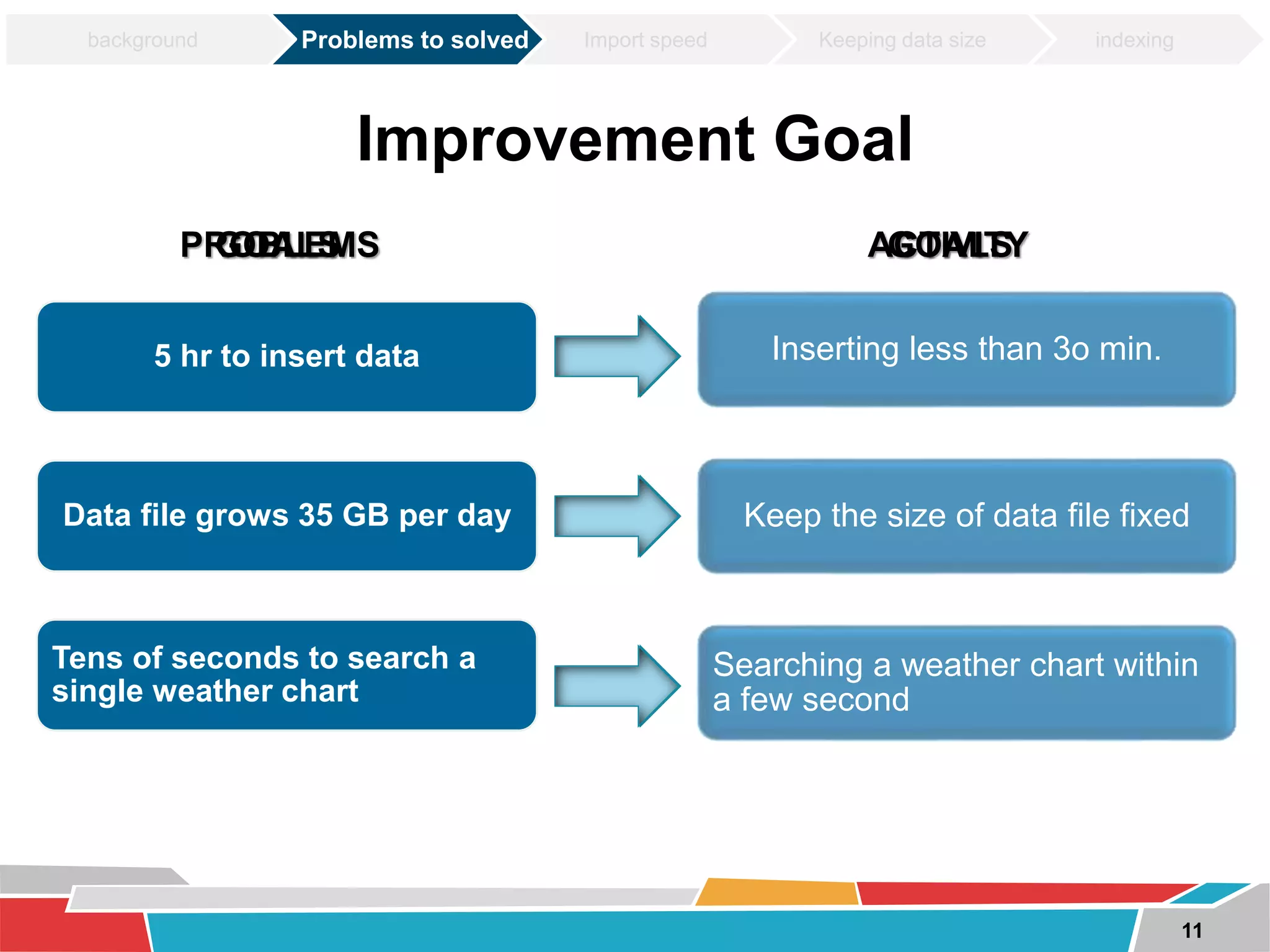

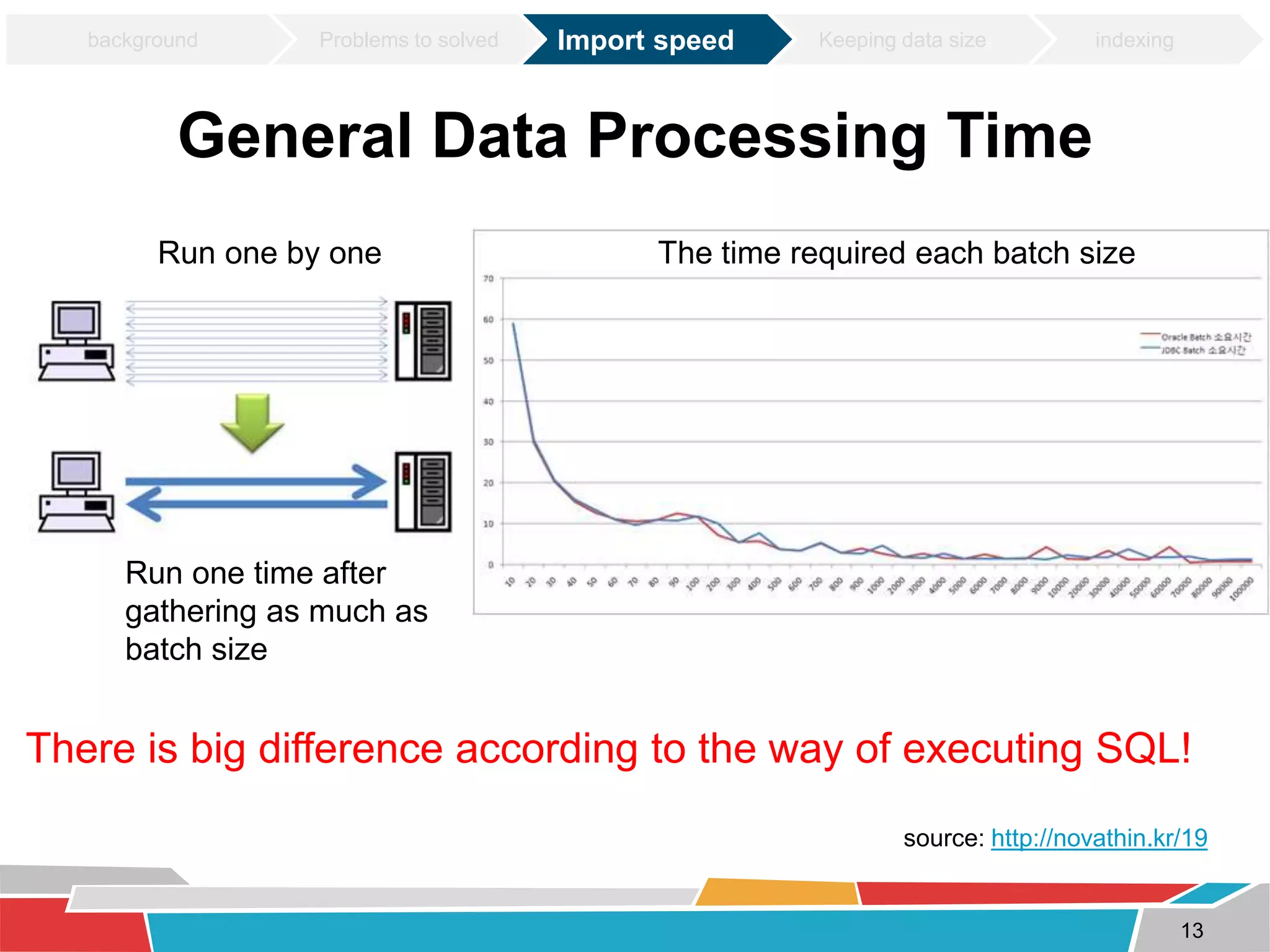

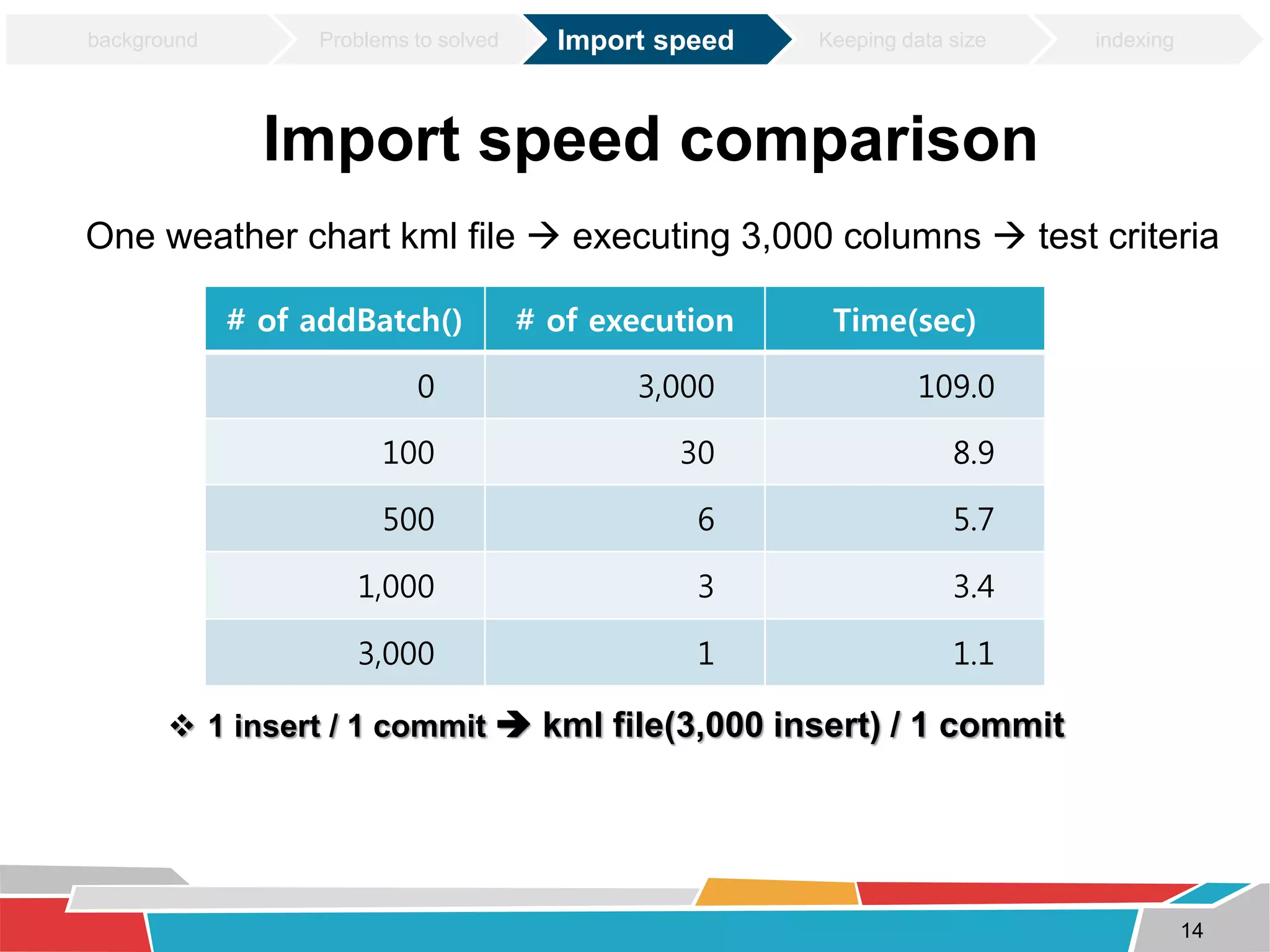

1) Improving import speed by batching inserts instead of issuing them one at a time, which cut import time from over 5 hours to under 30 minutes.
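As a rough illustration of batched inserts, the sketch below groups rows into chunks and sends each chunk with one `executemany` call inside a single transaction. It is not the service's actual importer: the table `weather_points`, its columns, and the batch size of 1000 are hypothetical, and Python's built-in `sqlite3` stands in for PostgreSQL/PostGIS only so the example runs without a database server.

```python
import sqlite3
from itertools import islice


def batched(rows, size):
    """Yield lists of at most `size` rows from any iterable."""
    it = iter(rows)
    while True:
        chunk = list(islice(it, size))
        if not chunk:
            return
        yield chunk


def import_rows(conn, rows, batch_size=1000):
    """Insert rows batch by batch; returns the number of batches sent."""
    batches = 0
    for chunk in batched(rows, batch_size):
        with conn:  # one transaction (one commit) per batch, not per row
            conn.executemany(
                "INSERT INTO weather_points (lon, lat, valid_time, value) "
                "VALUES (?, ?, ?, ?)",
                chunk,
            )
        batches += 1
    return batches


# Hypothetical schema and synthetic data, for illustration only.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE weather_points "
    "(lon REAL, lat REAL, valid_time TEXT, value REAL)"
)
rows = ((130.0 + i * 0.01, 33.0, "2024-01-01T00:00", float(i)) for i in range(2500))
batch_count = import_rows(conn, rows, batch_size=1000)
row_count = conn.execute("SELECT COUNT(*) FROM weather_points").fetchone()[0]
```

The gain comes from paying the per-statement and per-commit overhead once per batch rather than once per row. The best batch size depends on row width and the database driver, so it is worth measuring.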

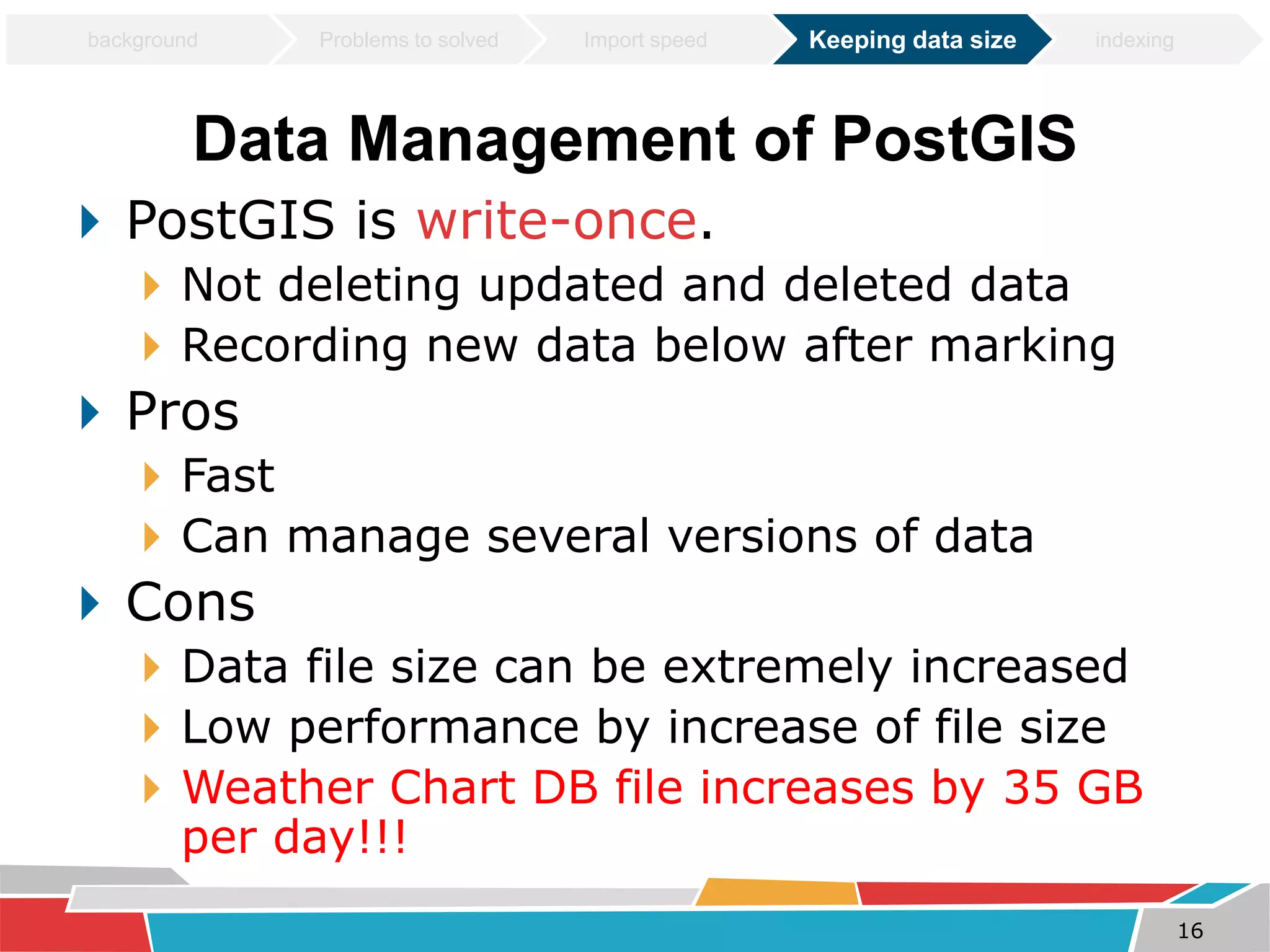

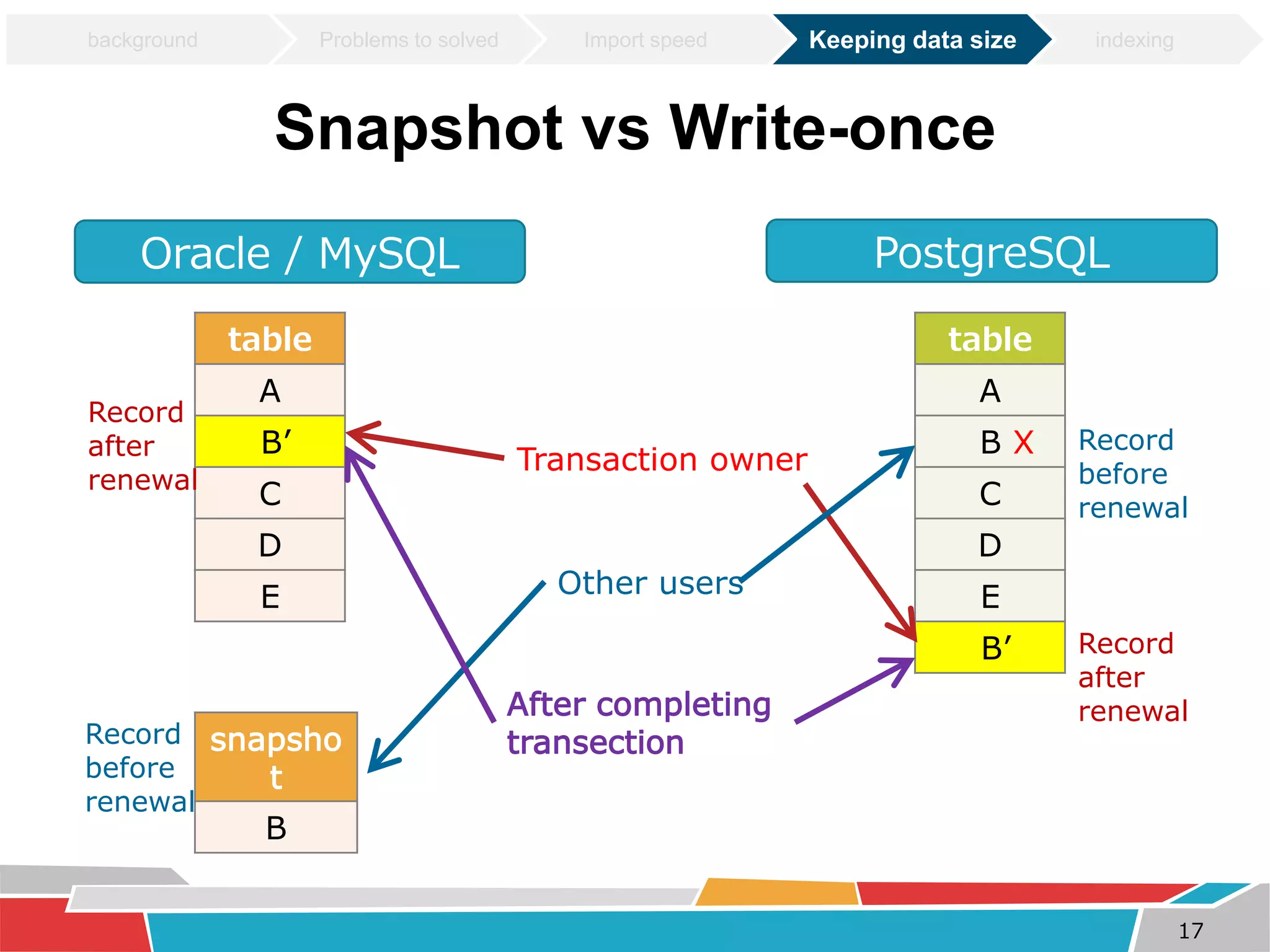

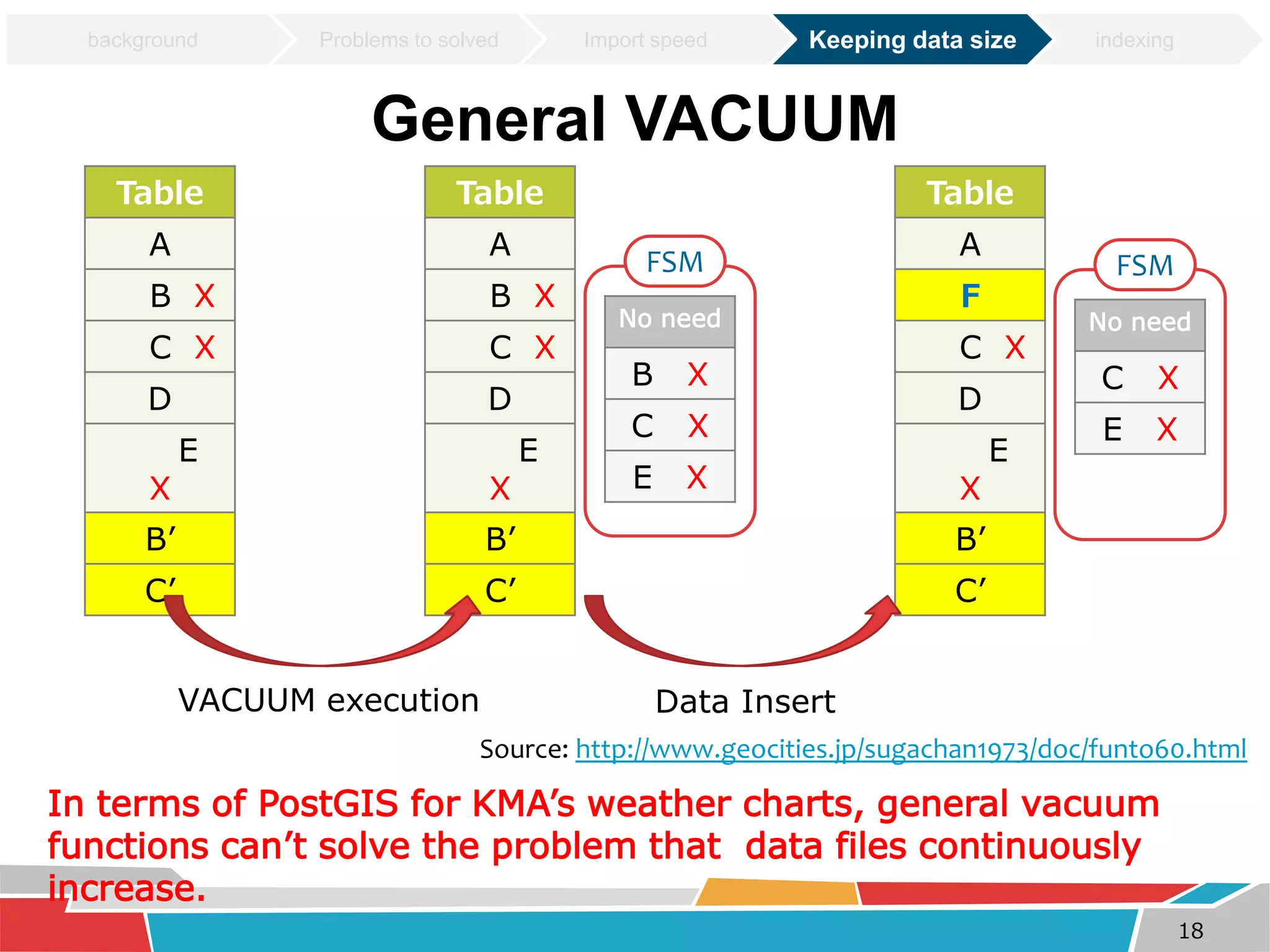

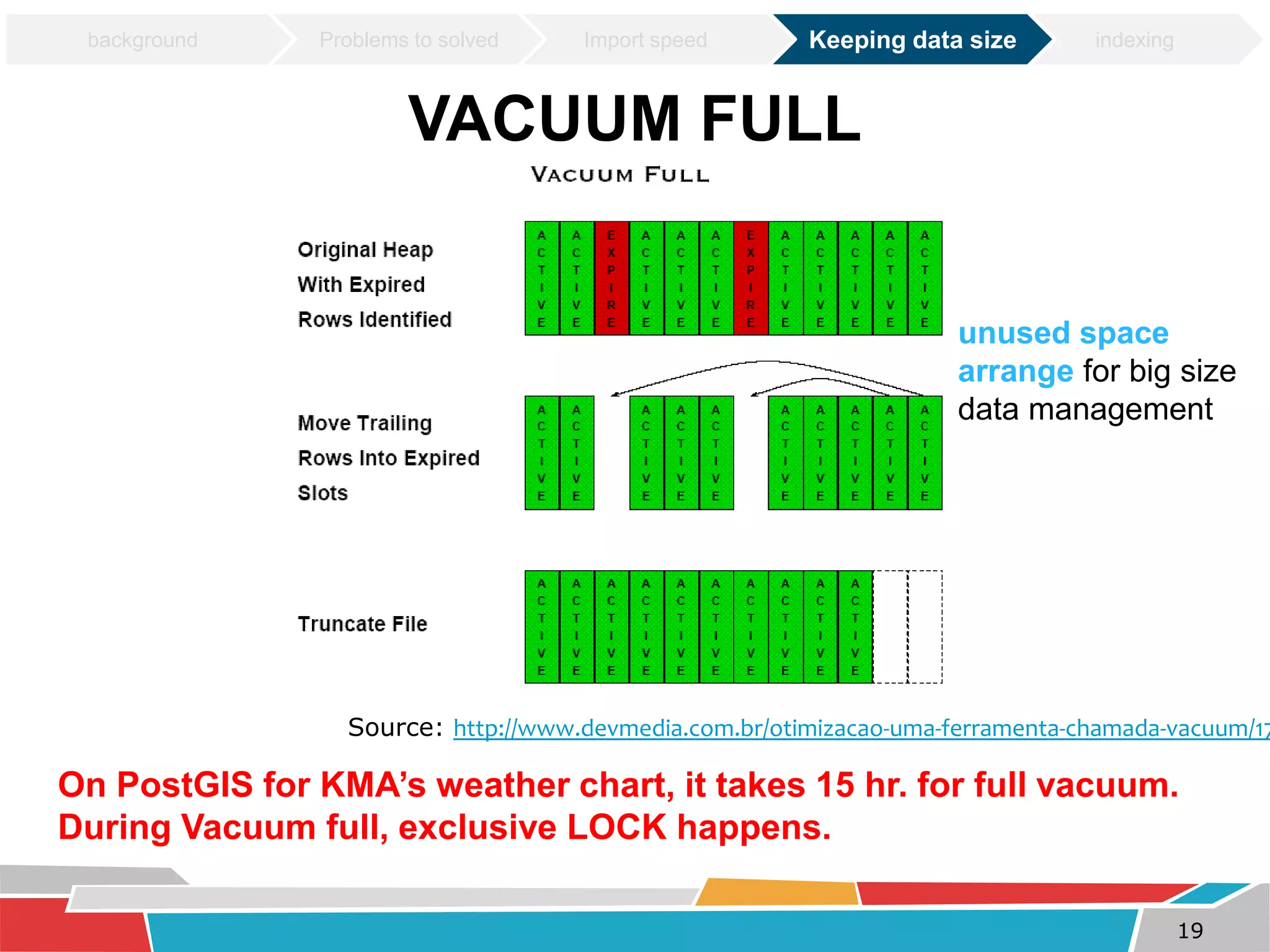

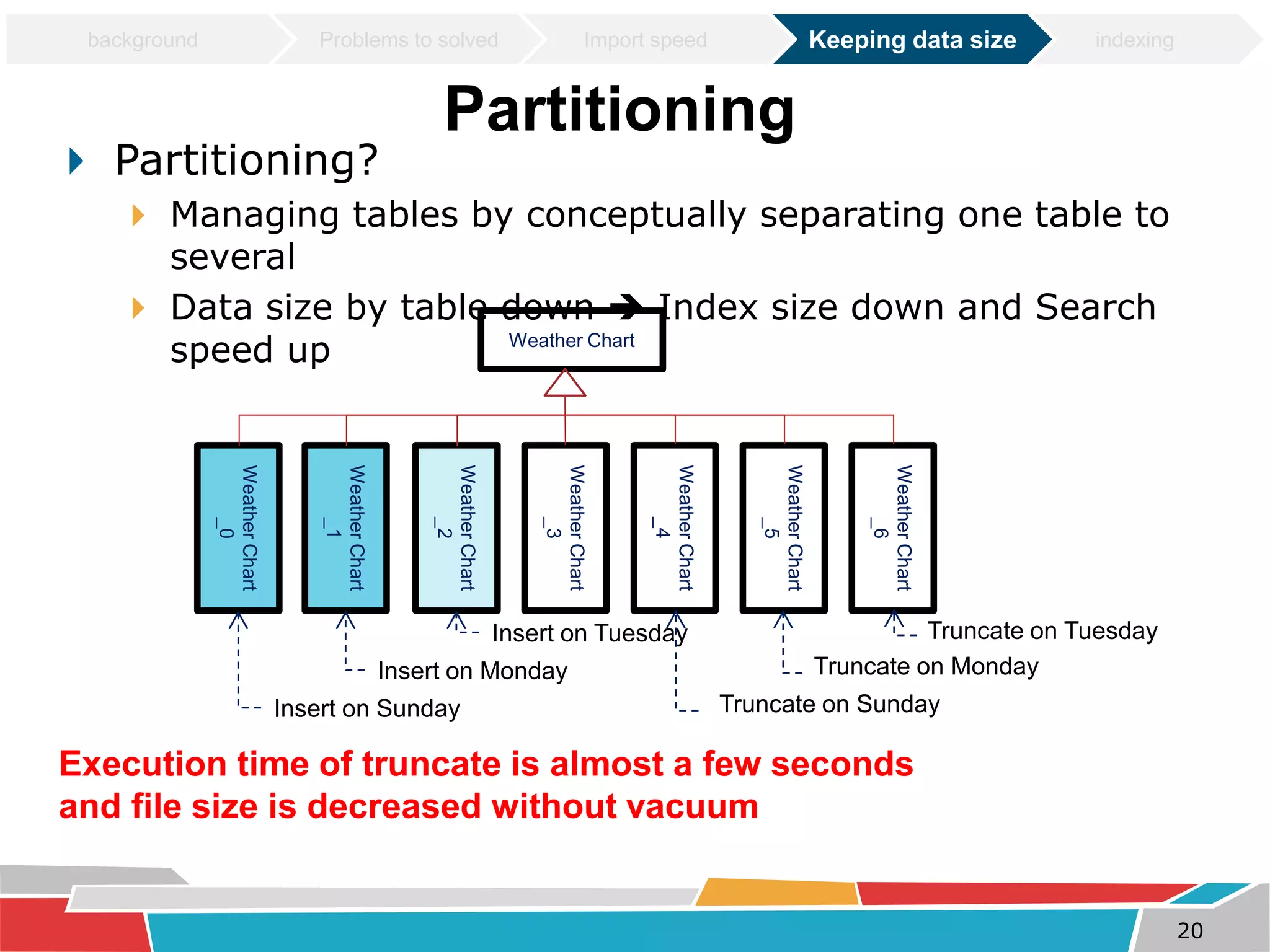

2) Managing data size by partitioning tables and truncating partitions daily, so that file sizes stay fixed instead of growing.
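One way to picture the daily truncation is as a fixed ring of partitions that is reused over time. The sketch below is an assumption about how such a rotation could be organized, not the scheme used by the service: the partition count of 7, the base table name `weather_points`, and the `_p<slot>` naming are all hypothetical. It only builds the SQL text and does not connect to a database.

```python
from datetime import date, timedelta

PARTITION_COUNT = 7  # assumed retention window in days (hypothetical)


def partition_name(day: date, base: str = "weather_points") -> str:
    """Map a calendar day onto one of PARTITION_COUNT reusable partitions."""
    slot = day.toordinal() % PARTITION_COUNT
    return f"{base}_p{slot}"


def daily_rotation_sql(today: date, base: str = "weather_points") -> str:
    """Build the statement that empties the partition tomorrow's data will reuse."""
    tomorrow = today + timedelta(days=1)
    return f"TRUNCATE TABLE {partition_name(tomorrow, base)};"


# Example: the statement a daily job would run on 2024-01-01.
statement = daily_rotation_sql(date(2024, 1, 1))
```

Because `TRUNCATE` drops a partition's contents wholesale rather than deleting row by row, the space is reclaimed immediately and the total footprint stays bounded by the number of partitions.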

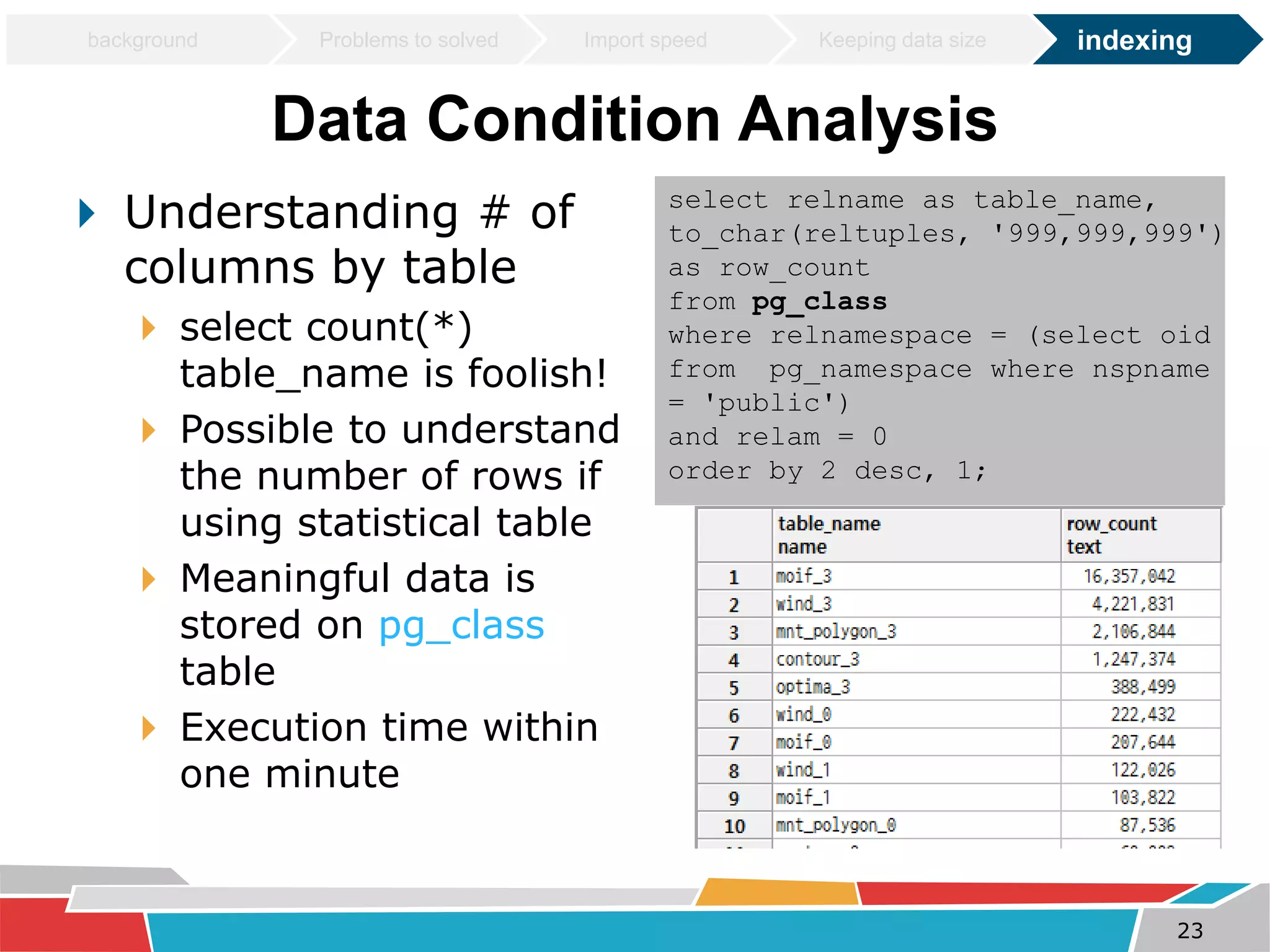

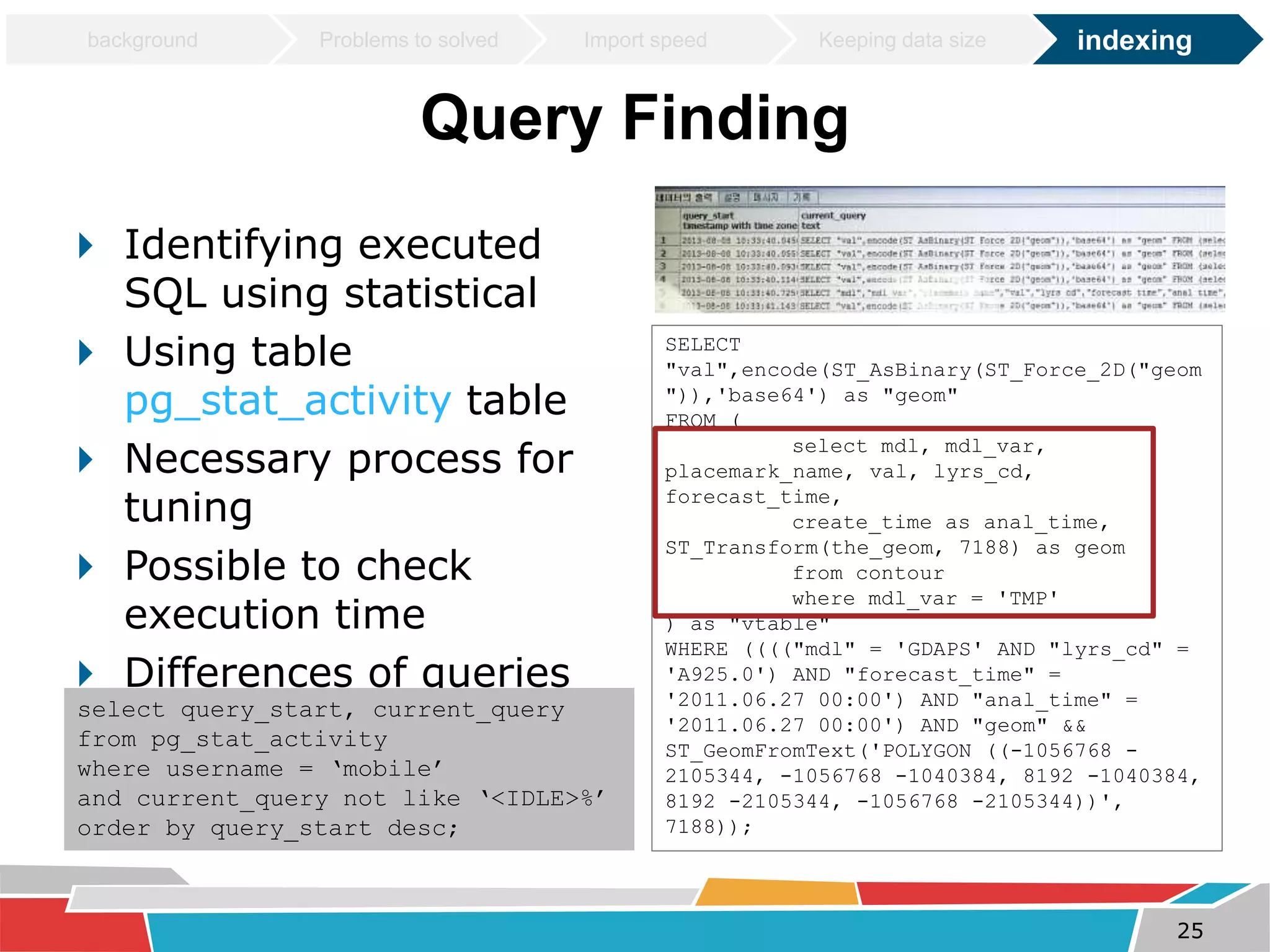

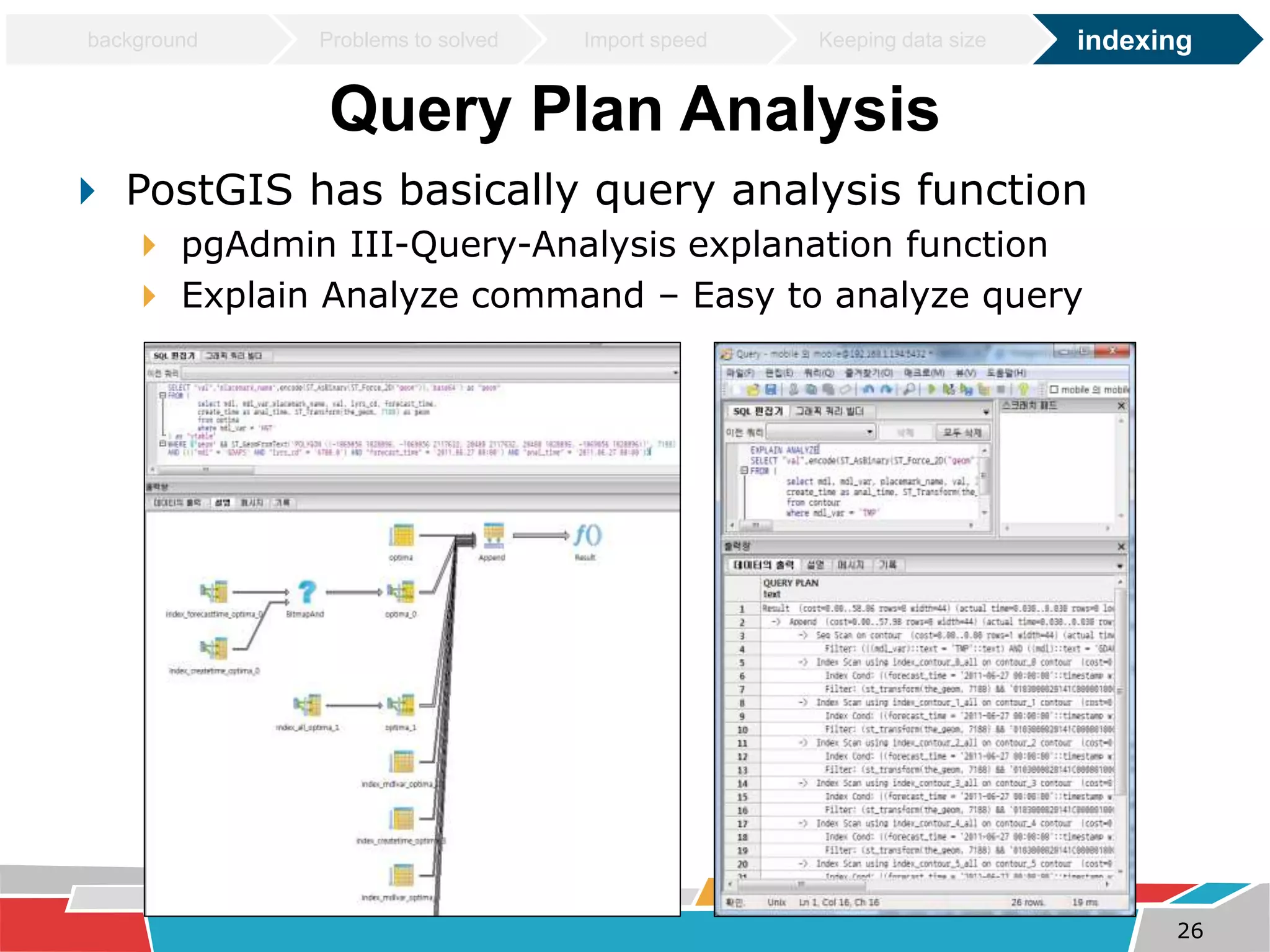

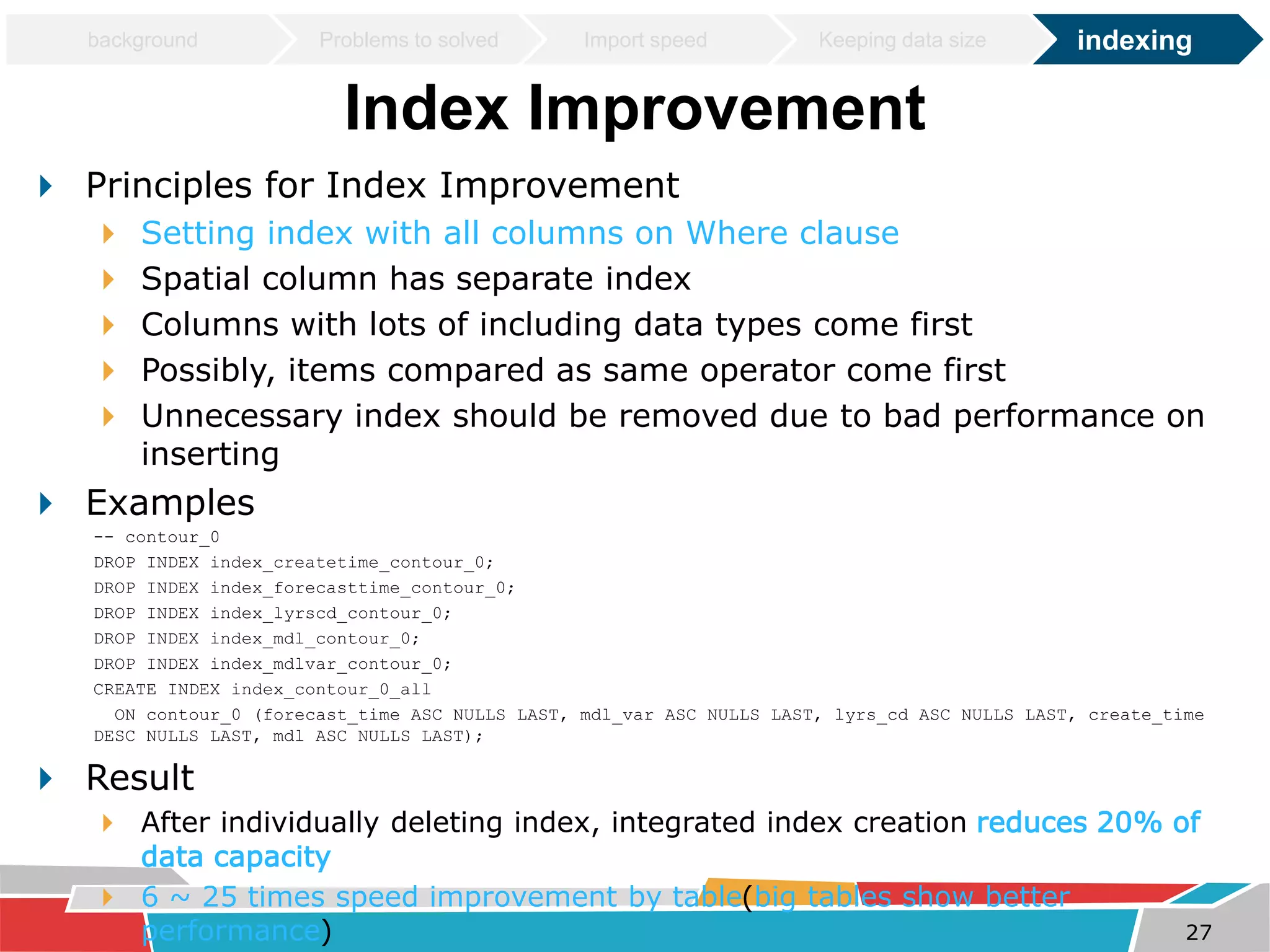

3) Improving query speeds by analyzing queries, redesigning indexes around frequently searched columns, and reducing index sizes. Together, these tuning efforts made queries up to 25 times faster.
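The analyze-then-index loop can be sketched as: inspect the query plan, add a narrow index on the searched column, and inspect the plan again. In the example below, SQLite's `EXPLAIN QUERY PLAN` is swapped in for PostgreSQL's `EXPLAIN` so the code runs without a server. The table, columns, and index name are hypothetical, and the plan text differs from what PostgreSQL would print.

```python
import sqlite3


def query_plan(conn, sql, params=()):
    """Return the planner's description of how it would run `sql`."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    return " | ".join(row[-1] for row in rows)  # last column holds the detail text


# Hypothetical schema and synthetic data, for illustration only.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE weather_points "
    "(id INTEGER PRIMARY KEY, valid_time TEXT, element TEXT, value REAL)"
)
conn.executemany(
    "INSERT INTO weather_points (valid_time, element, value) VALUES (?, ?, ?)",
    [(f"2024-01-01T{h:02d}:00", "temp", float(h)) for h in range(24)],
)

query = "SELECT value FROM weather_points WHERE valid_time = ?"
args = ("2024-01-01T06:00",)

plan_before = query_plan(conn, query, args)  # full table scan expected

# Index only the frequently searched column to keep the index small.
conn.execute("CREATE INDEX idx_weather_valid_time ON weather_points (valid_time)")

plan_after = query_plan(conn, query, args)  # should now use the index
```

Comparing `plan_before` with `plan_after` shows whether the planner actually picked up the new index, which is the check that matters before measuring timings.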