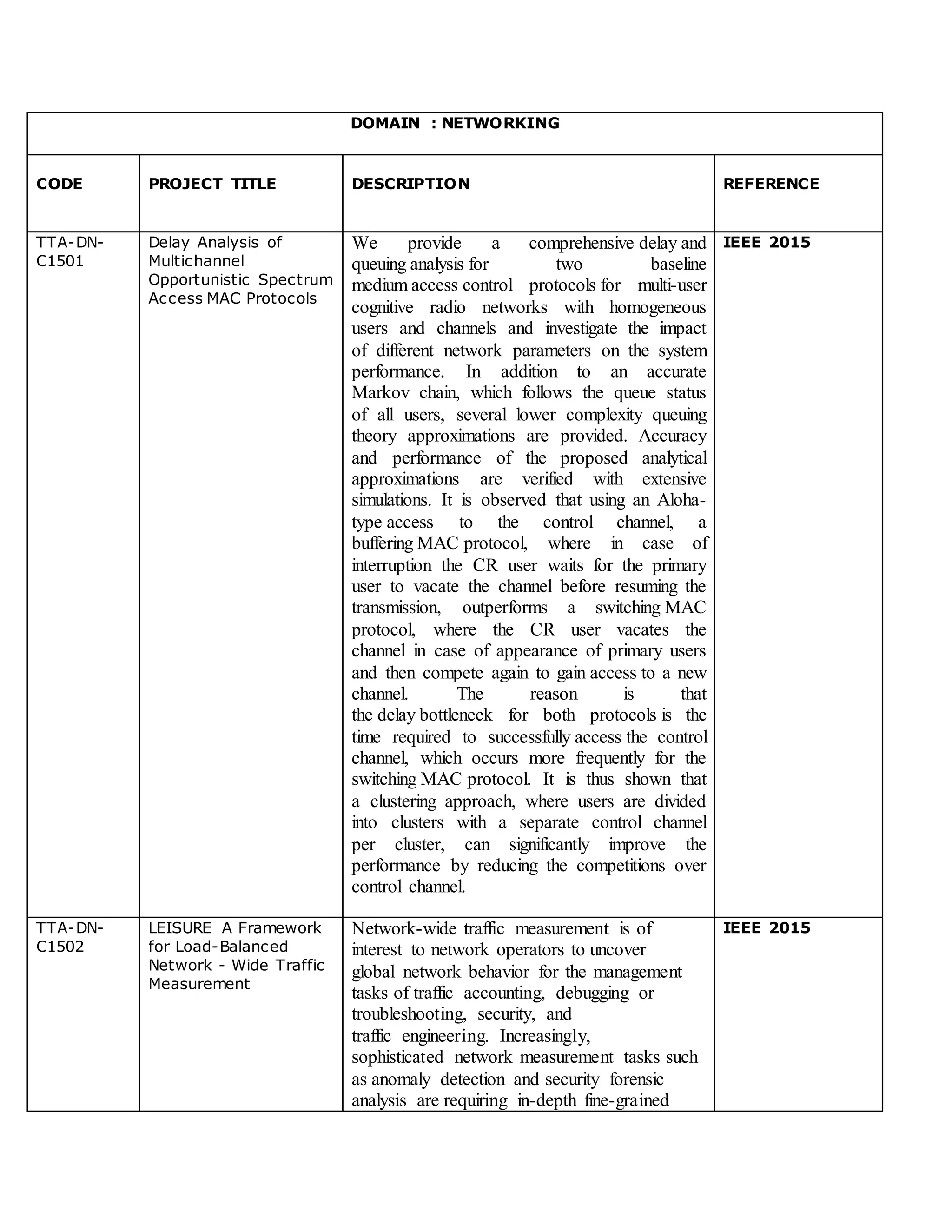

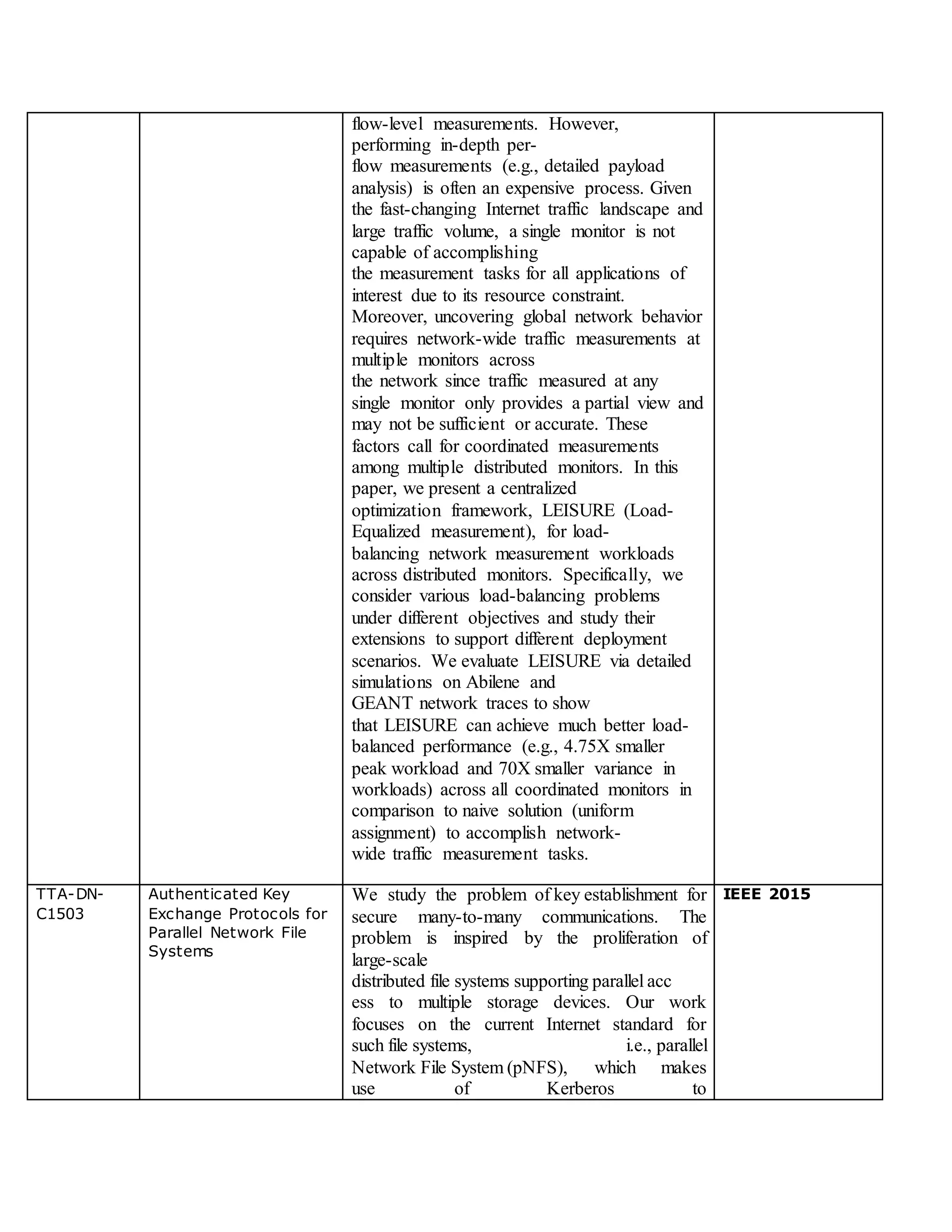

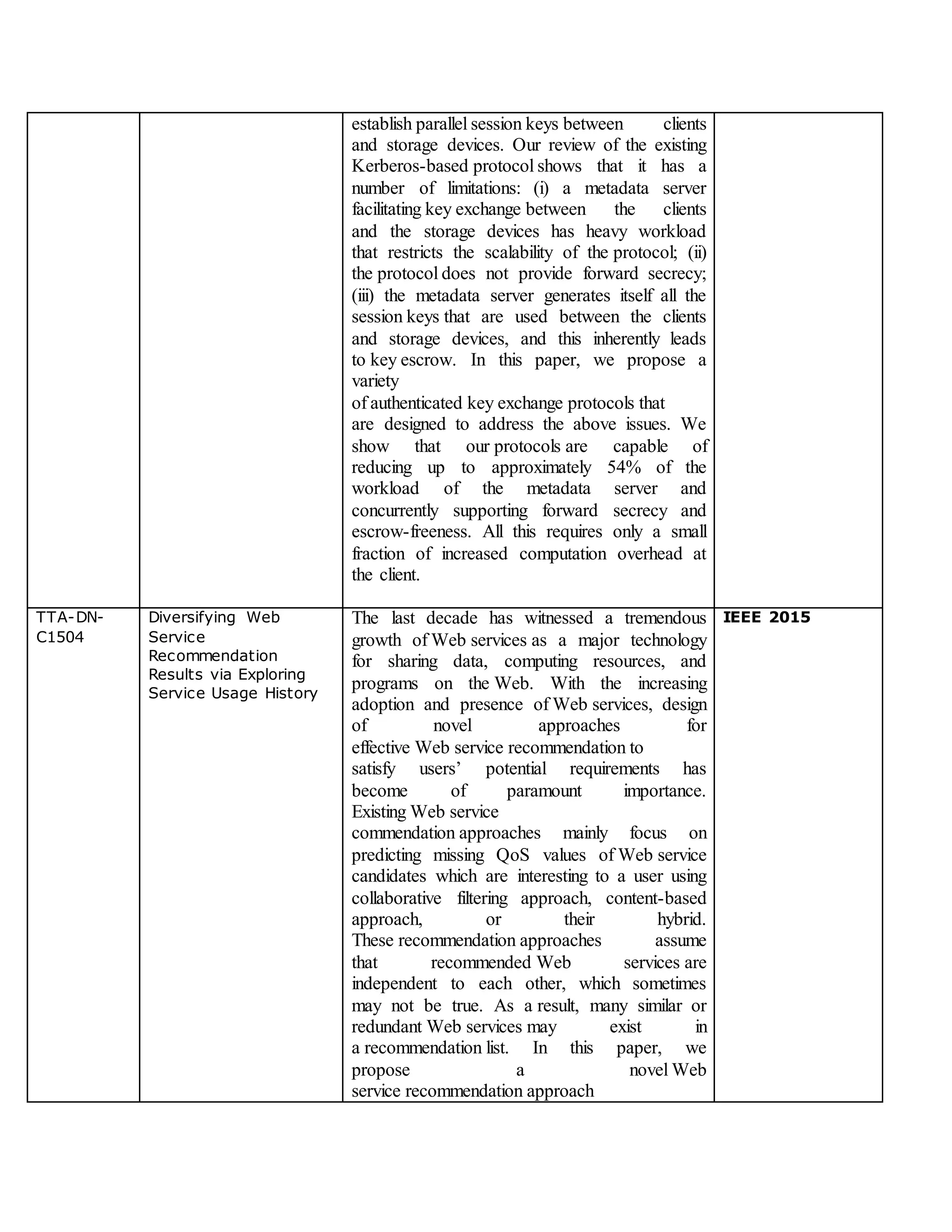

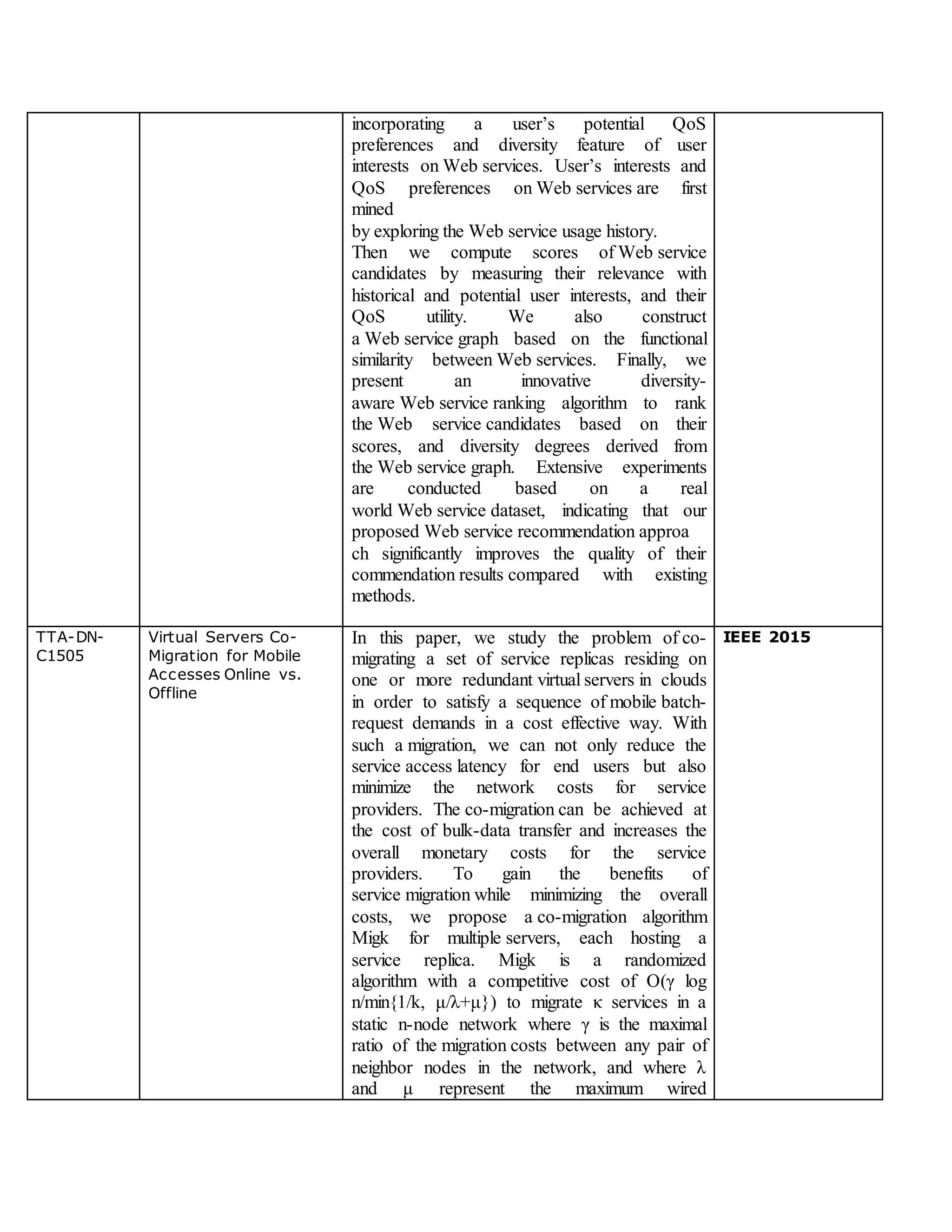

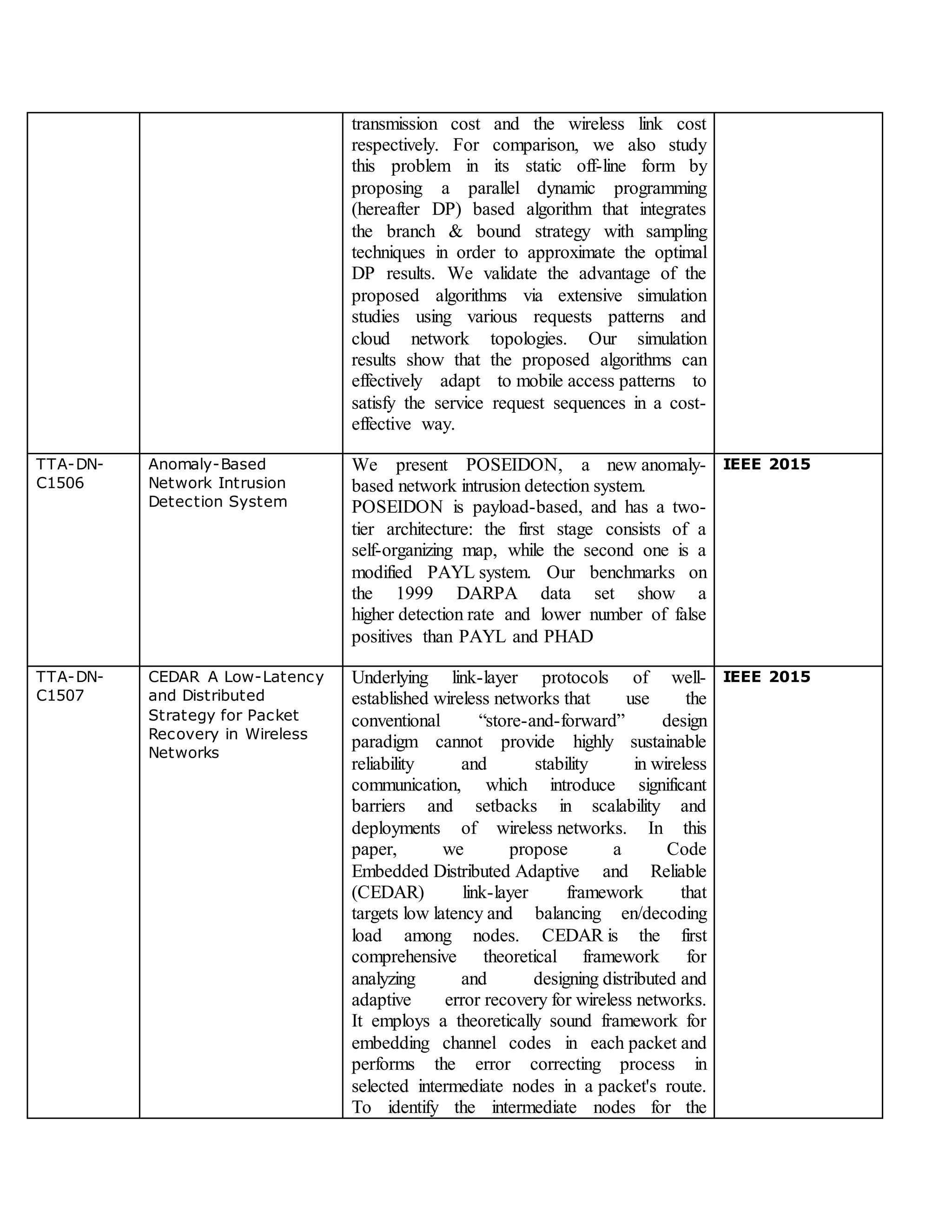

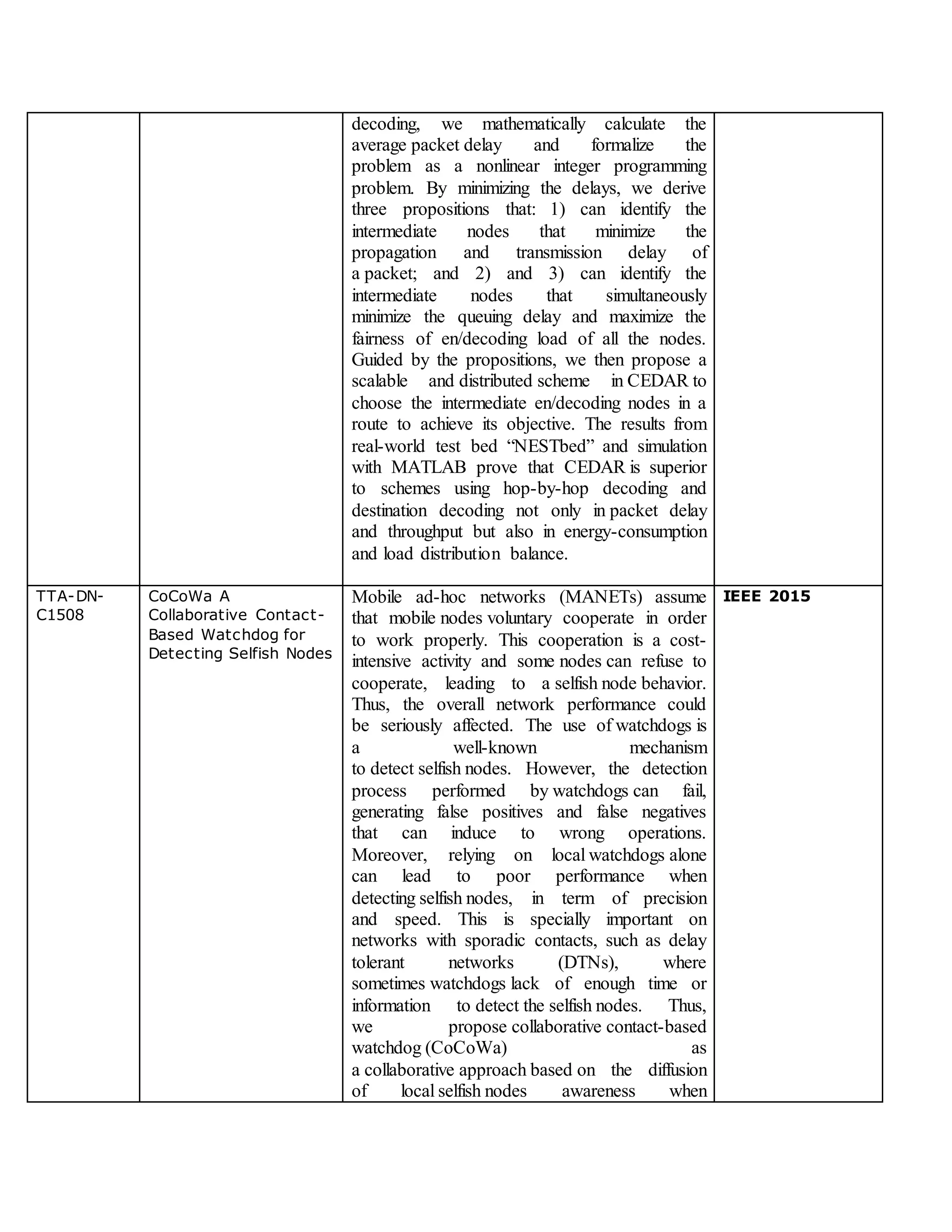

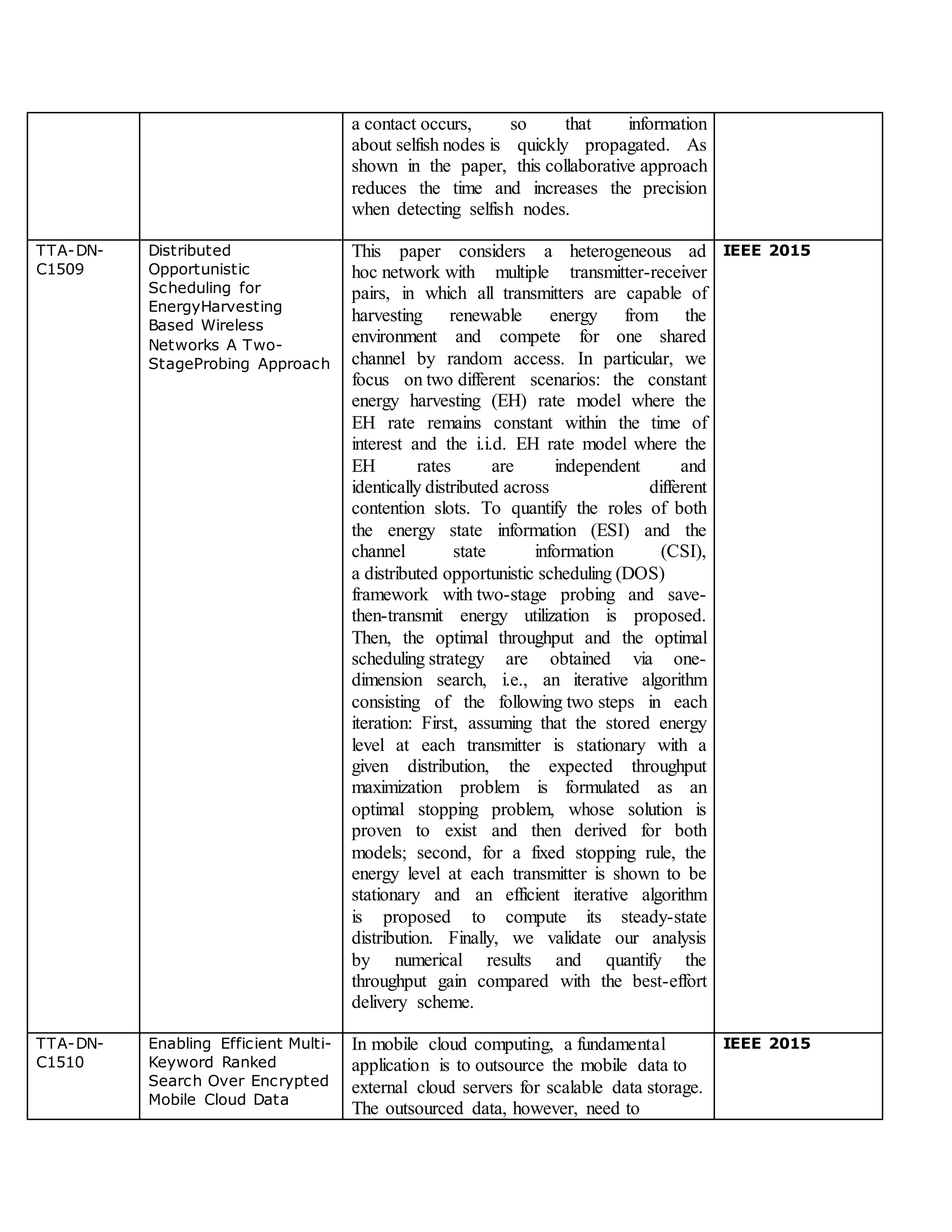

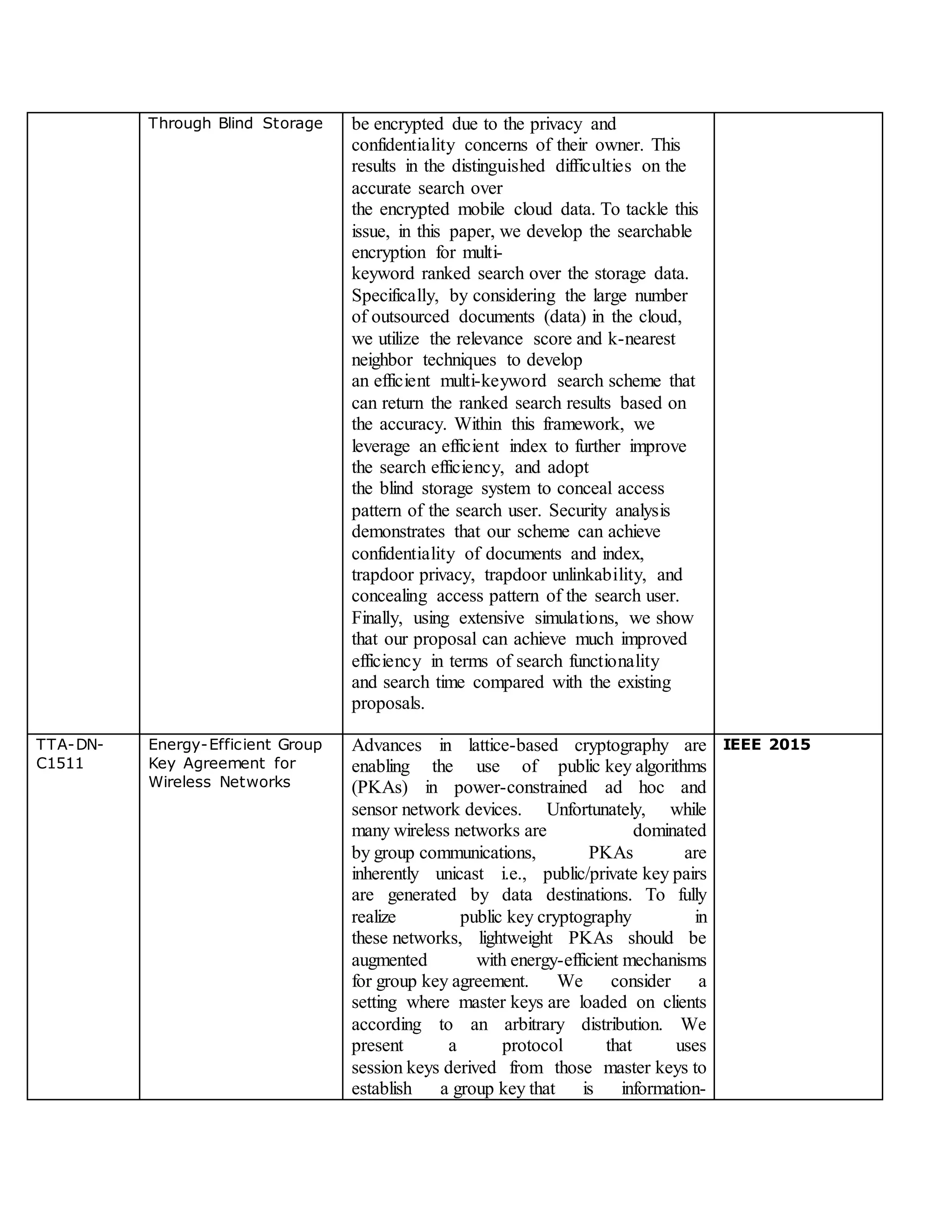

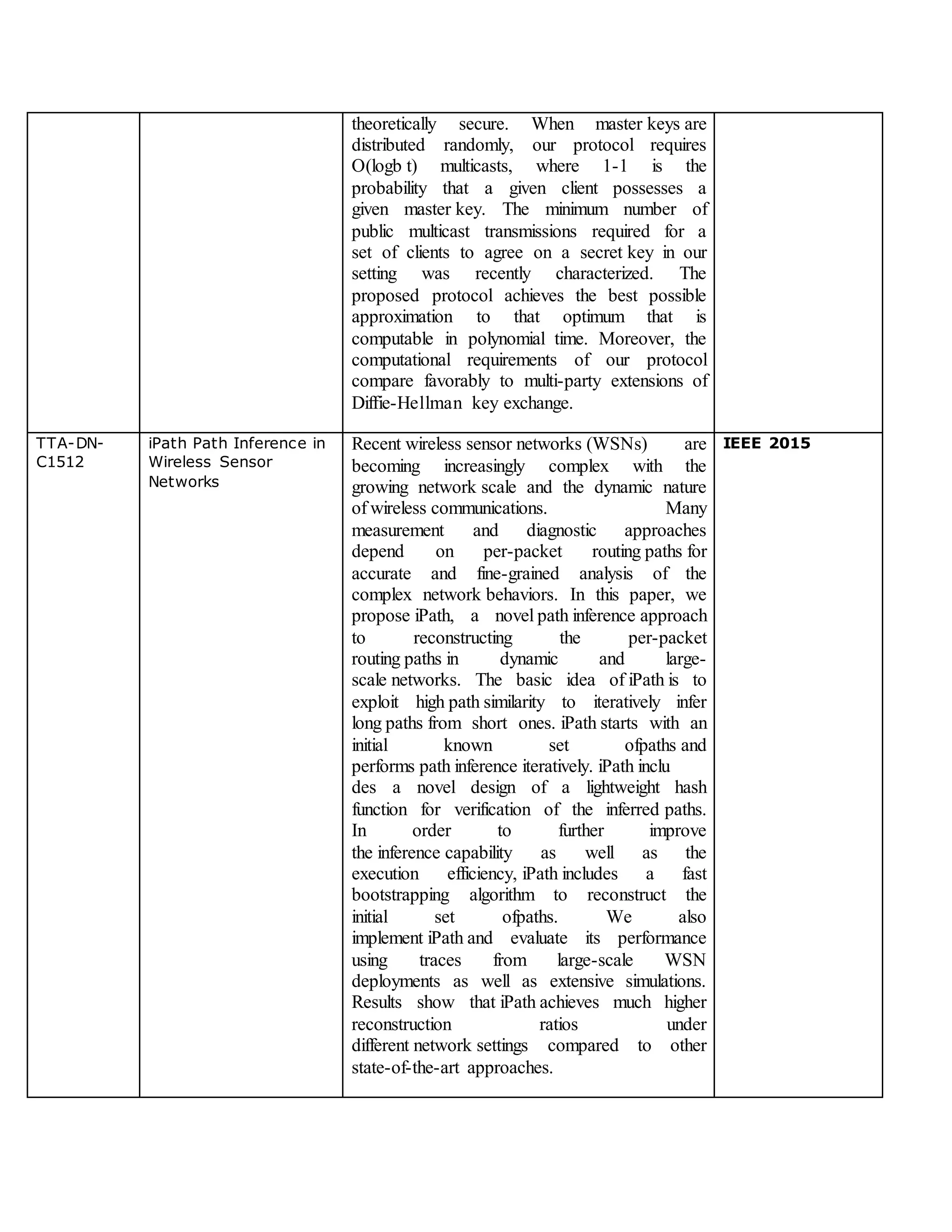

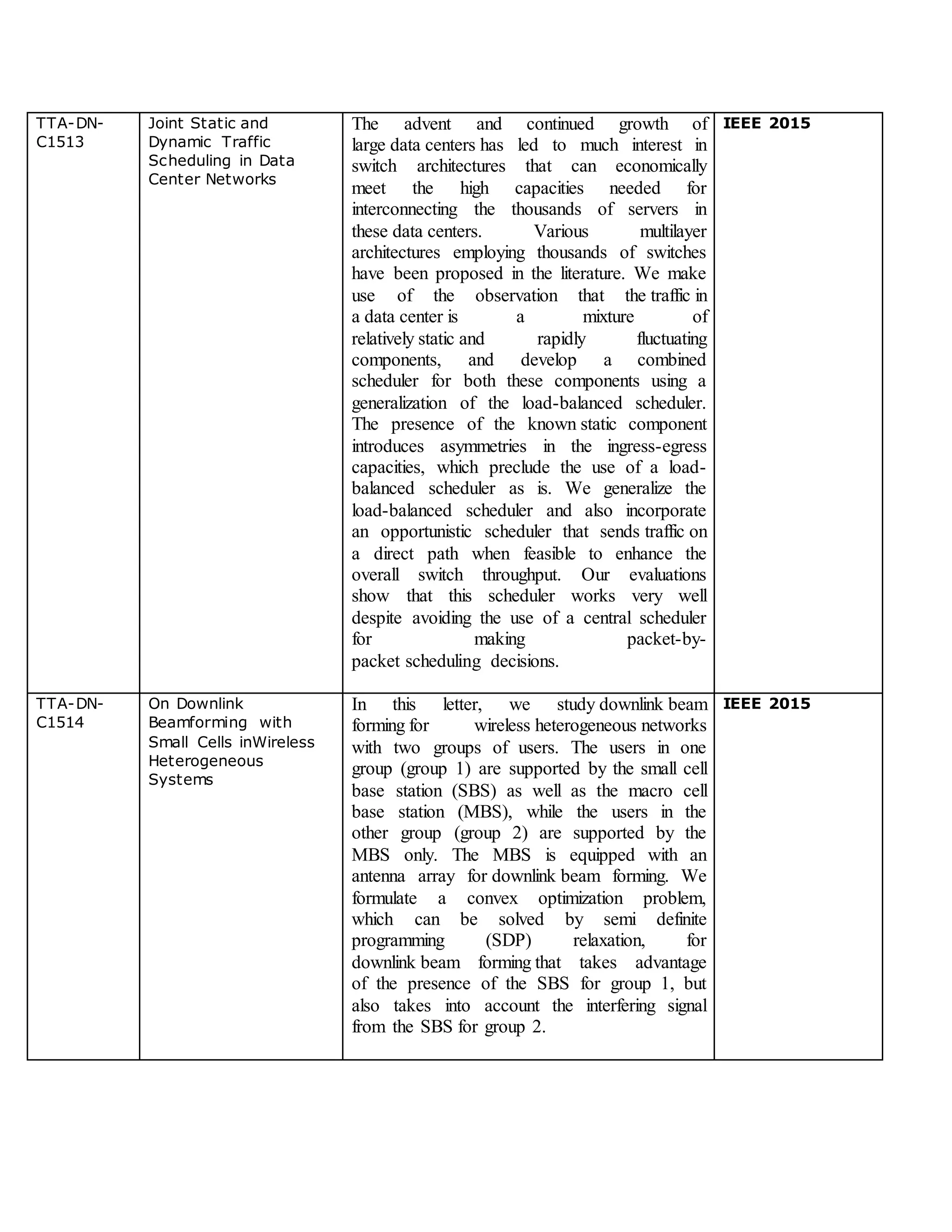

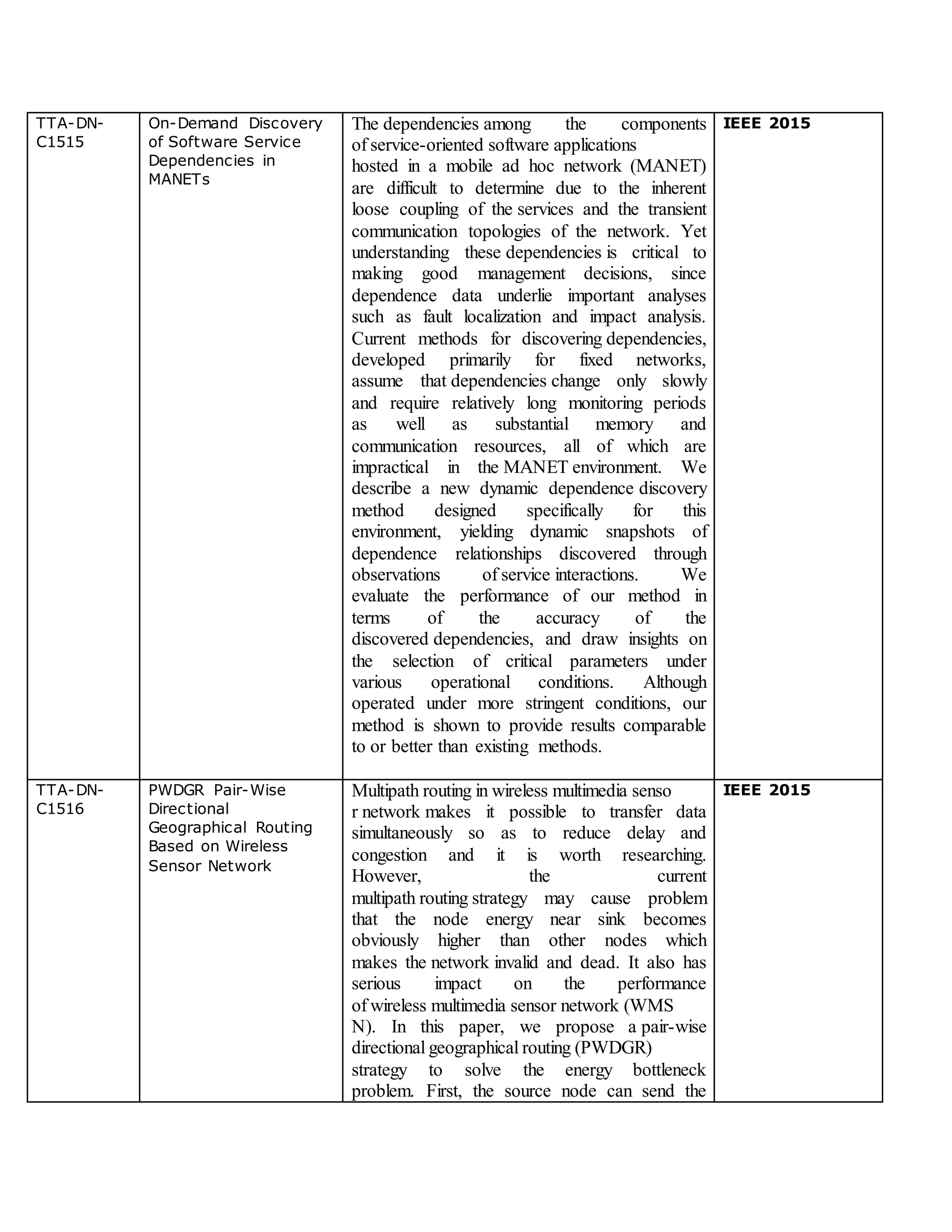

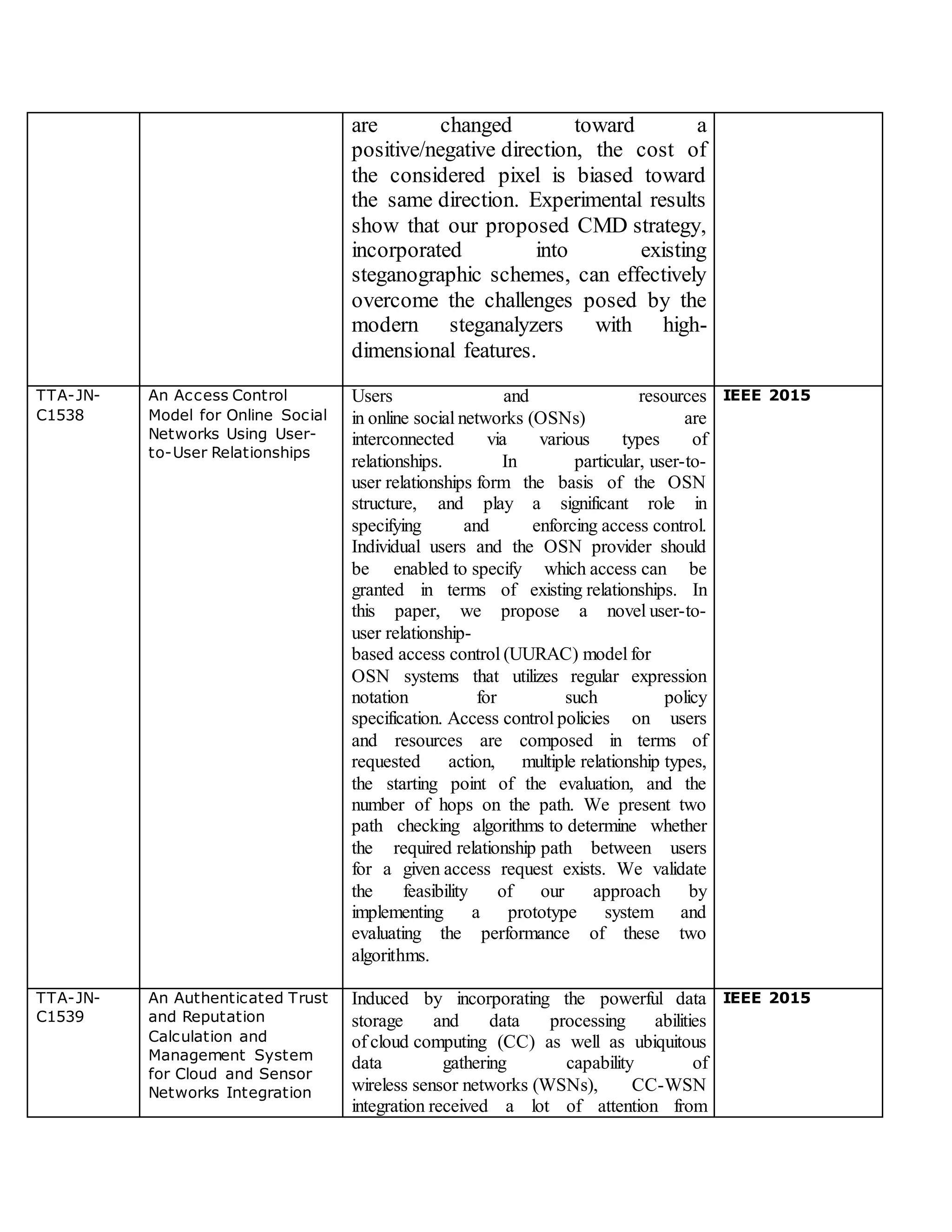

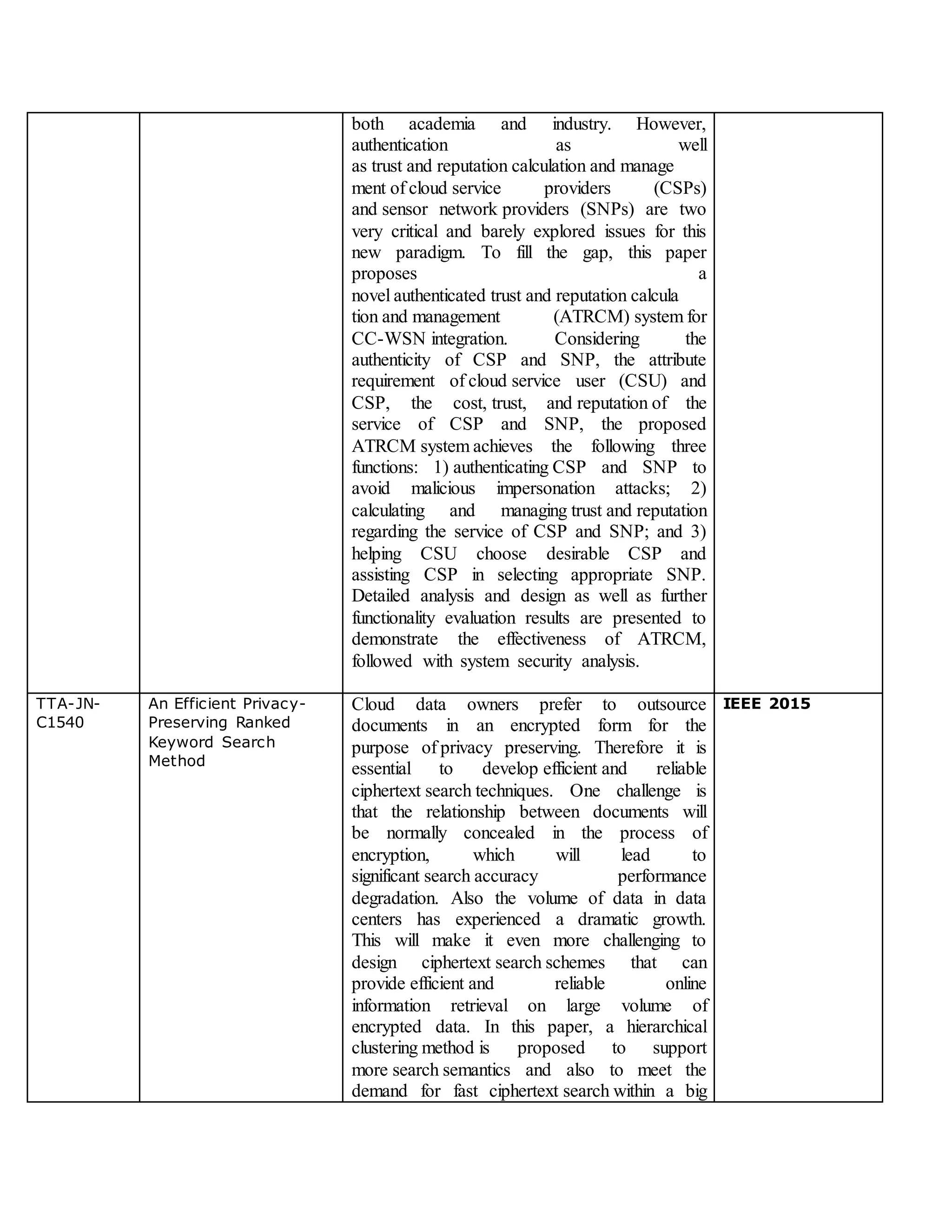

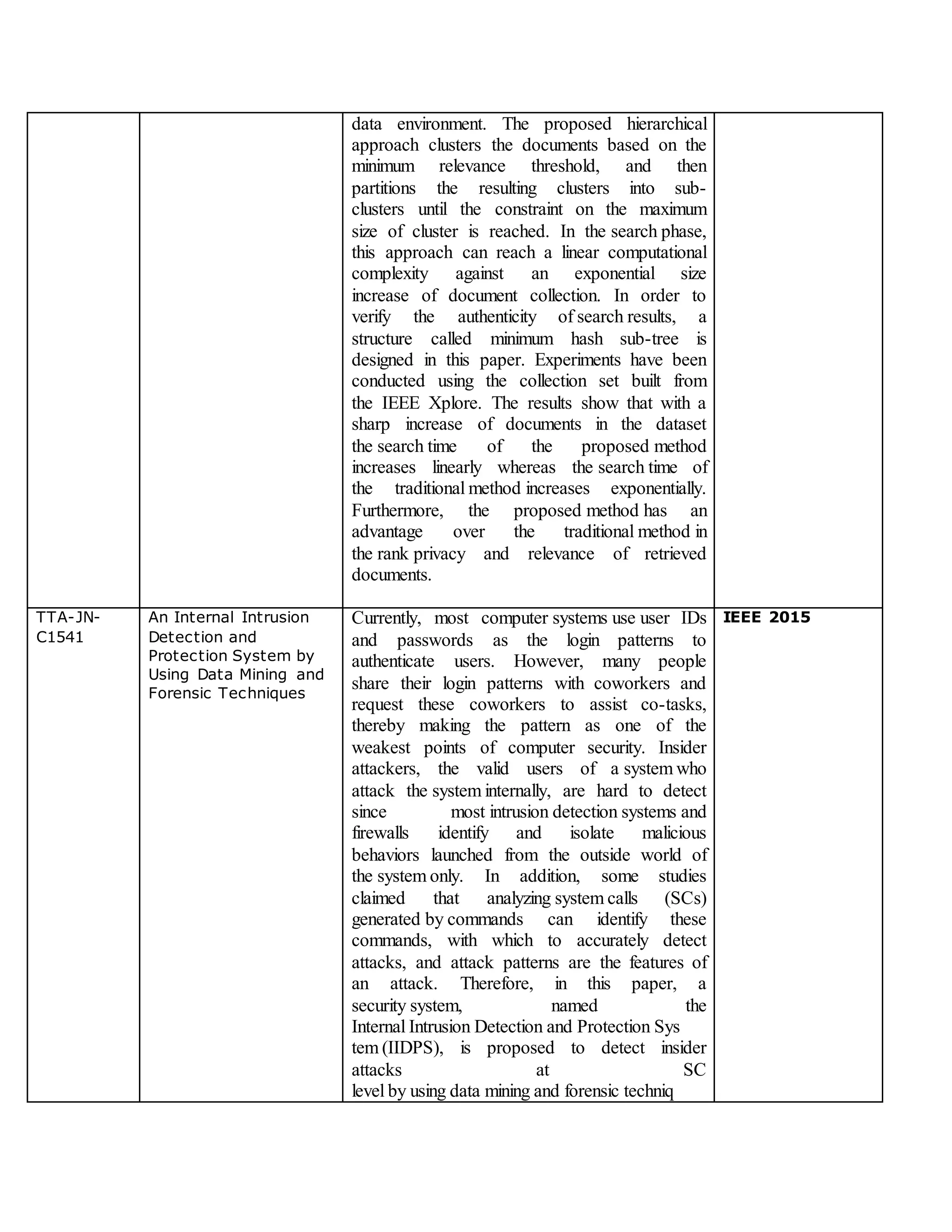

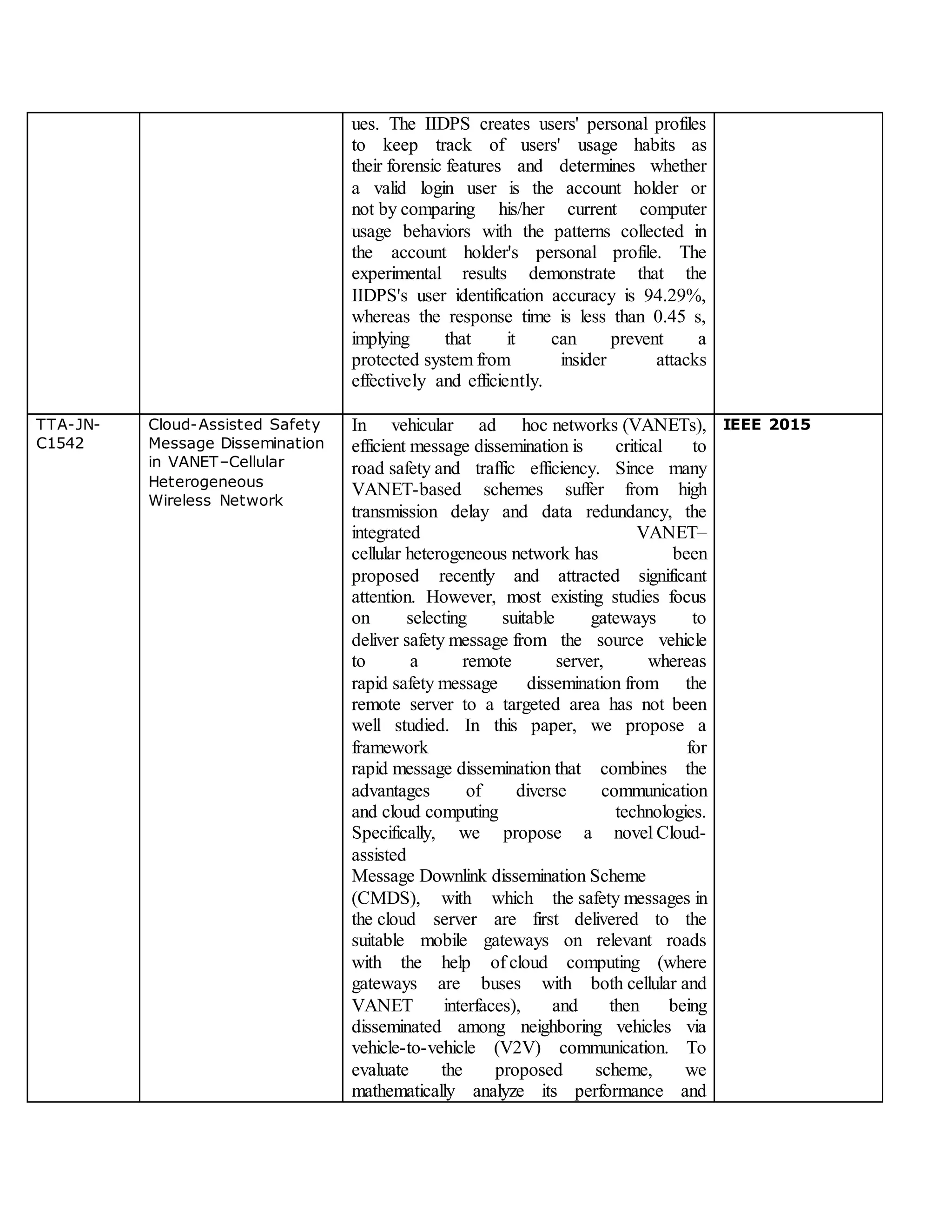

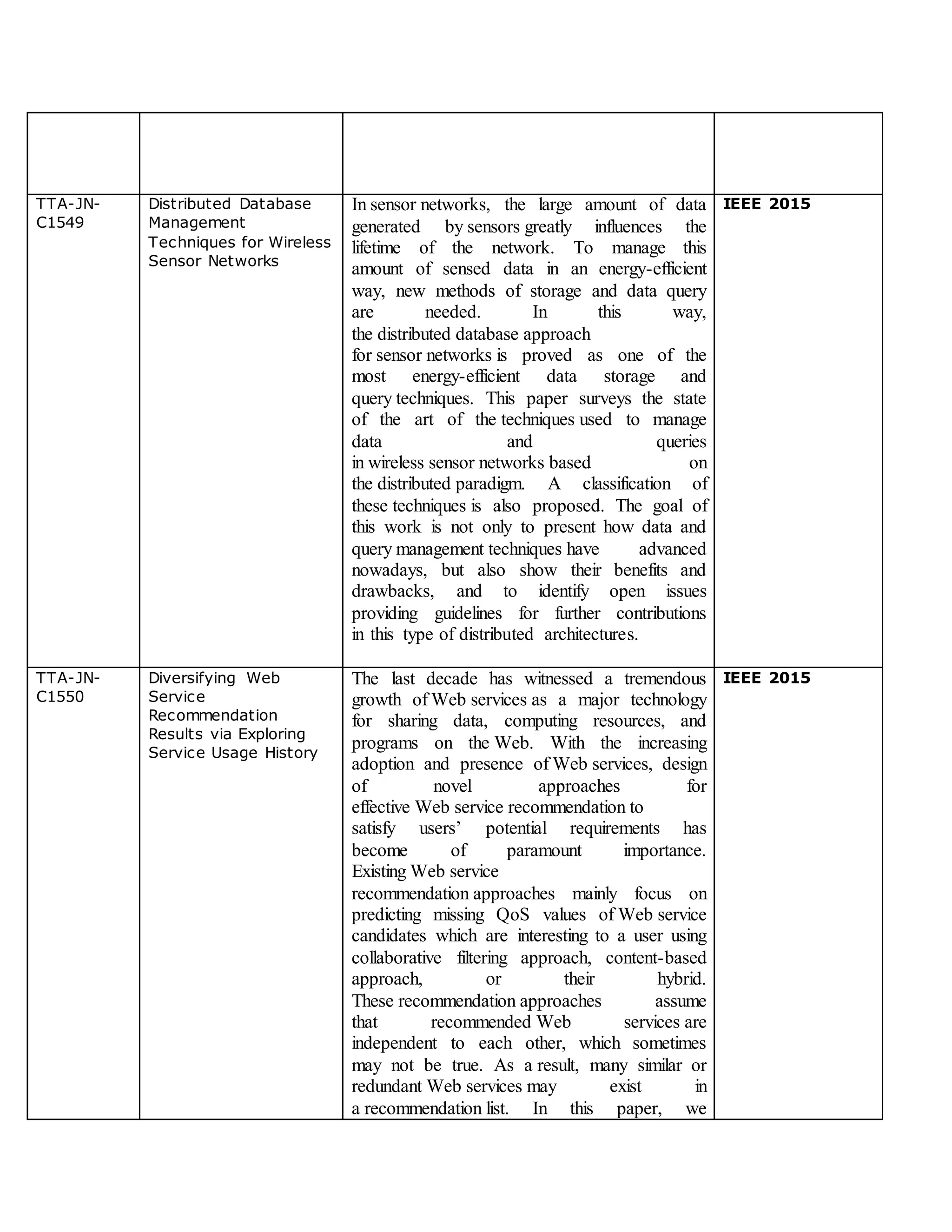

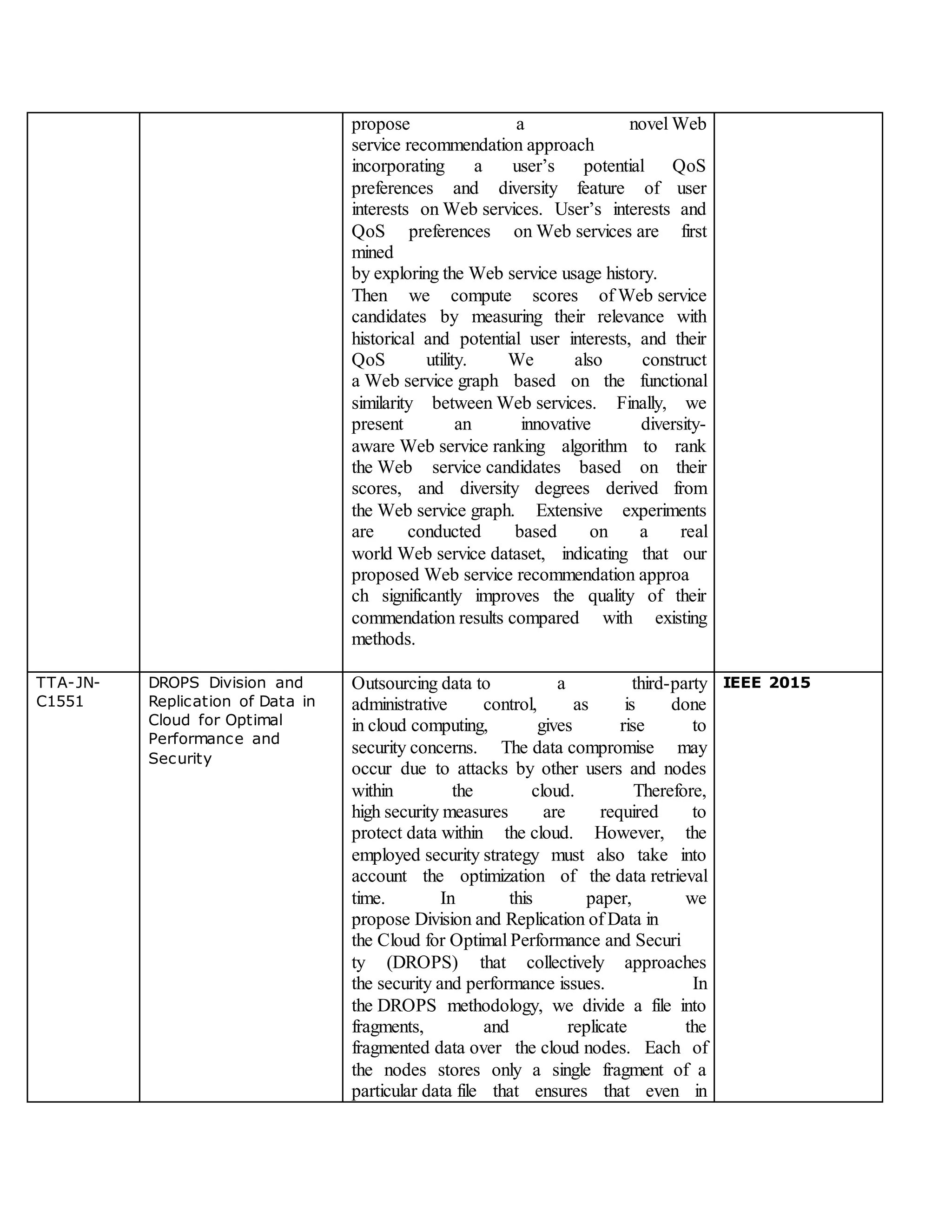

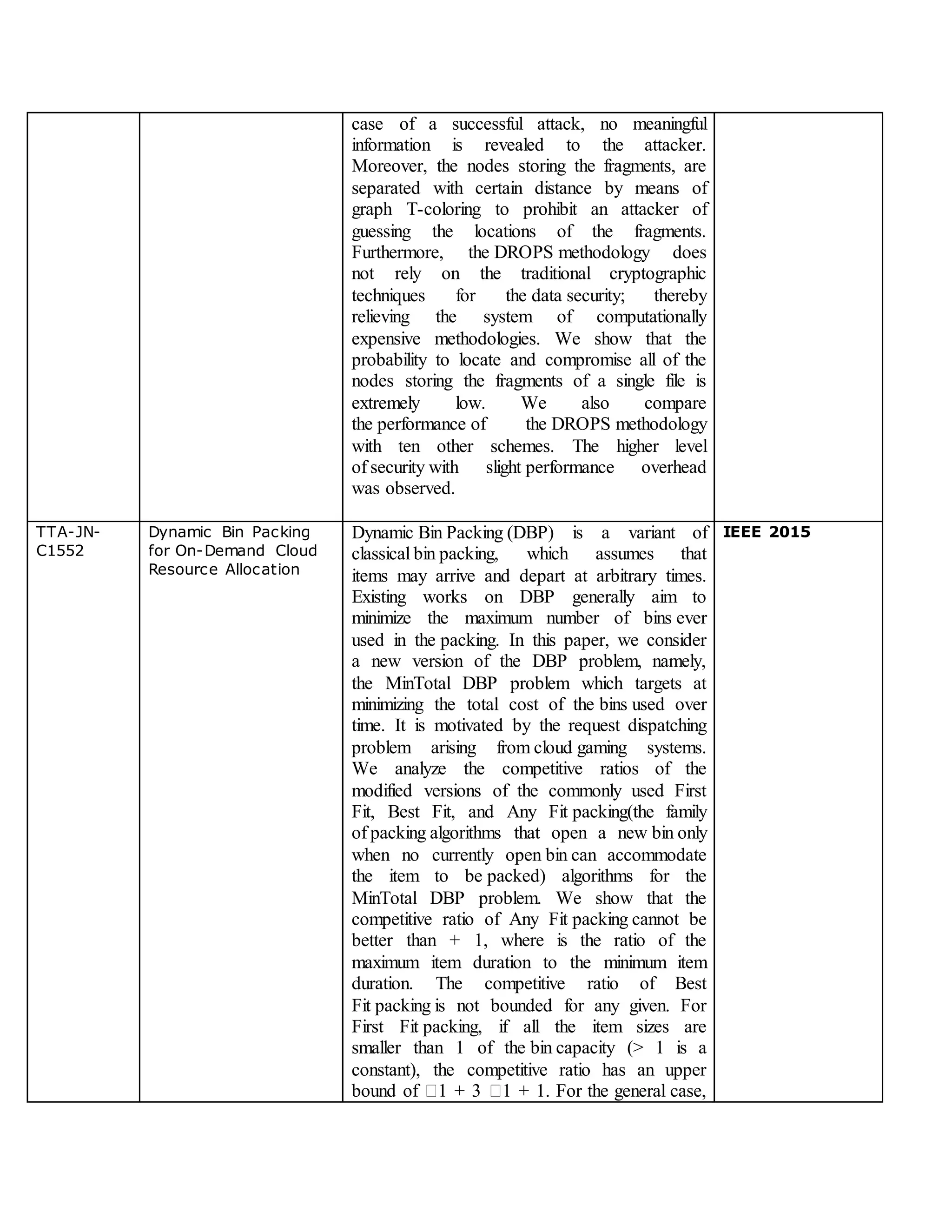

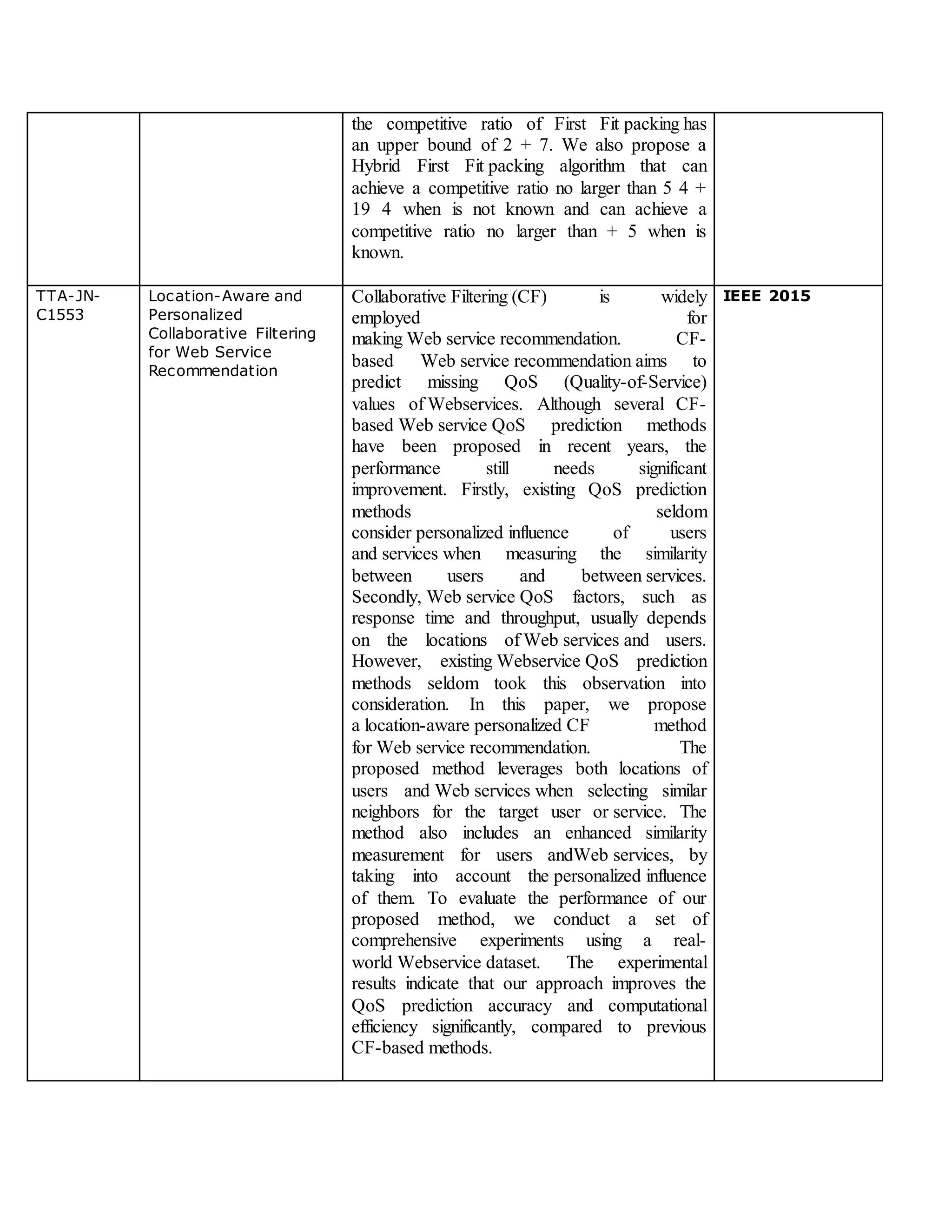

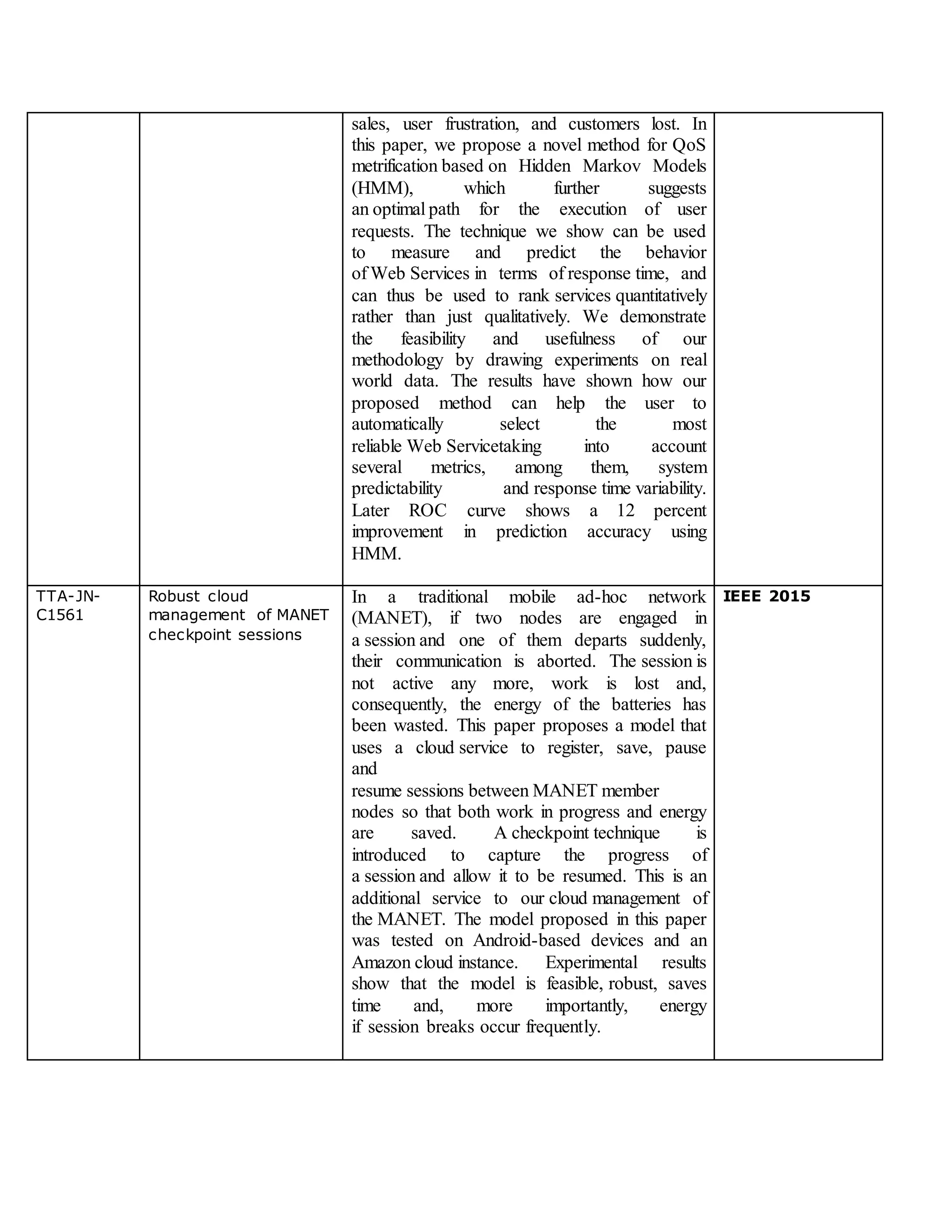

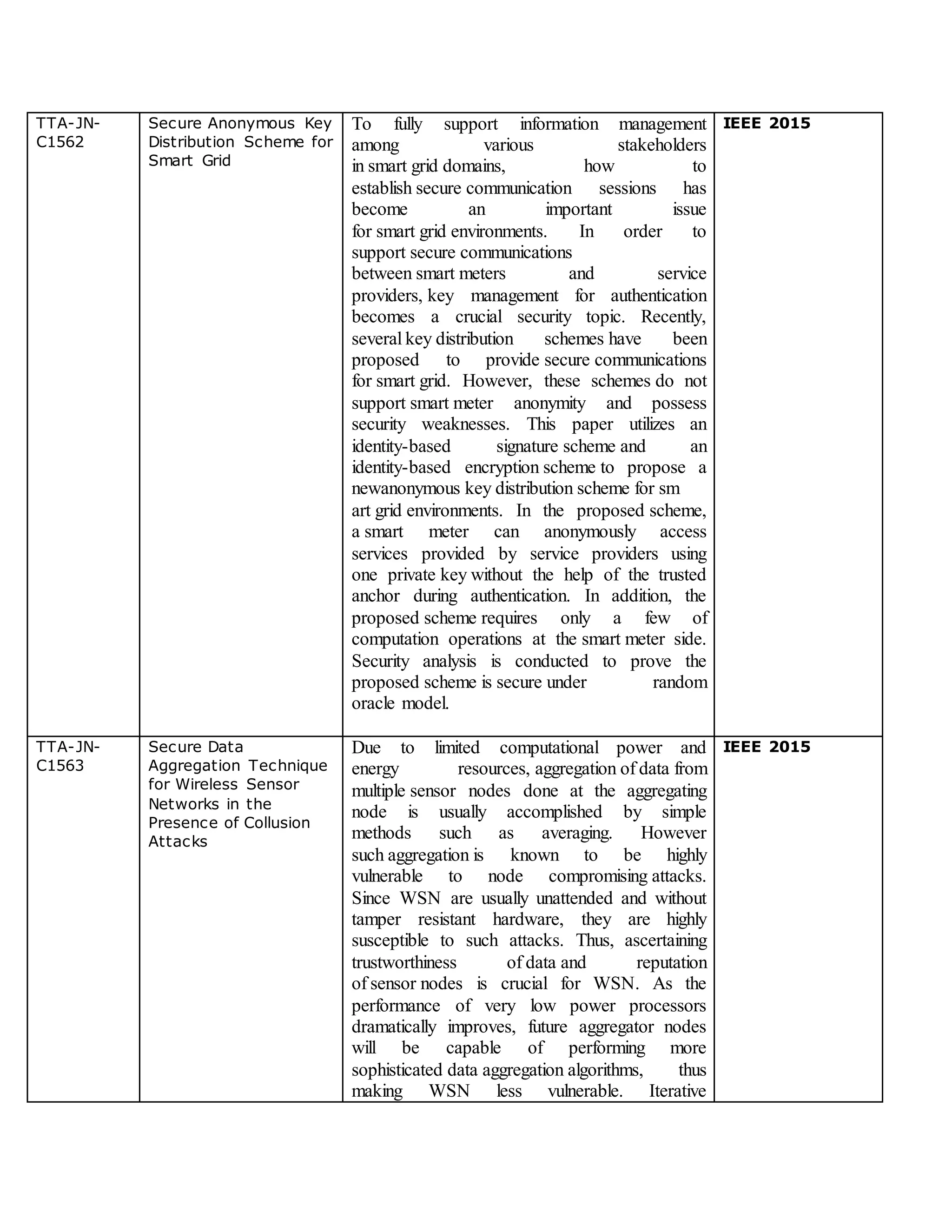

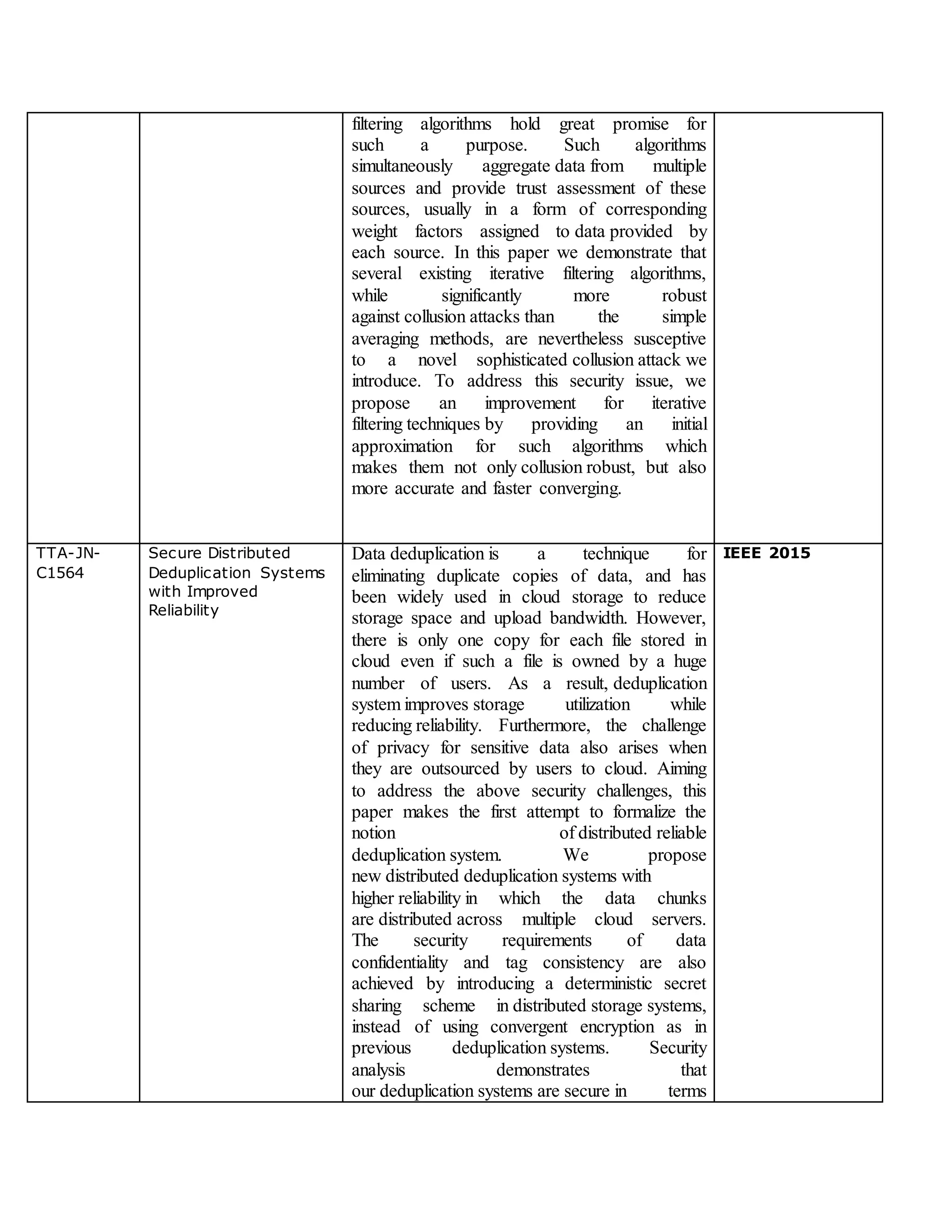

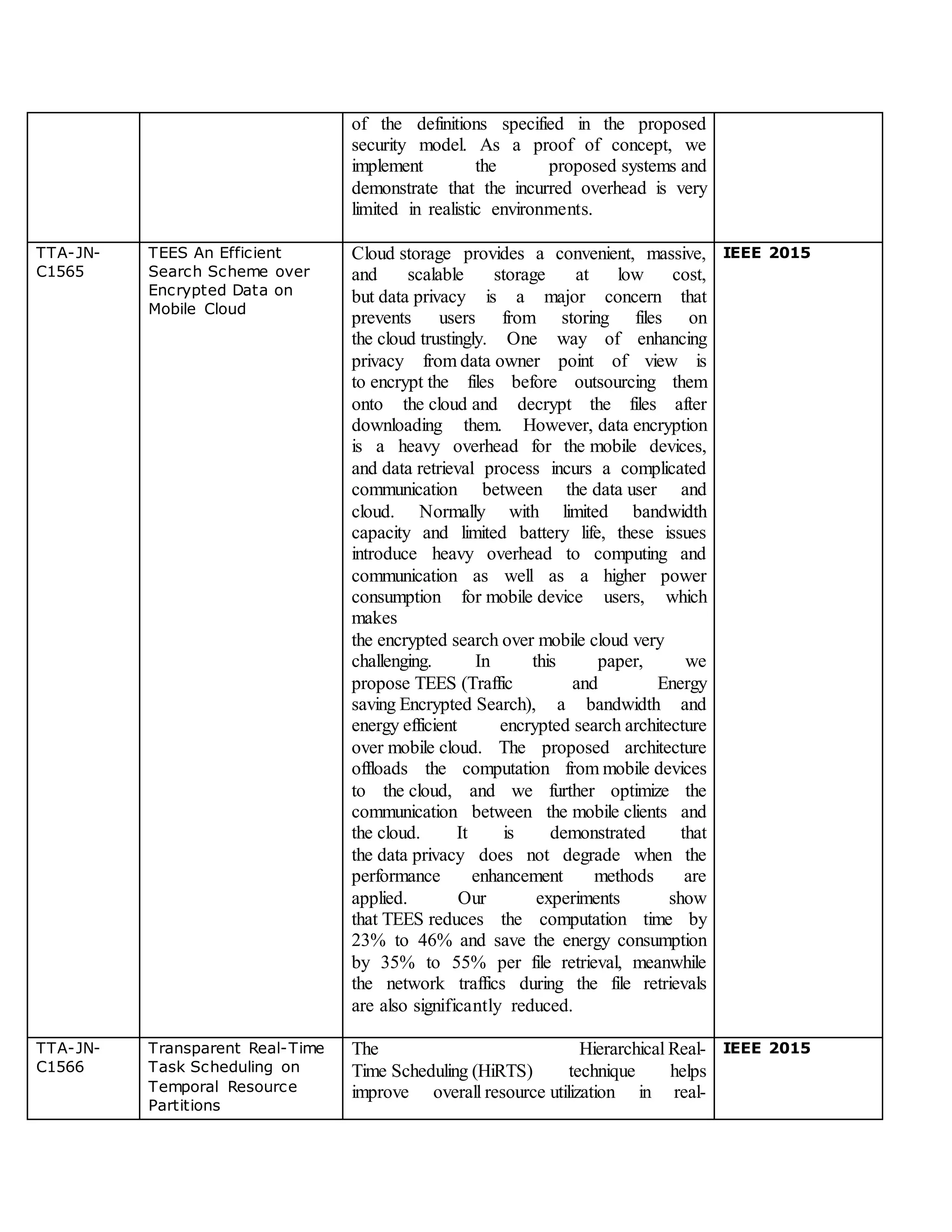

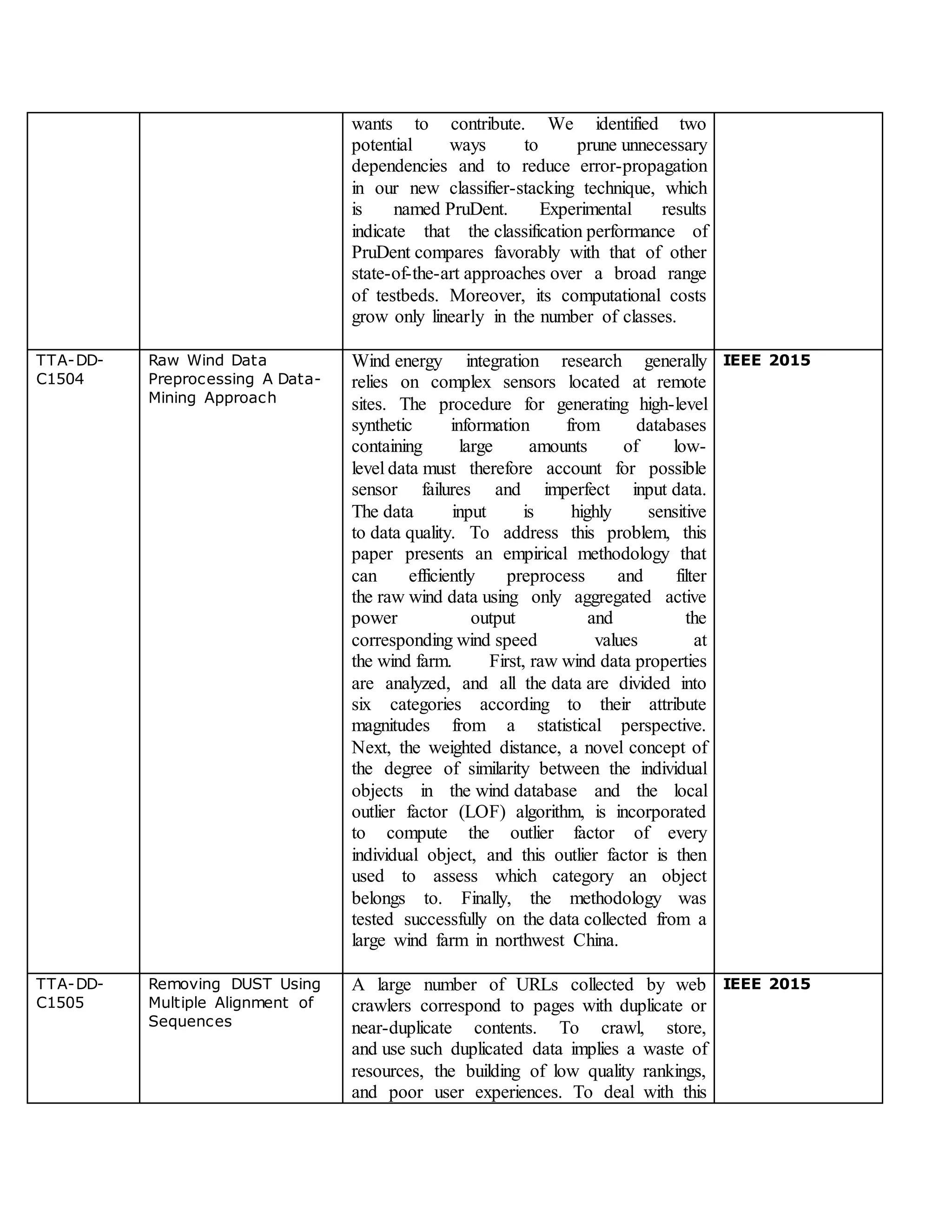

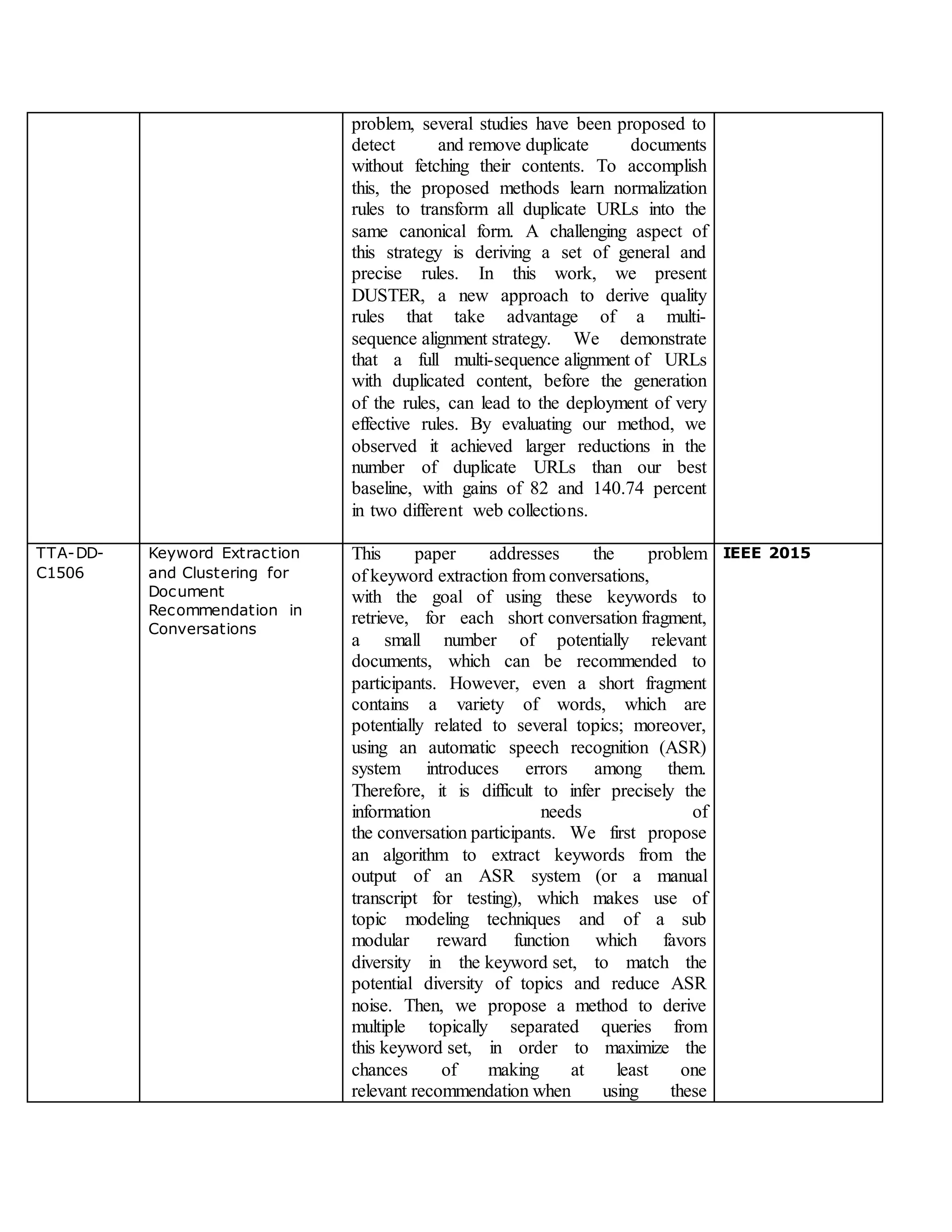

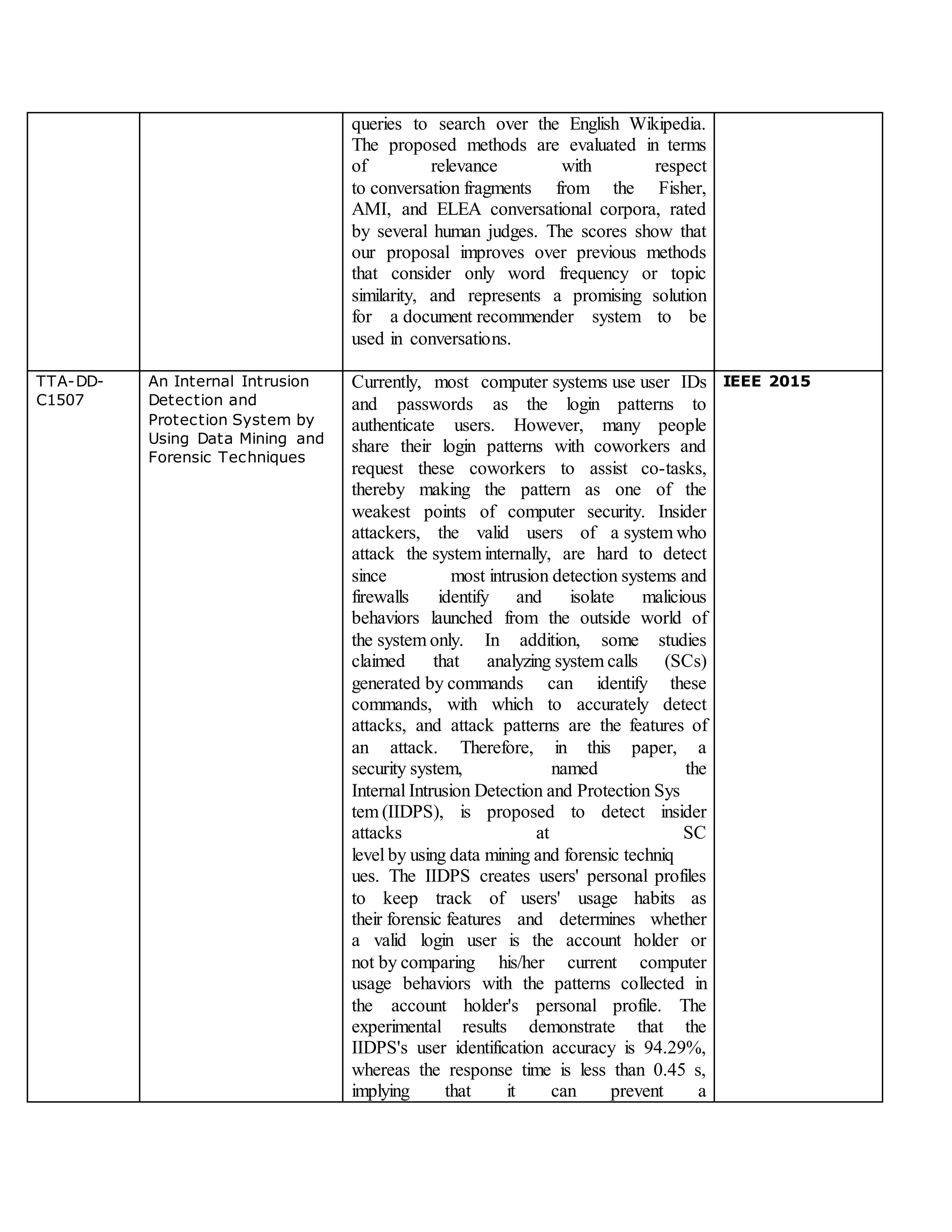

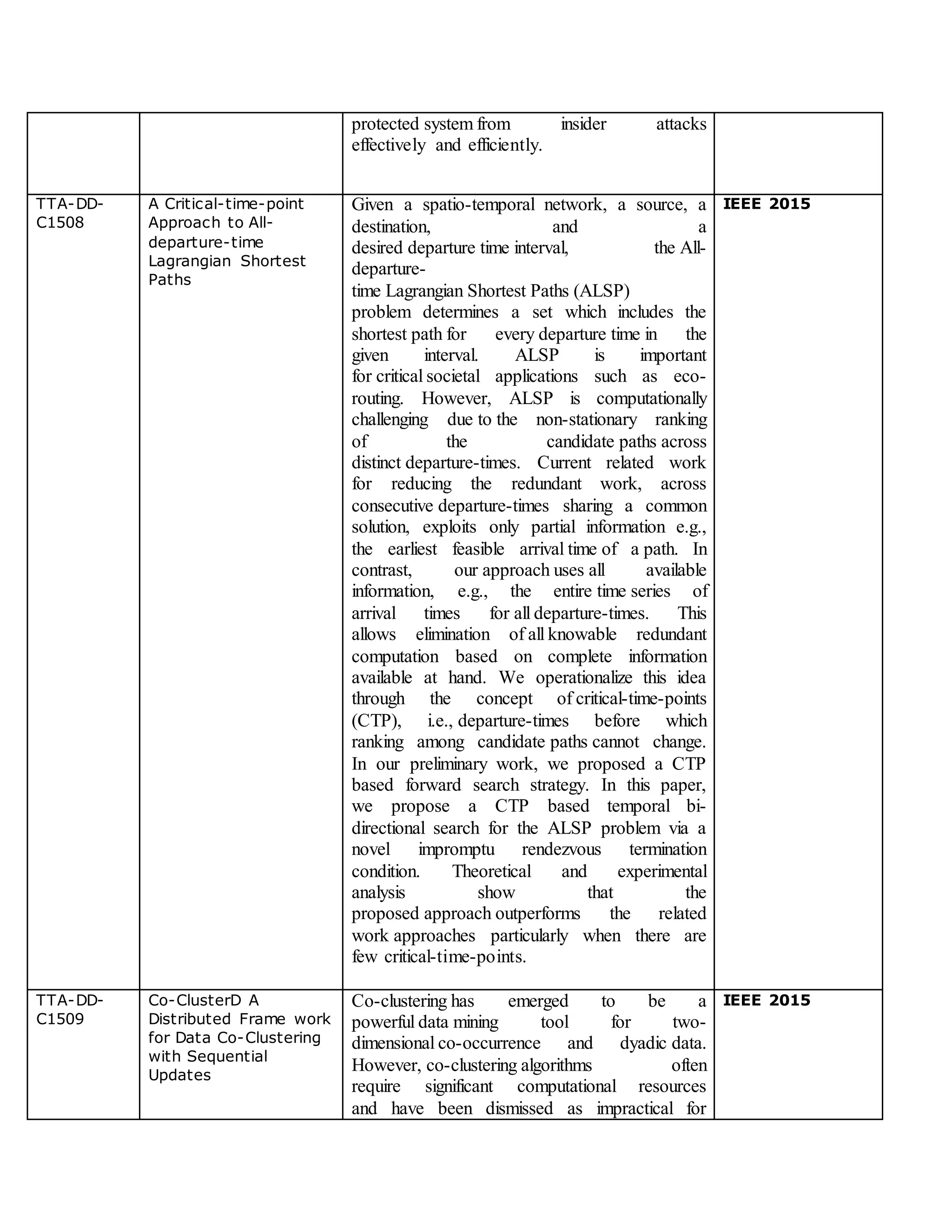

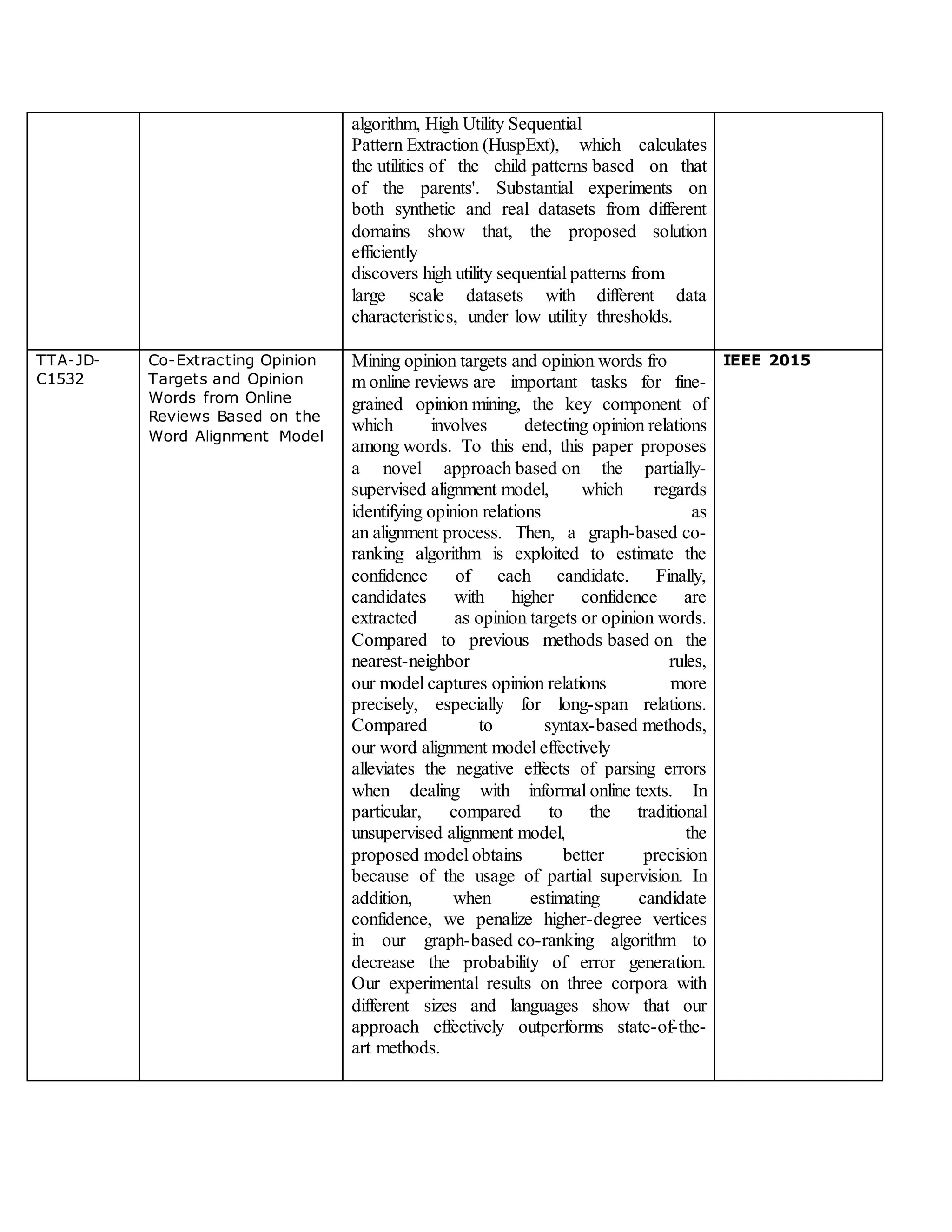

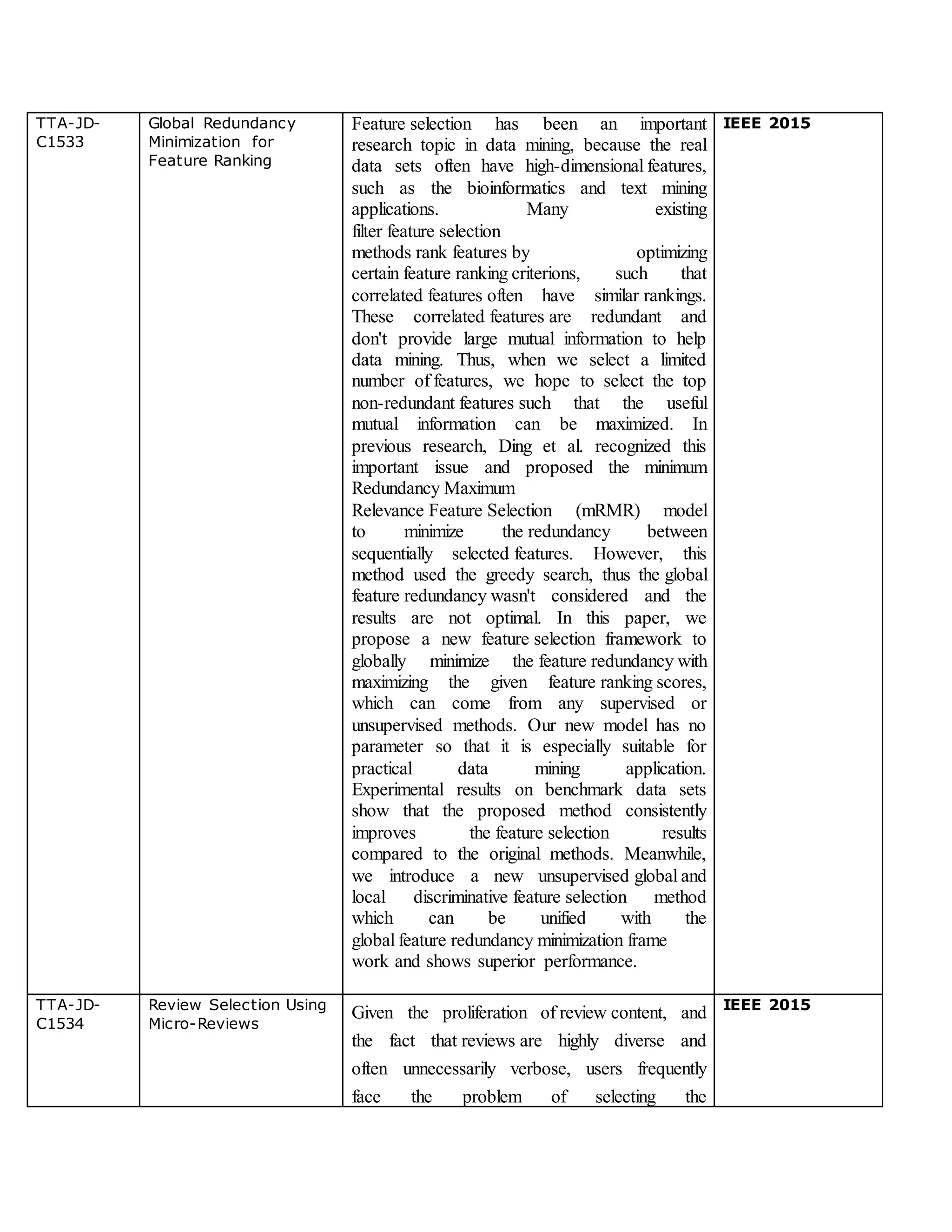

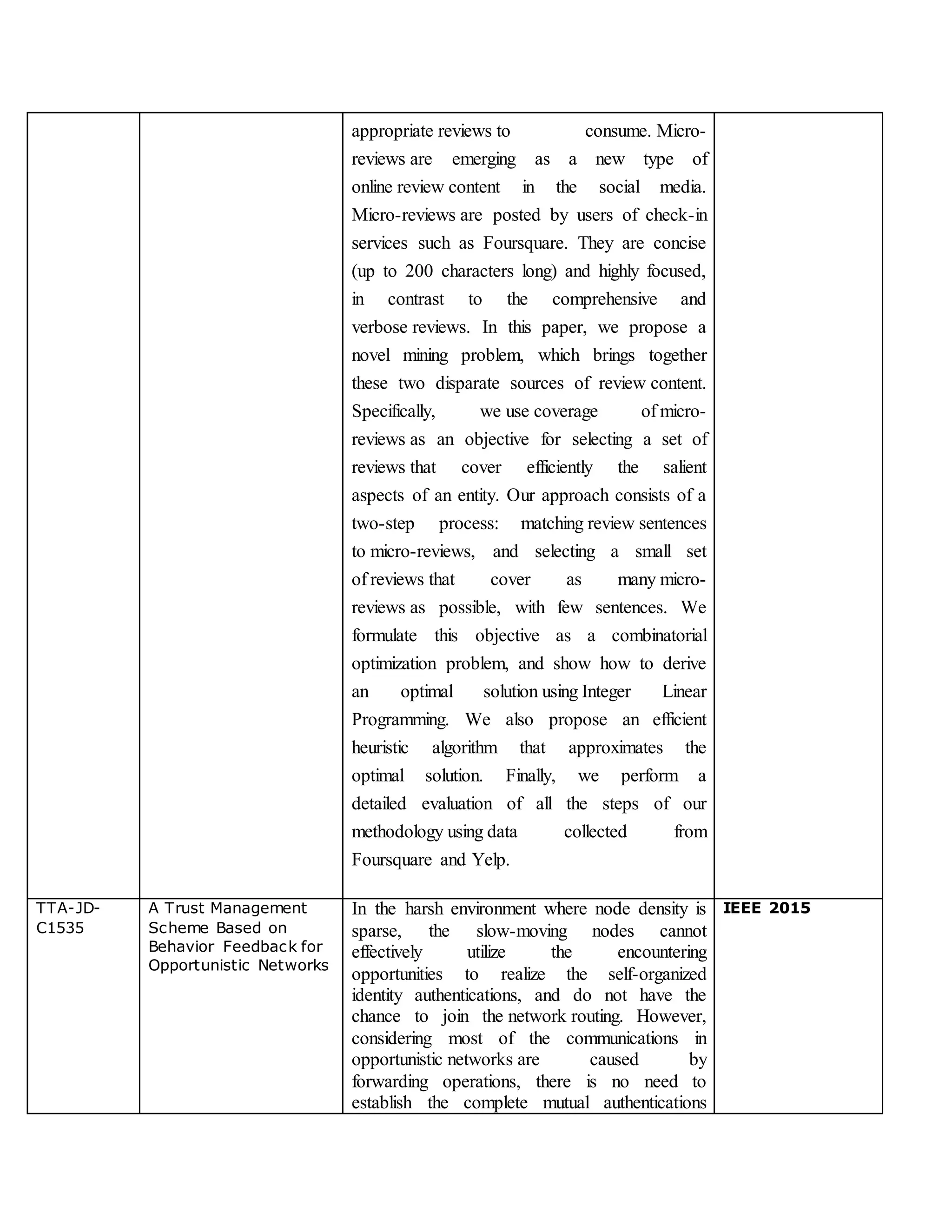

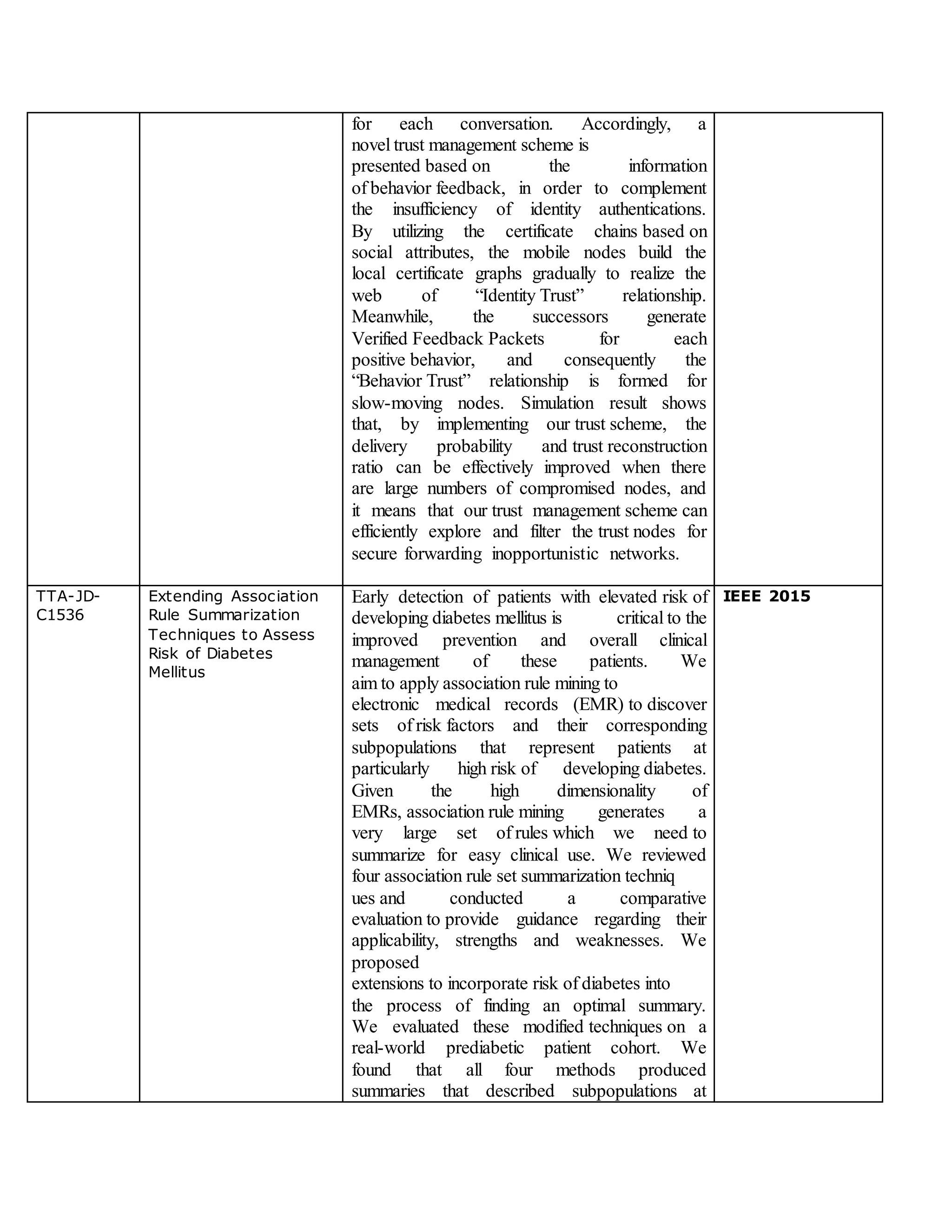

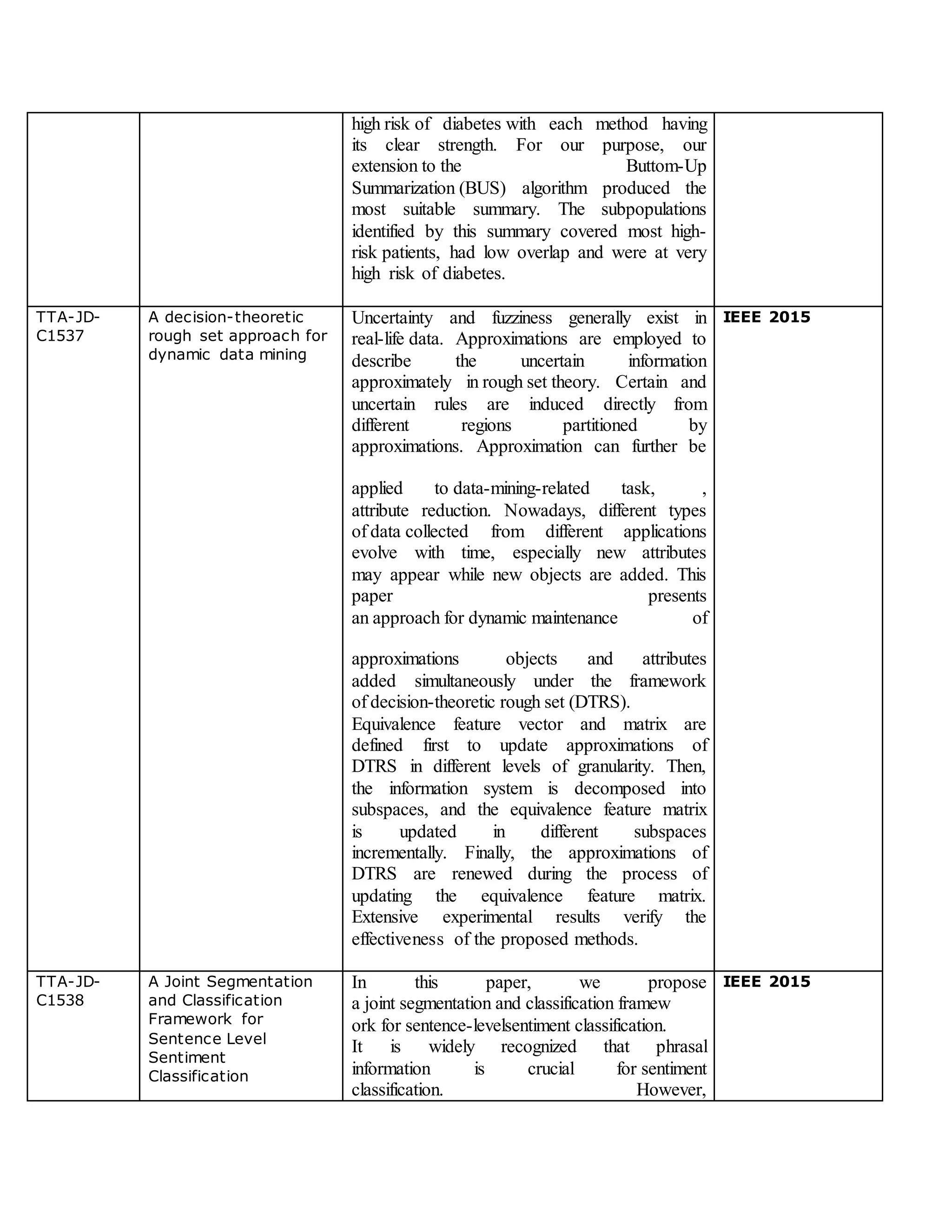

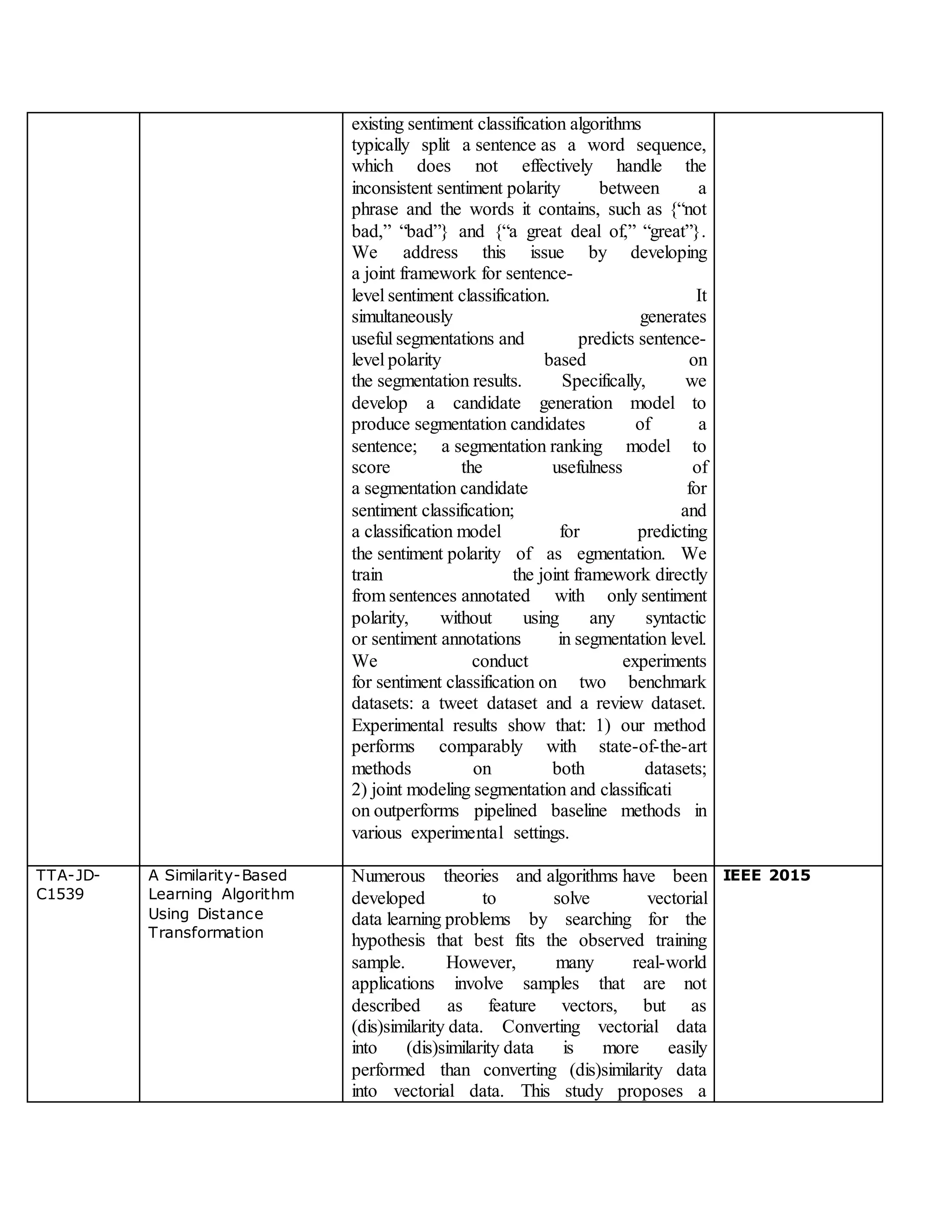

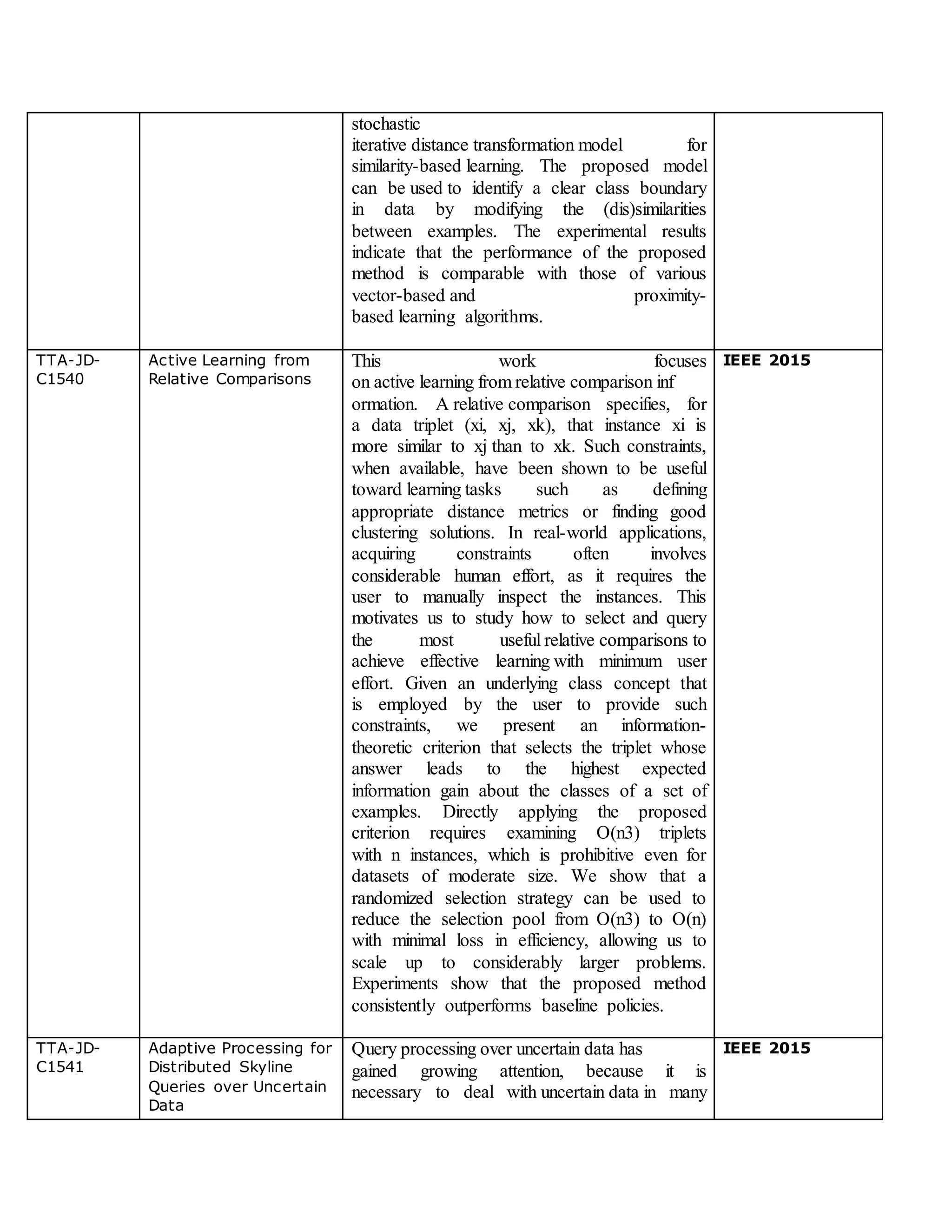

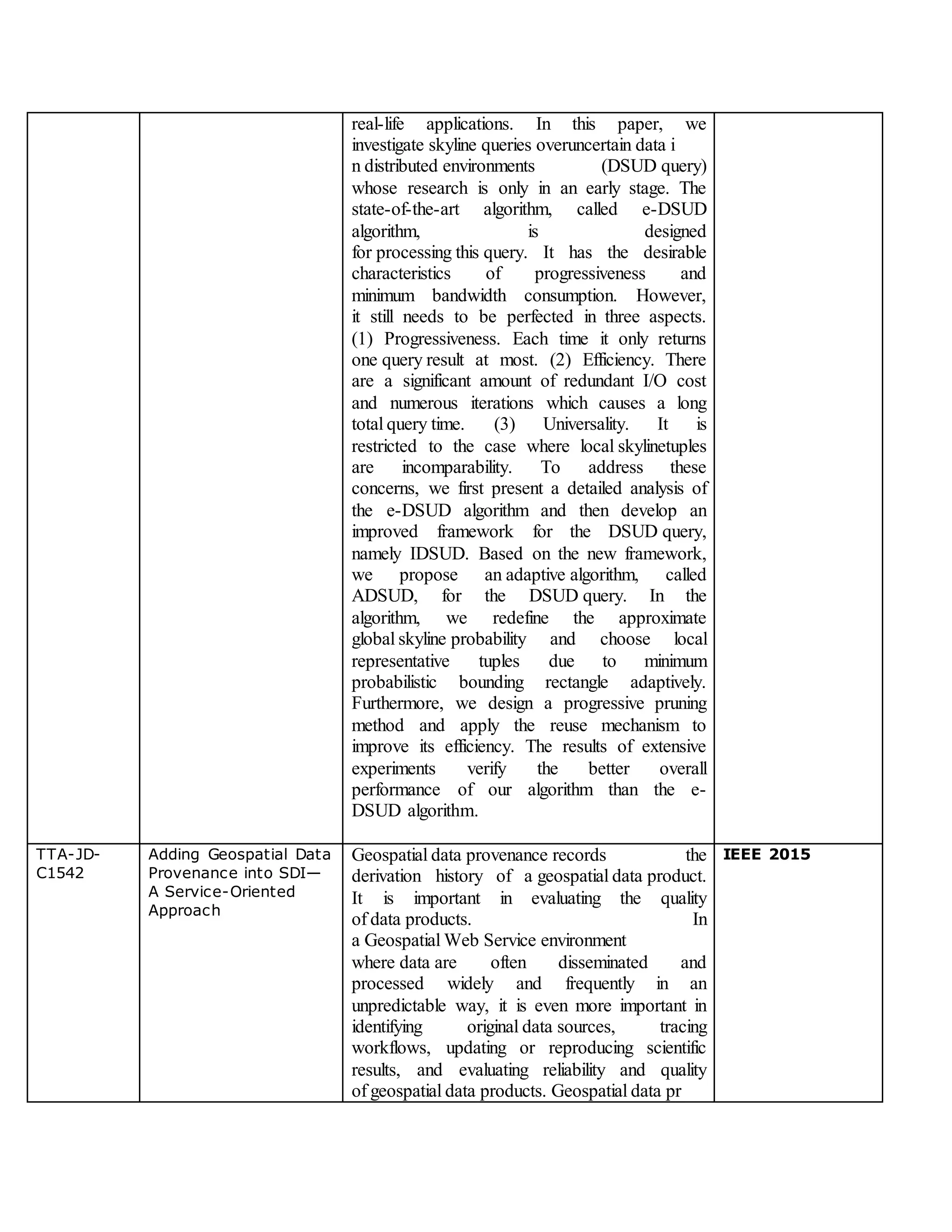

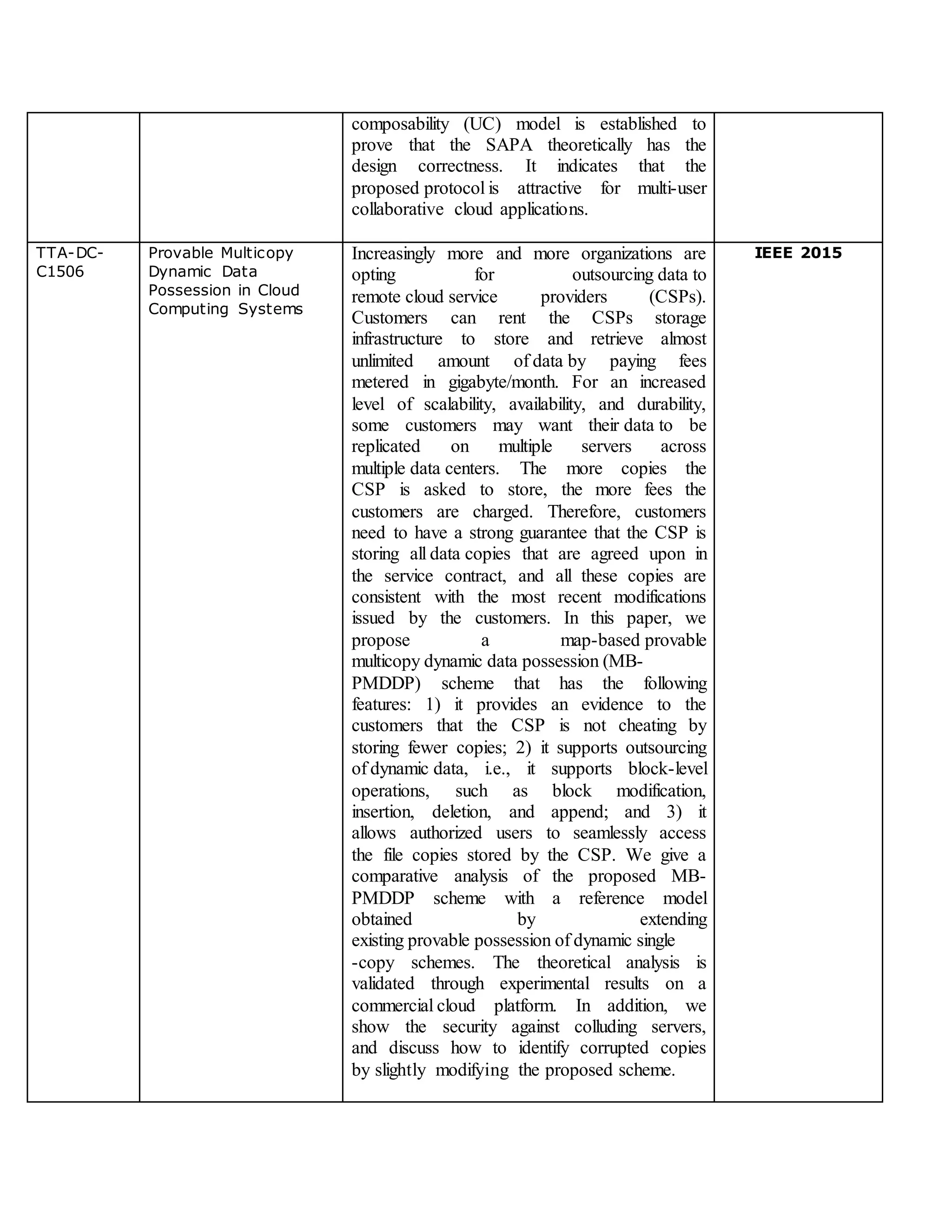

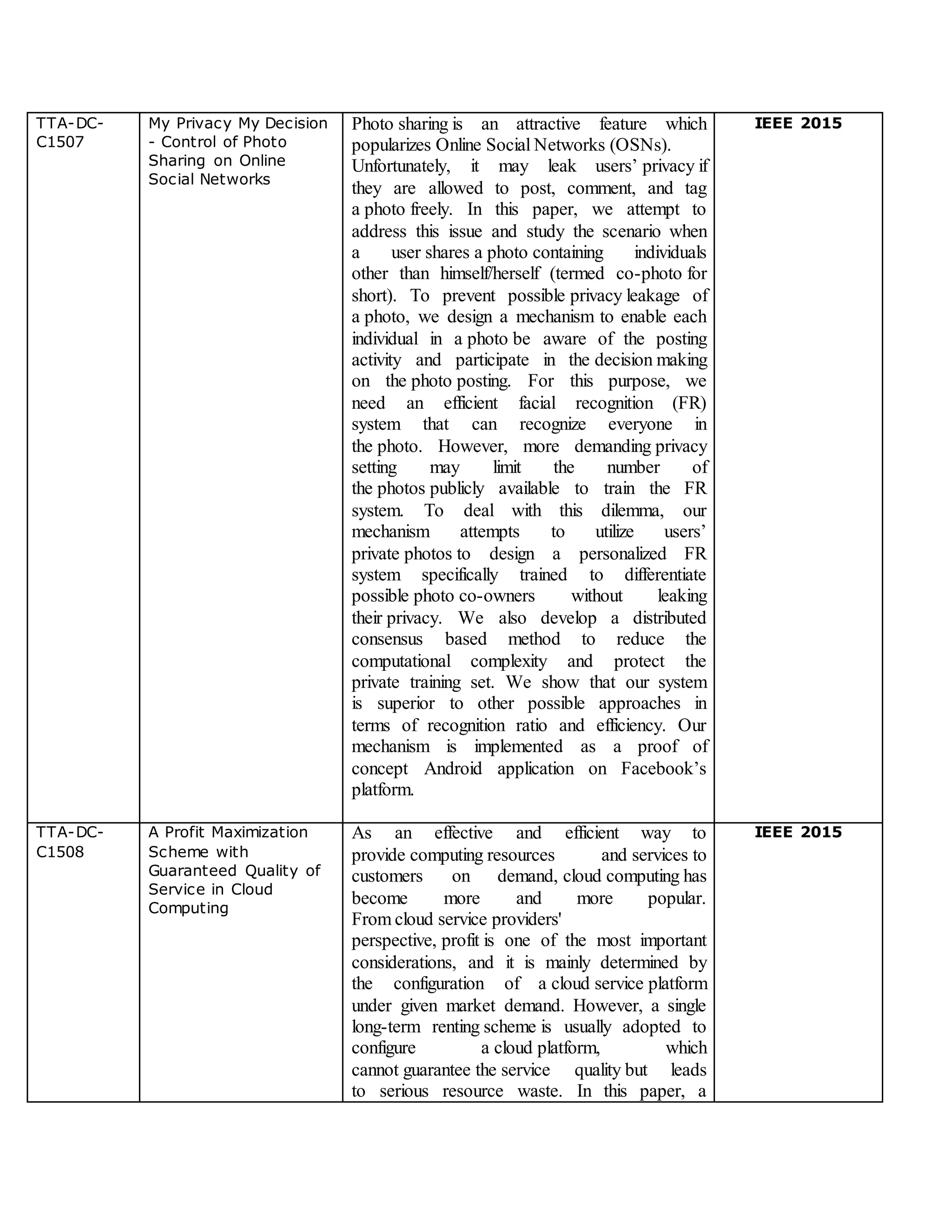

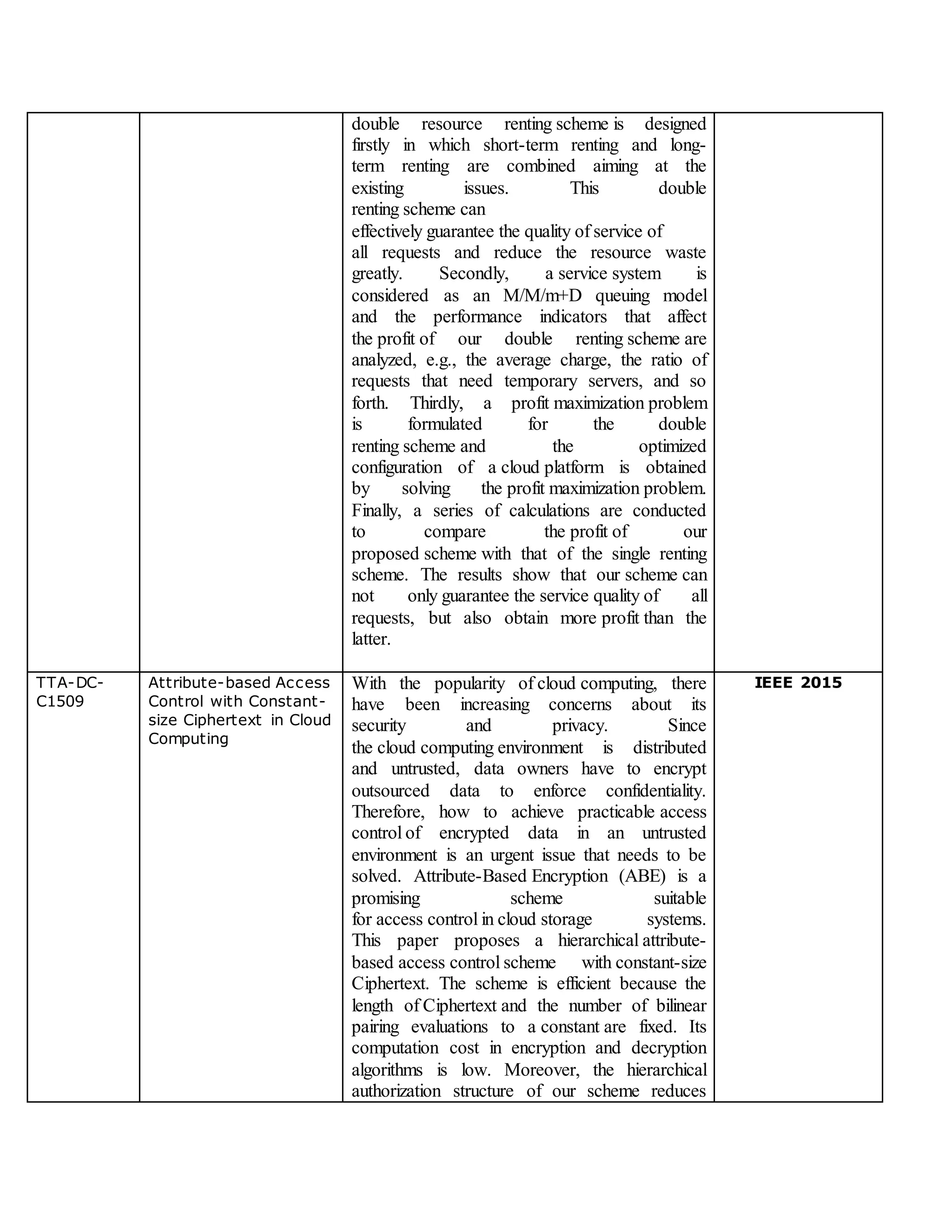

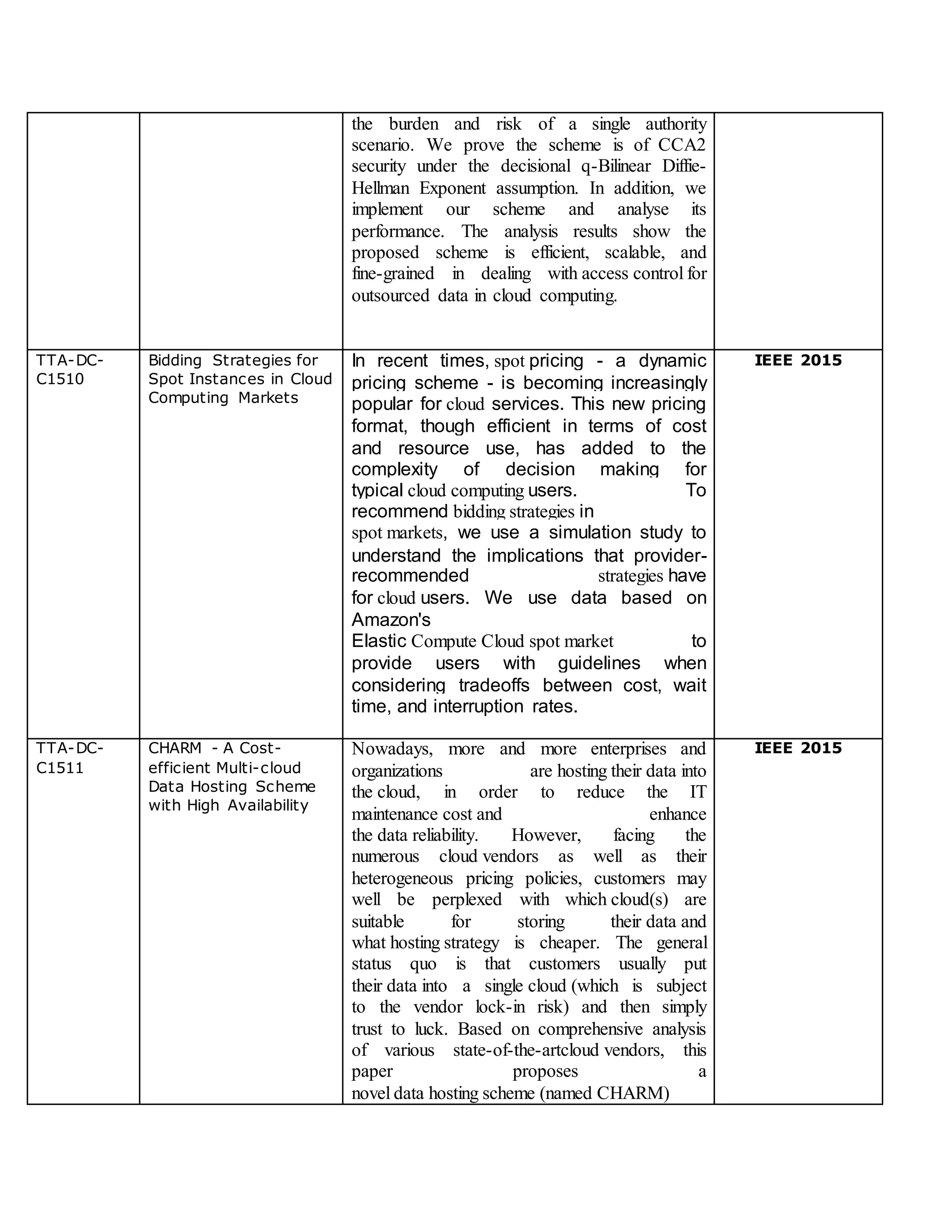

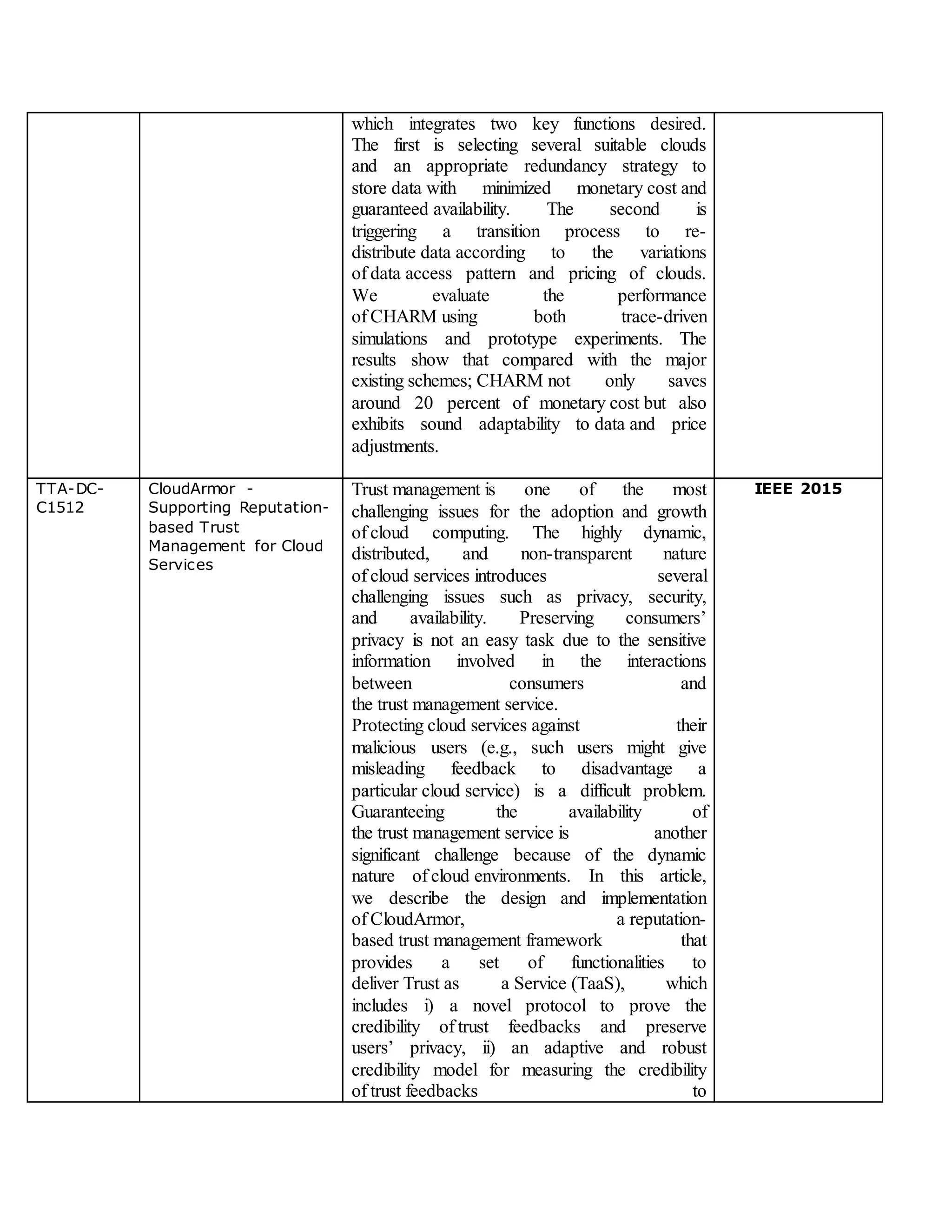

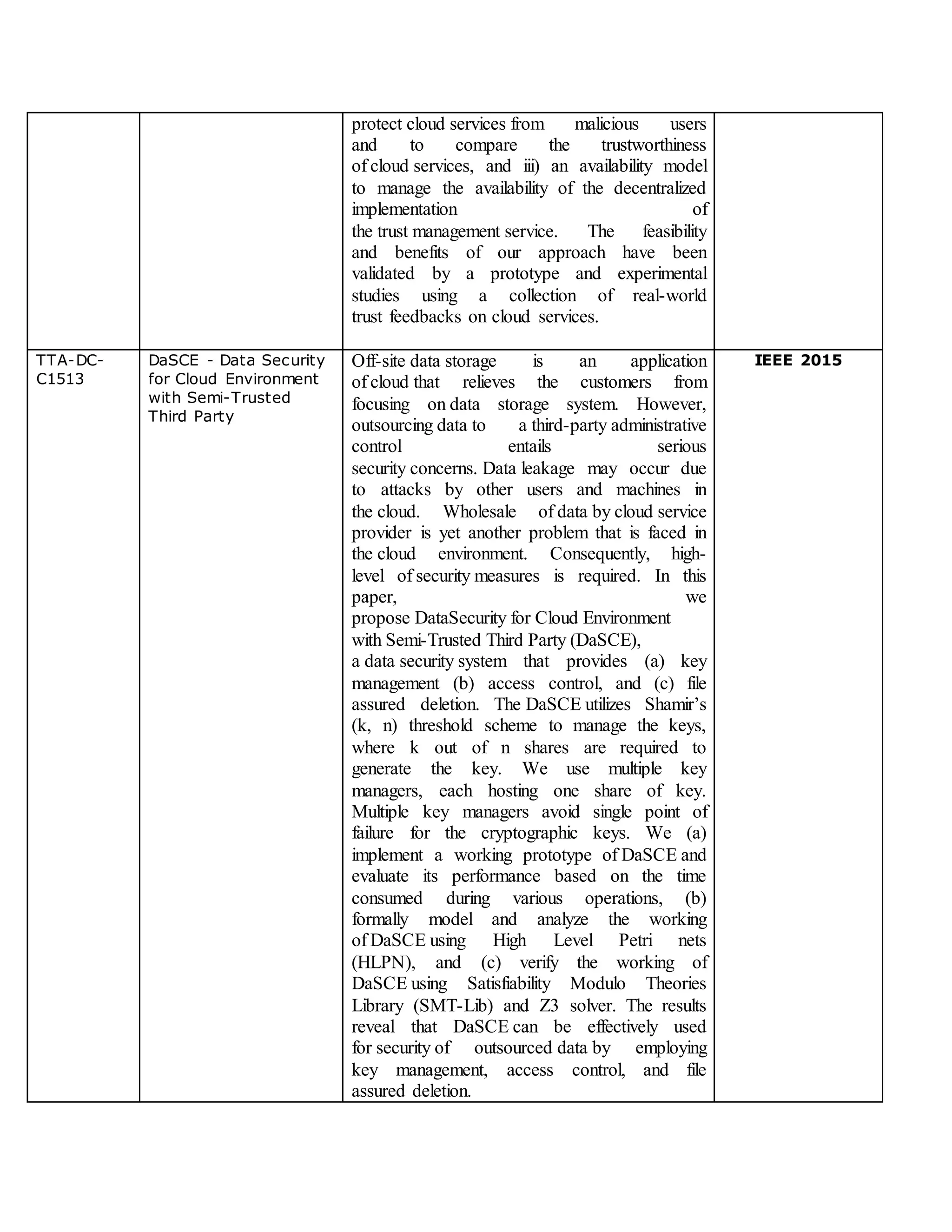

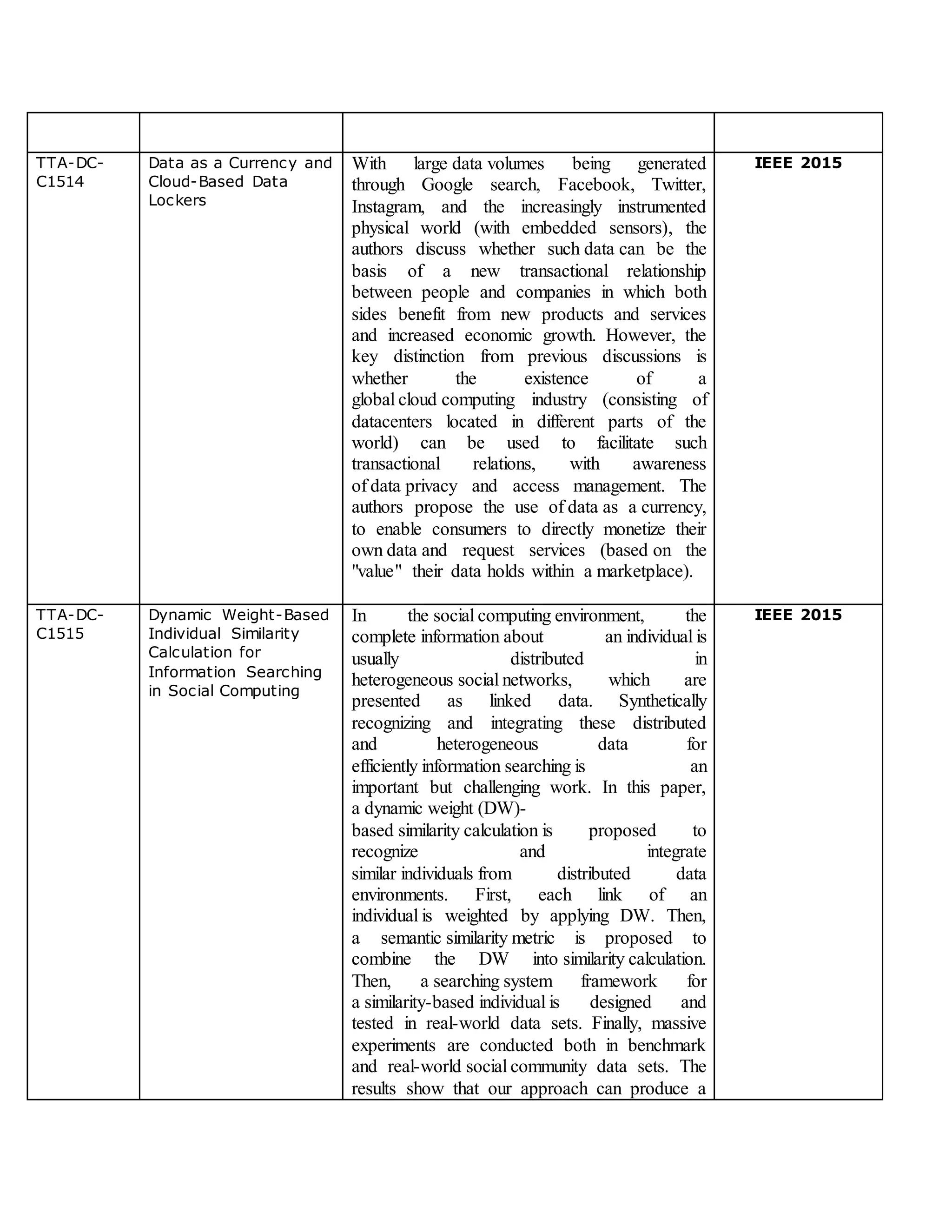

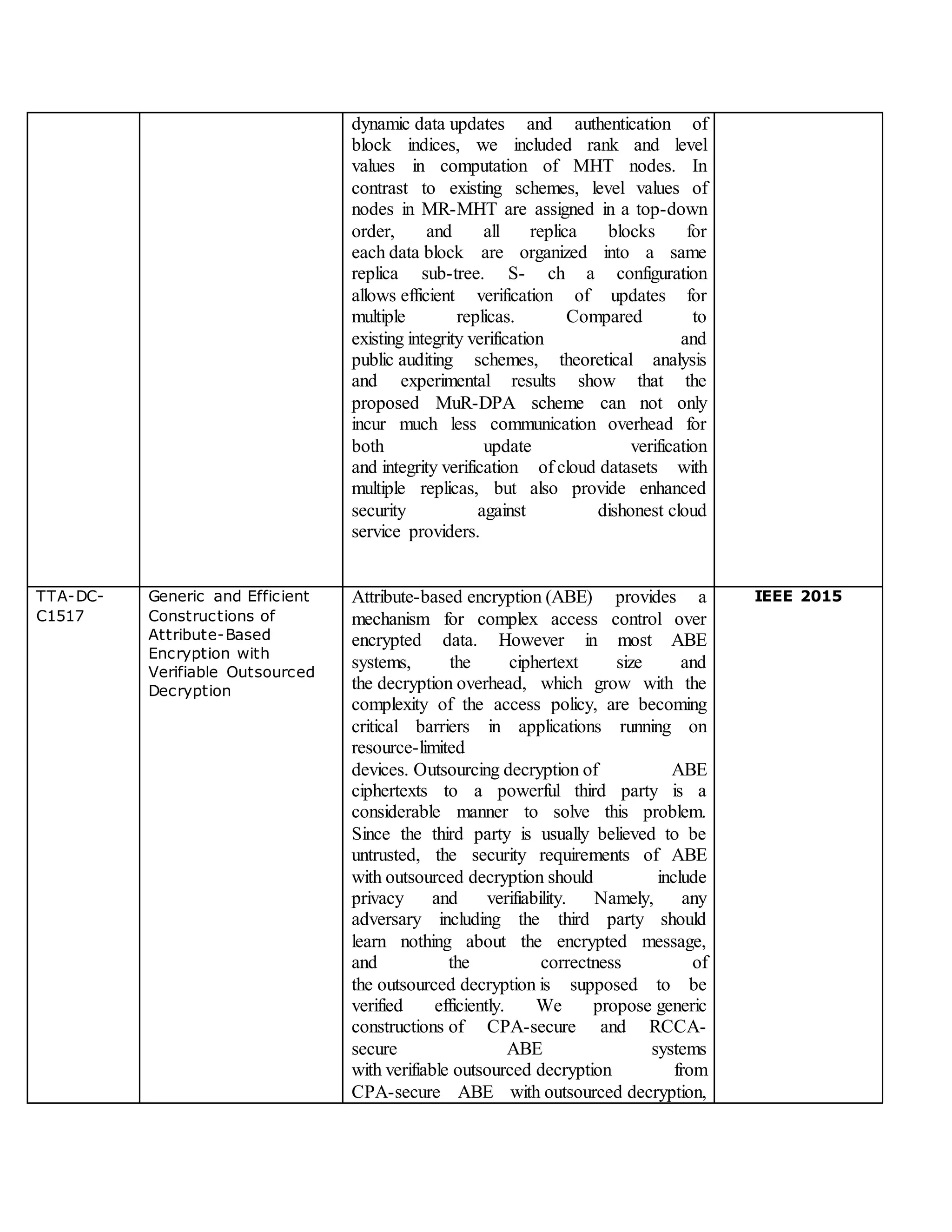

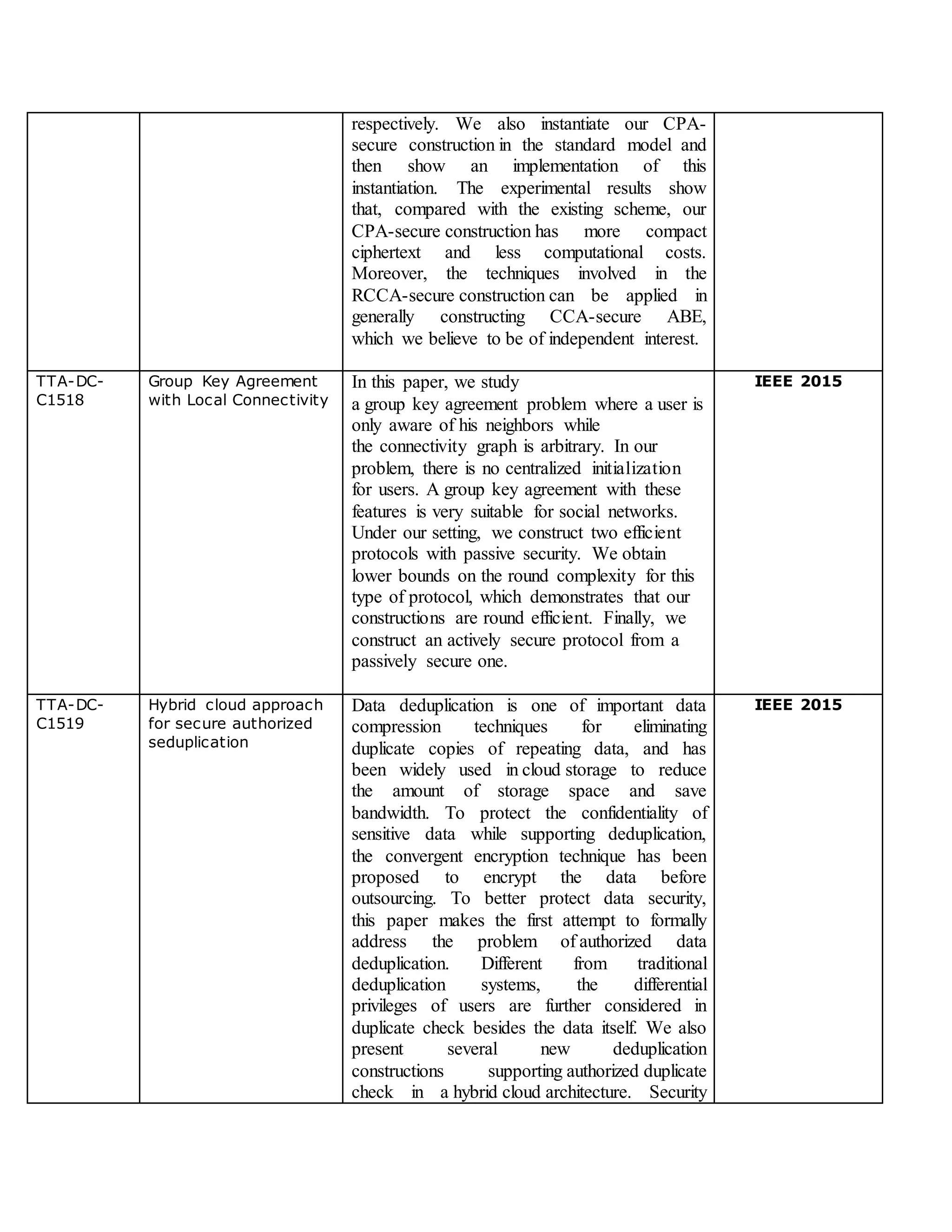

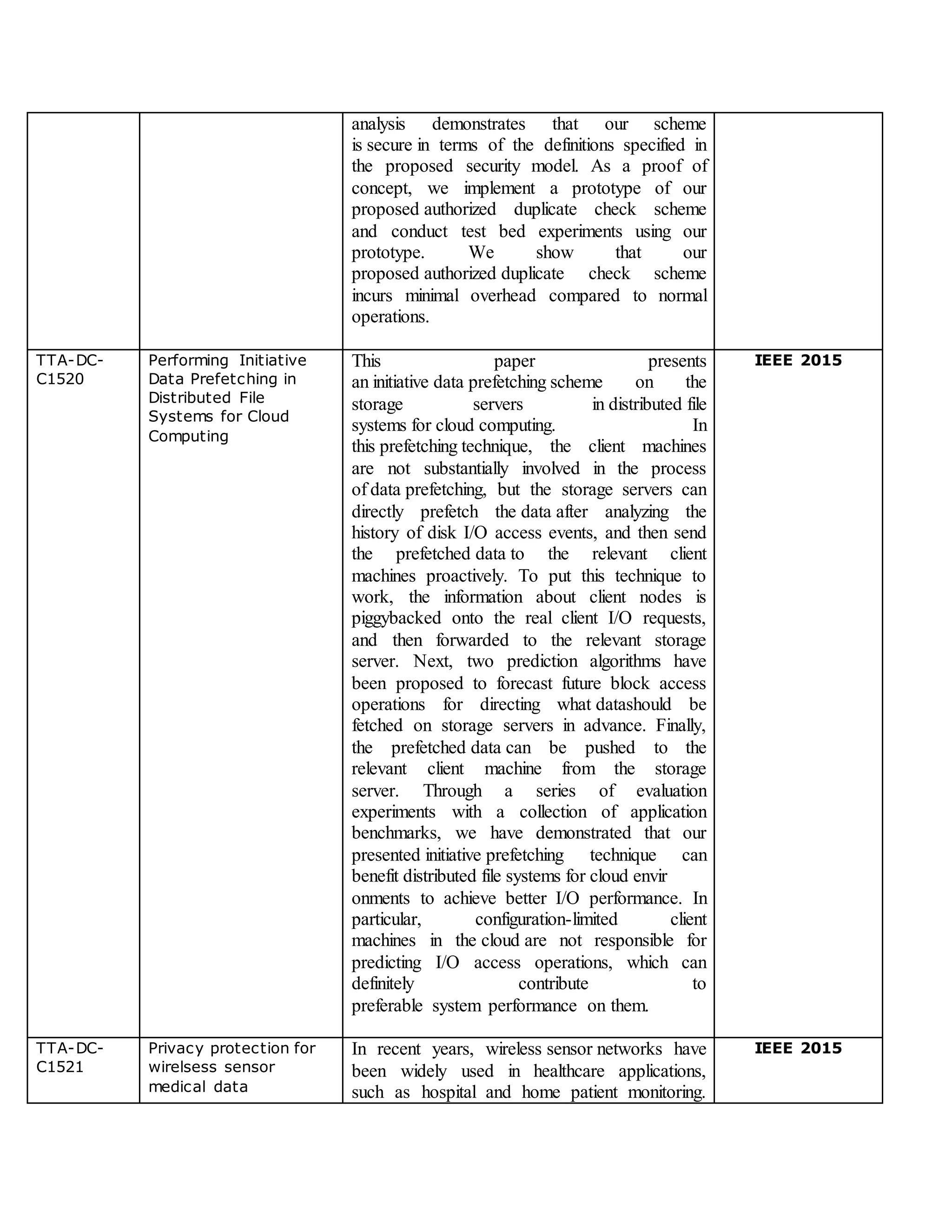

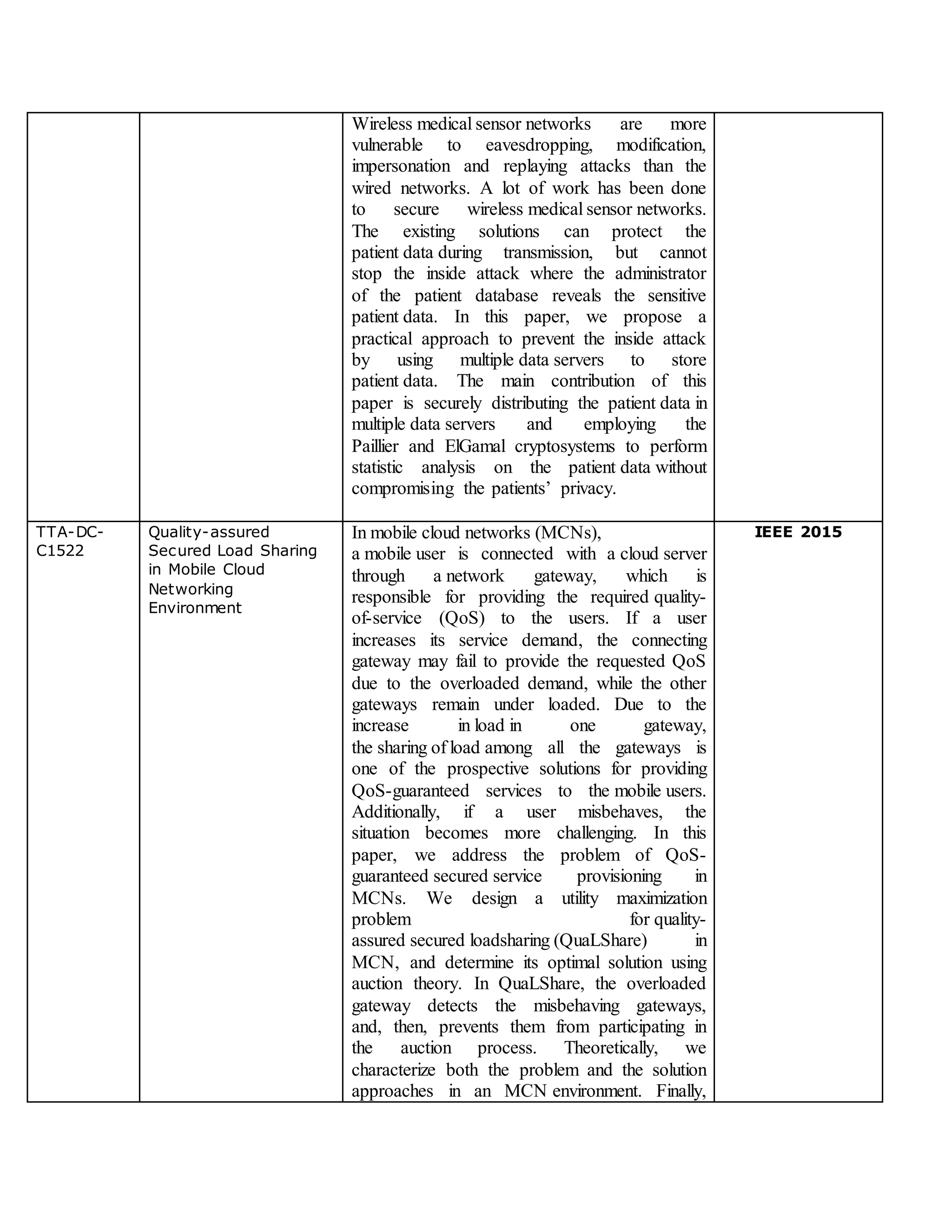

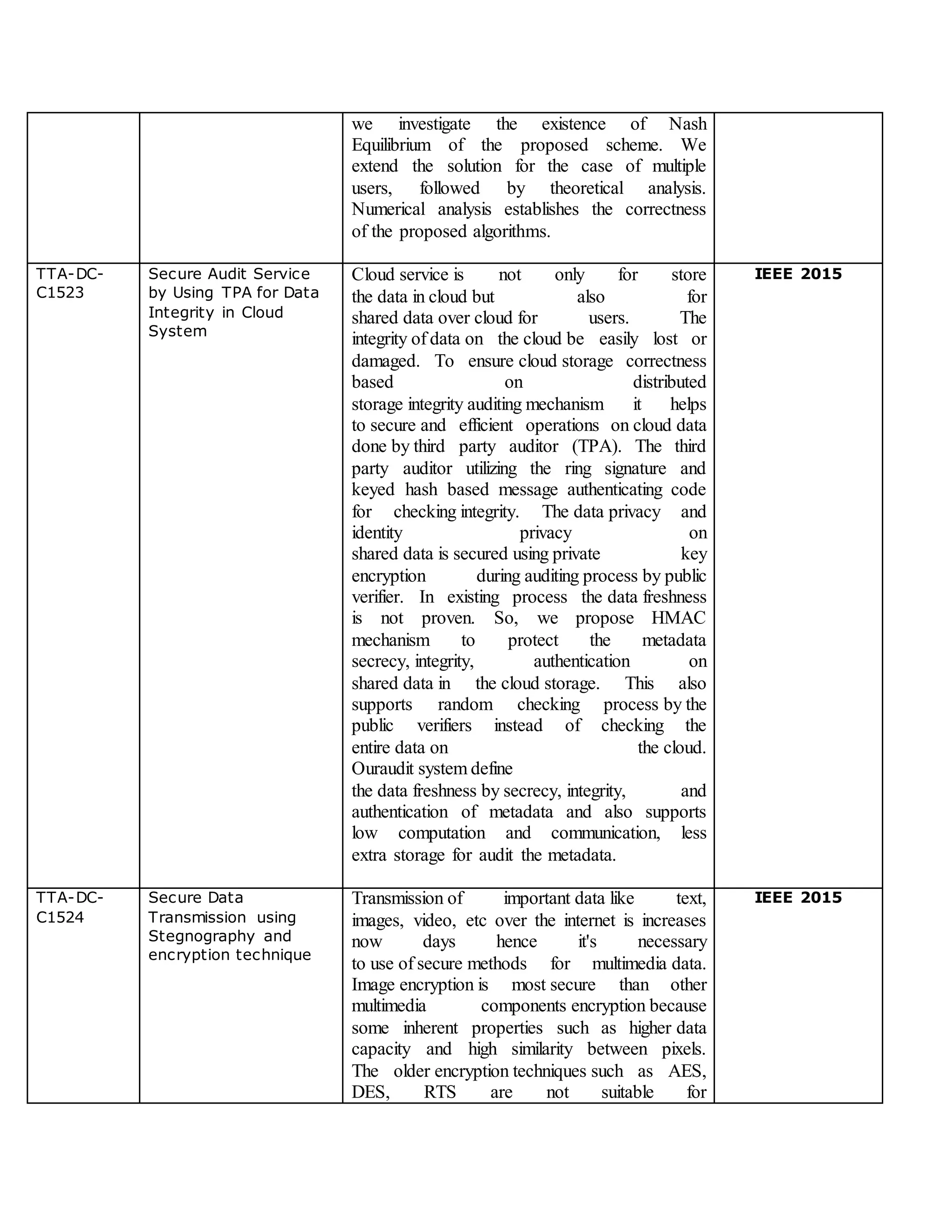

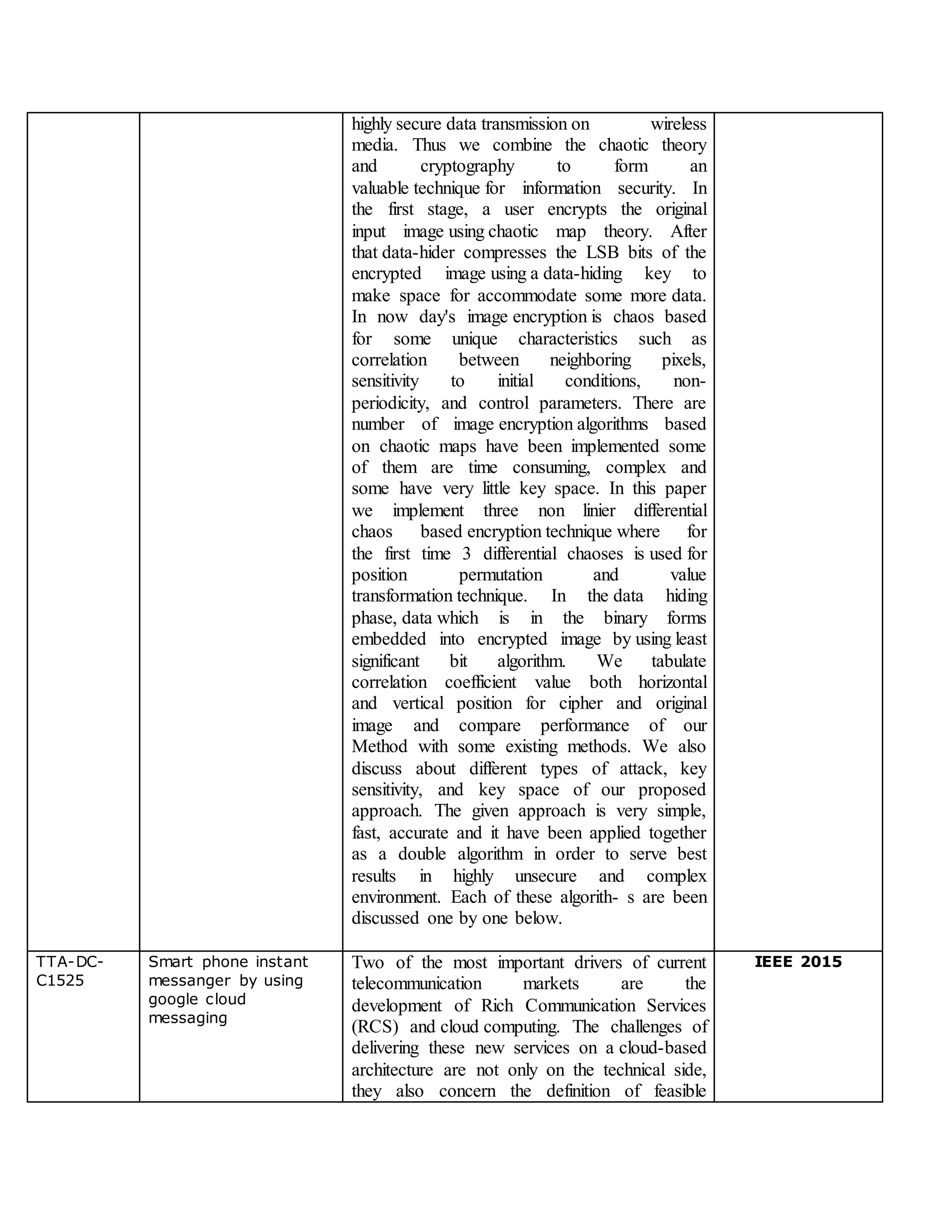

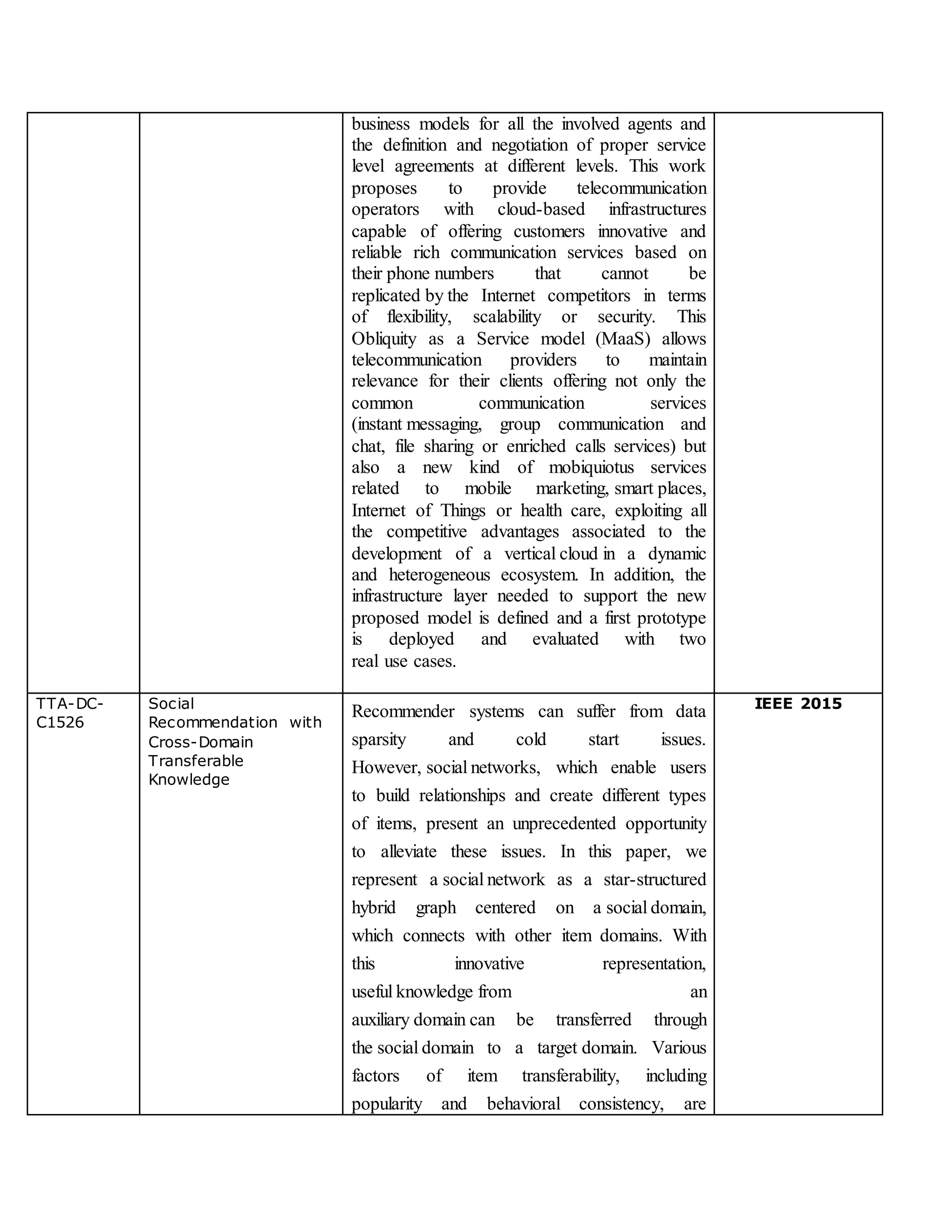

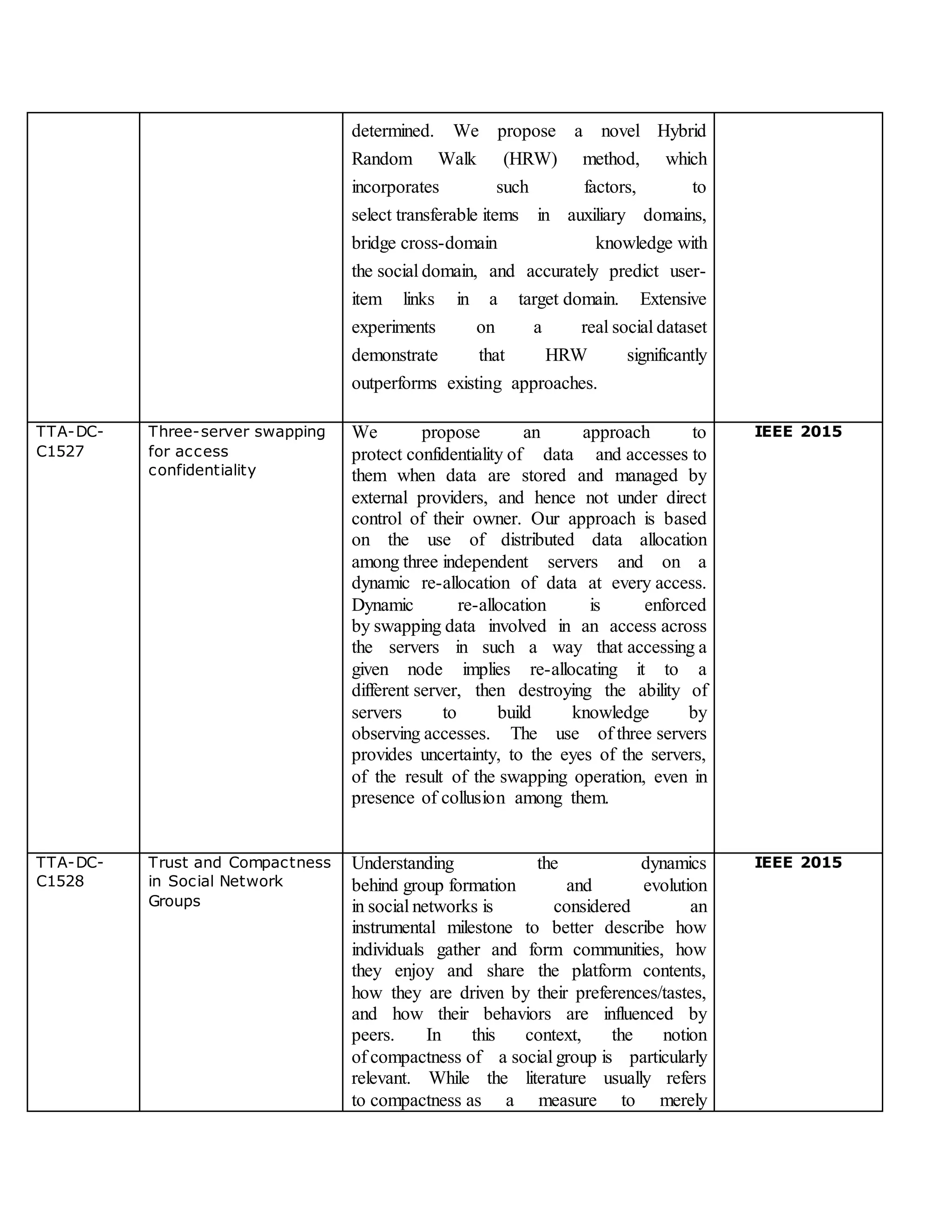

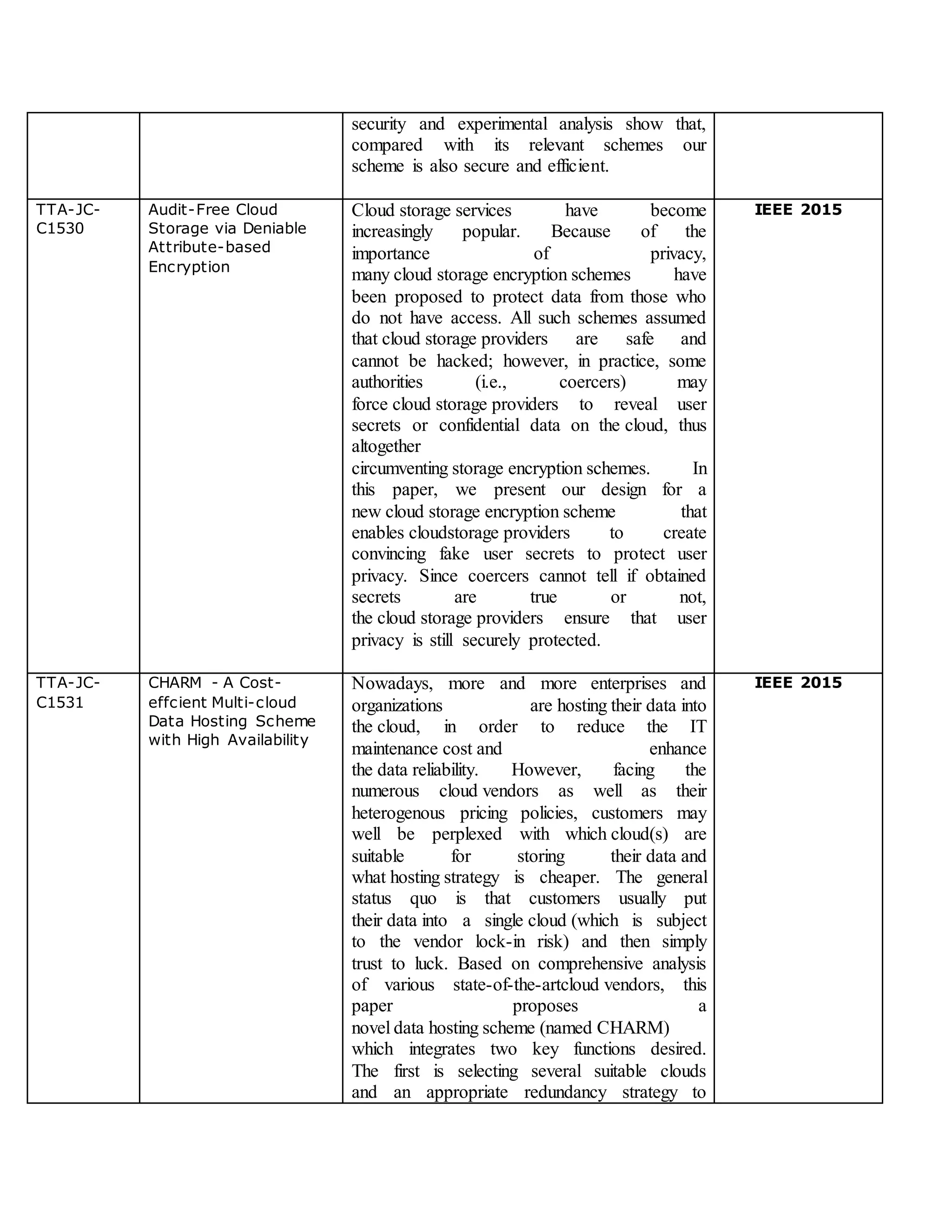

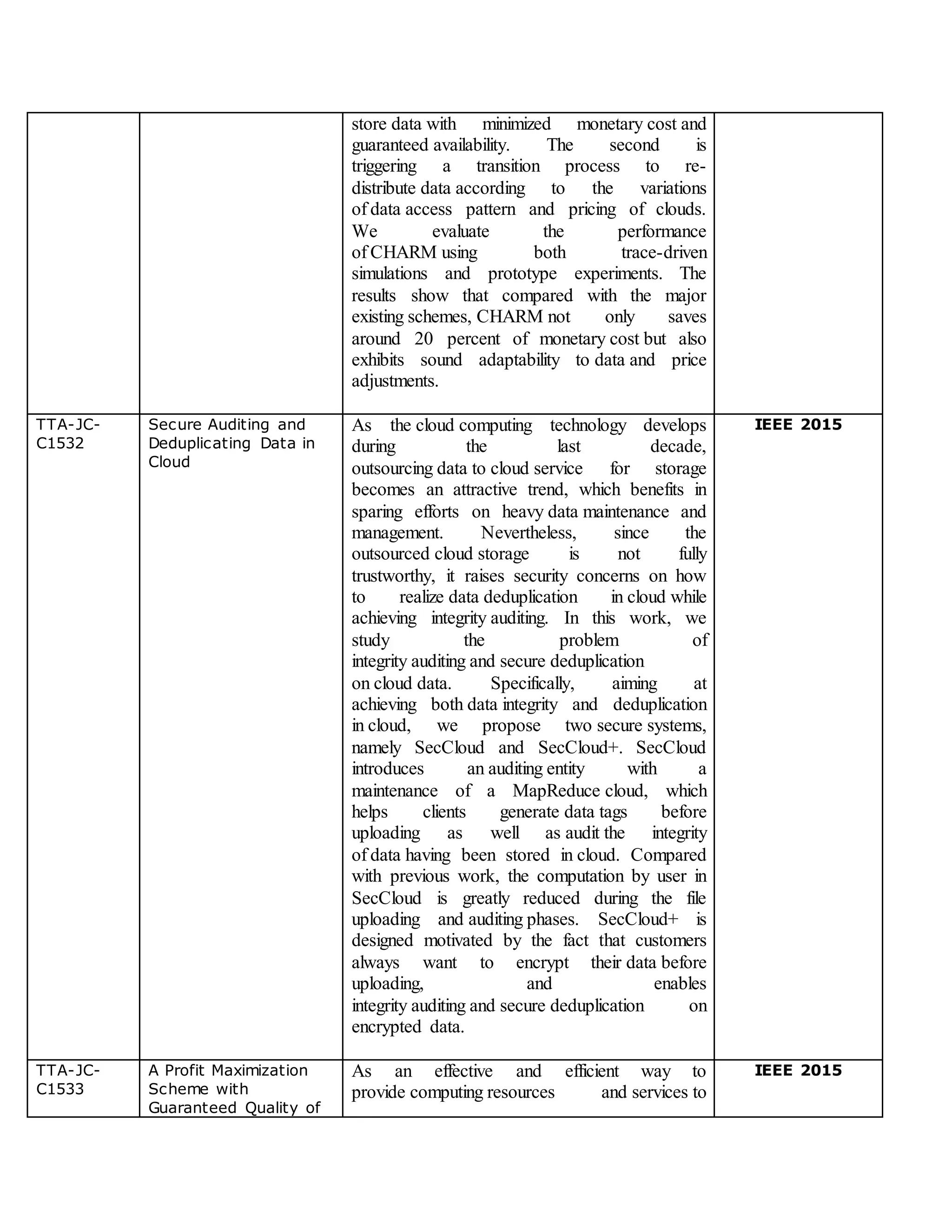

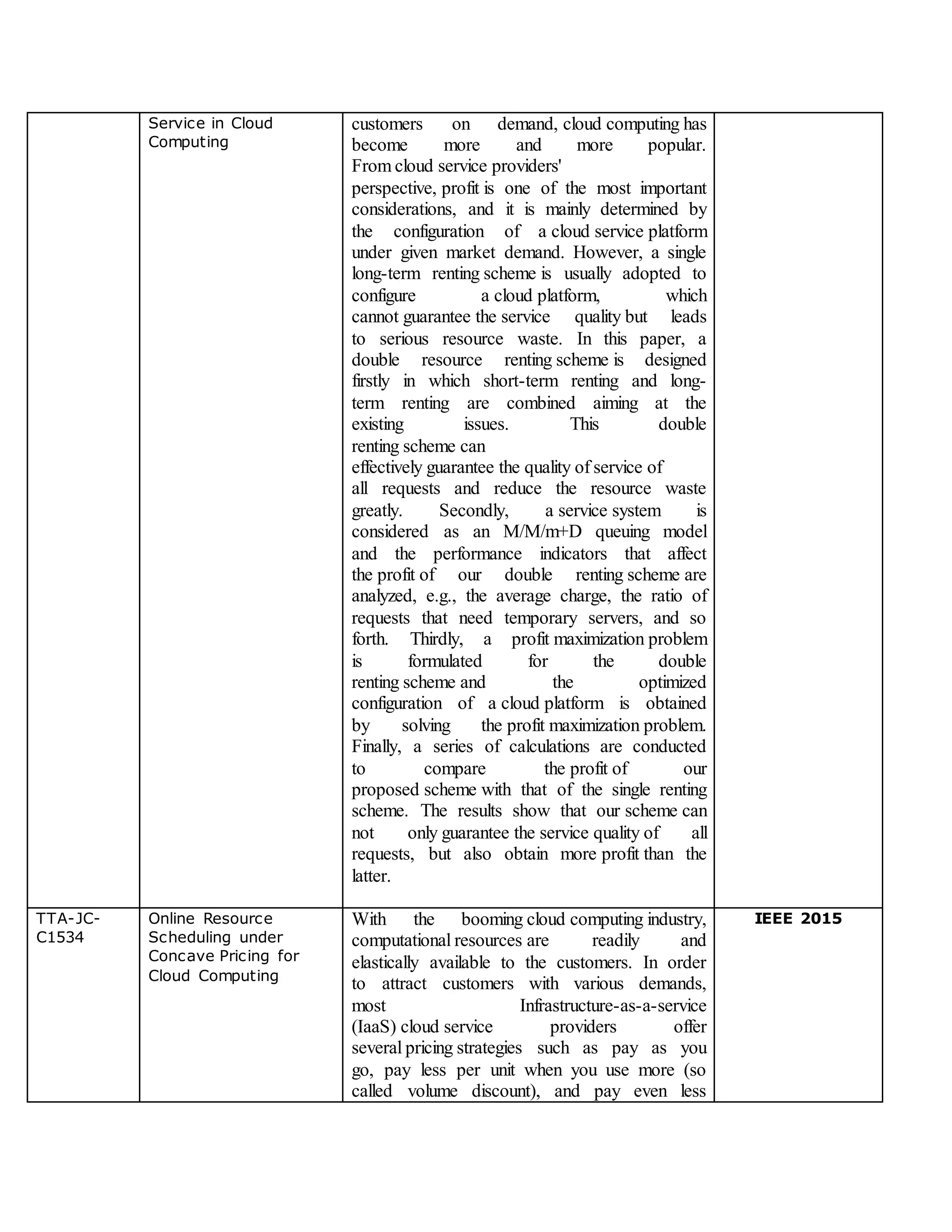

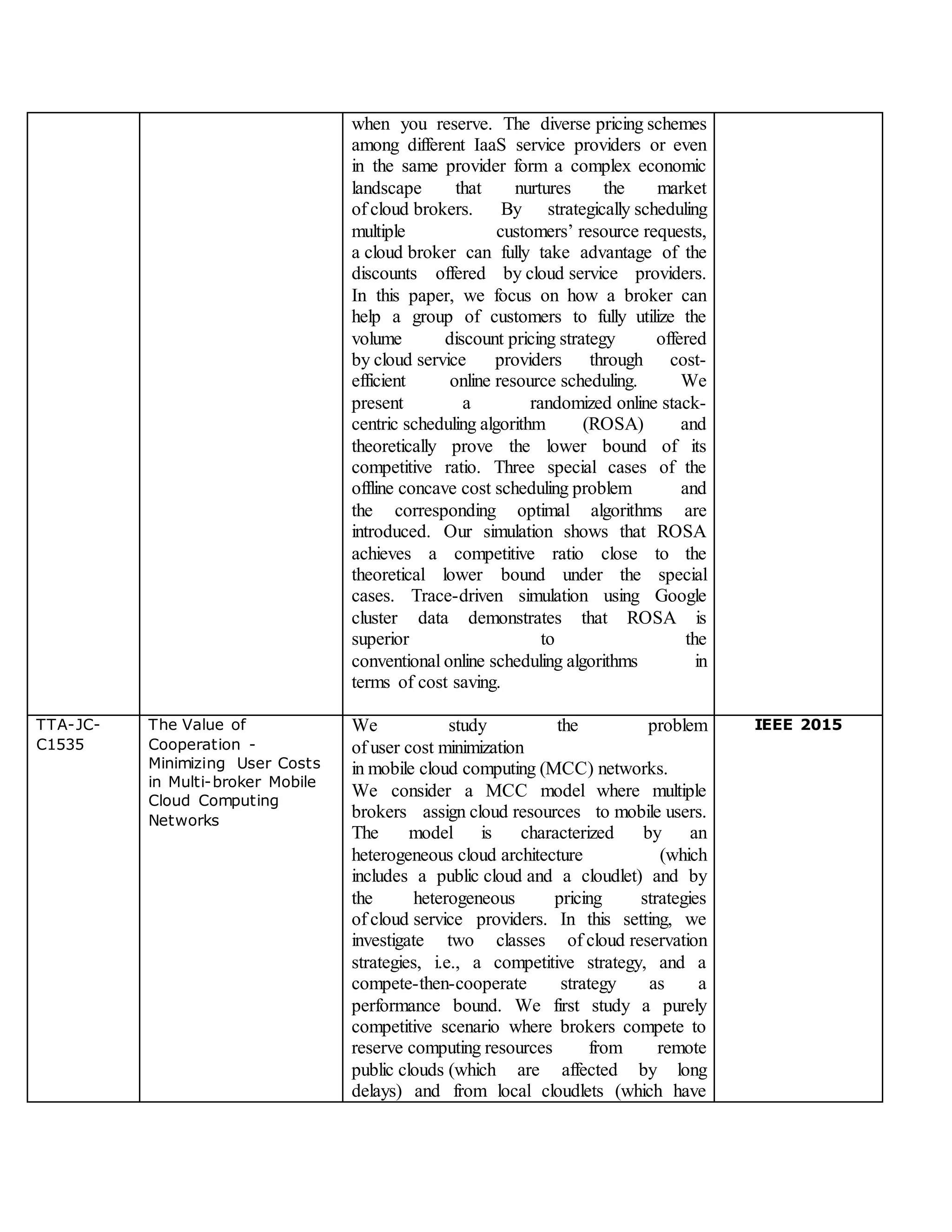

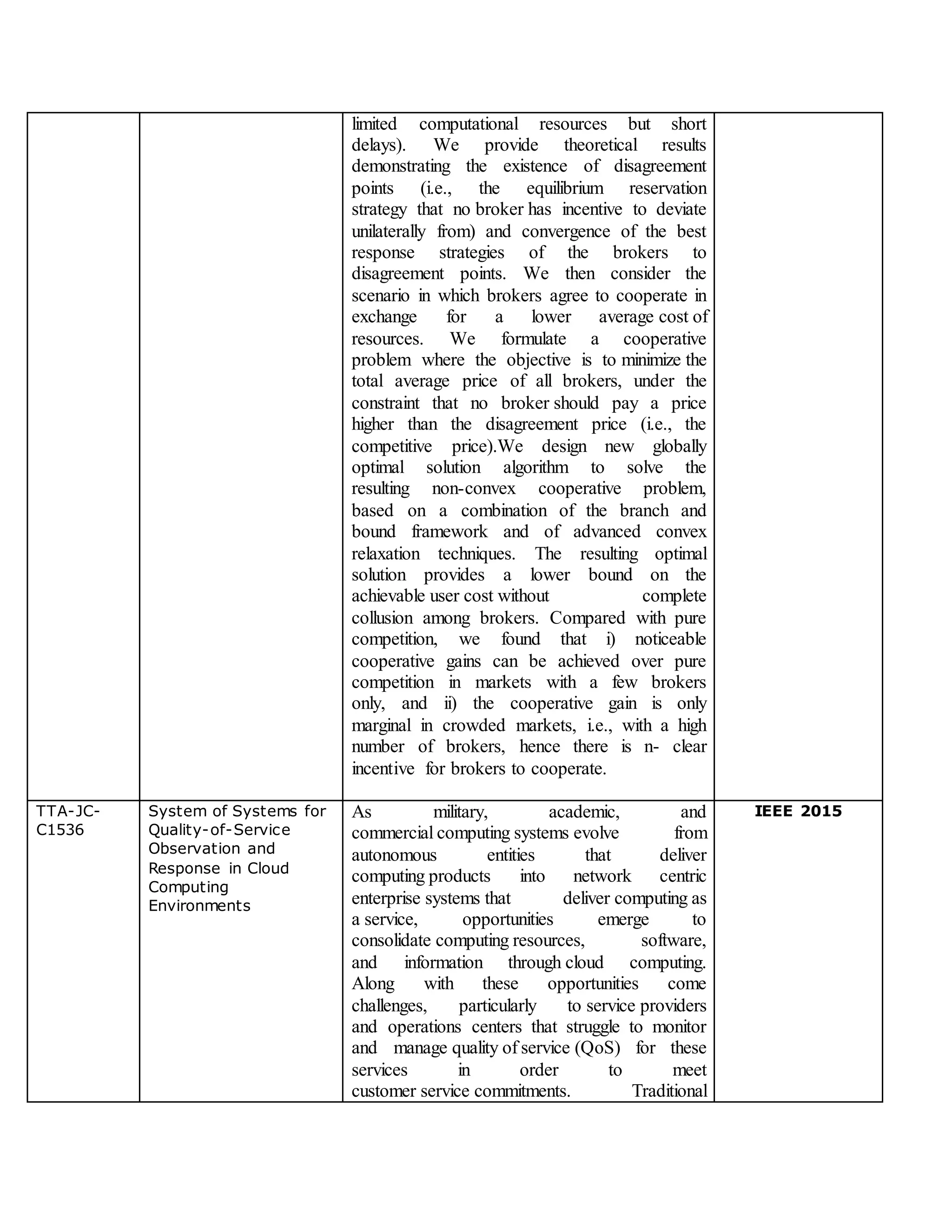

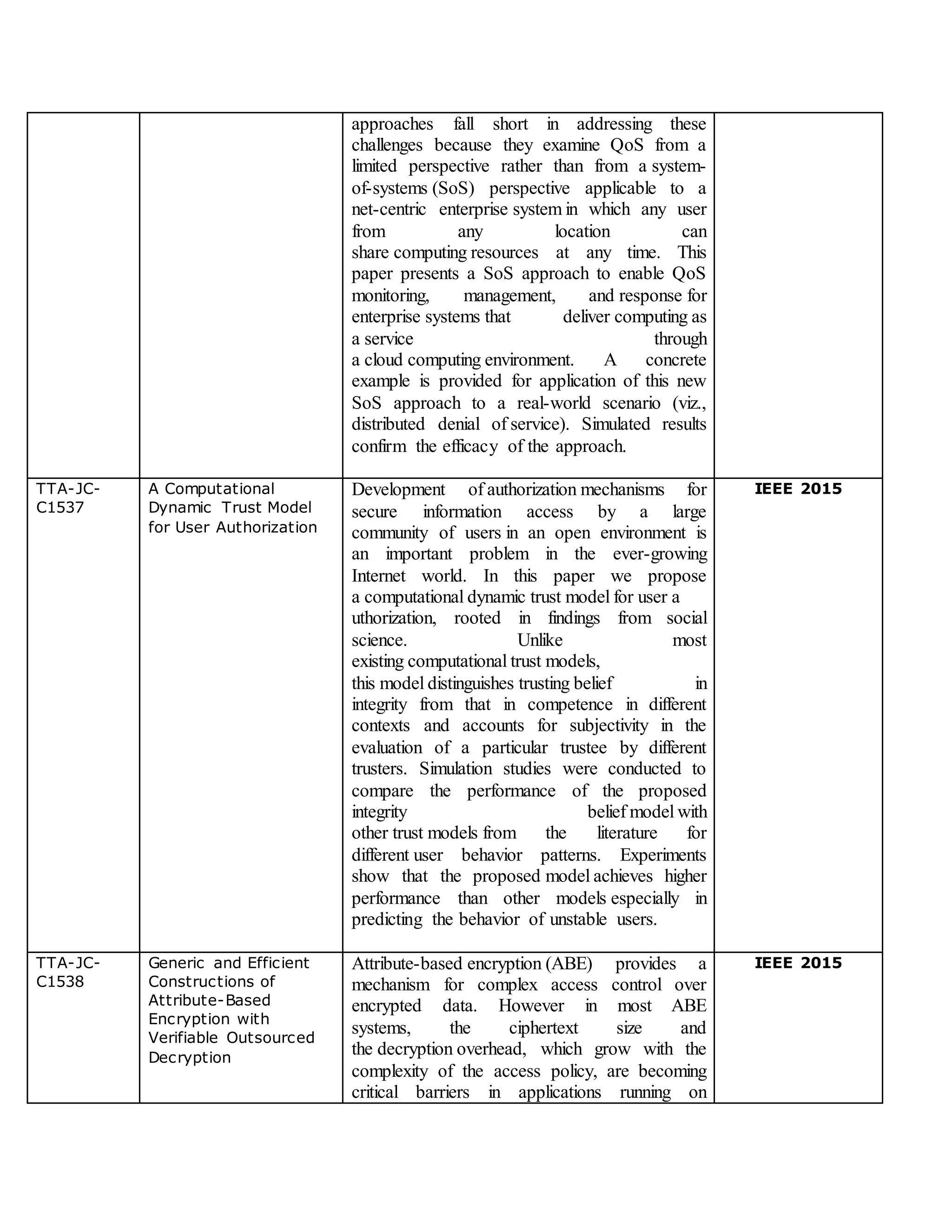

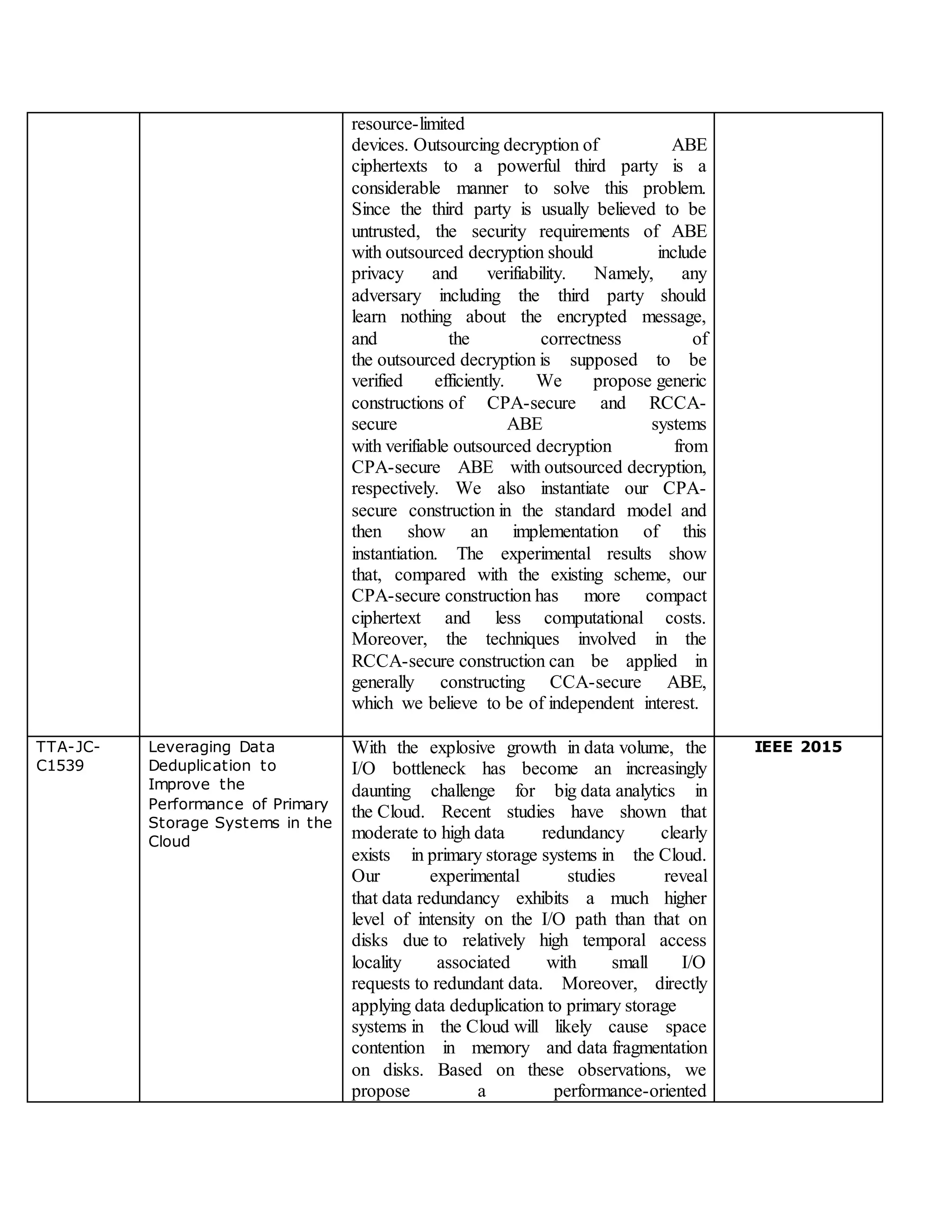

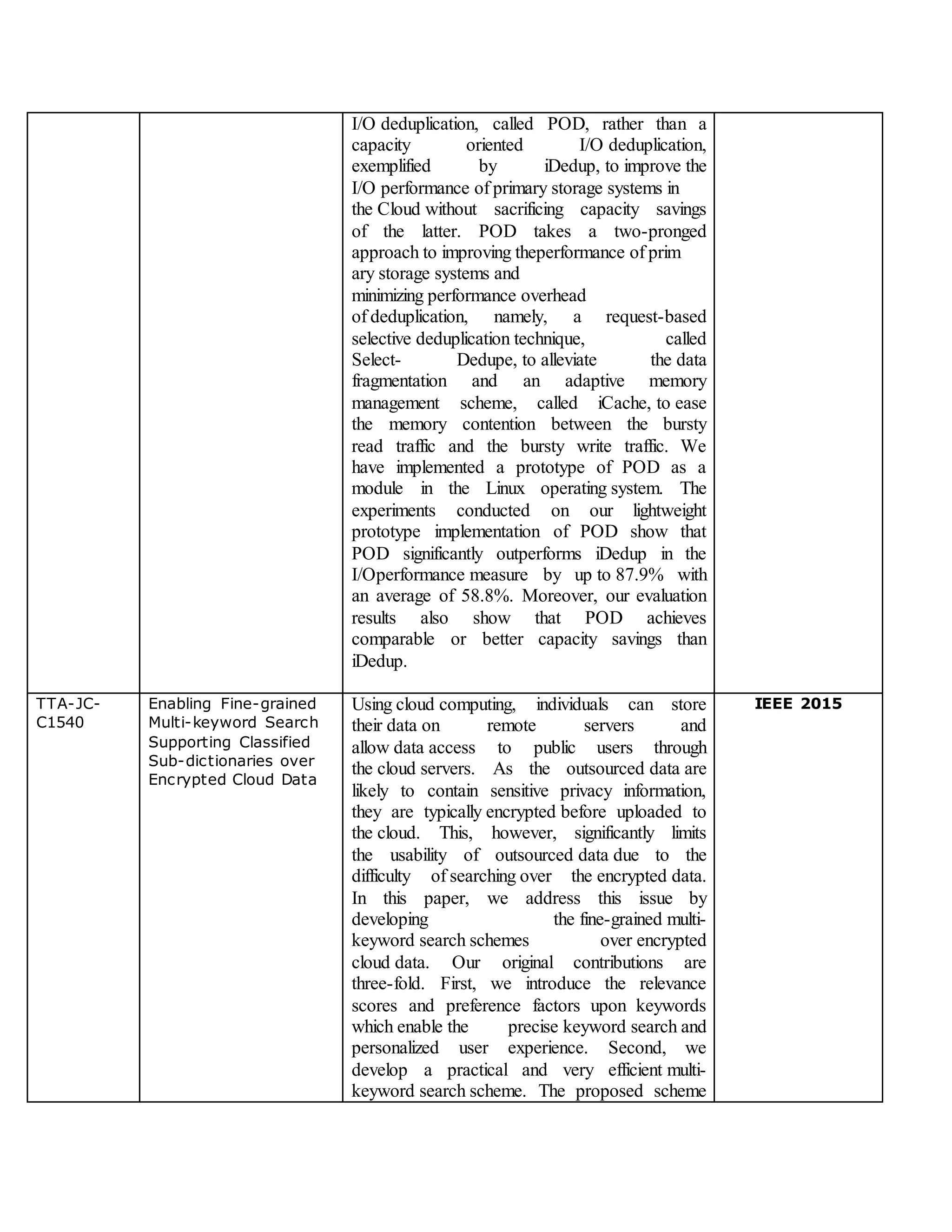

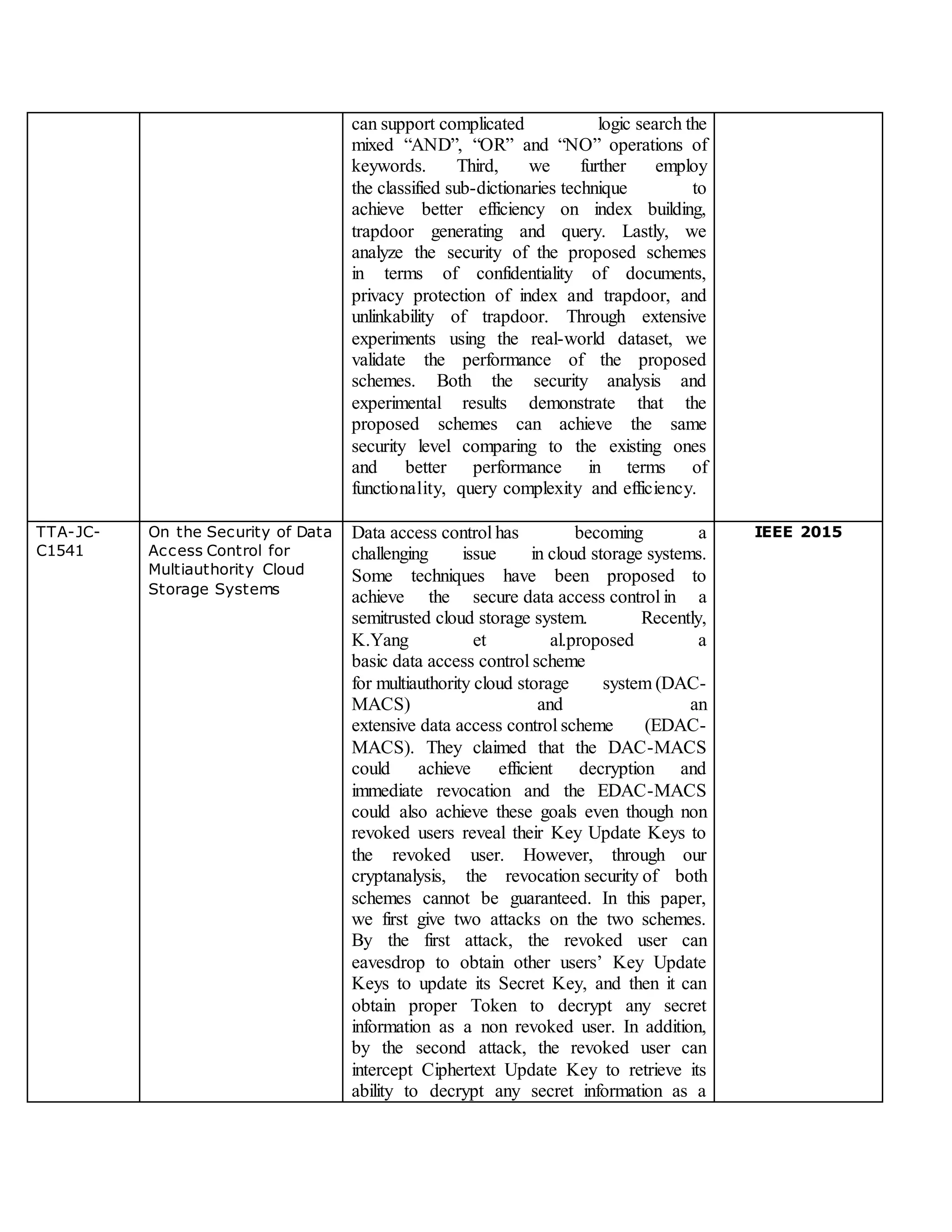

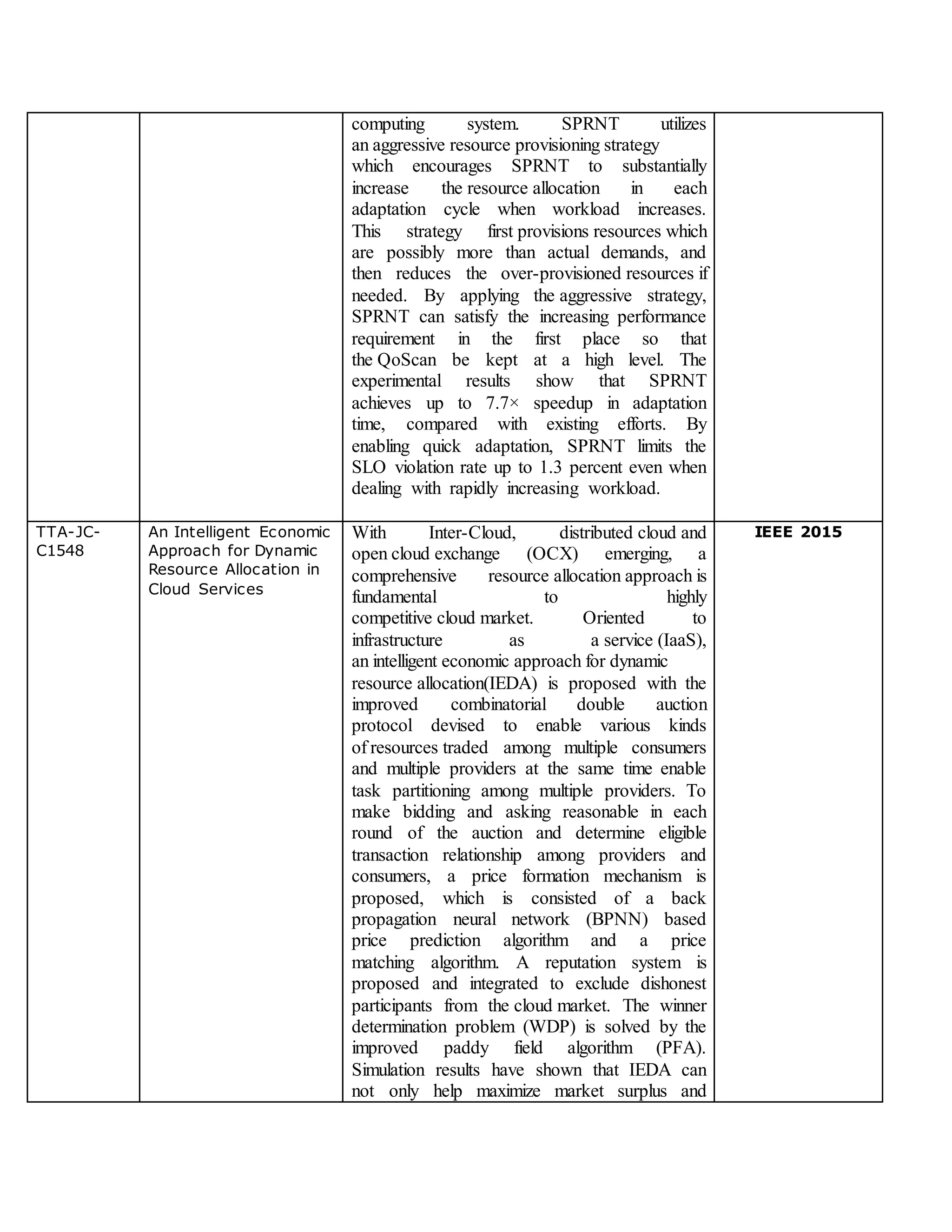

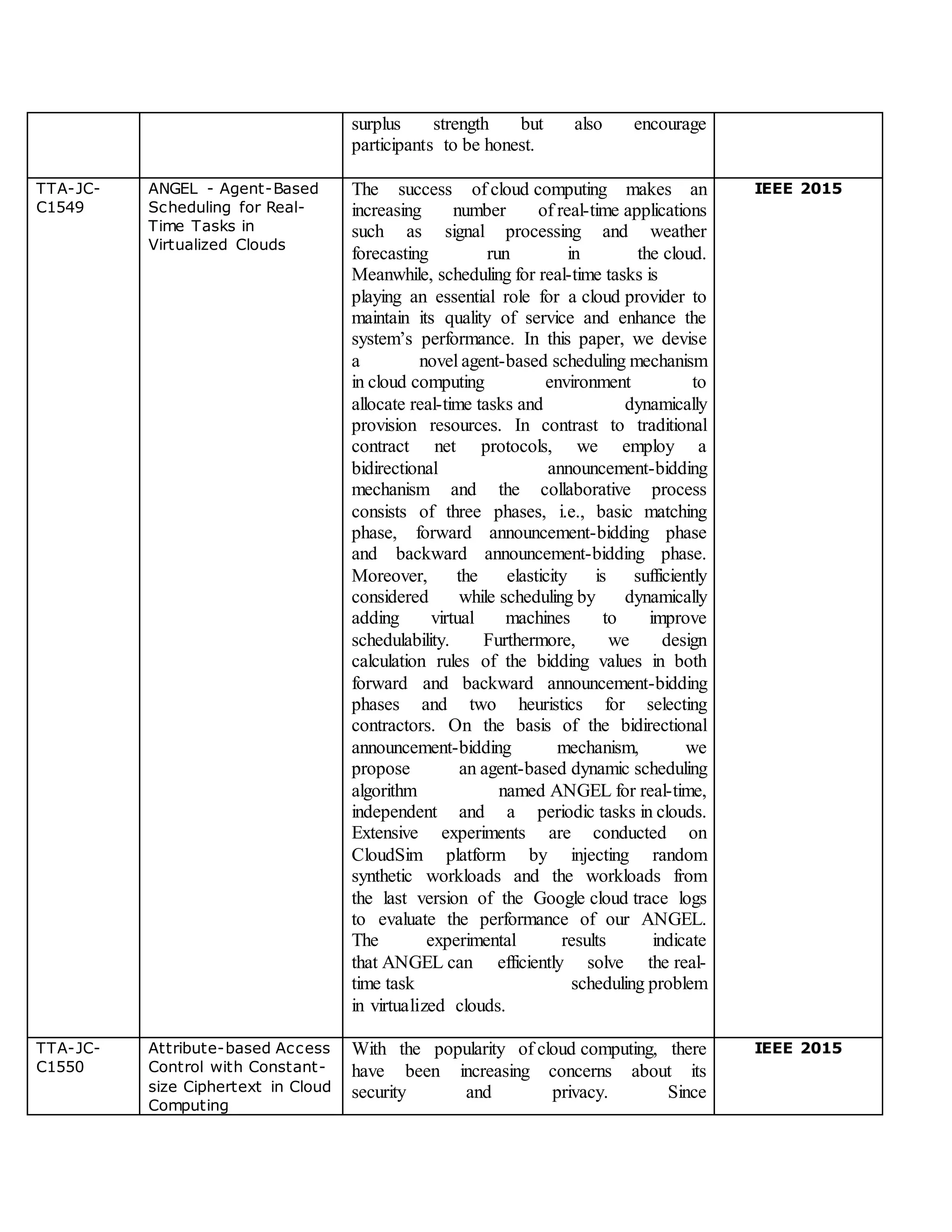

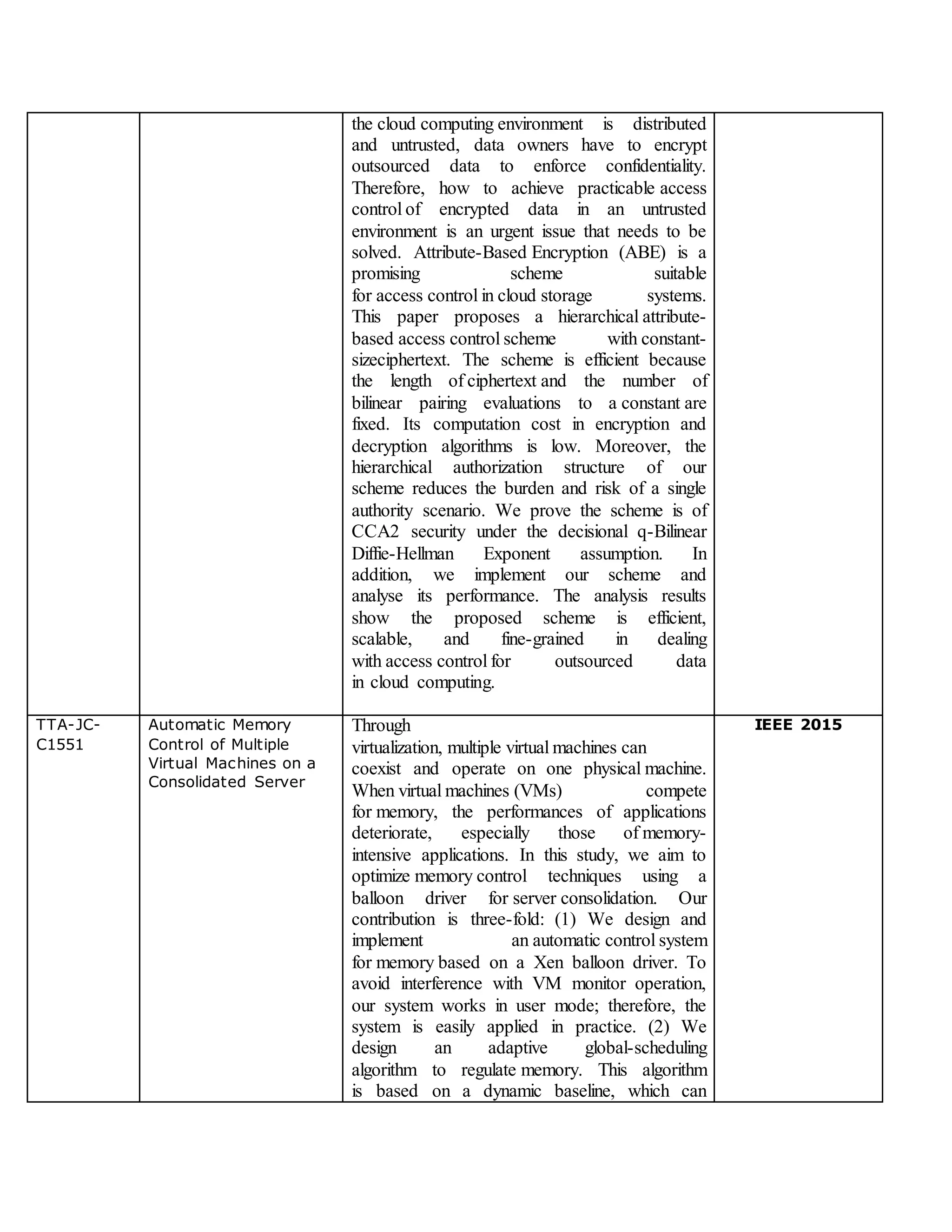

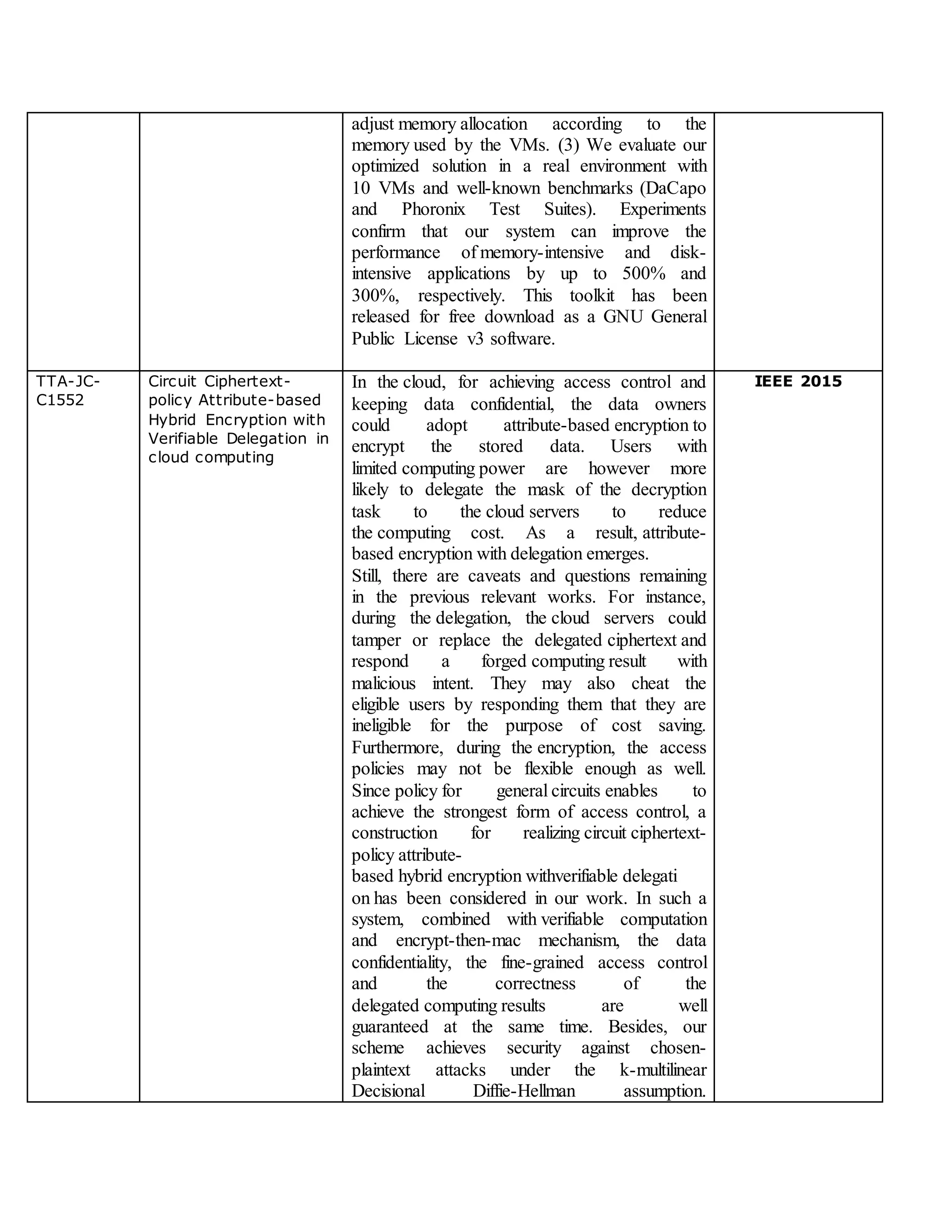

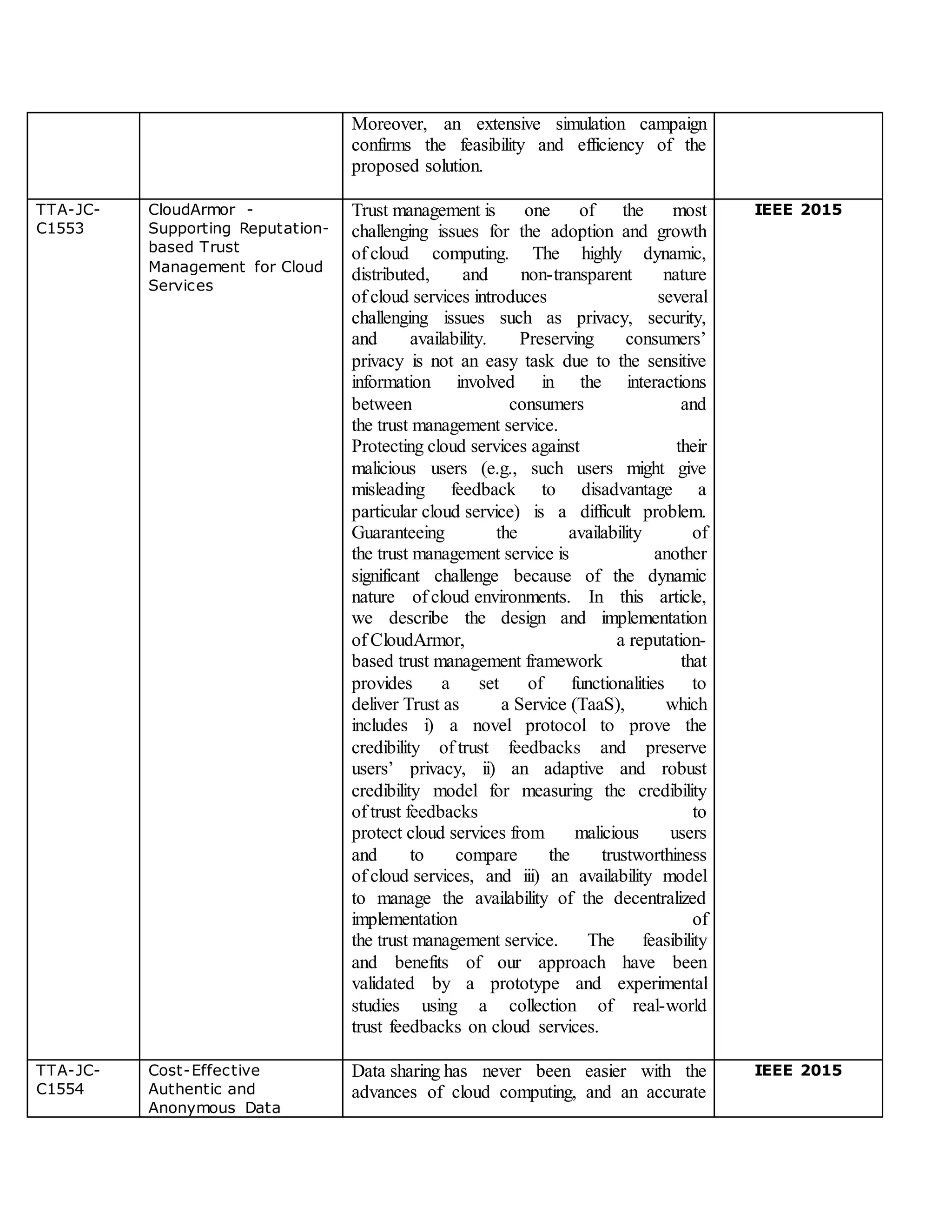

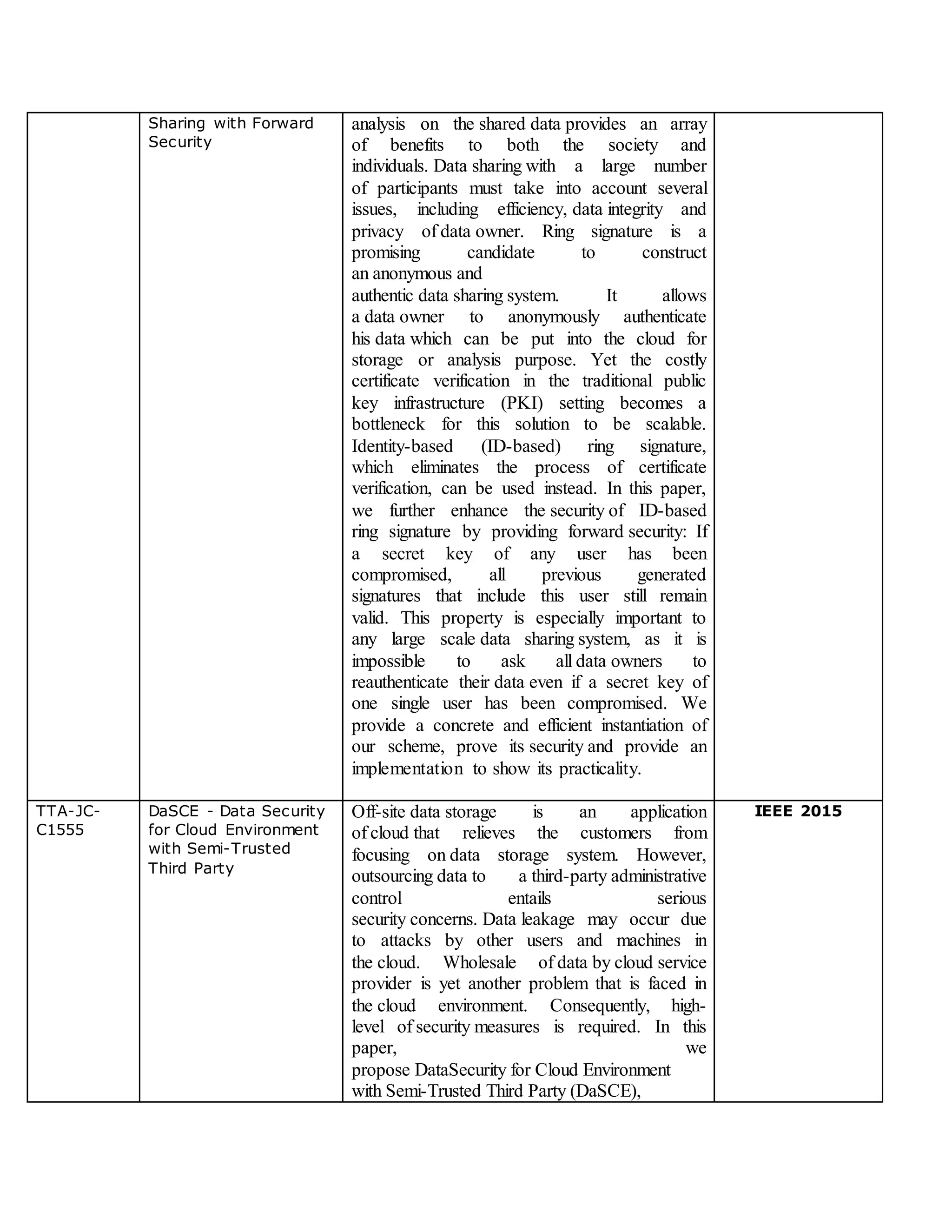

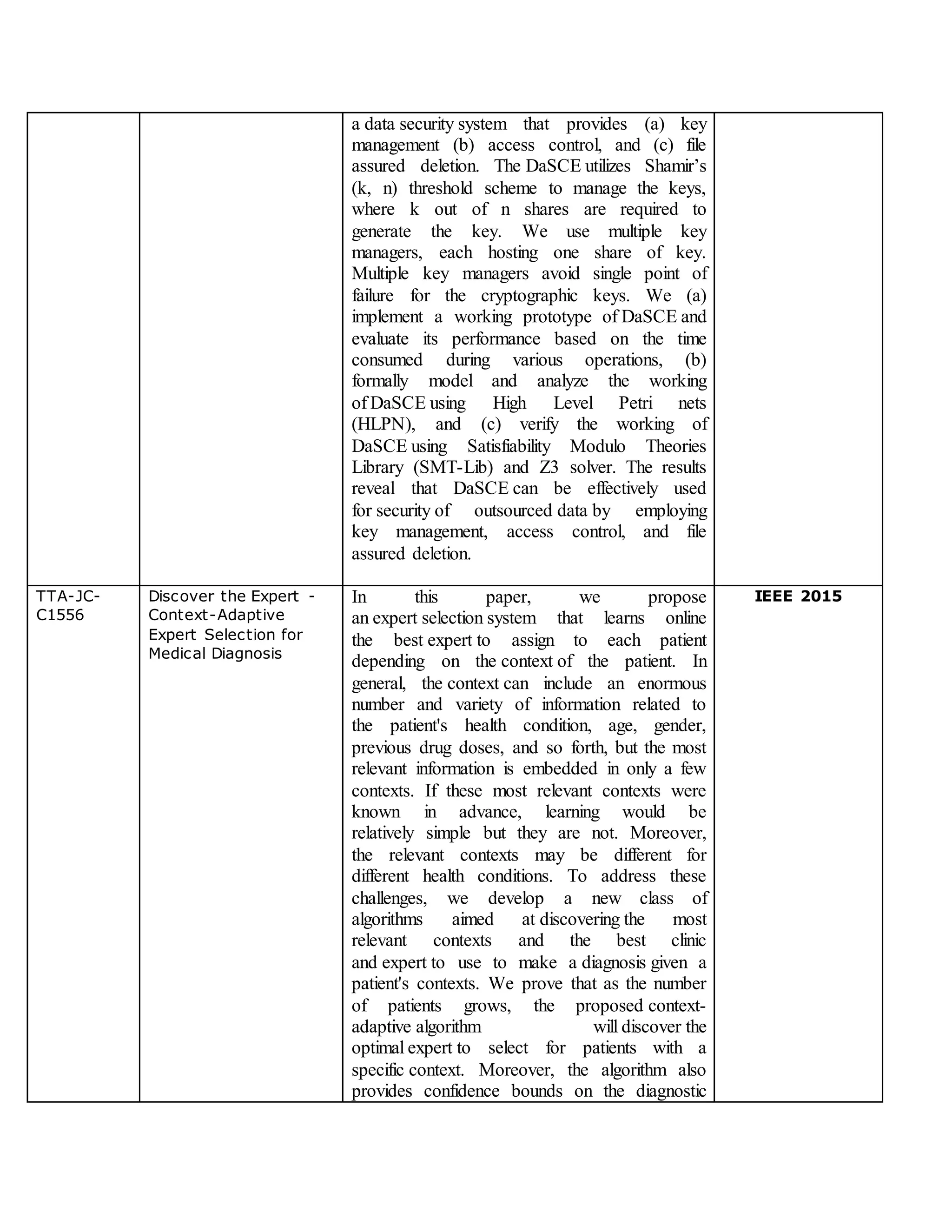

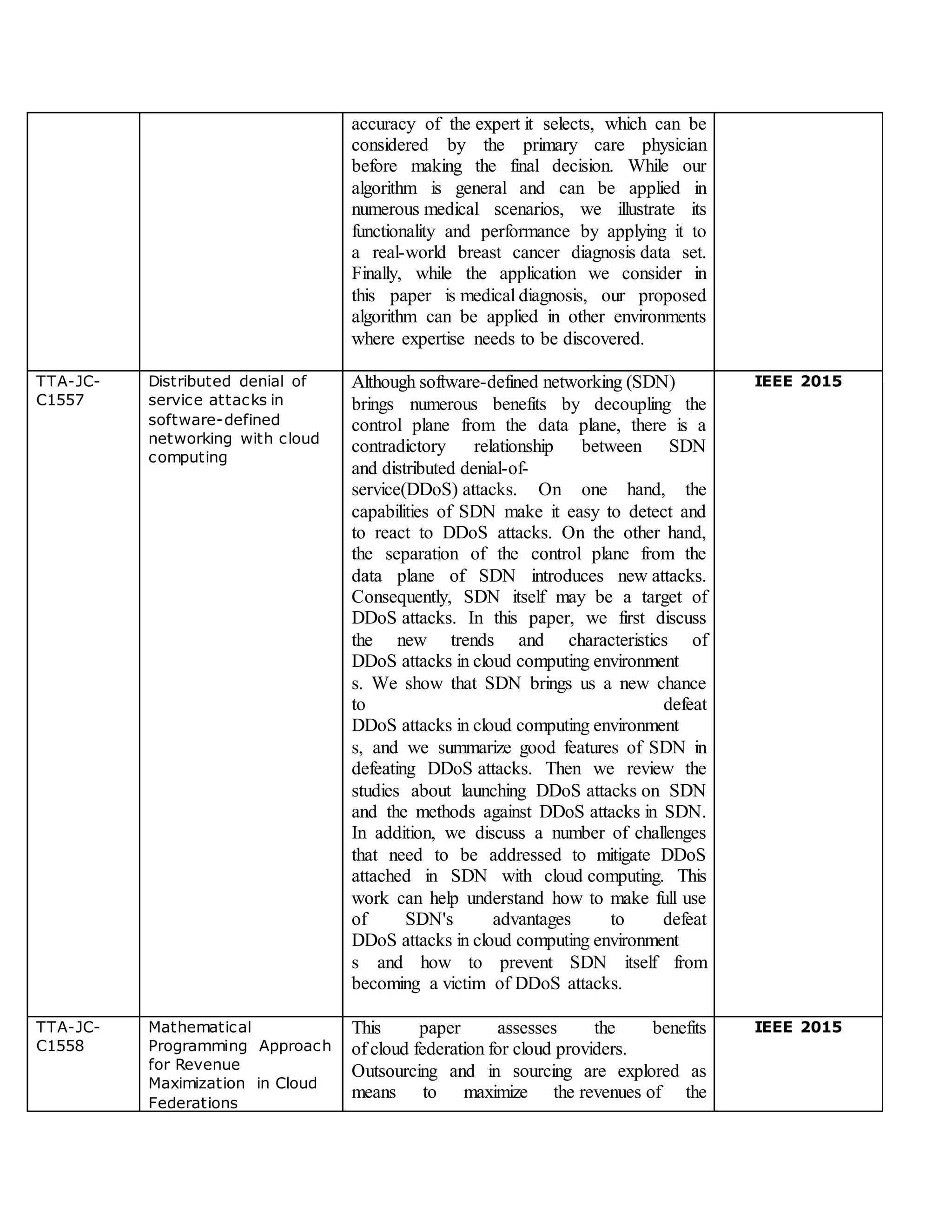

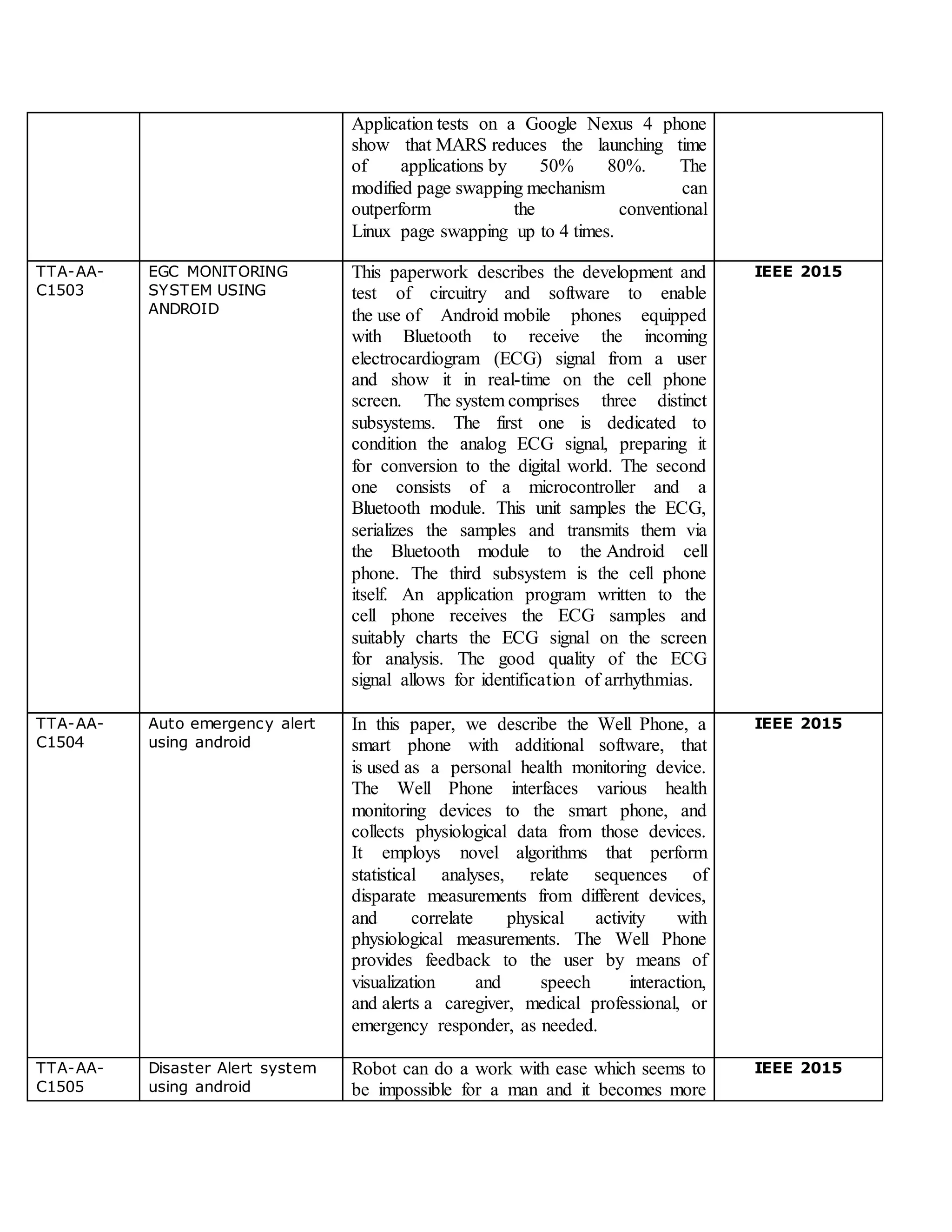

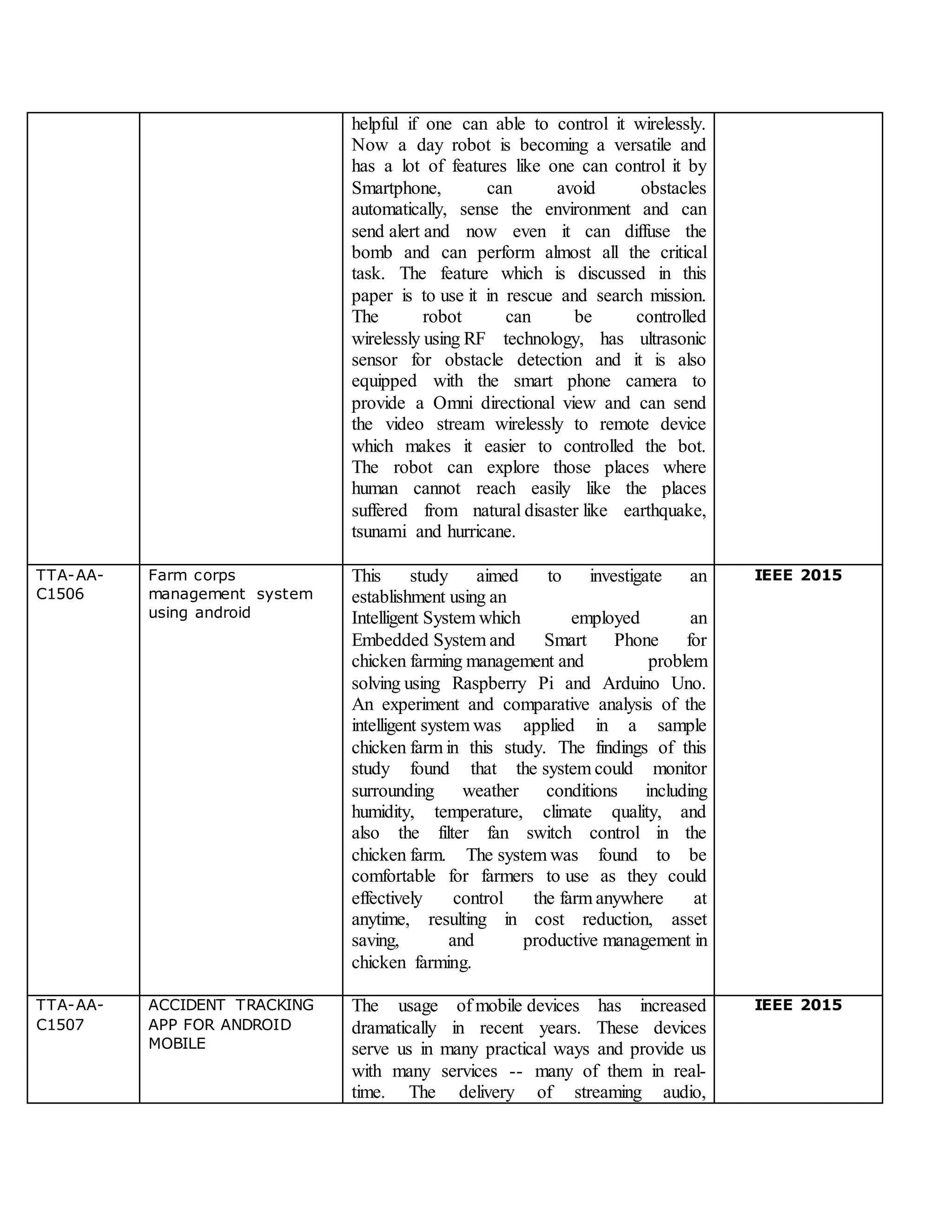

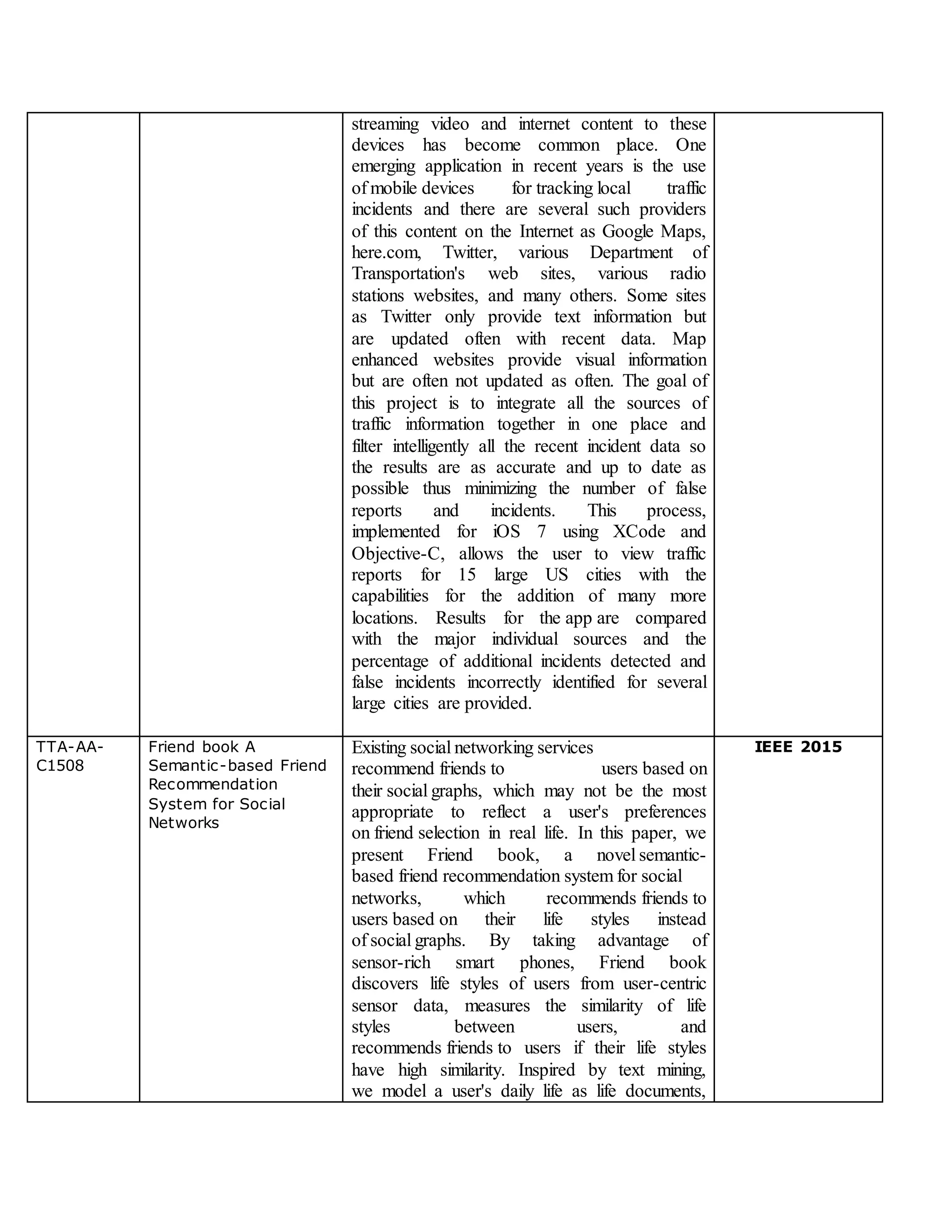

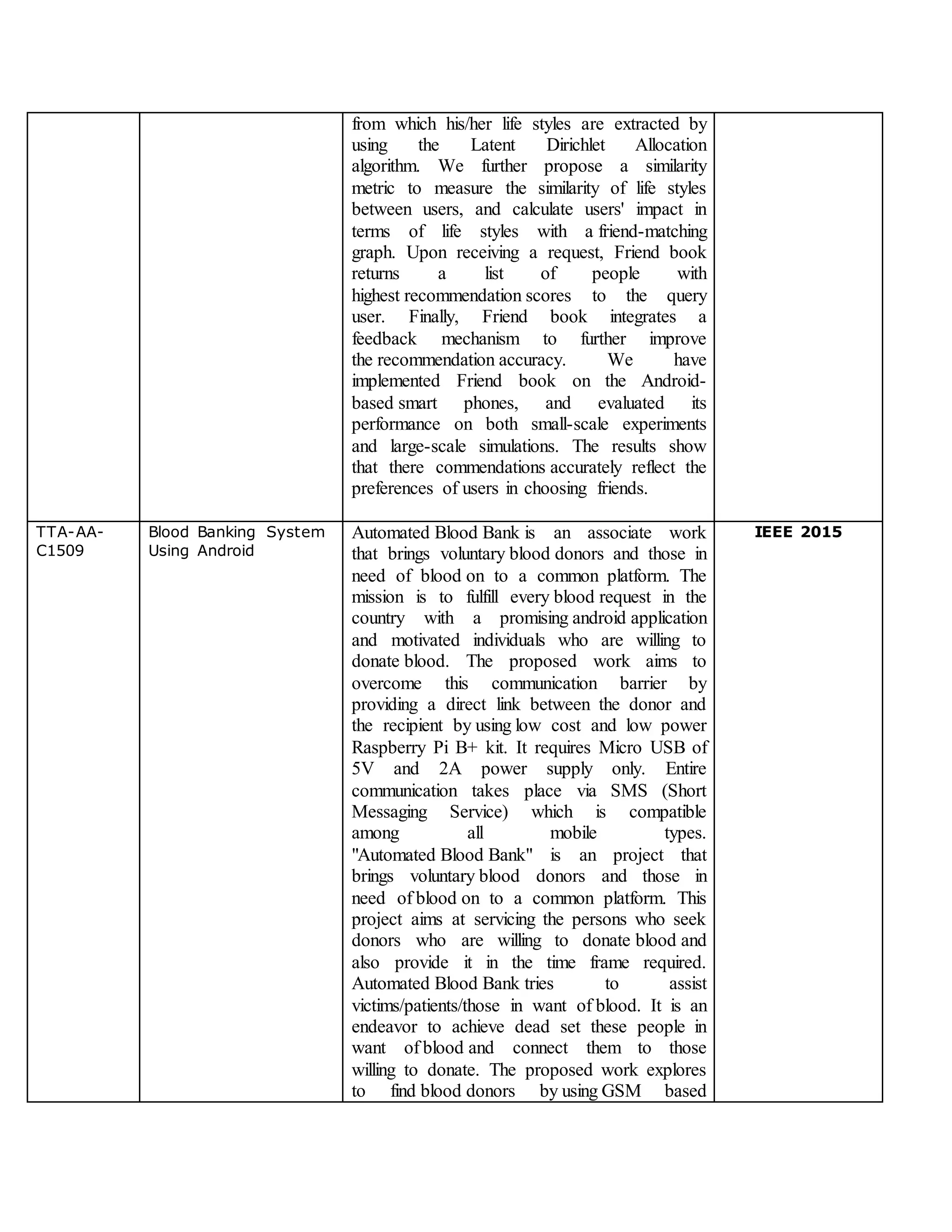

This document summarizes 15 networking projects from TTA, giving the project code, title, description, and reference for each. The projects cover topics such as delay analysis of opportunistic spectrum access MAC protocols, load balancing for network traffic measurement, key exchange protocols for parallel network file systems, anomaly detection in intrusion detection systems, and energy-efficient group key agreement for wireless networks. Contact information for obtaining the full project papers appears at the end.