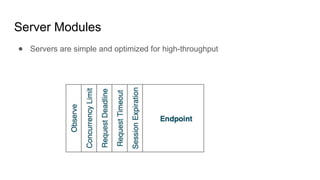

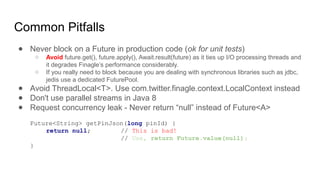

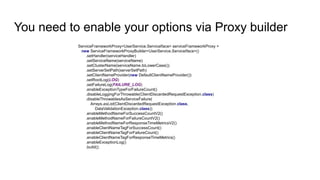

Finagle is an asynchronous RPC framework from Twitter that provides client and server abstractions over protocols such as HTTP and Thrift. It represents asynchronous results as Futures, which offer methods such as `map`, `flatMap`, and `handle` for transforming them. The Java Service Framework builds on Finagle to add metrics, logging, and rate limiting to Java services. Options such as enabling specific logs and metrics are configured through a Proxy builder.
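The Java Service Framework's actual Proxy builder API is not shown here, so the following is only a hypothetical sketch of how a builder could switch logging and metrics on for a service, assuming a JDK dynamic proxy (`java.lang.reflect.Proxy`). `InstrumentedProxyBuilder`, `Greeter`, `enableLogging`, and `enableMetrics` are invented names for illustration, not JSF's API.

```java
import java.lang.reflect.InvocationHandler;
import java.lang.reflect.InvocationTargetException;
import java.lang.reflect.Proxy;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.atomic.AtomicLong;

public class ProxyBuilderSketch {

    // Hypothetical service interface used only for this example.
    public interface Greeter {
        String greet(String name);
    }

    // Hypothetical builder (not JSF's API): wraps an interface implementation
    // with optional call logging and per-method call counters.
    public static final class InstrumentedProxyBuilder<T> {
        private final Class<T> iface;
        private final T target;
        private boolean logCalls = false;
        private Map<String, AtomicLong> callCounts = null;

        public InstrumentedProxyBuilder(Class<T> iface, T target) {
            this.iface = iface;
            this.target = target;
        }

        public InstrumentedProxyBuilder<T> enableLogging() {
            this.logCalls = true;
            return this;
        }

        // Counts are written into a caller-supplied map so they can be inspected.
        public InstrumentedProxyBuilder<T> enableMetrics(Map<String, AtomicLong> sink) {
            this.callCounts = sink;
            return this;
        }

        public T build() {
            // Snapshot the options so later builder calls don't affect this proxy.
            final boolean log = logCalls;
            final Map<String, AtomicLong> counts = callCounts;
            InvocationHandler handler = (self, method, callArgs) -> {
                if (counts != null) {
                    counts.computeIfAbsent(method.getName(), k -> new AtomicLong()).incrementAndGet();
                }
                if (log) {
                    System.out.println("calling " + iface.getSimpleName() + "." + method.getName());
                }
                try {
                    return method.invoke(target, callArgs);
                } catch (InvocationTargetException e) {
                    throw e.getCause(); // rethrow the service's own exception, not the wrapper
                }
            };
            Object instance = Proxy.newProxyInstance(
                    iface.getClassLoader(), new Class<?>[] {iface}, handler);
            return iface.cast(instance);
        }
    }

    public static void main(String[] args) {
        Map<String, AtomicLong> metrics = new ConcurrentHashMap<>();
        Greeter greeter = new InstrumentedProxyBuilder<Greeter>(Greeter.class, name -> "hello " + name)
                .enableLogging()
                .enableMetrics(metrics)
                .build();
        System.out.println(greeter.greet("finagle"));   // hello finagle
        System.out.println(metrics.get("greet").get()); // 1
    }
}
```

A design like this keeps instrumentation out of the service code: the same implementation can be wrapped with or without logging and metrics, depending only on how the builder is configured.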

![Finagle Architecture](https://image.slidesharecdn.com/copyoffinagleandjavaserviceframework-180626170038/85/Finagle-and-Java-Service-Framework-at-Pinterest-5-320.jpg)

Finagle Architecture

- Netty: event-based, low-level network I/O
- Finagle: service-oriented, functional abstractions

Thanks to @vkostyukov for the diagram [3].
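The "service-oriented, functional abstractions" layer follows the idea of the "Your server as a function" paper listed under Resources: a service is a function from a request to a future response, and a filter decorates a service. The sketch is loosely modeled on Finagle's `Service` and `Filter`, but these interfaces are hypothetical simplifications that use the JDK's `CompletableFuture` (Java 9 or later, for `failedFuture`) in place of Twitter's `Future`.

```java
import java.util.concurrent.CompletableFuture;
import java.util.function.Function;

public class ServiceAsFunctionSketch {

    // A service is an asynchronous function: request in, future response out.
    @FunctionalInterface
    public interface Service<Req, Rep> extends Function<Req, CompletableFuture<Rep>> {}

    // A filter sees each request before the wrapped service does and can
    // change it, short-circuit it, or post-process the response.
    @FunctionalInterface
    public interface Filter<Req, Rep> {
        CompletableFuture<Rep> apply(Req request, Service<Req, Rep> next);

        // Compose the filter with a service, producing a new service.
        default Service<Req, Rep> andThen(Service<Req, Rep> service) {
            return request -> apply(request, service);
        }
    }

    public static void main(String[] args) {
        // Hypothetical business logic: upper-case the request.
        Service<String, String> echo = req -> CompletableFuture.completedFuture(req.toUpperCase());

        // Hypothetical filter: reject empty requests, otherwise wrap the reply.
        Filter<String, String> validate = (req, next) -> {
            if (req.isEmpty()) {
                return CompletableFuture.failedFuture(new IllegalArgumentException("empty request"));
            }
            return next.apply(req).thenApply(rep -> "[" + rep + "]");
        };

        Service<String, String> server = validate.andThen(echo);
        System.out.println(server.apply("ping").join());                 // [PING]
        System.out.println(server.apply("").isCompletedExceptionally()); // true
    }
}
```

Because a filter composed with a service is itself a service, cross-cutting concerns (validation, stats, retries) can be stacked without touching the business logic.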

![Part 2. Programming with Futures](https://image.slidesharecdn.com/copyoffinagleandjavaserviceframework-180626170038/85/Finagle-and-Java-Service-Framework-at-Pinterest-10-320.jpg)

Part 2. Programming with Futures

- What is a Future?
  - A container that holds the value of an asynchronous computation, which may be either a success or a failure.
- History: `java.util.concurrent.Future` was introduced in Java 1.5 but had limited functionality: `isDone()` and a blocking `get()`.
- Twitter Futures are more powerful and add composability.
- They are part of the `util-core` package and are not tied to any thread-pool model.
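To make the limitation concrete, this minimal sketch shows that a `java.util.concurrent.Future` can only be polled or blocked on. For contrast it uses the JDK's later `CompletableFuture` (Java 8) purely as a stand-in for a composable future; it is not Twitter's `Future`, and its method names (such as `thenApply`) differ from Twitter's.

```java
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

public class JucFutureSketch {

    // Java 1.5 style: submit work, then poll with isDone() or block with get().
    // The interface offers no way to register a callback for "what to do next".
    public static int blockingAnswer(ExecutorService pool)
            throws InterruptedException, ExecutionException {
        Future<Integer> f = pool.submit(() -> 6 * 7);
        return f.get(); // blocks the calling thread until the task finishes
    }

    // For contrast: CompletableFuture lets the next step be attached
    // without blocking, which is the kind of composability the slide means.
    public static CompletableFuture<String> composedAnswer(ExecutorService pool) {
        return CompletableFuture.supplyAsync(() -> 6 * 7, pool)
                .thenApply(n -> "answer=" + n);
    }

    public static void main(String[] args) throws Exception {
        ExecutorService pool = Executors.newSingleThreadExecutor();
        try {
            System.out.println(blockingAnswer(pool));        // 42
            System.out.println(composedAnswer(pool).join()); // answer=42
        } finally {
            pool.shutdown();
        }
    }
}
```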

![How to consume Futures?](https://image.slidesharecdn.com/copyoffinagleandjavaserviceframework-180626170038/85/Finagle-and-Java-Service-Framework-at-Pinterest-12-320.jpg)

How to consume Futures?

- Someone gives you a Future; you act on it and pass it on (a bit like a hot potato).

Typical actions:

- Transform the value: `map()`, `handle()`
- Log it or update stats (side effects/callbacks): `onSuccess()`, `onFailure()`
- Trigger another async computation and return its result: `flatMap()`, `rescue()`

Most of these handlers are variations of the basic handler `transform()`:

`Future<B> transform(Function<Try<A>, Future<B>> f);`
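To show how the other handlers can be derived from `transform()`, here is a toy model. `ToyFuture` and `Try` are hypothetical, simplified classes (already completed, single-threaded, no thread safety or interrupts), not Twitter util's implementation. In Twitter util, `rescue` and `handle` take Scala partial functions; plain `java.util.function.Function`s are used here for simplicity, and `lookupName` is an invented stand-in for a remote call.

```java
import java.util.function.Consumer;
import java.util.function.Function;

public class TransformSketch {

    // Minimal Try: holds either a successful value or a failure.
    public static final class Try<A> {
        public final A value;
        public final Throwable error;

        private Try(A value, Throwable error) {
            this.value = value;
            this.error = error;
        }

        public static <A> Try<A> success(A value) { return new Try<>(value, null); }
        public static <A> Try<A> failure(Throwable error) { return new Try<>(null, error); }
        public boolean isSuccess() { return error == null; }
    }

    // Toy, already-completed future (hypothetical, NOT Twitter util's Future).
    // Every combinator below is derived from transform().
    public static final class ToyFuture<A> {
        private final Try<A> result;

        private ToyFuture(Try<A> result) { this.result = result; }

        public static <A> ToyFuture<A> value(A a) { return new ToyFuture<>(Try.success(a)); }
        public static <A> ToyFuture<A> exception(Throwable t) { return new ToyFuture<>(Try.failure(t)); }

        // Basic handler: inspect the outcome (success or failure), return a new future.
        // A RuntimeException thrown by f becomes a failed future.
        public <B> ToyFuture<B> transform(Function<Try<A>, ToyFuture<B>> f) {
            try {
                return f.apply(result);
            } catch (RuntimeException e) {
                return exception(e);
            }
        }

        // flatMap: on success, start another (async) computation; failures pass through.
        public <B> ToyFuture<B> flatMap(Function<A, ToyFuture<B>> f) {
            return transform(t -> t.isSuccess() ? f.apply(t.value) : ToyFuture.<B>exception(t.error));
        }

        // map: transform a successful value.
        public <B> ToyFuture<B> map(Function<A, B> f) {
            return flatMap(a -> value(f.apply(a)));
        }

        // rescue: on failure, start another computation; successes pass through.
        public ToyFuture<A> rescue(Function<Throwable, ToyFuture<A>> f) {
            return transform(t -> t.isSuccess() ? this : f.apply(t.error));
        }

        // handle: replace a failure with a plain value.
        public ToyFuture<A> handle(Function<Throwable, A> f) {
            return rescue(e -> value(f.apply(e)));
        }

        // Side-effect callbacks: return this future unless the callback itself throws.
        public ToyFuture<A> onSuccess(Consumer<A> f) {
            return transform(t -> { if (t.isSuccess()) f.accept(t.value); return this; });
        }

        public ToyFuture<A> onFailure(Consumer<Throwable> f) {
            return transform(t -> { if (!t.isSuccess()) f.accept(t.error); return this; });
        }

        // Read the already-available result; a failure is rethrown, wrapped.
        public A get() {
            if (result.isSuccess()) return result.value;
            throw new RuntimeException(result.error);
        }
    }

    // Hypothetical "remote call" for the demo: fails for negative ids.
    public static ToyFuture<String> lookupName(int id) {
        if (id < 0) return ToyFuture.exception(new IllegalArgumentException("bad id " + id));
        return ToyFuture.value("user-" + id);
    }

    public static void main(String[] args) {
        ToyFuture<String> ok = ToyFuture.value(6)
                .map(n -> n * 7)                         // transform the value
                .flatMap(TransformSketch::lookupName)    // chain another computation
                .onSuccess(s -> System.out.println("got " + s));
        System.out.println(ok.get());                    // user-42

        ToyFuture<String> recovered = ToyFuture.value(-1)
                .flatMap(TransformSketch::lookupName)
                .onFailure(e -> System.out.println("failed: " + e.getMessage()))
                .handle(e -> "anonymous");
        System.out.println(recovered.get());             // anonymous
    }
}
```

In this toy, `map` is written via `flatMap`, `handle` via `rescue`, and everything bottoms out in `transform`, the only method that sees the full `Try` (success or failure).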

![Resources](https://image.slidesharecdn.com/copyoffinagleandjavaserviceframework-180626170038/85/Finagle-and-Java-Service-Framework-at-Pinterest-31-320.jpg)

Resources:

- “Your server as a function” paper: https://dl.acm.org/citation.cfm?id=2525538
- Source code: https://github.com/twitter/finagle
- Finaglers group: https://groups.google.com/d/forum/finaglers

Blogs:

- [1] https://twitter.github.io/scala_school/finagle.html
- [2] https://twitter.github.io/finagle/guide/developers/Futures.html
- [3] http://vkostyukov.net/posts/finagle-101/