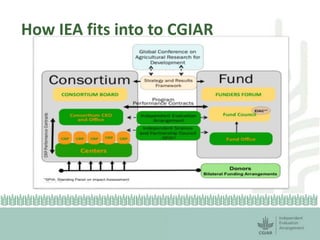

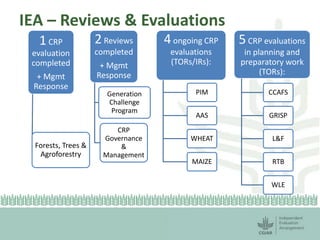

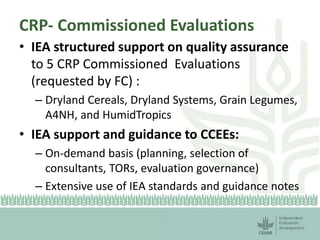

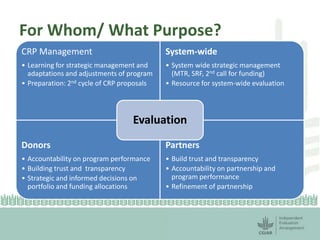

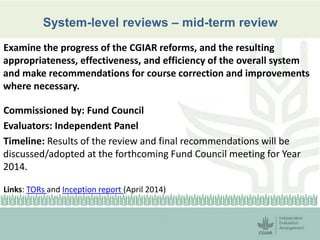

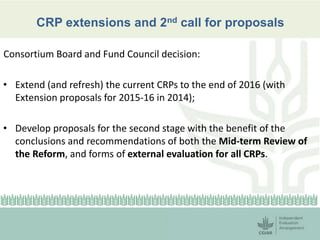

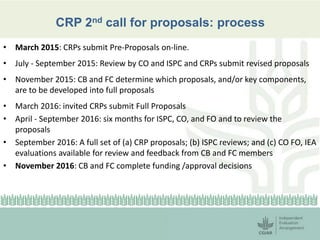

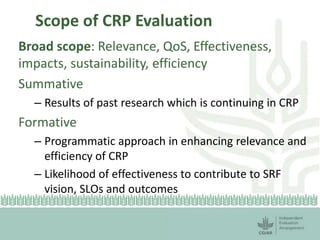

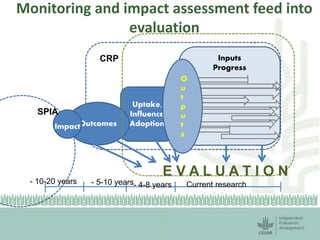

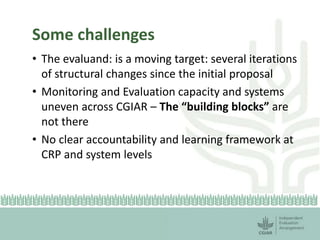

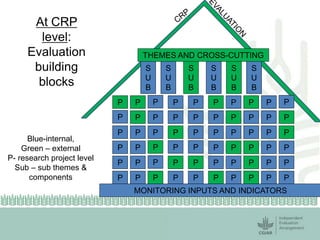

The document outlines the progress of the CGIAR evaluation function, emphasizing its role as a key instrument for accountability and learning within the organization. It details completed and ongoing evaluations, support structures for various CGIAR Research Programs (CRPs), and timelines for future evaluations, along with the strategic objectives of enhancing management, accountability, and transparency. It also discusses key recommendations for improvement and the processes surrounding CRP proposals, highlighting the importance of building a culture of evaluation across CGIAR.