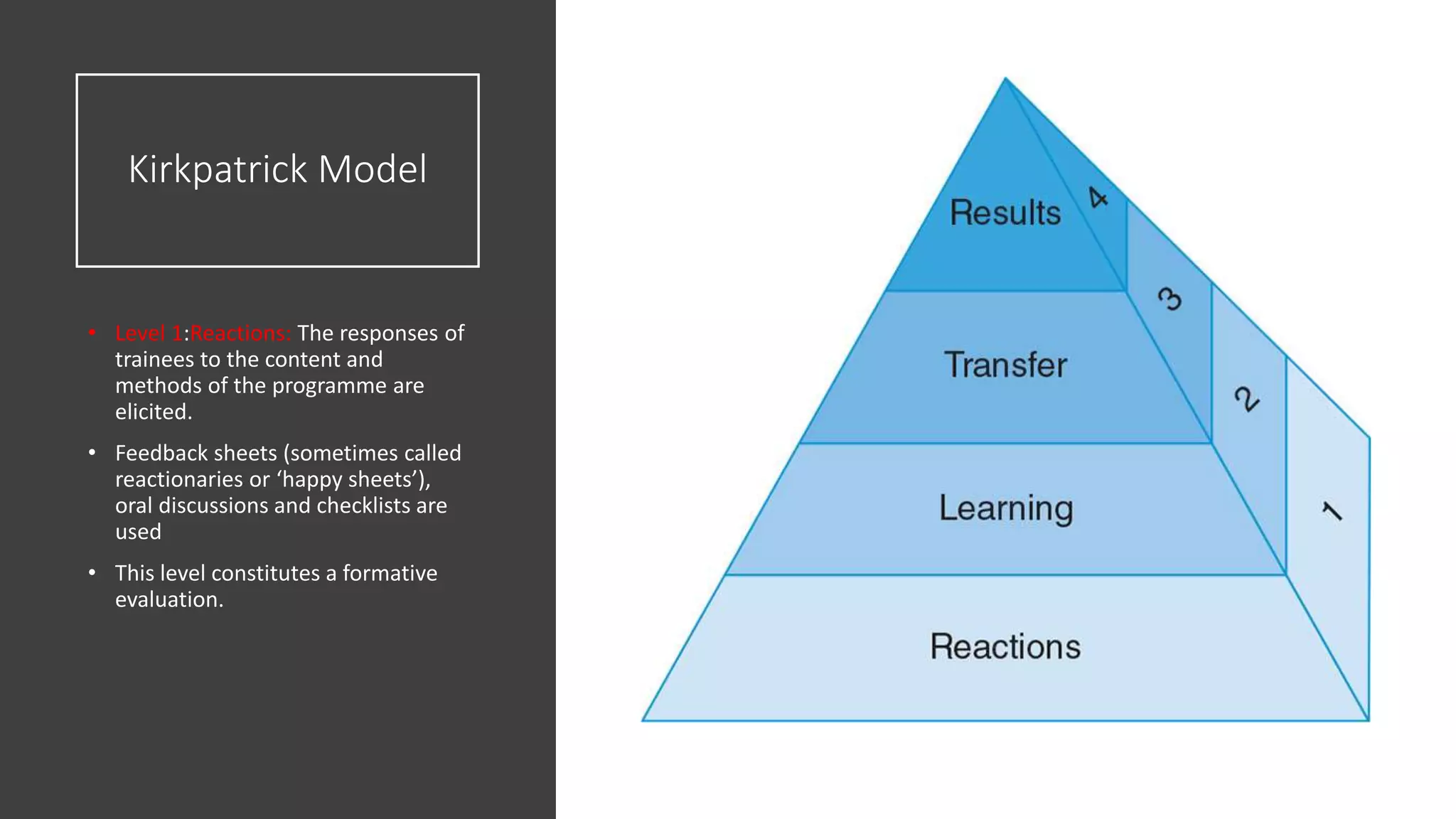

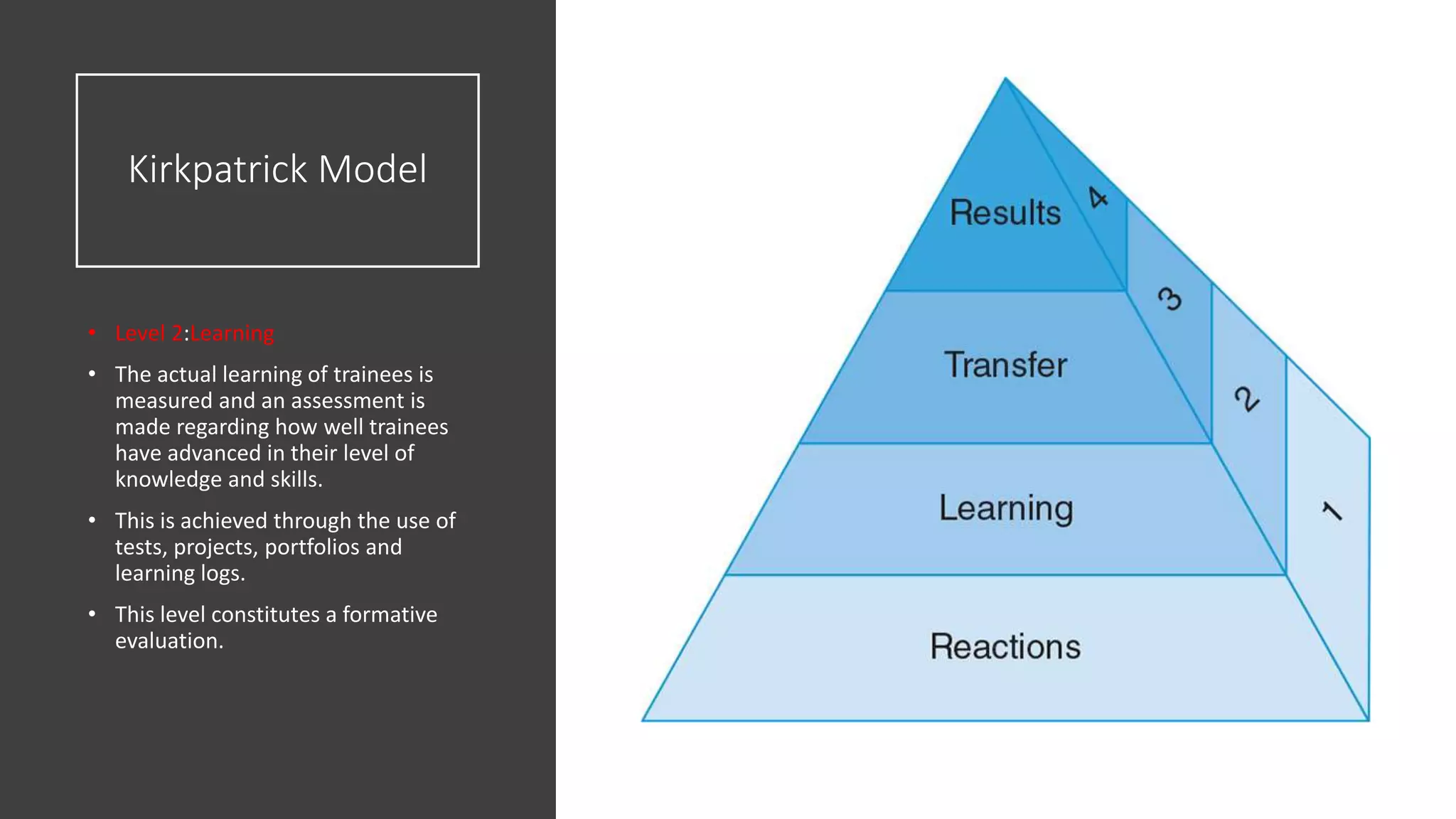

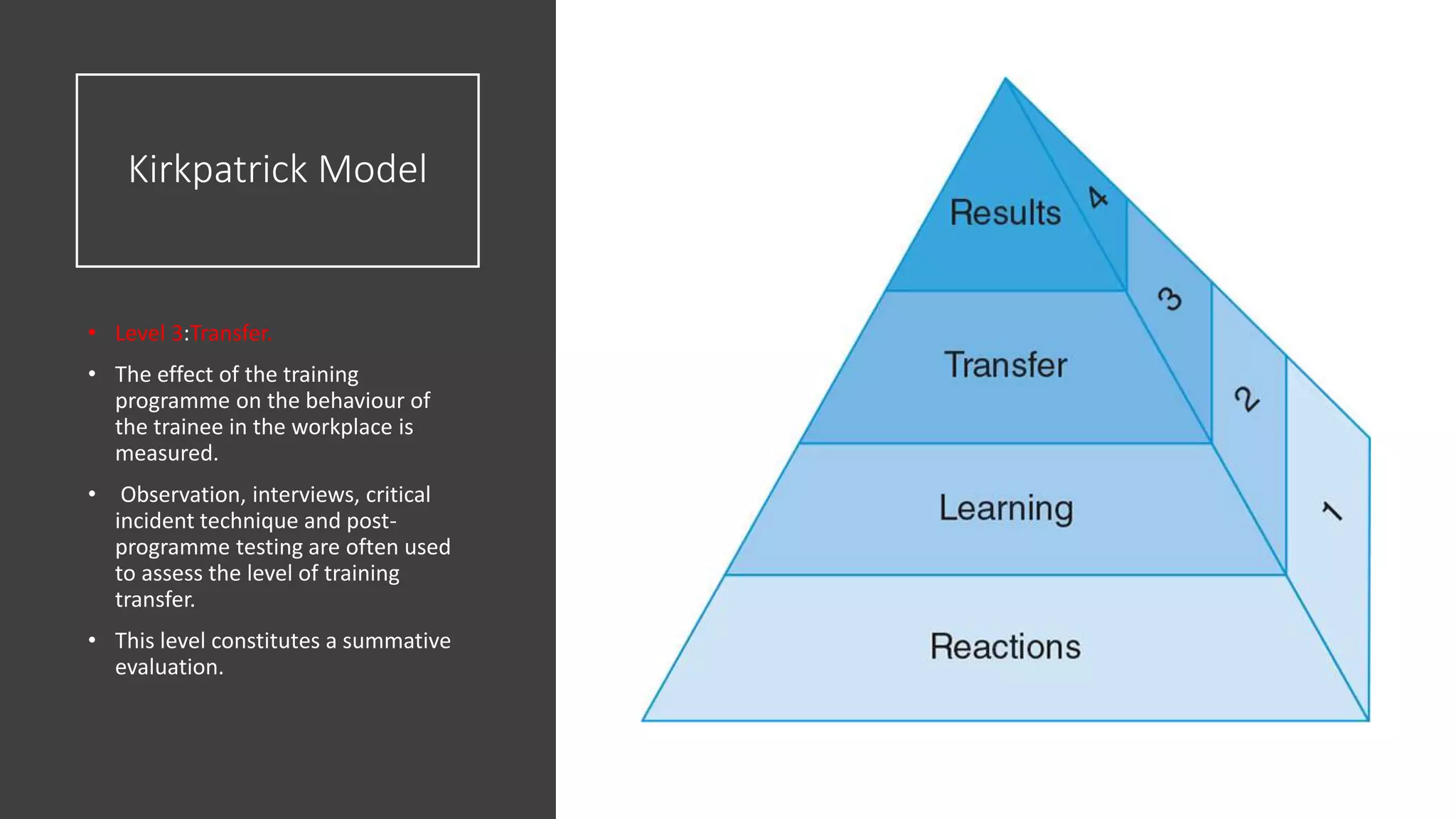

The document discusses the importance of evaluating training outcomes and the role of models such as Kirkpatrick's four-level taxonomy and the balanced scorecard. Despite significant investments in training evaluations, organizations often hesitate to evaluate because of challenges such as an unfavorable cost-benefit ratio and the difficulty of measuring training effectiveness. It advocates a more nuanced approach to evaluation, emphasizing methods that align with organizational performance and support effective learning initiatives.