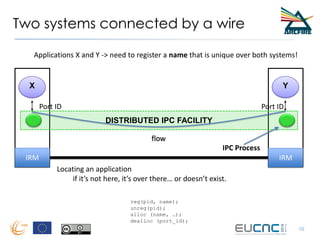

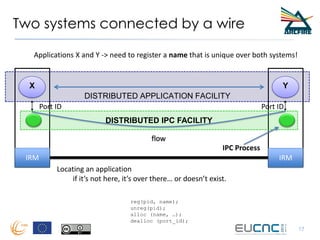

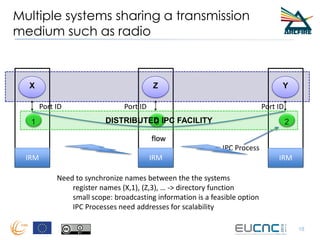

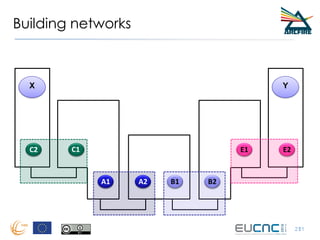

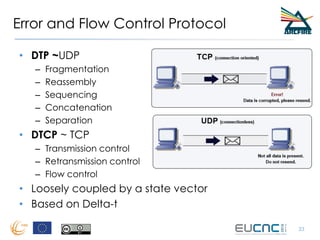

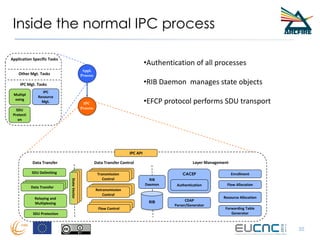

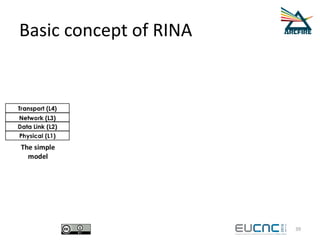

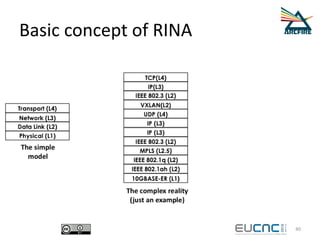

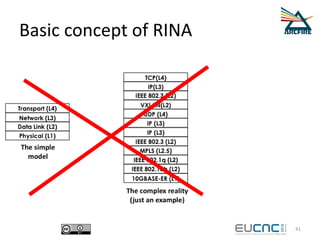

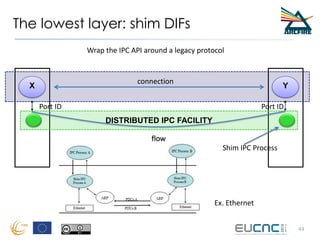

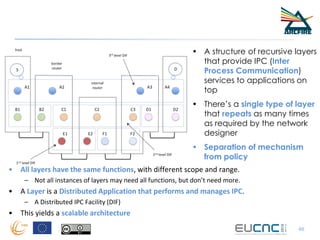

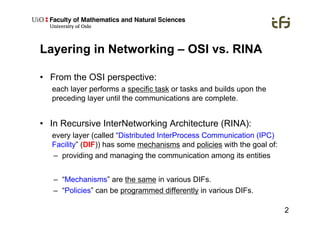

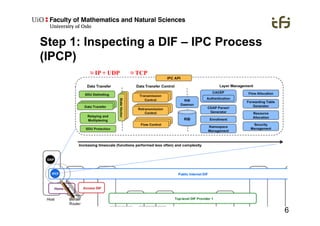

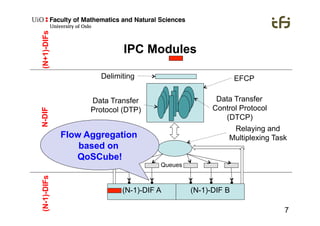

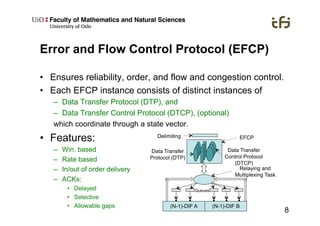

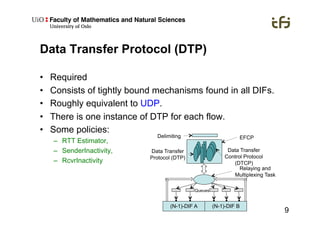

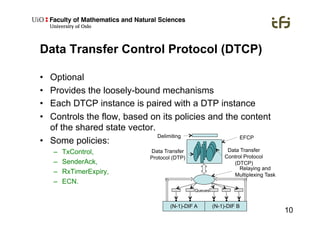

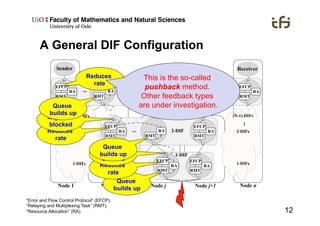

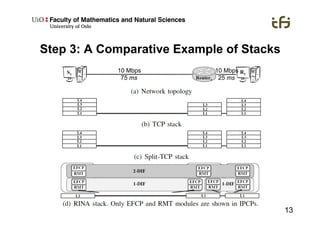

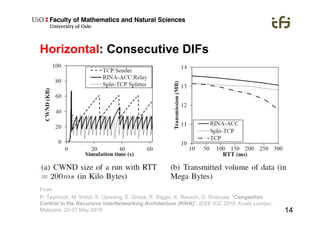

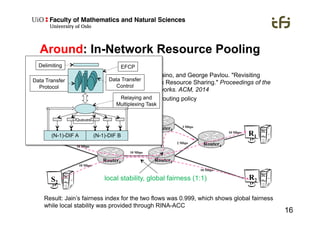

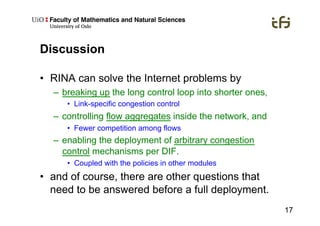

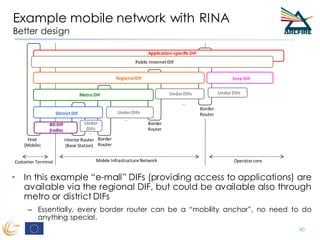

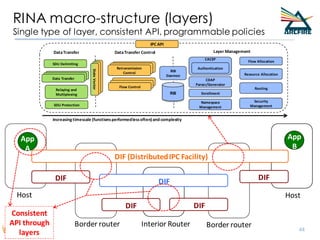

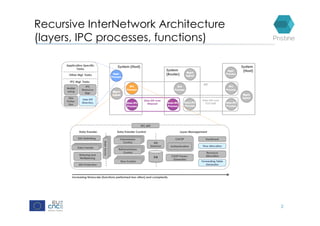

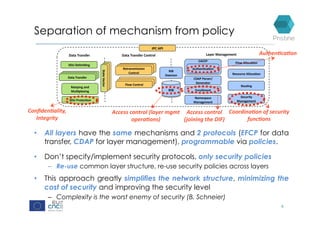

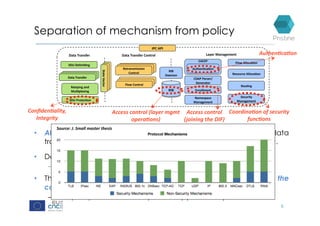

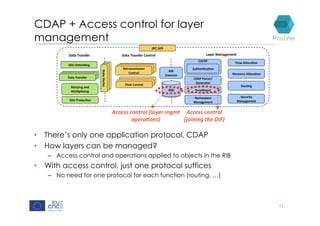

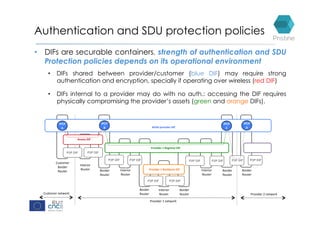

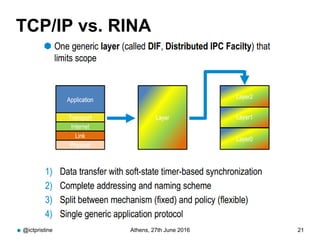

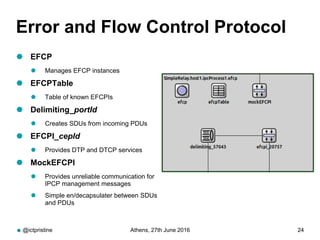

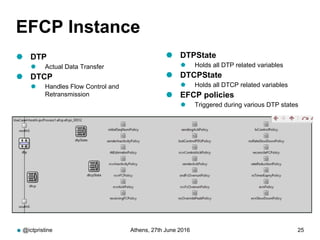

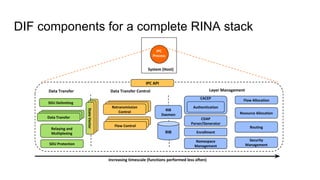

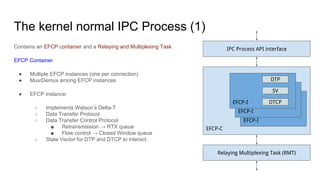

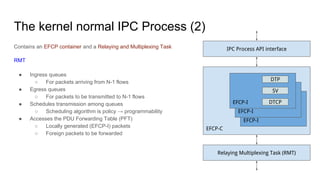

RINA provides a recursive, layered approach to networking in which each layer, called a Distributed IPC Facility (DIF), performs the same basic functions but over a different scope and with different policies. Congestion control in RINA is distributed across DIFs through the Error and Flow Control Protocol (EFCP). EFCP consists of the Data Transfer Protocol (DTP) and an optional Data Transfer Control Protocol (DTCP), which coordinate through a shared state vector to provide reliable data transfer, flow control, and congestion management. Because each DIF manages congestion only over its own scope, its control loops are shorter than TCP's single end-to-end loop, which is how RINA aims to avoid the scalability issues TCP faces in the Internet.

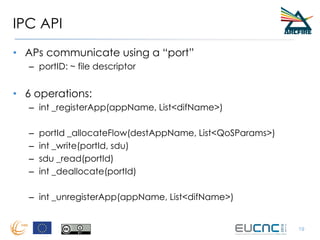

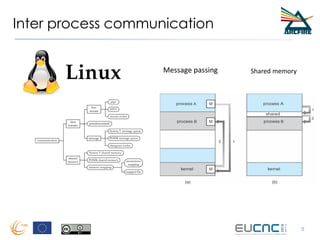

![Message Passing IPC has a simple API](https://image.slidesharecdn.com/eucnc-rina-tutorial-160704080351/85/Eucnc-rina-tutorial-9-320.jpg)

Message Passing IPC has a simple API: a pipe with write end `fd[1]` and read end `fd[0]`, used through `int pipe(pipefd, /* flags */);`, `int read(pipefd[0], <buf>, len);` and `int write(pipefd[1], <buf>, len);`.