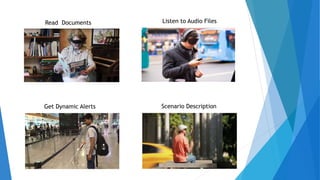

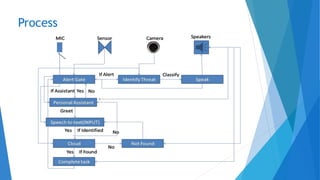

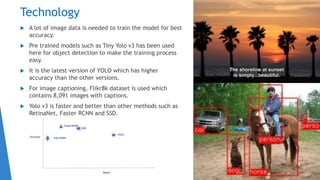

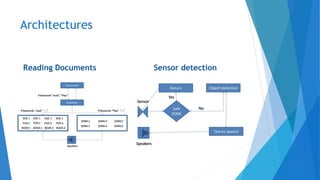

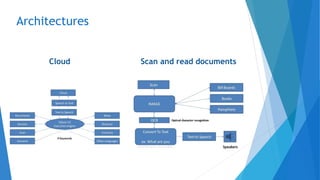

The document describes a project to develop AI-powered smart glasses that assist blind and visually impaired people in their daily lives. Users operate the glasses through voice commands to navigate and to access information. The glasses are designed to provide context-aware support, issue dynamic alerts, and read text, promoting independence for their users. Future enhancements aim to make the technology widely available, which could significantly improve communication and accessibility for people with disabilities.