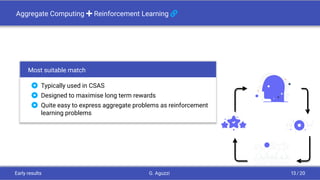

The document discusses integrating machine learning into the aggregate computing paradigm to enhance adaptability in collective self-adaptive systems. Early results indicate that reinforcement learning is particularly well suited to the challenges of this framework, offering a path towards more intelligent and robust collective behaviors. The research questions focus on how machine learning techniques can be effectively combined with aggregate computing at its different levels of abstraction.

![Background

Collective (Self-)Adaptive Systems (CSAS) [D’A+19]

Distributed and interconnected systems composed of multiple agents that can perform complex tasks, exhibiting robust collective behaviours while achieving system-wide and agent-specific goals.

Background G. Aguzzi 2 / 20](https://image.slidesharecdn.com/main-no-animation-211002122702/85/Doctoral-Symposium-ACSOS-2021-Research-directions-for-Aggregate-Computing-with-Machine-Learning-2-320.jpg)

![Aggregate Computing [BPV15]

A top-down, global-to-local approach to expressing collective behaviour

Rooted in the field calculus [Aud+18]

Collective behaviour does not depend on system scale

Used in various scenarios, ranging from smart cities to crowd engineering [Cas+19]

Problem: building-block design is complex

](https://image.slidesharecdn.com/main-no-animation-211002122702/85/Doctoral-Symposium-ACSOS-2021-Research-directions-for-Aggregate-Computing-with-Machine-Learning-3-320.jpg)

![Machine Learning

Enhance agents with learning capabilities

Learning improves adaptability, helping agents act in uncertain environments

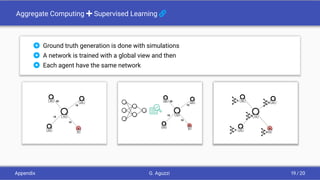

Supervised Learning [D’A+19], Reinforcement Learning [HW98; NNN20; MLF07; HKT19], and Evolutionary Computing [JMK17; PL05] are typically used in CSAS

Problem: solutions are application-specific

](https://image.slidesharecdn.com/main-no-animation-211002122702/85/Doctoral-Symposium-ACSOS-2021-Research-directions-for-Aggregate-Computing-with-Machine-Learning-4-320.jpg)

![Early results

Setting

Focus on simple but well-known problems in Aggregate Computing

Learning exploited to guide building-block improvements

Verifying which kind of approach is well suited to Aggregate Computing

Constraints

Learning problem framed as Homogeneous Team Learning [PL05]

Learning performed off-line [PMV13]

](https://image.slidesharecdn.com/main-no-animation-211002122702/85/Doctoral-Symposium-ACSOS-2021-Research-directions-for-Aggregate-Computing-with-Machine-Learning-8-320.jpg)

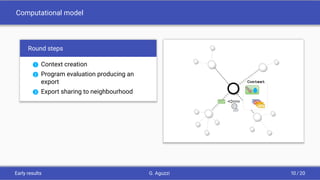

![Computational model [HLM15]

Ensemble of nodes, each with an identifier

Each node has a local view (i.e., its neighbourhood relation)

Interaction happens through message passing, executed continuously.

](https://image.slidesharecdn.com/main-no-animation-211002122702/85/Doctoral-Symposium-ACSOS-2021-Research-directions-for-Aggregate-Computing-with-Machine-Learning-9-320.jpg)
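The computational model can be illustrated with a small Python sketch. It assumes synchronous rounds, a simplification of the continuous execution described on the slide, and the names `Node`, `run_rounds`, `make_line`, and `max_id` are hypothetical. Every node runs the same program on its local view only (its identifier, its previous state, and the last message received from each neighbour), then broadcasts the result to its neighbours.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List


# Hypothetical node of the computational model: an identifier plus a local view.
@dataclass
class Node:
    uid: int
    neighbours: List[int]
    state: Any = None
    # Last message received from each neighbour (neighbour uid -> value).
    inbox: Dict[int, Any] = field(default_factory=dict)


# A program maps (own uid, previous state, neighbour messages) to a new state.
Program = Callable[[int, Any, Dict[int, Any]], Any]


def run_rounds(nodes: Dict[int, Node], program: Program, rounds: int) -> Dict[int, Any]:
    """Execute the same `program` on every node for a number of synchronous rounds."""
    for _ in range(rounds):
        # Compute phase: each node evaluates the program on its local view only.
        for node in nodes.values():
            node.state = program(node.uid, node.state, dict(node.inbox))
        # Communication phase: each node broadcasts its new state to its neighbours.
        for node in nodes.values():
            for nbr in node.neighbours:
                nodes[nbr].inbox[node.uid] = node.state
    return {uid: node.state for uid, node in nodes.items()}


def make_line(n: int) -> Dict[int, Node]:
    """Build a line topology 0 - 1 - ... - (n-1)."""
    return {i: Node(i, [j for j in (i - 1, i + 1) if 0 <= j < n]) for i in range(n)}


def max_id(uid: int, state: Any, inbox: Dict[int, Any]) -> int:
    """Example program: gossip the largest identifier seen so far."""
    return max([uid, *inbox.values()])


print(run_rounds(make_line(5), max_id, rounds=2))  # {0: 1, 1: 2, 2: 3, 3: 4, 4: 4}
print(run_rounds(make_line(5), max_id, rounds=5))  # {0: 4, 1: 4, 2: 4, 3: 4, 4: 4}
```

Because `max_id` reads nothing beyond the local view, the same function runs unchanged on a network of 5 or 5,000 nodes; only the number of rounds needed to converge grows, here with the network diameter.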

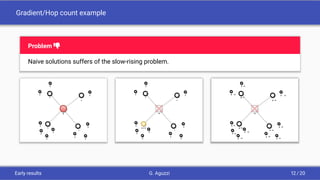

![Gradient/Hop count example [Aud+17]

Definition

A program that produces a computational field in which each node holds its distance from a source zone.

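Figure: three snapshots of a network with source S and edge distances 10, 15, 20, 15. Initially S holds 0 and every other node ∞; a neighbour of S sees the neighbour values (∞, ∞, ∞, 0) and computes out = 0 + 10; at convergence the nodes hold 0, 10, 25, 25, 30.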

](https://image.slidesharecdn.com/main-no-animation-211002122702/85/Doctoral-Symposium-ACSOS-2021-Research-directions-for-Aggregate-Computing-with-Machine-Learning-11-320.jpg)
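The gradient definition can be sketched in Python as a fixed-point iteration: sources hold 0, and every other node repeatedly takes the minimum, over its neighbours, of the neighbour's value plus the edge distance. This is the `out = 0 + 10` step in the figure. The star topology used below (an edge S-A of distance 10, and A linked to B, C, D with distances 15, 15, 20) is an assumption: it is one layout consistent with the figure's edge weights and final values, not necessarily the one drawn on the slide. The names `build_graph`, `gradient`, and `hop_count` are hypothetical, and synchronous rounds are again a simplification.

```python
import math
from typing import Dict, Hashable, Iterable, Set, Tuple

# Symmetric adjacency map: node -> {neighbour: edge distance}.
Graph = Dict[Hashable, Dict[Hashable, float]]


def build_graph(edges: Iterable[Tuple[Hashable, Hashable, float]]) -> Graph:
    """Build an undirected weighted graph from (a, b, distance) triples."""
    graph: Graph = {}
    for a, b, d in edges:
        graph.setdefault(a, {})[b] = d
        graph.setdefault(b, {})[a] = d
    return graph


def gradient(graph: Graph, sources: Set[Hashable], max_rounds: int = 1000) -> Dict[Hashable, float]:
    """Distance from the nearest source, computed in synchronous rounds."""
    field = {n: (0.0 if n in sources else math.inf) for n in graph}
    for _ in range(max_rounds):
        new_field = {
            # Sources stay at 0; others take min(neighbour value + edge distance).
            n: 0.0 if n in sources
            else min((field[m] + d for m, d in nbrs.items()), default=math.inf)
            for n, nbrs in graph.items()
        }
        if new_field == field:  # fixed point reached: the field has stabilised
            break
        field = new_field
    return field


def hop_count(edges: Iterable[Tuple[Hashable, Hashable]], sources: Set[Hashable]) -> Dict[Hashable, float]:
    """Hop count: a gradient in which every edge has distance 1."""
    return gradient(build_graph((a, b, 1) for a, b in edges), sources)


star = build_graph([("S", "A", 10), ("A", "B", 15), ("A", "C", 15), ("A", "D", 20)])
print(gradient(star, {"S"}))  # {'S': 0.0, 'A': 10.0, 'B': 25.0, 'C': 25.0, 'D': 30.0}
print(hop_count([(0, 1), (1, 2), (2, 3)], {0}))  # {0: 0.0, 1: 1.0, 2: 2.0, 3: 3.0}
```

One limitation worth keeping in mind: if a source disappears, this naive rule lets values climb only slowly, a round at a time, which is the kind of behaviour that self-healing gradient designs [Aud+17] aim to improve.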

![Aggregate Computing + Reinforcement Learning: Hop count

Independent Q-Learning

Actions: Increase / NoOp

State: temporal windows of speed

Reward: 0 / -1

Figure: from the state [0, 1, 1, 1], output values Increase = 1 and NoOp = 0; rewards 0 and -1.

](https://image.slidesharecdn.com/main-no-animation-211002122702/85/Doctoral-Symposium-ACSOS-2021-Research-directions-for-Aggregate-Computing-with-Machine-Learning-14-320.jpg)
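The learning set-up on this slide can be sketched as tabular Q-learning in Python. In line with the Homogeneous Team Learning constraint, a single table is shared by all nodes, each of which updates it from its own local experience. The state encoding (1 if the node's output changed between consecutive rounds, 0 otherwise, over a fixed window), the reward (0 when the output matches the correct hop count, -1 otherwise), and all names (`SharedQTable`, `speed_window`, `reward`) are assumptions for illustration, not necessarily the exact formulation used in this work.

```python
import random
from collections import defaultdict
from typing import Hashable, Sequence, Tuple

ACTIONS = ("Increase", "NoOp")


class SharedQTable:
    """Tabular Q-learning; one instance is shared by every node (homogeneous team)."""

    def __init__(self, alpha=0.1, gamma=0.9, epsilon=0.1, seed=None):
        self.alpha, self.gamma, self.epsilon = alpha, gamma, epsilon
        self.q = defaultdict(float)  # (state, action) -> estimated value, 0.0 by default
        self.rng = random.Random(seed)

    def best_action(self, state: Hashable) -> str:
        """Greedy action; ties resolve to the first action in ACTIONS."""
        return max(ACTIONS, key=lambda a: self.q[(state, a)])

    def choose(self, state: Hashable) -> str:
        """Epsilon-greedy selection, as used during (offline) training."""
        if self.rng.random() < self.epsilon:
            return self.rng.choice(ACTIONS)
        return self.best_action(state)

    def update(self, state, action, reward, next_state) -> None:
        """Q(s,a) += alpha * (r + gamma * max_a' Q(s',a') - Q(s,a))."""
        target = reward + self.gamma * max(self.q[(next_state, a)] for a in ACTIONS)
        self.q[(state, action)] += self.alpha * (target - self.q[(state, action)])


def speed_window(history: Sequence[int], size: int) -> Tuple[int, ...]:
    """Hypothetical state: 1 where the output changed between consecutive rounds."""
    recent = history[-(size + 1):]
    return tuple(int(b != a) for a, b in zip(recent, recent[1:]))


def reward(output: int, expected: int) -> int:
    """Hypothetical reward: 0 for a correct hop count, -1 otherwise."""
    return 0 if output == expected else -1


table = SharedQTable(alpha=0.5, gamma=0.9, epsilon=0.0)
s = speed_window([3, 3, 4, 5, 6], size=4)       # (0, 1, 1, 1)
s_next = speed_window([3, 4, 5, 6, 7], size=4)  # (1, 1, 1, 1)
table.update(s, "Increase", reward(7, 6), s_next)
print(table.q[(s, "Increase")], table.best_action(s))  # -0.5 NoOp
```

Sharing one table is what makes the team homogeneous: every node executes the same learned policy, which matches aggregate computing's single-program view. With learning performed offline, the table would be trained in simulation and then used greedily (epsilon = 0) at run time.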

![References I

[D’A+19] Mirko D’Angelo et al. “On learning in collective self-adaptive systems: State of practice and a 3D framework”. ICSE Workshop on Software Engineering for Adaptive and Self-Managing Systems 2019-May (2019), pp. 13–24. ISSN: 2156-7891. DOI: 10.1109/SEAMS.2019.00012.

[Aud+18] Giorgio Audrito et al. “Space-Time Universality of Field Calculus”. Ed. by Giovanna Di Marzo Serugendo and Michele Loreti. Vol. 10852. Lecture Notes in Computer Science. Springer, 2018, pp. 1–20. DOI: 10.1007/978-3-319-92408-3_1.

[Cas+19] Roberto Casadei et al. “Self-organising Coordination Regions: A Pattern for Edge Computing”. Ed. by Hanne Riis Nielson and Emilio Tuosto. Vol. 11533. Lecture Notes in Computer Science. Springer, 2019, pp. 182–199. DOI: 10.1007/978-3-030-22397-7_11.

[BPV15] Jacob Beal, Danilo Pianini, and Mirko Viroli. “Aggregate Programming for the Internet of Things”. Computer 48.9 (2015), pp. 22–30. DOI: 10.1109/MC.2015.261.

[HW98] Junling Hu and Michael P. Wellman. “Multiagent Reinforcement Learning: Theoretical Framework and an Algorithm”. Ed. by Jude W. Shavlik. Morgan Kaufmann, 1998, pp. 242–250.

](https://image.slidesharecdn.com/main-no-animation-211002122702/85/Doctoral-Symposium-ACSOS-2021-Research-directions-for-Aggregate-Computing-with-Machine-Learning-16-320.jpg)

![References II

[NNN20] Thanh Thi Nguyen, Ngoc Duy Nguyen, and Saeid Nahavandi. “Deep Reinforcement Learning for Multiagent Systems: A Review of Challenges, Solutions, and Applications”. IEEE Trans. Cybern. 50.9 (2020), pp. 3826–3839. DOI: 10.1109/TCYB.2020.2977374.

[MLF07] Laëtitia Matignon, Guillaume J. Laurent, and Nadine Le Fort-Piat. “Hysteretic Q-learning: an algorithm for decentralized reinforcement learning in cooperative multi-agent teams”. IEEE, 2007, pp. 64–69. DOI: 10.1109/IROS.2007.4399095.

[HKT19] Pablo Hernandez-Leal, Bilal Kartal, and Matthew E. Taylor. “A survey and critique of multiagent deep reinforcement learning”. Auton. Agents Multi Agent Syst. 33.6 (2019), pp. 750–797. DOI: 10.1007/s10458-019-09421-1.

[JMK17] Junchen Jin, Xiaoliang Ma, and Iisakki Kosonen. “A stochastic optimization framework for road traffic controls based on evolutionary algorithms and traffic simulation”. Adv. Eng. Softw. 114 (2017), pp. 348–360. DOI: 10.1016/j.advengsoft.2017.08.005.

[PL05] Liviu Panait and Sean Luke. “Cooperative Multi-Agent Learning: The State of the Art”. Auton. Agents Multi Agent Syst. 11.3 (2005), pp. 387–434. DOI: 10.1007/s10458-005-2631-2.

](https://image.slidesharecdn.com/main-no-animation-211002122702/85/Doctoral-Symposium-ACSOS-2021-Research-directions-for-Aggregate-Computing-with-Machine-Learning-17-320.jpg)

![References III

[PMV13] Danilo Pianini, Sara Montagna, and Mirko Viroli. “Chemical-oriented Simulation of Computational Systems with Alchemist”. Journal of Simulation (2013). ISSN: 1747-7778. DOI: 10.1057/jos.2012.27.

[HLM15] Jianye Hao, Ho-fung Leung, and Zhong Ming. “Multiagent Reinforcement Social Learning toward Coordination in Cooperative Multiagent Systems”. ACM Trans. Auton. Adapt. Syst. 9.4 (2015), 20:1–20:20. DOI: 10.1145/2644819.

[Aud+17] Giorgio Audrito et al. “Compositional Blocks for Optimal Self-Healing Gradients”. IEEE Computer Society, 2017, pp. 91–100. DOI: 10.1109/SASO.2017.18.

](https://image.slidesharecdn.com/main-no-animation-211002122702/85/Doctoral-Symposium-ACSOS-2021-Research-directions-for-Aggregate-Computing-with-Machine-Learning-18-320.jpg)