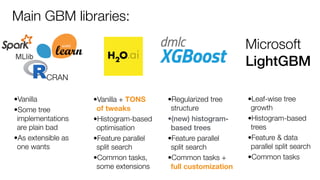

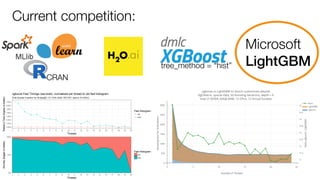

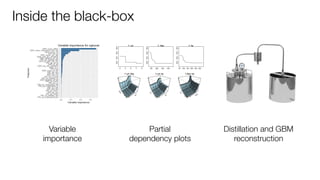

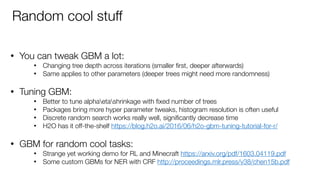

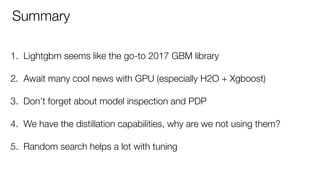

This document surveys gradient boosting techniques as of May 2017, with a focus on Microsoft's LightGBM. It covers LightGBM's features and tuning strategies, and stresses model inspection through variable importance and partial dependence plots. It also notes that GPU implementations of gradient boosting were still under development, and emphasizes careful tuning and model evaluation.
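
As an illustration of the partial dependence idea mentioned above, here is a minimal sketch in plain Python. It is not taken from the slides and does not use LightGBM's API: `partial_dependence` and `toy_model` are hypothetical names, and `toy_model` is only a stand-in for a fitted booster's prediction function. The sketch fixes one feature at each grid value in turn, leaves the other features as observed, and averages the predictions over all rows.

```python
from typing import Callable, List, Sequence


def partial_dependence(
    predict: Callable[[Sequence[float]], float],
    rows: Sequence[Sequence[float]],
    feature: int,
    grid: Sequence[float],
) -> List[float]:
    """Average prediction over all rows with one feature forced to each grid value."""
    curve = []
    for value in grid:
        total = 0.0
        for row in rows:
            modified = list(row)       # copy so the input data is not mutated
            modified[feature] = value  # overwrite only the feature of interest
            total += predict(modified)
        curve.append(total / len(rows))
    return curve


def toy_model(row: Sequence[float]) -> float:
    # Hypothetical stand-in for a trained model: linear in feature 0,
    # quadratic in feature 1.
    return 2.0 * row[0] + row[1] * row[1]


rows = [[0.0, 1.0], [1.0, 2.0], [2.0, 3.0]]
pd_curve = partial_dependence(toy_model, rows, feature=0, grid=[0.0, 1.0, 2.0])
print(pd_curve)
```

Because the toy model is linear in feature 0, the resulting curve rises by a constant amount per grid step. With a real boosted model, any callable that maps one row to one prediction could be passed in place of `toy_model`, and the curve would show the model's average response to that feature.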