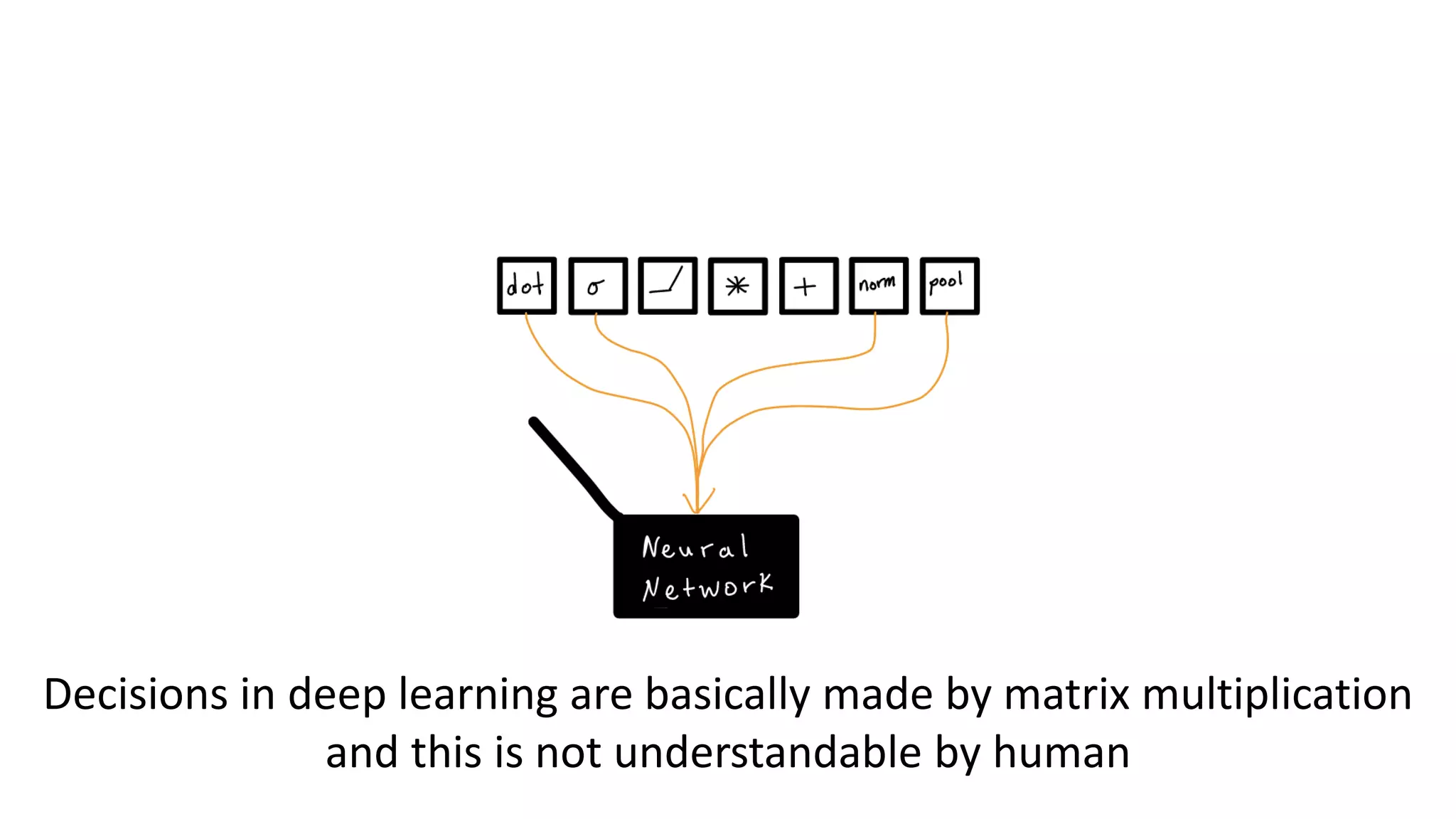

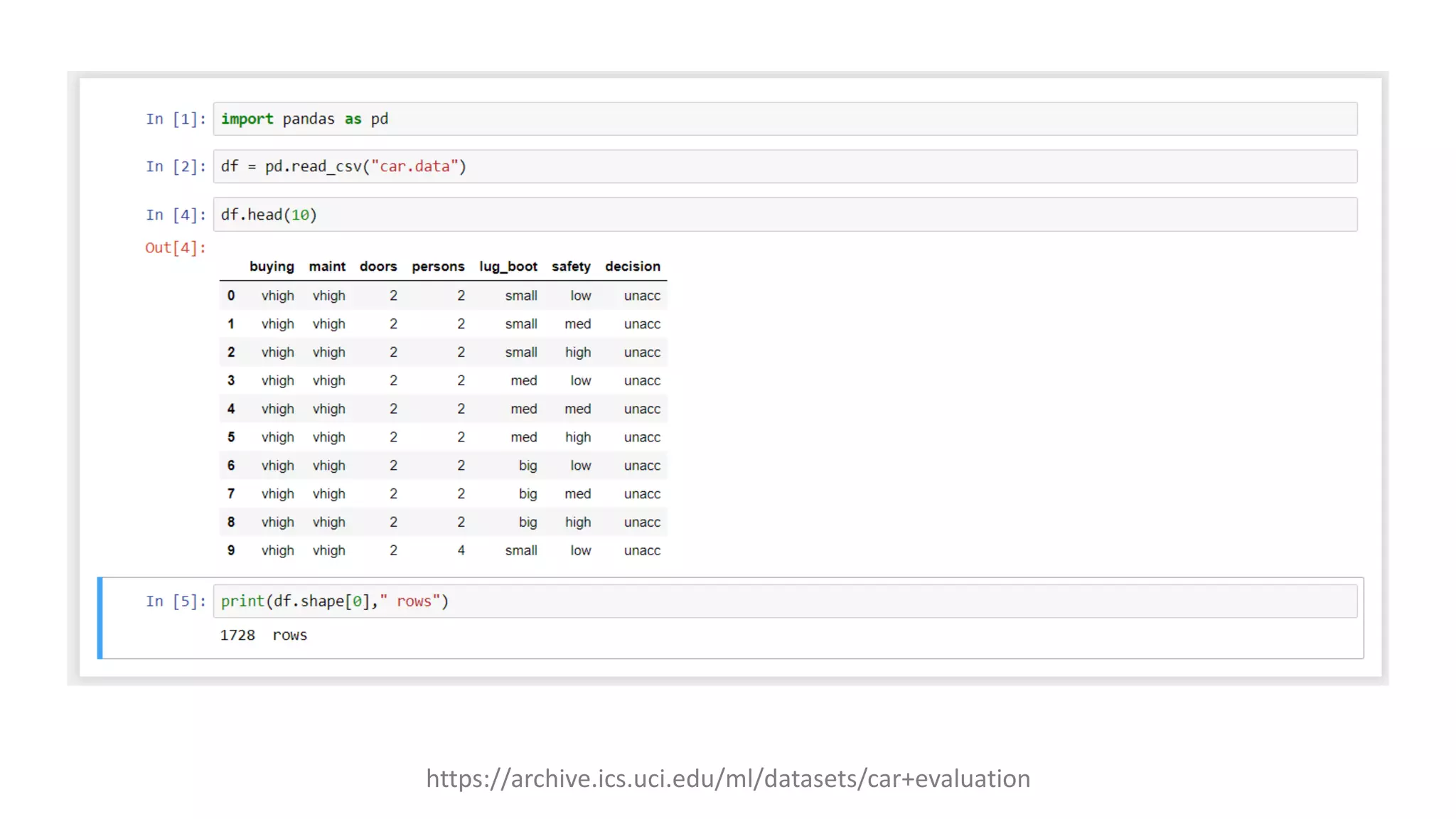

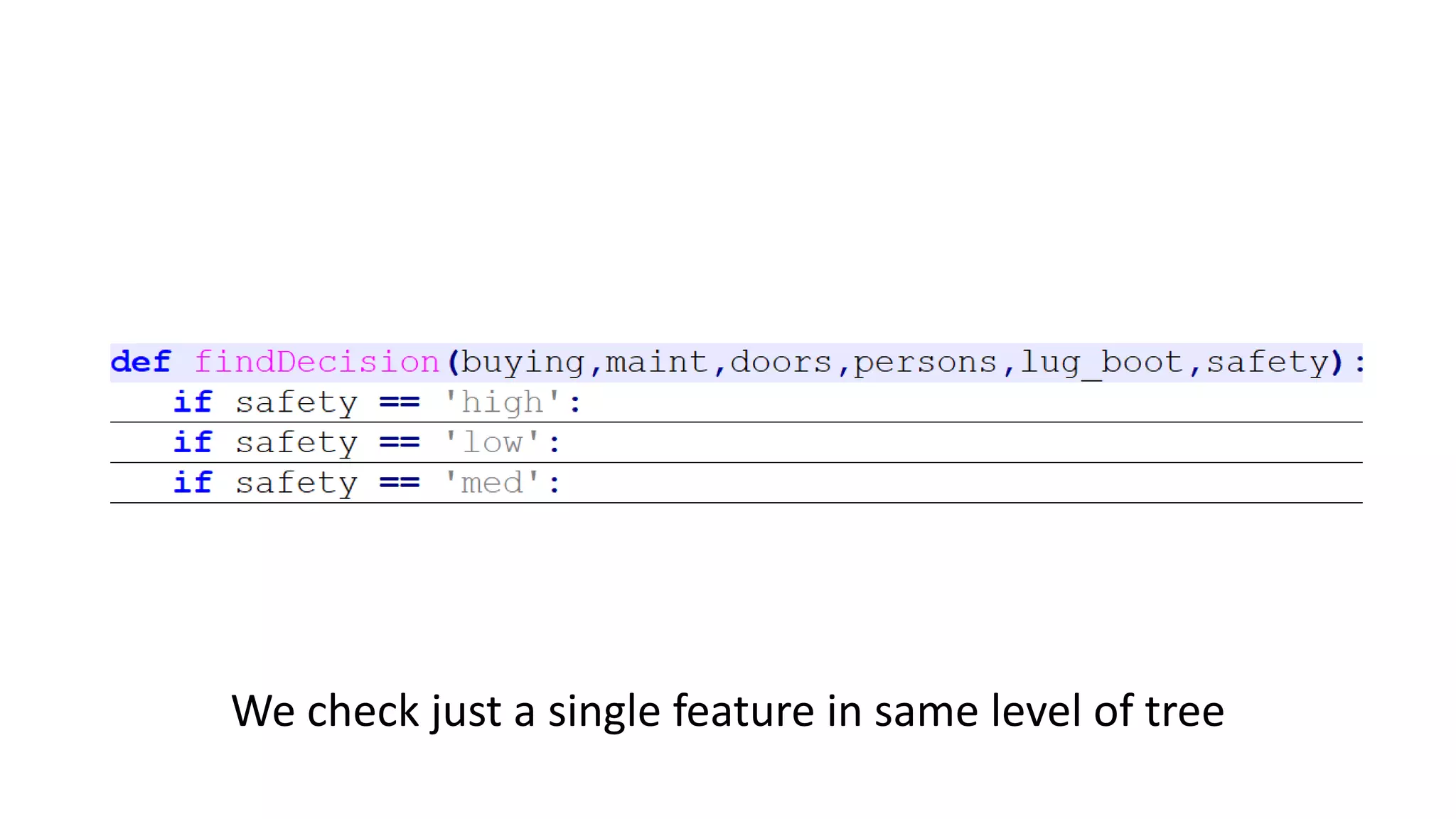

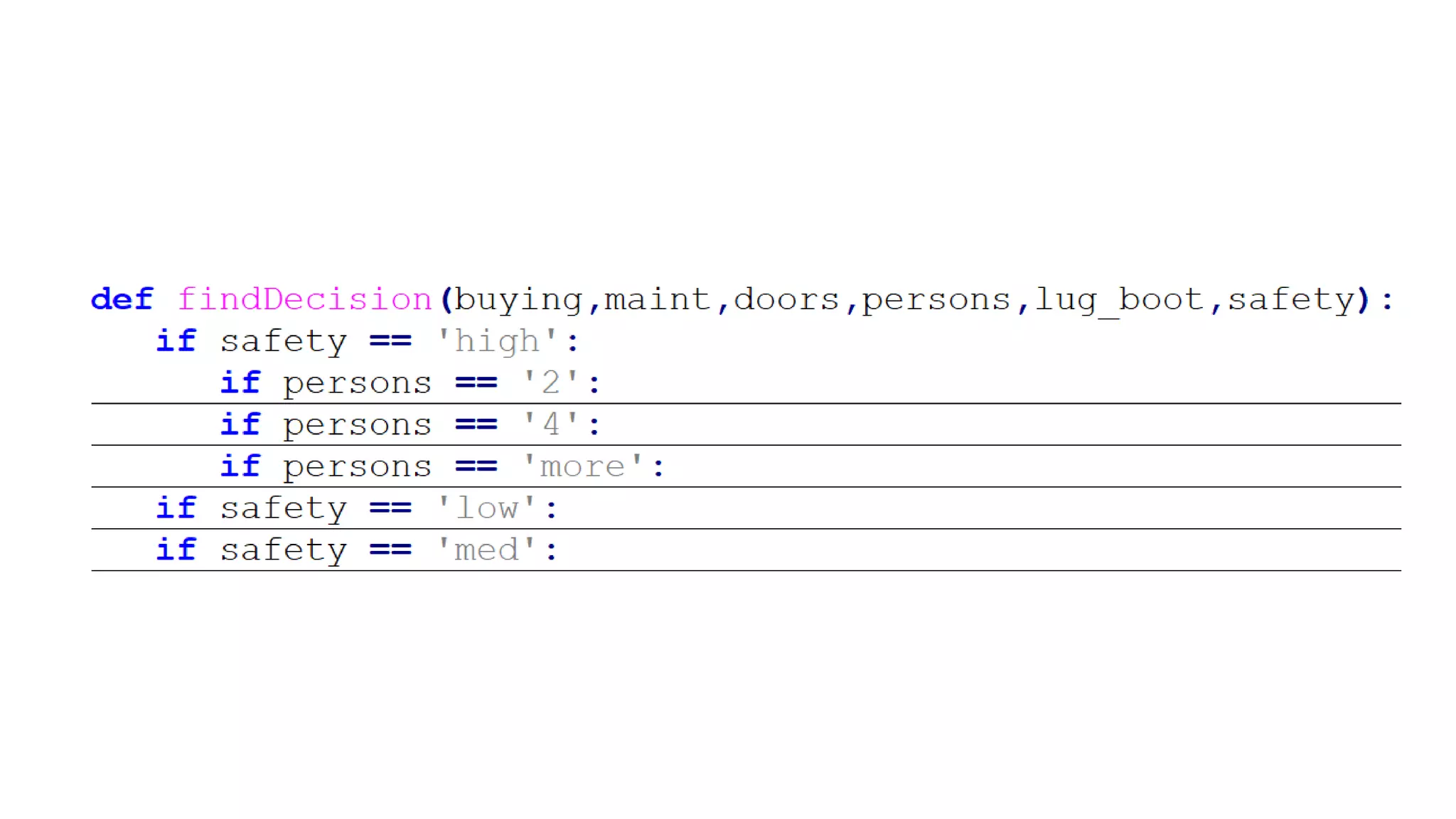

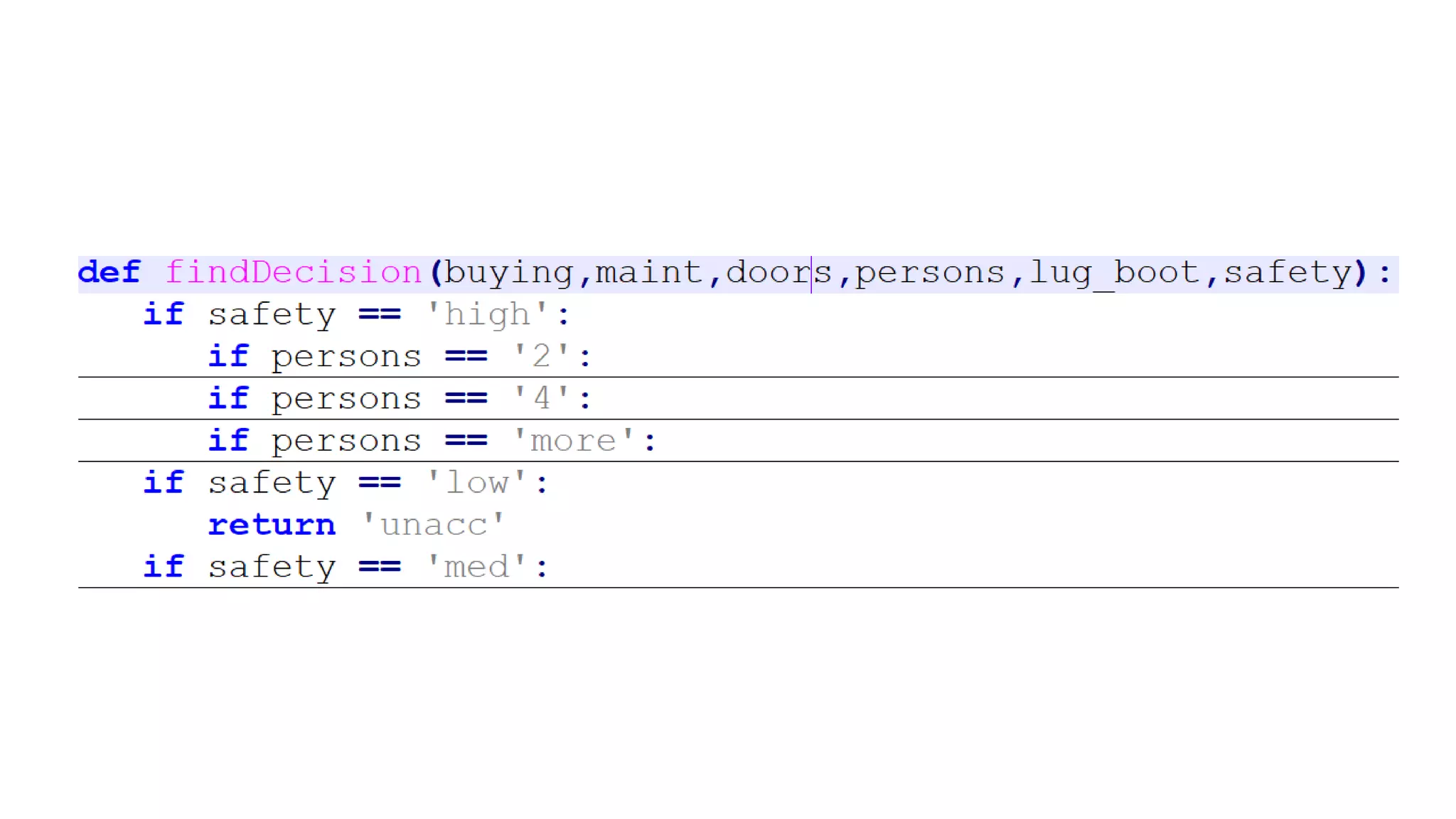

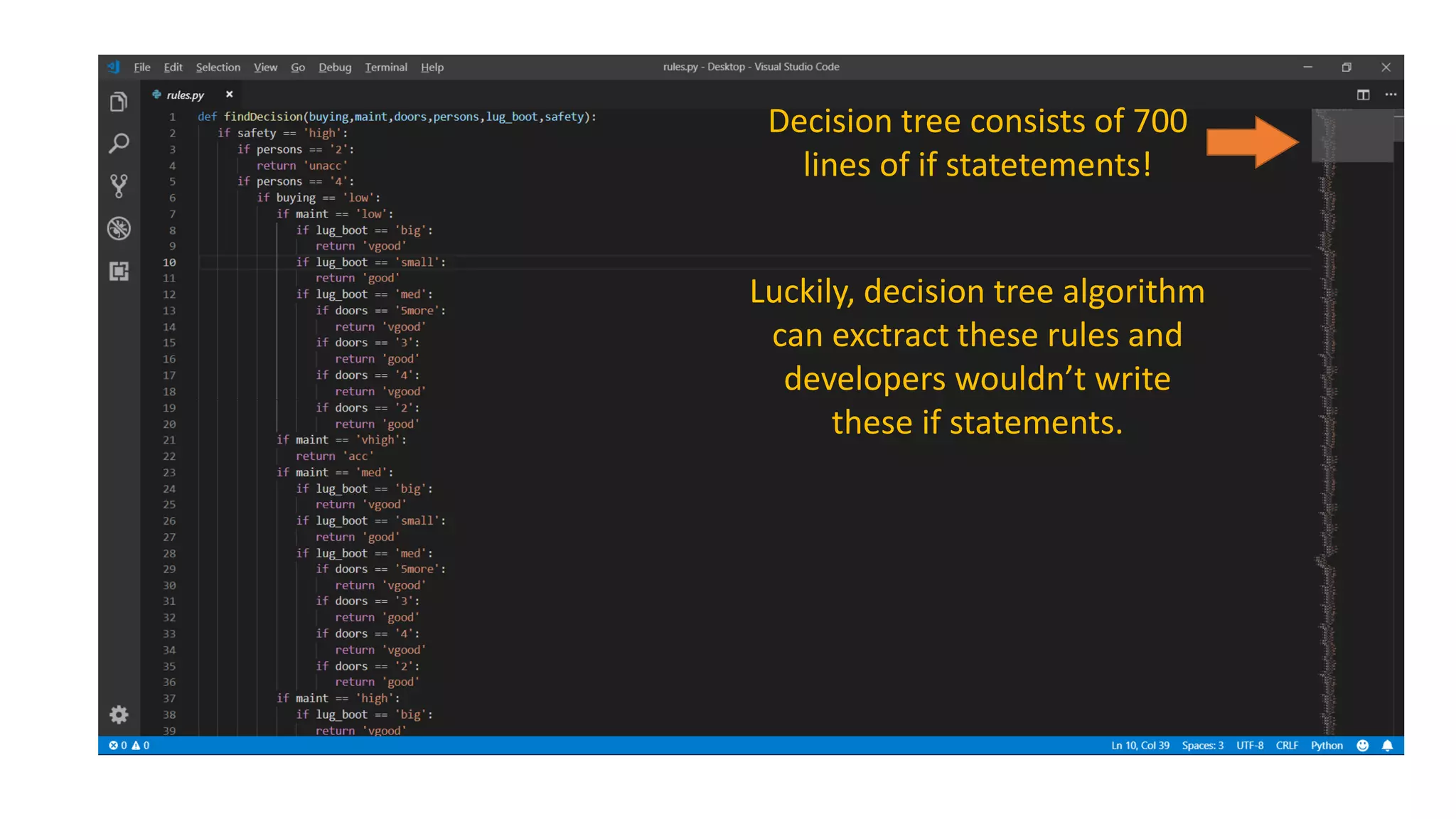

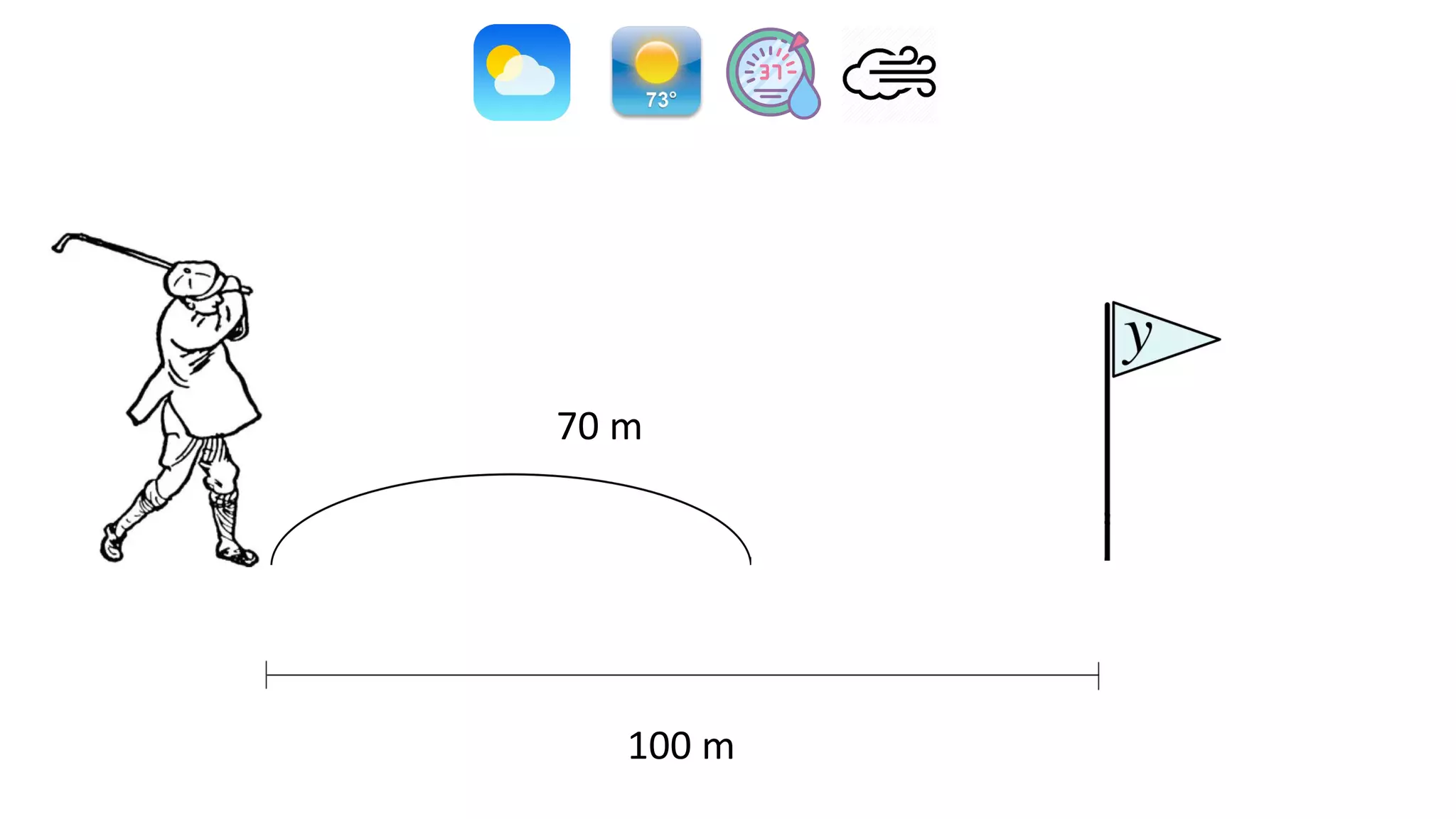

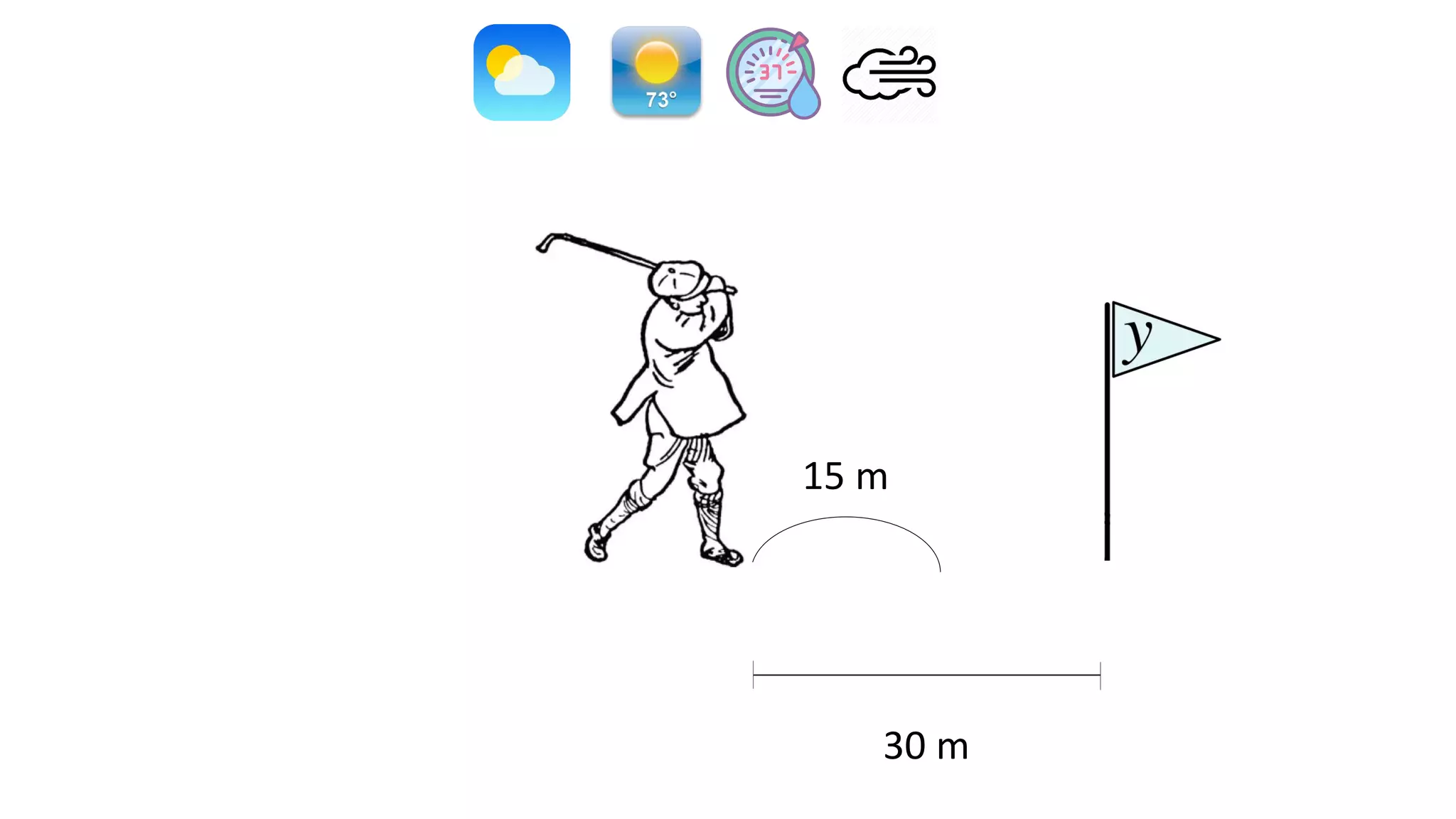

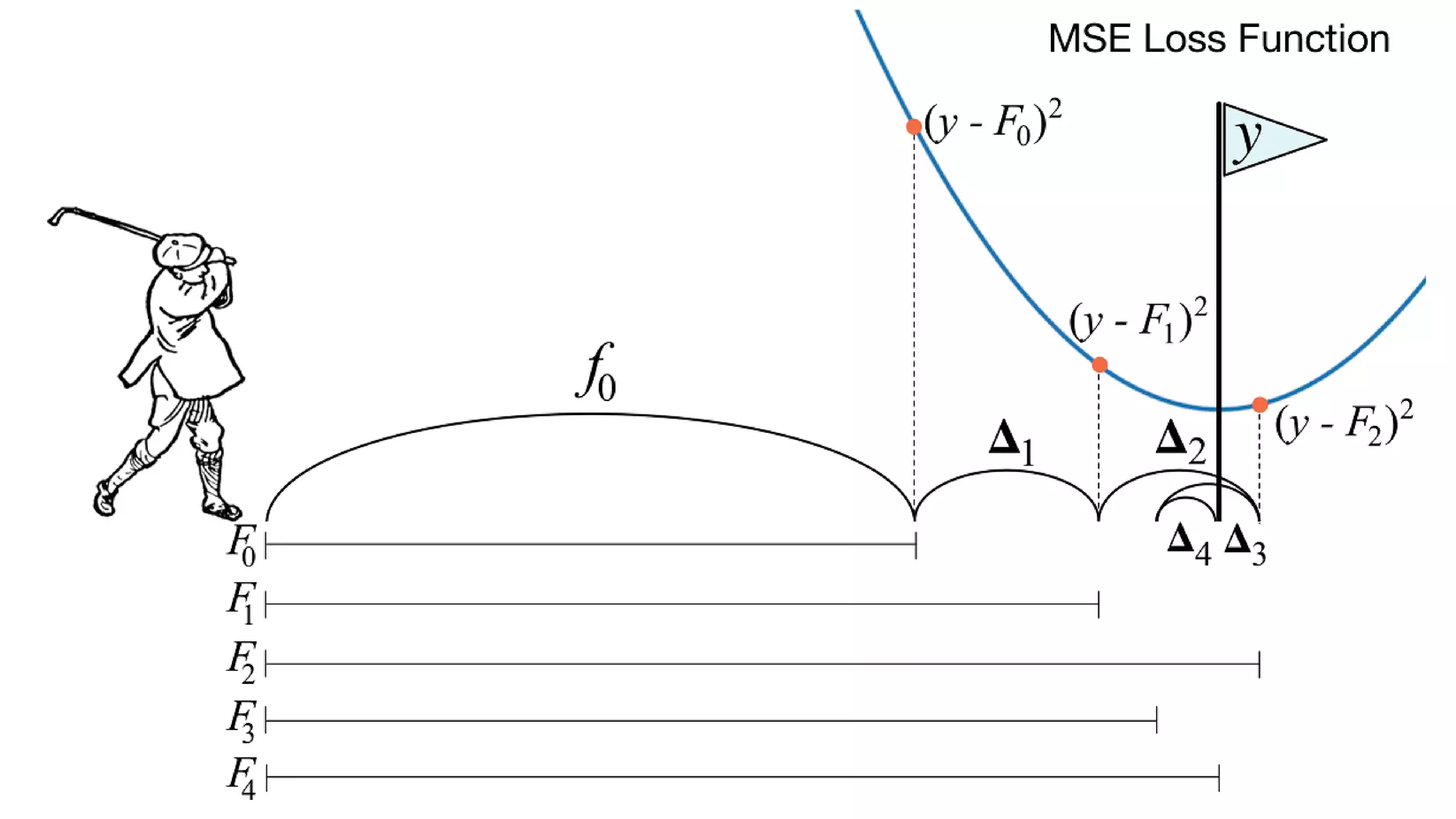

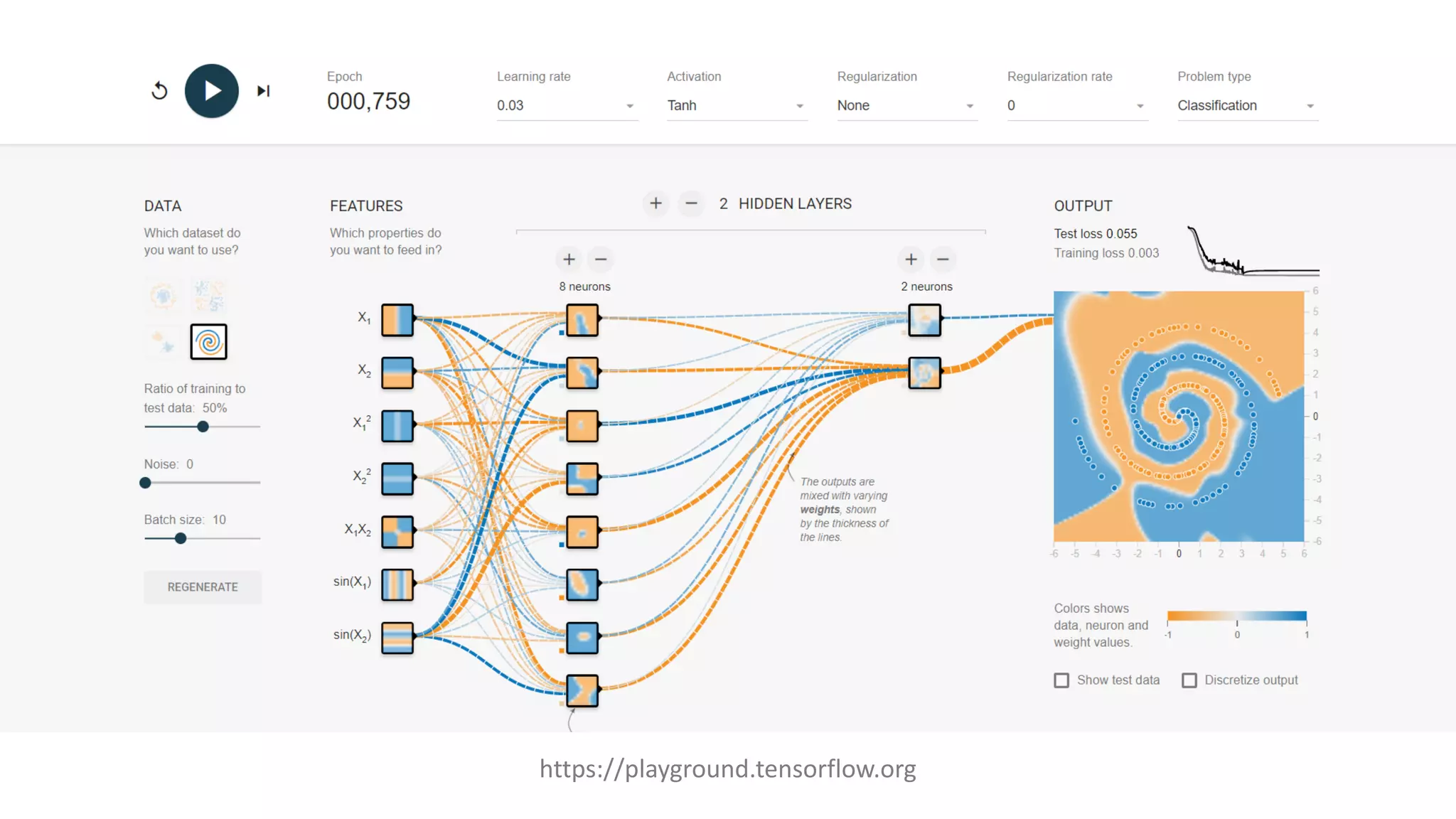

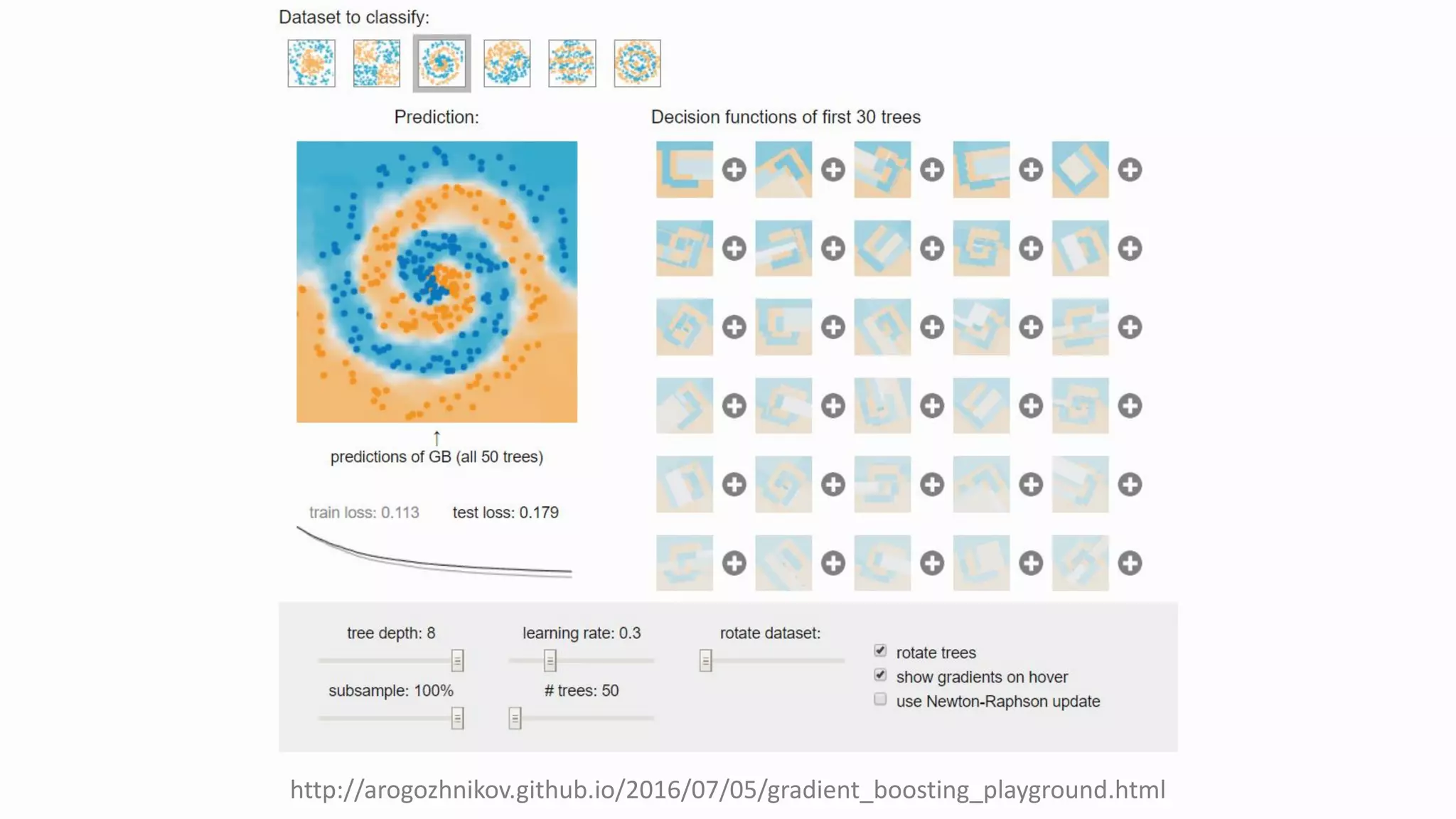

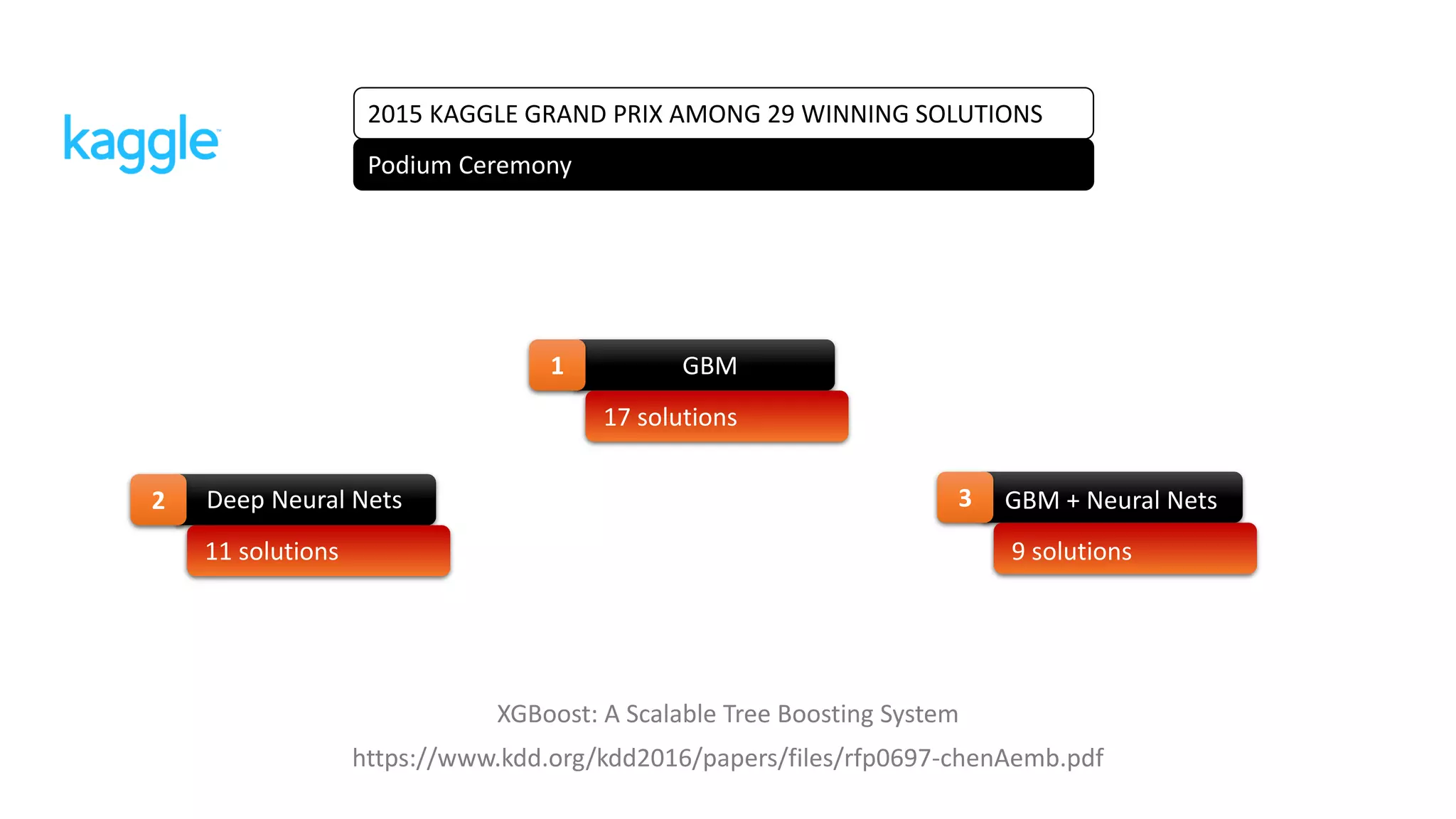

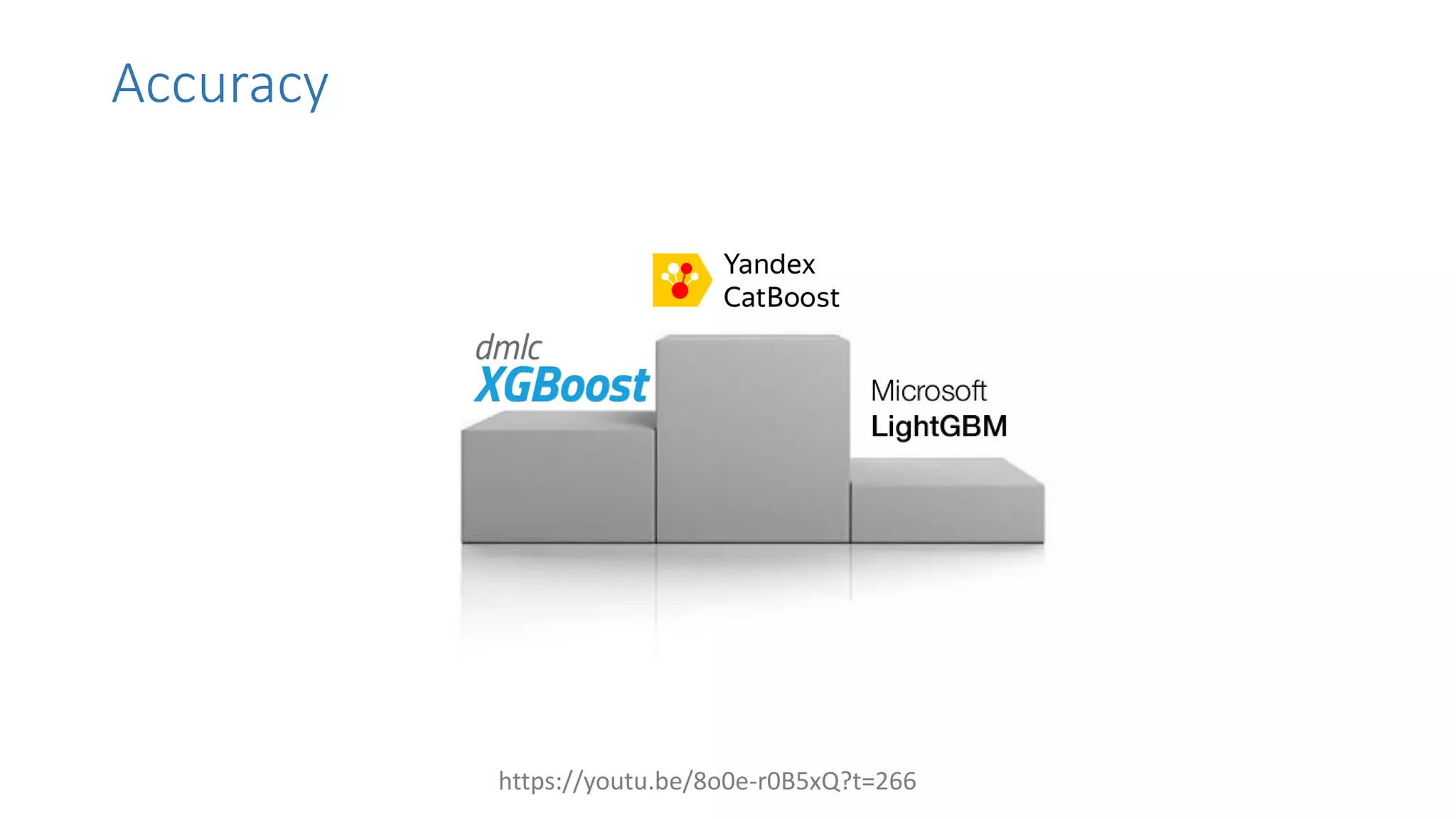

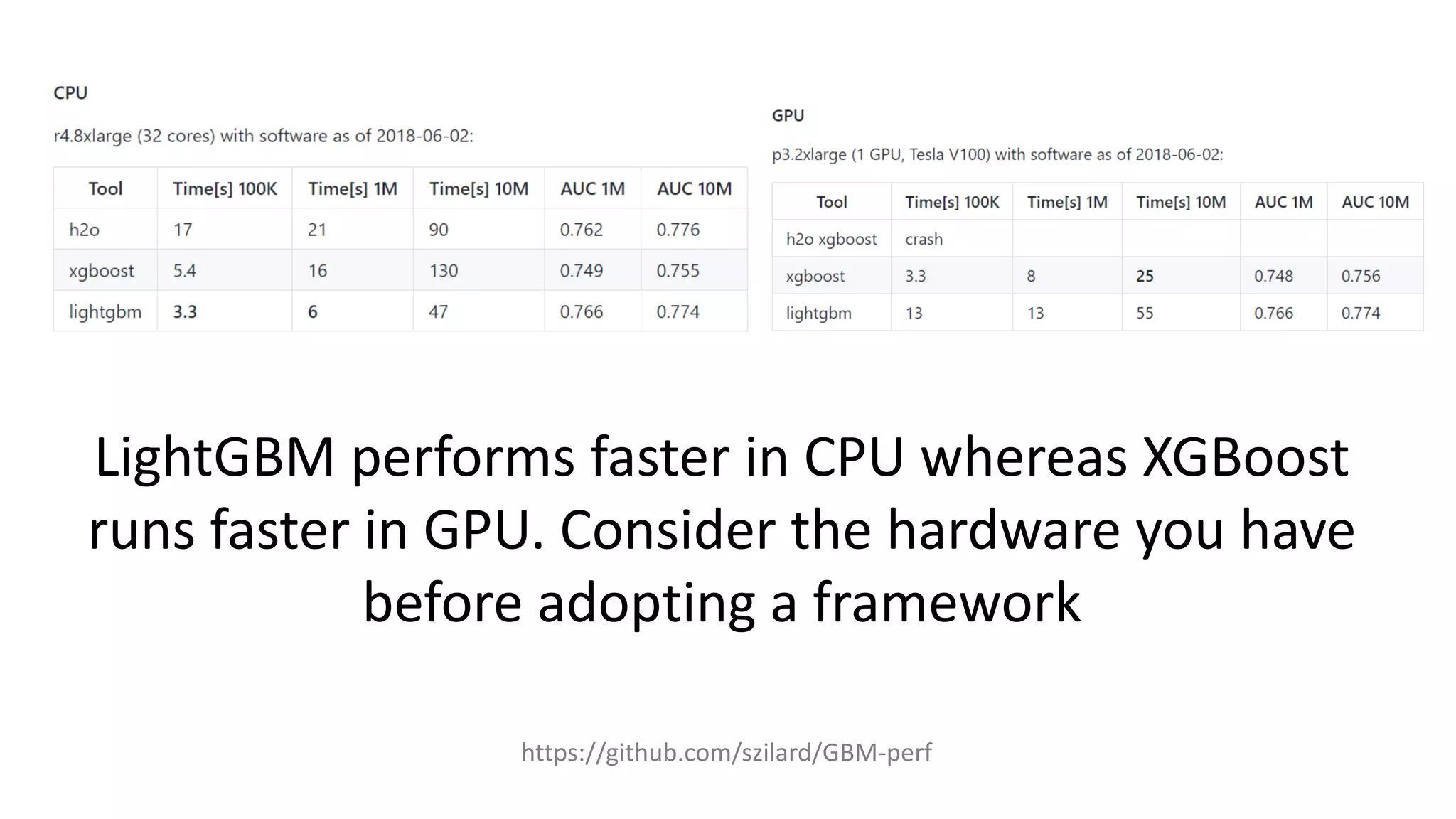

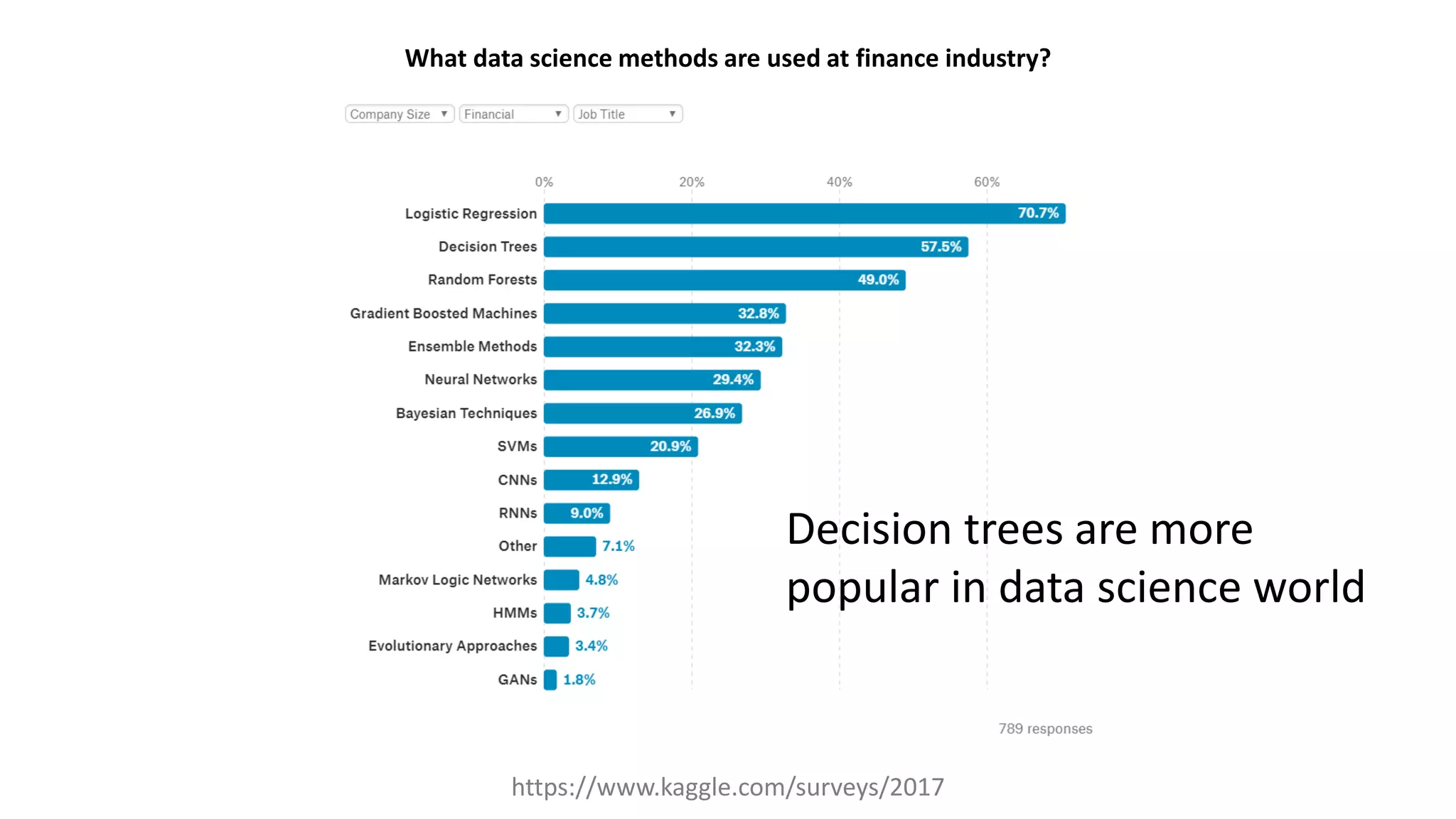

This document compares and contrasts gradient boosting machines (GBMs) and deep learning. It notes that although deep learning can handle complex tasks such as facial recognition, the reasoning behind its decisions is difficult for humans to interpret. GBMs, in particular gradient boosting decision trees (GBDTs), address this by building models from decision trees whose split rules can be traced to explain an individual prediction. The document also discusses popular GBM frameworks such as XGBoost and LightGBM, and notes that although GBMs are widely used in data science and have won many Kaggle competitions, some experts still prefer neural networks.
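To illustrate the traceability point, here is a minimal from-scratch sketch of gradient boosting, assuming squared-error regression and depth-1 trees ("stumps"). The names `Stump`, `fit_stump` and `GradientBoostedStumps` are hypothetical and are not the XGBoost or LightGBM API. Real GBDT libraries use deeper trees, regularization and faster split finding. Each round fits a stump to the current residuals (the negative gradient of squared loss), so every prediction decomposes into a base value plus a list of readable threshold rules and their contributions.

```python
# Hypothetical minimal sketch of gradient boosting with regression stumps;
# not the XGBoost/LightGBM API. Assumes squared-error loss.
from dataclasses import dataclass
from typing import List, Sequence, Tuple


@dataclass
class Stump:
    """A depth-1 regression tree: one threshold rule on one feature."""
    feature: int
    threshold: float
    left_value: float   # output when x[feature] <= threshold
    right_value: float  # output when x[feature] > threshold

    def predict(self, x: Sequence[float]) -> float:
        return self.left_value if x[self.feature] <= self.threshold else self.right_value

    def rule(self) -> str:
        return (f"if x[{self.feature}] <= {self.threshold:g} "
                f"then {self.left_value:+.3f} else {self.right_value:+.3f}")


def fit_stump(X: List[List[float]], residuals: List[float]) -> Stump:
    """Exhaustively pick the split that minimizes squared error on the residuals."""
    best = None
    for j in range(len(X[0])):
        for t in sorted(set(row[j] for row in X)):
            left = [r for row, r in zip(X, residuals) if row[j] <= t]
            right = [r for row, r in zip(X, residuals) if row[j] > t]
            if not left or not right:
                continue
            lv, rv = sum(left) / len(left), sum(right) / len(right)
            sse = sum((r - lv) ** 2 for r in left) + sum((r - rv) ** 2 for r in right)
            if best is None or sse < best[0]:
                best = (sse, Stump(j, t, lv, rv))
    if best is None:  # no valid split: fall back to a constant stump
        mean = sum(residuals) / len(residuals)
        return Stump(0, float("inf"), mean, mean)
    return best[1]


class GradientBoostedStumps:
    def __init__(self, n_rounds: int = 50, learning_rate: float = 0.1):
        self.n_rounds = n_rounds
        self.learning_rate = learning_rate
        self.base = 0.0
        self.stumps: List[Stump] = []

    def fit(self, X: List[List[float]], y: List[float]) -> "GradientBoostedStumps":
        self.base = sum(y) / len(y)  # start from the mean prediction
        preds = [self.base] * len(y)
        self.stumps = []
        for _ in range(self.n_rounds):
            # For squared loss, the negative gradient is the residual y - F(x).
            residuals = [yi - pi for yi, pi in zip(y, preds)]
            stump = fit_stump(X, residuals)
            self.stumps.append(stump)
            preds = [p + self.learning_rate * stump.predict(x) for p, x in zip(preds, X)]
        return self

    def predict(self, x: Sequence[float]) -> float:
        return self.base + self.learning_rate * sum(s.predict(x) for s in self.stumps)

    def explain(self, x: Sequence[float]) -> List[Tuple[str, float]]:
        """Each stump's rule and its contribution; base + contributions = prediction."""
        return [(s.rule(), self.learning_rate * s.predict(x)) for s in self.stumps]


X = [[1.0], [2.0], [3.0], [4.0], [5.0], [6.0]]
y = [1.0, 1.0, 1.0, 5.0, 5.0, 5.0]
model = GradientBoostedStumps(n_rounds=20, learning_rate=0.5).fit(X, y)
print(round(model.predict([2.0]), 3), round(model.predict([5.0]), 3))  # prints: 1.0 5.0
for rule, contribution in model.explain([5.0])[:3]:
    print(rule, "->", round(contribution, 3))
```

The `explain` output is what makes the prediction traceable: every term is a human-readable rule such as `if x[0] <= 3 then -2.000 else +2.000`. With hundreds of deeper trees, as in production GBDTs, the same decomposition still holds, but reading it directly becomes harder.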