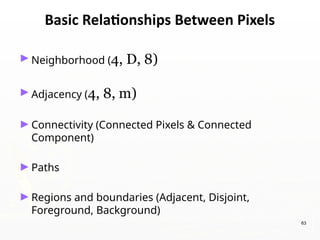

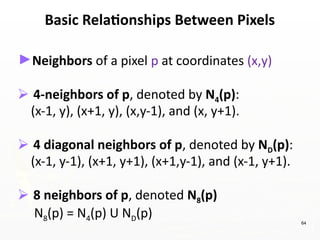

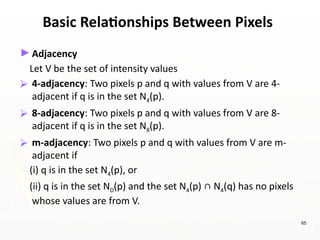

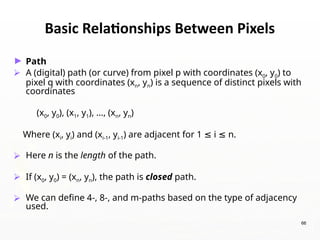

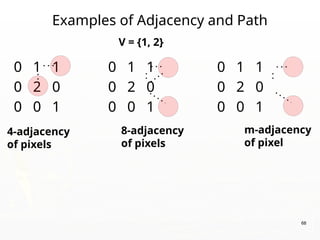

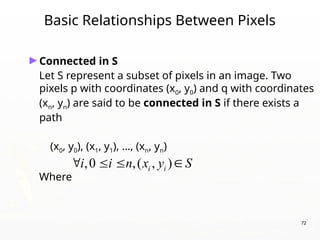

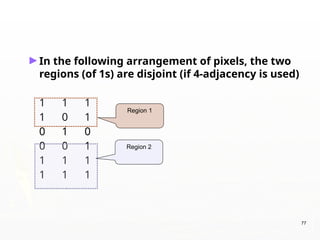

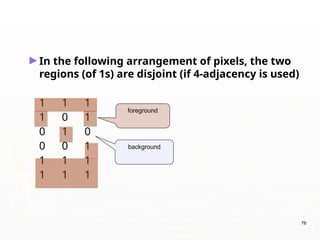

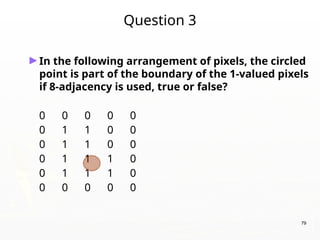

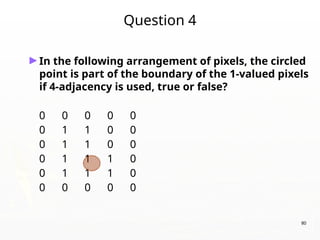

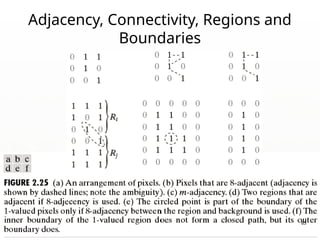

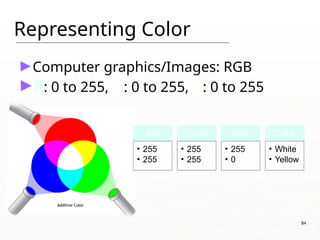

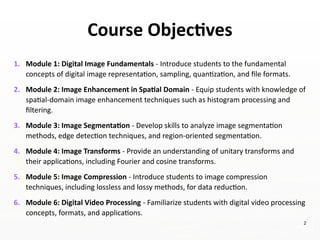

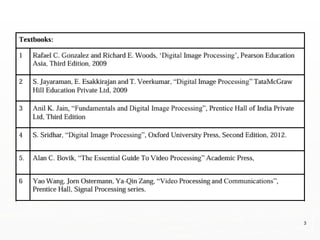

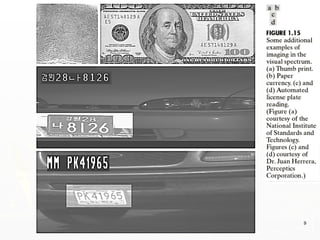

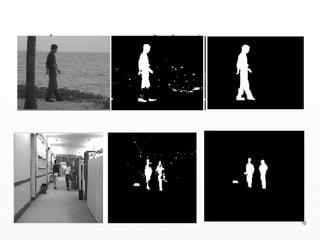

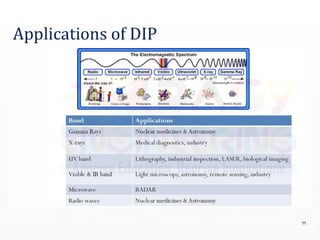

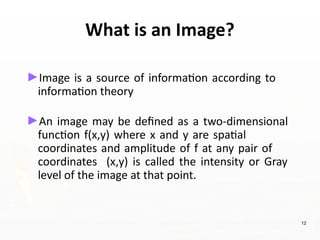

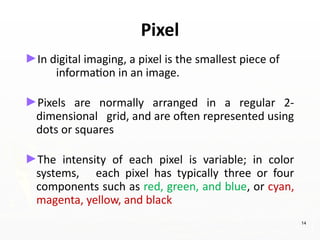

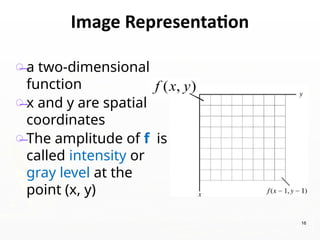

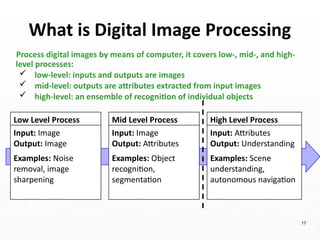

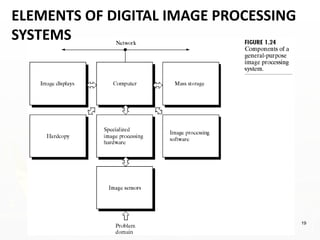

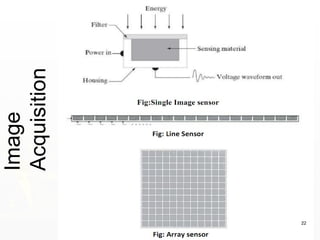

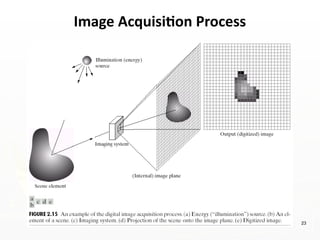

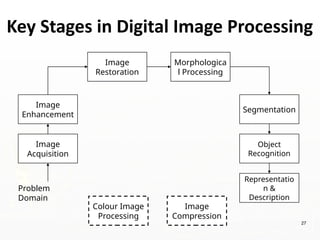

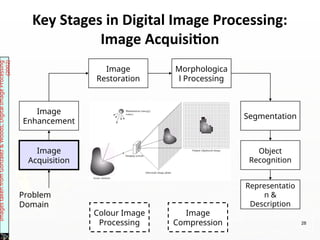

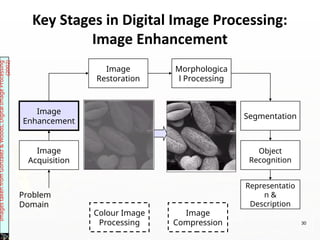

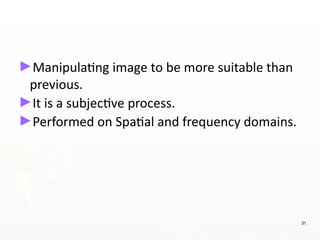

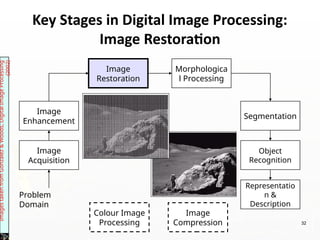

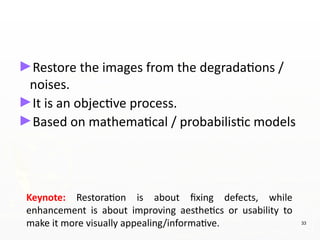

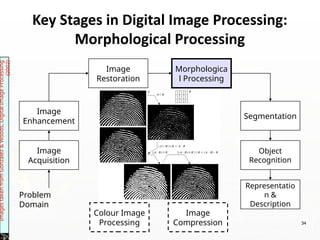

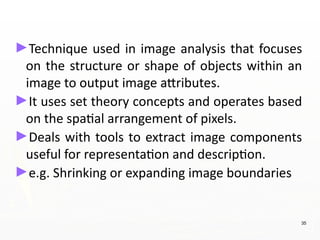

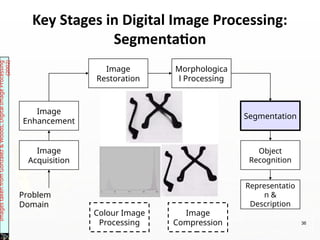

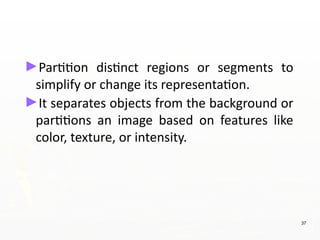

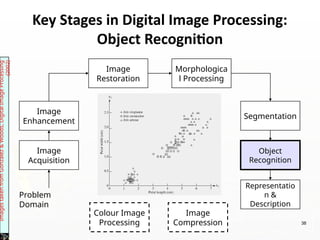

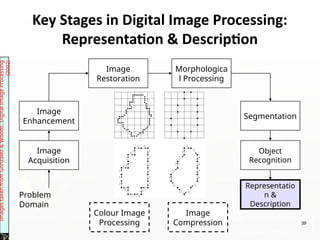

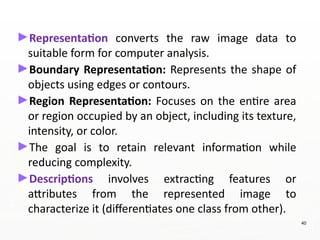

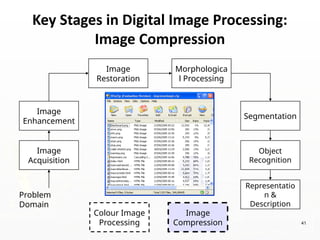

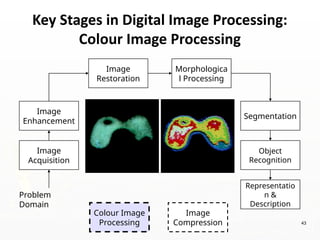

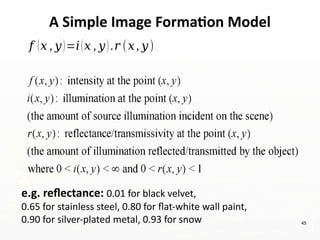

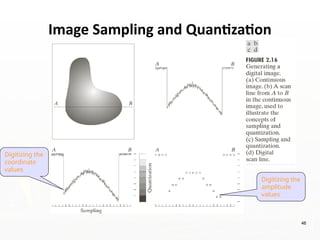

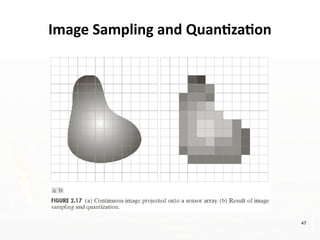

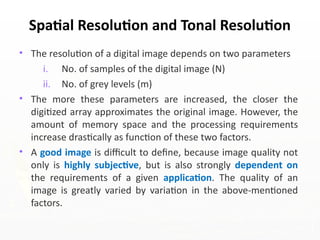

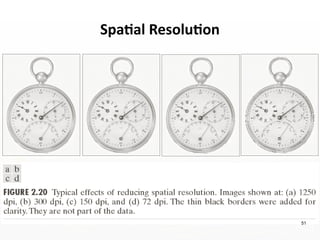

This document outlines the course structure for 'Image and Video Processing' (CSDLO6013), covering six modules ranging from digital image fundamentals to digital video processing. It includes detailed descriptions of key concepts such as pixel representation, image enhancement, segmentation, and various image processing techniques. The document emphasizes the processes involved in digital image systems, including acquisition, processing, and display.

![57

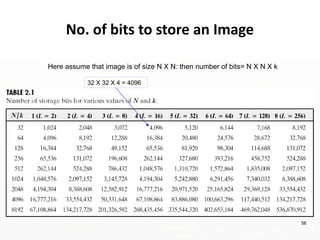

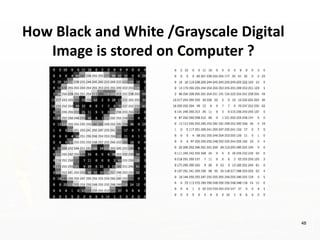

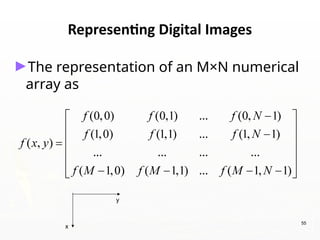

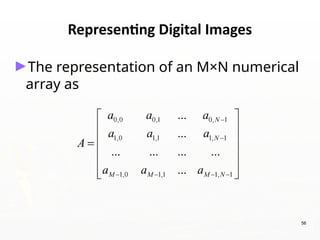

Representing Digital Images

► Discrete intensity interval [0, L-1], L=2k

► The number b of bits required to store a M × N

digitized image

b = M × N × k](https://image.slidesharecdn.com/dip1introdipfundamentals-250116063325-227512ec/85/Digital-Image-Processing-fundamentals-pptx-57-320.jpg)
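The two relations above, $L = 2^k$ and $b = M \times N \times k$, can be checked with a few lines of arithmetic. The following is a minimal sketch that assumes a single-channel (grayscale), uncompressed image with $k$ bits per pixel, which is what the formula counts. The function names `intensity_levels` and `storage_bits` are hypothetical helpers chosen for illustration and do not come from the slides.

```python
def intensity_levels(k: int) -> int:
    """Hypothetical helper: number of intensity levels L = 2**k for k bits per pixel."""
    return 2 ** k


def storage_bits(M: int, N: int, k: int) -> int:
    """Hypothetical helper: bits b = M * N * k for an uncompressed,
    single-channel M x N image with k bits per pixel (assumption)."""
    return M * N * k


if __name__ == "__main__":
    k = 8
    L = intensity_levels(k)
    # Intensities run from 0 to L - 1
    print(f"k = {k}: intensities in [0, {L - 1}]")
    b = storage_bits(1024, 1024, k)
    # 8 bits per byte
    print(f"1024 x 1024 image, k = {k}: {b} bits = {b // 8} bytes")
```

For a 1024 × 1024 image with k = 8, this gives L = 256 levels (intensities 0 to 255) and b = 8,388,608 bits, or 1,048,576 bytes. Colour images or compressed file formats need a different count.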