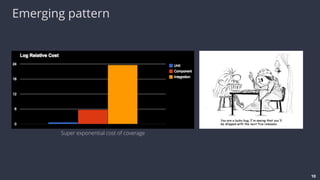

The document discusses the Diffy approach for automatic testing of microservices at Twitter, which aims to improve coverage without the traditional burdens of test writing. It outlines different tiers of testing, their costs, and challenges, highlighting the limitations of unit, component, and integration tests. The Diffy method leverages sample production traffic and existing code assertions to streamline the testing process while managing non-deterministic noise.

![Slide 23](https://image.slidesharecdn.com/diffyref-151015022043-lva1-app6892/85/Diffy-Automatic-Testing-of-Microservices-Twitter-23-320.jpg)

Common Statistical Methods (contd.)

F-Test

H0: The means of a set of populations are equal

Two groups: $F = t^2$, where $t$ is Student's statistic

Assumptions

Normally distributed populations [1]

Equal variance (homoscedastic)

Independent samples

[1] M. L. Tiku, "Power Function of the F-Test Under Non-Normal Situations," Journal of the American Statistical Association, Vol. 66, No. 336 (Dec. 1971), pp. 913-916.
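The two-group identity on this slide, F = t^2, can be checked numerically. The following is a minimal sketch in plain Python, not code from the talk: the function names and the sample values are made up for illustration, and it assumes the pooled-variance (equal variance) form of Student's t statistic, which matches the homoscedasticity assumption listed on the slide.

```python
import math
from statistics import mean


def pooled_t_statistic(a, b):
    """Student's two-sample t statistic using the pooled (equal) variance."""
    na, nb = len(a), len(b)
    ma, mb = mean(a), mean(b)
    ss_a = sum((x - ma) ** 2 for x in a)  # sum of squares within group a
    ss_b = sum((x - mb) ** 2 for x in b)  # sum of squares within group b
    pooled_var = (ss_a + ss_b) / (na + nb - 2)
    return (ma - mb) / math.sqrt(pooled_var * (1 / na + 1 / nb))


def one_way_f_statistic(*groups):
    """One-way F statistic: between-group over within-group mean square."""
    k = len(groups)
    n = sum(len(g) for g in groups)
    grand_mean = sum(sum(g) for g in groups) / n
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
    ss_within = sum((x - mean(g)) ** 2 for g in groups for x in g)
    return (ss_between / (k - 1)) / (ss_within / (n - k))


# Hypothetical measurements from two groups (illustrative values only).
group_a = [12.1, 11.8, 12.5, 12.0, 11.9]
group_b = [12.9, 13.1, 12.7, 13.4, 12.8]

t = pooled_t_statistic(group_a, group_b)
f = one_way_f_statistic(group_a, group_b)

# With exactly two groups, F equals t squared up to floating-point error.
print(f"t^2 = {t ** 2:.6f}, F = {f:.6f}")
```

The identity holds only for two groups; with three or more, the F statistic has no single t counterpart, which is why the slide states the null hypothesis for a set of populations.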