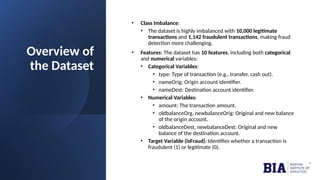

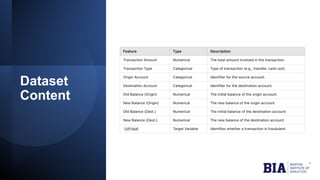

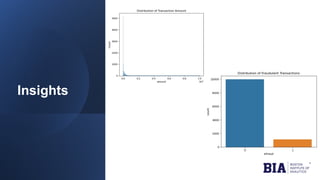

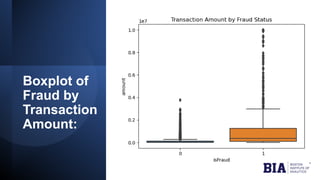

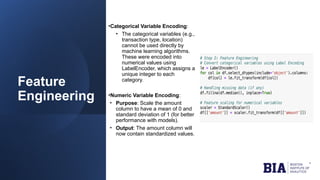

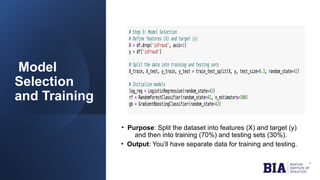

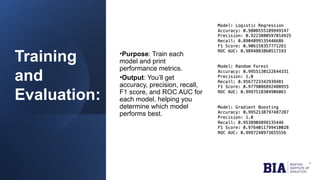

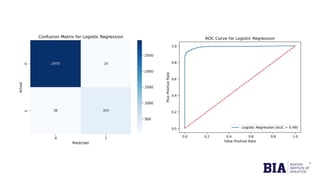

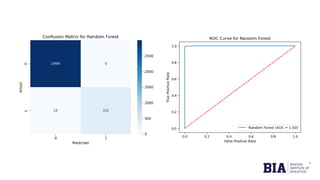

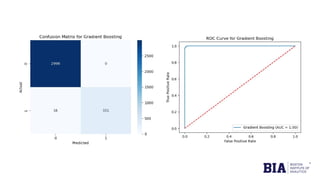

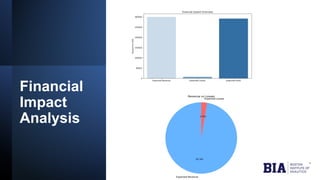

The document discusses fraud detection in financial transactions and stresses that identifying fraudulent activity is essential both to prevent financial losses and to maintain consumer trust. It describes a dataset of 11,142 records with a class imbalance between legitimate and fraudulent transactions. It also outlines the machine learning techniques used for model training and evaluation: logistic regression, random forest, and gradient boosting. The analysis concludes that gradient boosting was the most effective of the three at distinguishing fraudulent from legitimate transactions, while noting limitations such as the class imbalance.
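The summary does not give the class counts or the metrics used to compare the models, so the following is only a minimal, stdlib-only Python sketch on **hypothetical** labels (95 legitimate, 5 fraudulent). It is not the document's data or method. It shows why class imbalance matters when evaluating a fraud model: a model that never flags fraud still scores high accuracy, while precision, recall, and F1 on the fraud class expose the failure. The helper names (`confusion_counts`, `imbalance_metrics`, `balanced_class_weights`) are illustrative. The weighting formula `n_samples / (n_classes * class_count)` matches the heuristic behind scikit-learn's `class_weight="balanced"`.

```python
from collections import Counter


def confusion_counts(y_true, y_pred, positive=1):
    """Count TP, FP, FN, TN, treating `positive` (fraud) as the positive class."""
    tp = fp = fn = tn = 0
    for t, p in zip(y_true, y_pred):
        if p == positive and t == positive:
            tp += 1  # fraud correctly flagged
        elif p == positive:
            fp += 1  # legitimate transaction wrongly flagged
        elif t == positive:
            fn += 1  # fraud missed
        else:
            tn += 1  # legitimate transaction correctly passed
    return tp, fp, fn, tn


def imbalance_metrics(y_true, y_pred, positive=1):
    """Accuracy plus fraud-class precision, recall, and F1 (0.0 when undefined)."""
    tp, fp, fn, tn = confusion_counts(y_true, y_pred, positive)
    accuracy = (tp + tn) / len(y_true)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


def balanced_class_weights(y):
    """Weight each class by n_samples / (n_classes * class_count) so rare classes count more."""
    counts = Counter(y)
    n, k = len(y), len(counts)
    return {cls: n / (k * c) for cls, c in counts.items()}


if __name__ == "__main__":
    # Hypothetical labels: 0 = legitimate, 1 = fraudulent.
    y_true = [0] * 95 + [1] * 5
    always_legit = [0] * 100  # a "model" that never flags fraud
    print(imbalance_metrics(y_true, always_legit))
    # {'accuracy': 0.95, 'precision': 0.0, 'recall': 0.0, 'f1': 0.0}
    print(balanced_class_weights(y_true))
    # {0: 0.5263157894736842, 1: 10.0}
```

Under these assumptions, the trivial model reaches 95% accuracy yet catches no fraud. This is why fraud-class recall and F1 (or similar imbalance-aware metrics) are usually reported alongside accuracy. Weighting the rare class more heavily during training is one common mitigation. Resampling the training data is another.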