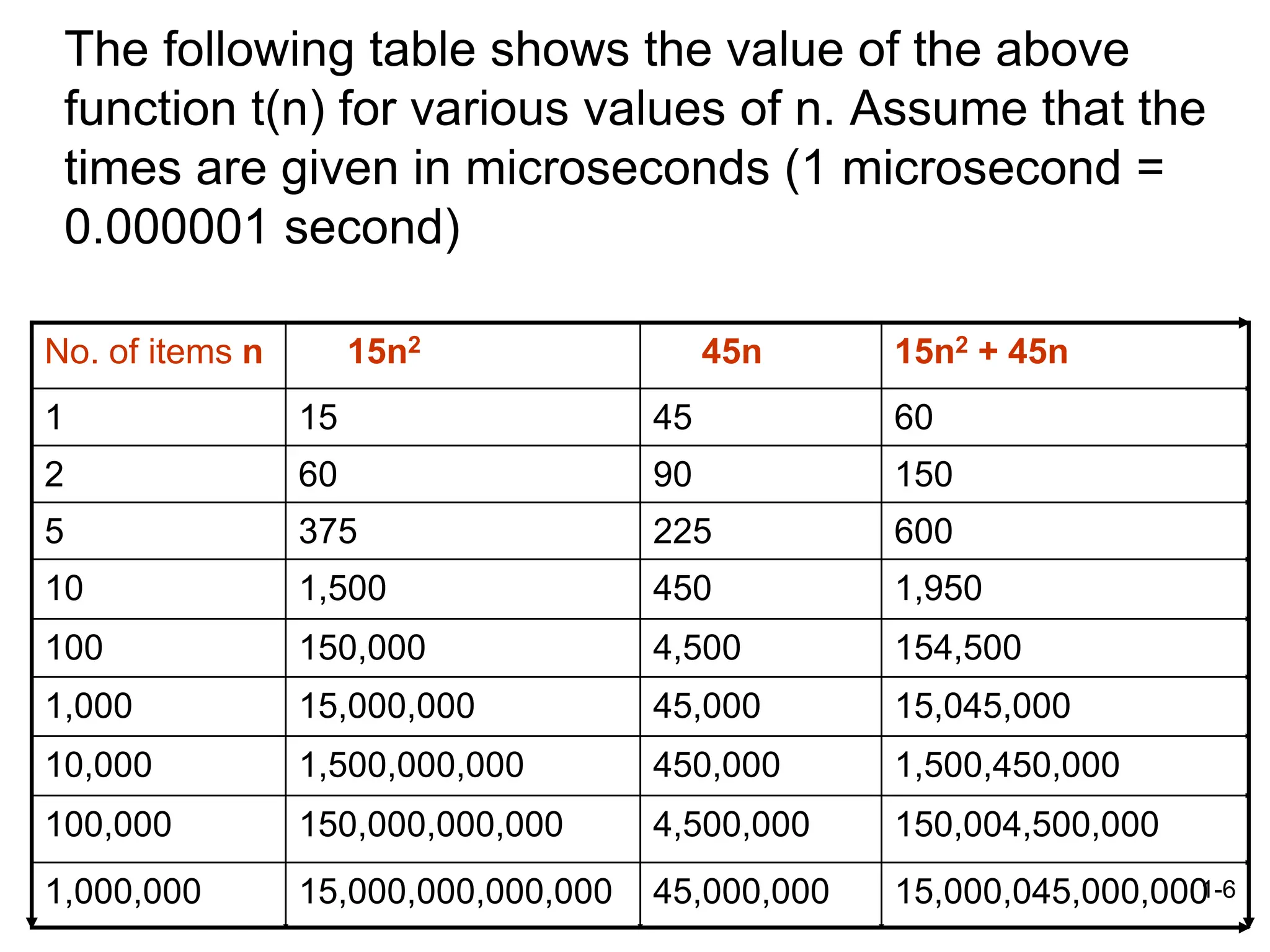

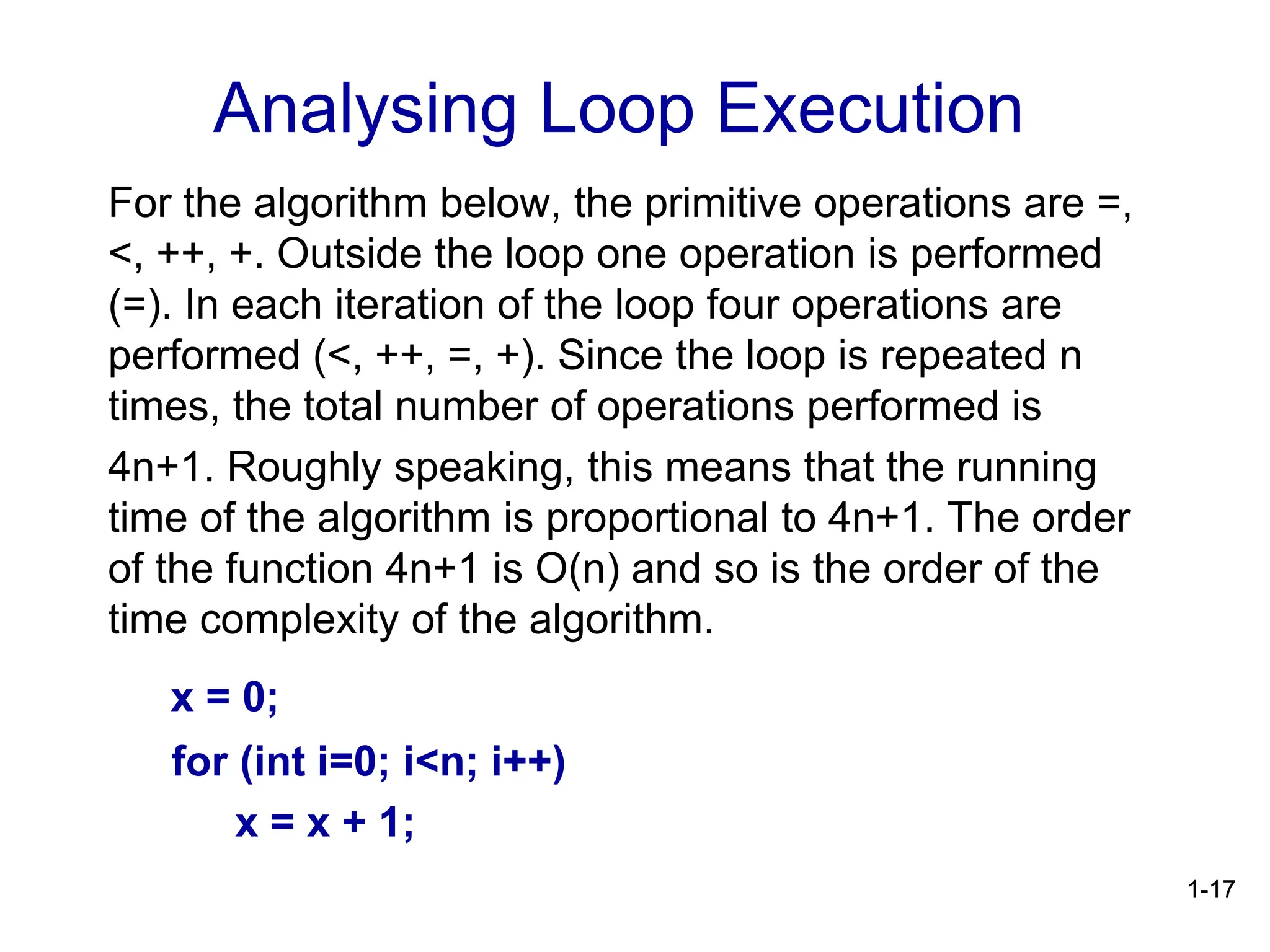

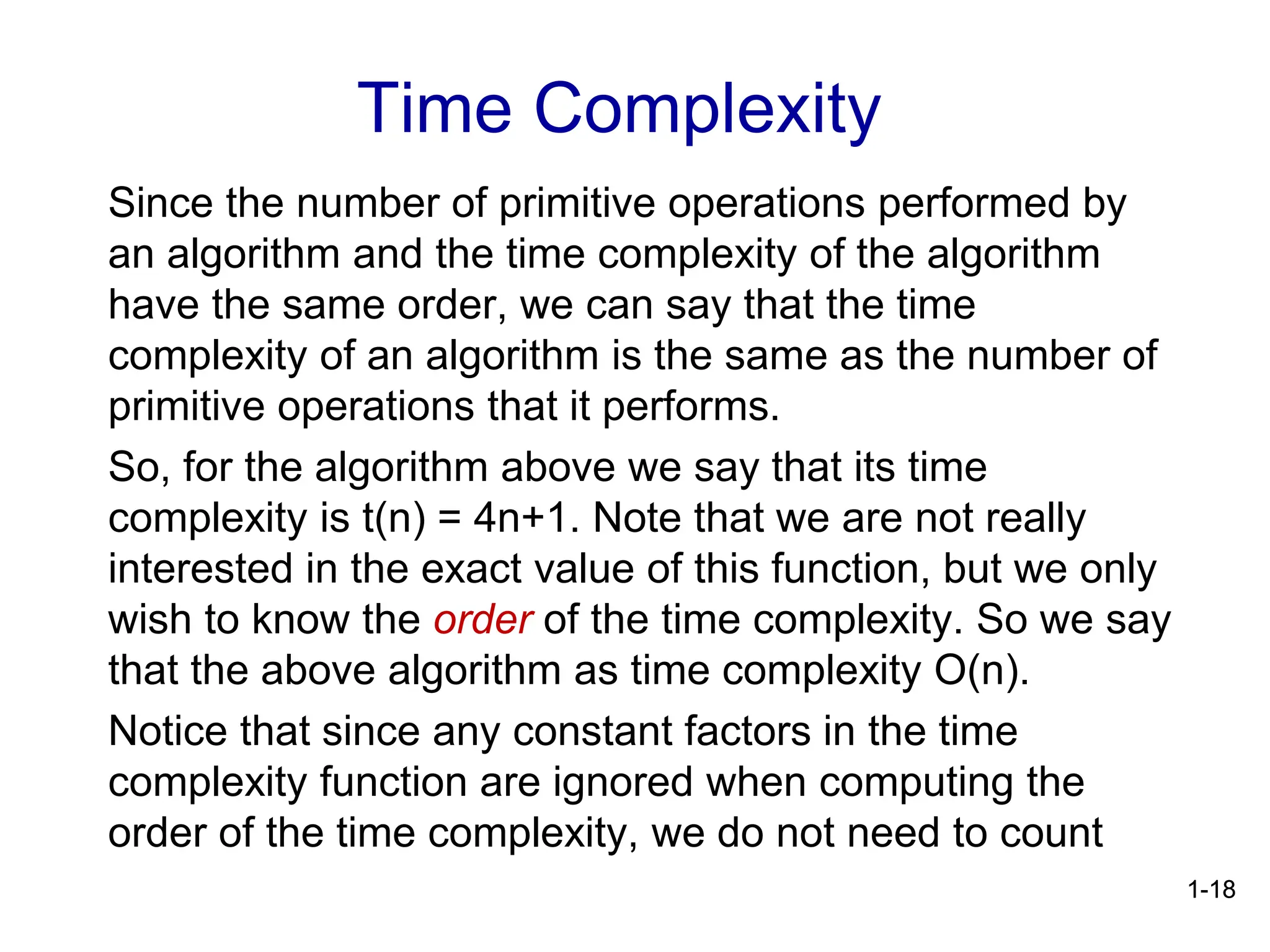

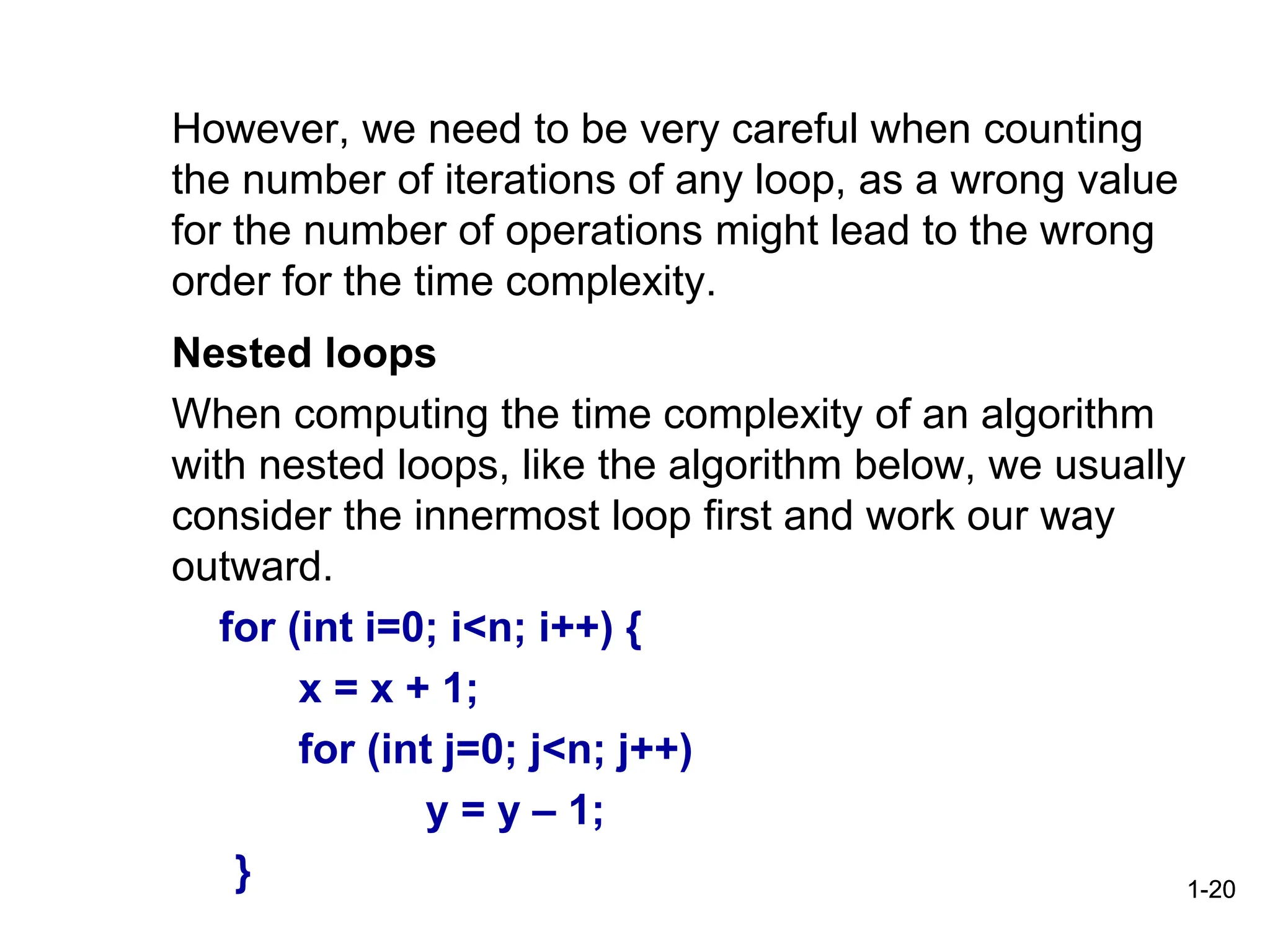

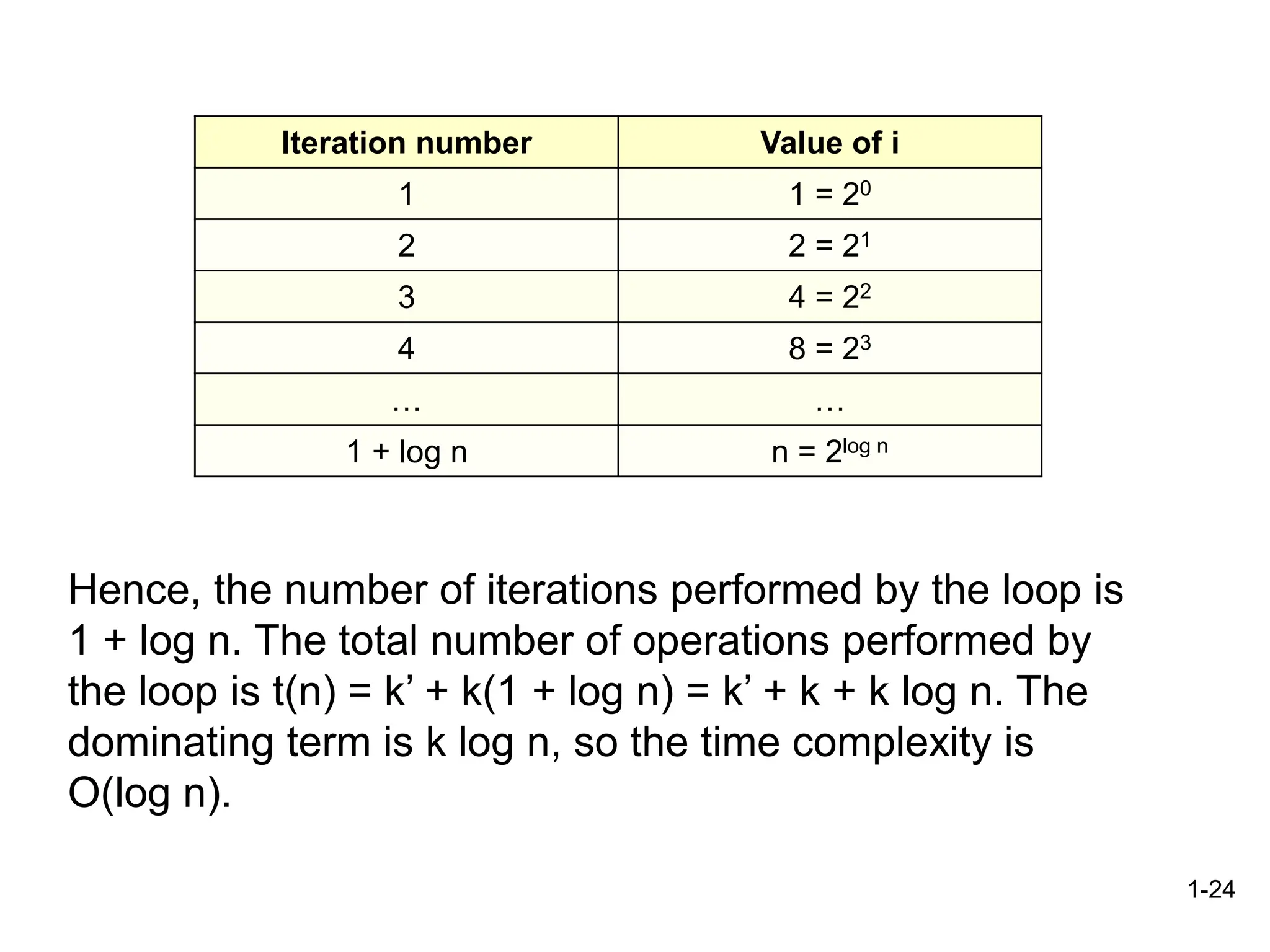

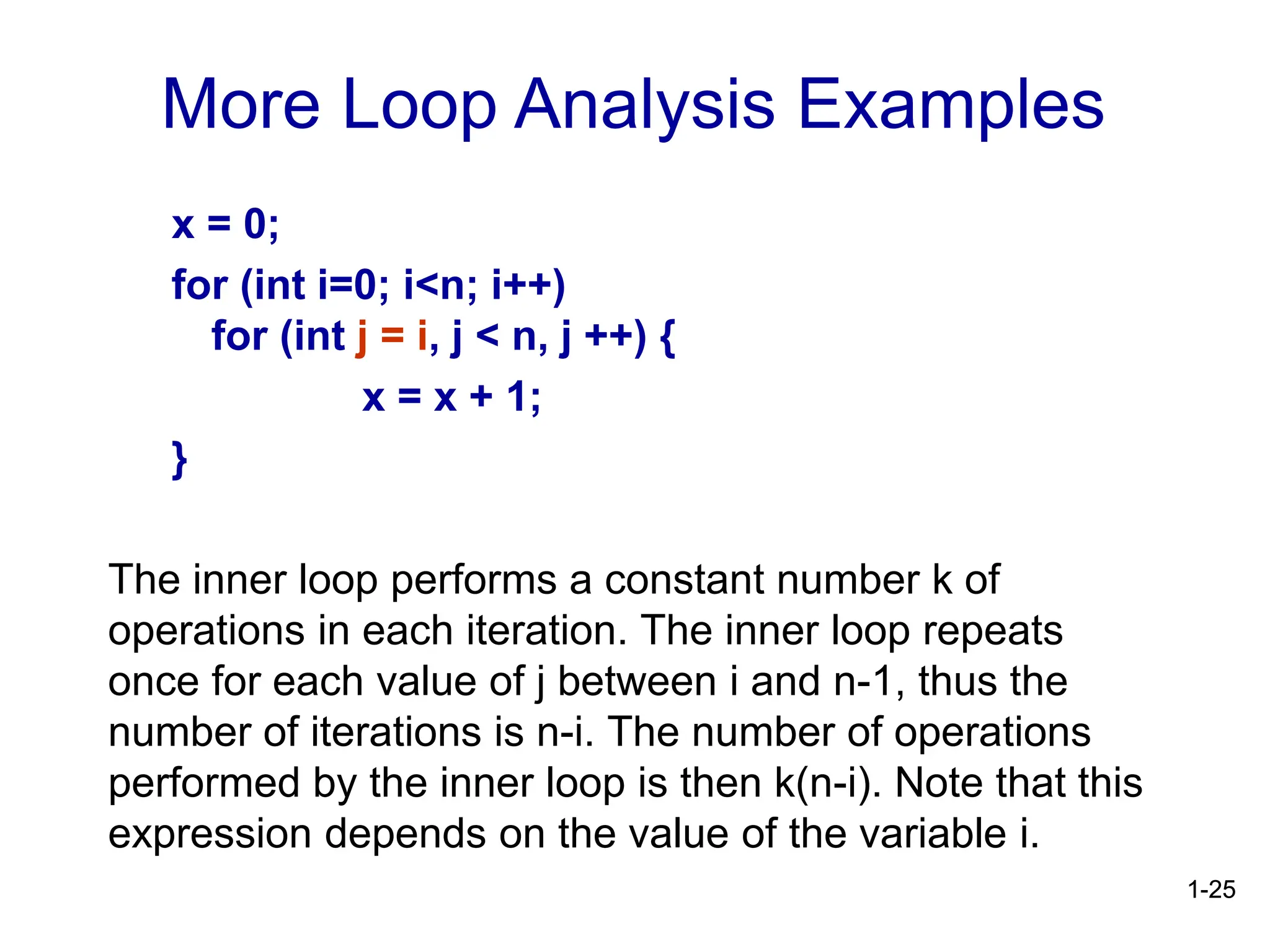

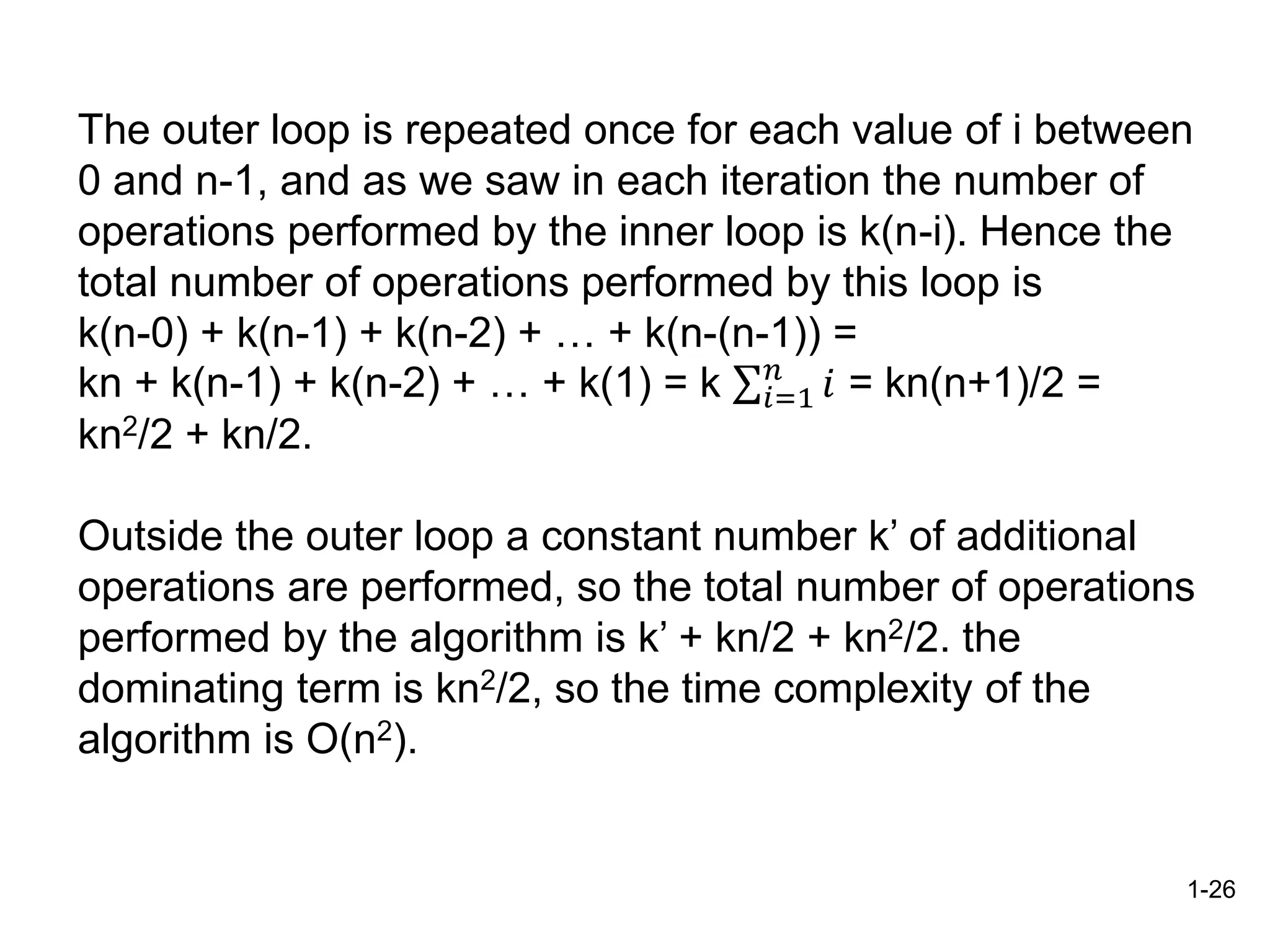

The document covers the analysis of algorithms, focusing on time complexity: how an algorithm's running time grows as a function of the input size. It introduces asymptotic growth and big-oh notation, and explains how to determine the order of a running-time function by keeping its most significant (fastest-growing) term and dropping constant factors and lower-order terms. It also emphasizes counting primitive operations and estimating running time from the number of times loops, including nested loops, execute.
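The ideas summarized above can be illustrated with a minimal sketch. The cost model here is an assumption made for illustration, not taken from the document: each loop iteration is charged two primitive operations (one addition, one assignment), and initialization is charged one. The function names and the sample polynomial `3n^2 + 5n + 2` are hypothetical.

```python
def count_single_loop(n: int) -> int:
    """Count primitive operations for one loop over n items (assumed cost model)."""
    ops = 1  # initialization, e.g. total = 0
    for _ in range(n):
        ops += 2  # one addition and one assignment per iteration
    return ops  # 2n + 1 operations, which is O(n)


def count_nested_loops(n: int) -> int:
    """Count primitive operations for two nested loops over n items each."""
    ops = 1  # initialization
    for _ in range(n):
        for _ in range(n):
            ops += 2  # the inner body runs n * n times
    return ops  # 2n^2 + 1 operations, which is O(n^2)


def dominant_term_ratio(n: int) -> float:
    """Ratio of the hypothetical f(n) = 3n^2 + 5n + 2 to its leading power n^2."""
    f = 3 * n**2 + 5 * n + 2
    return f / n**2  # tends to the constant 3 as n grows, so f(n) is O(n^2)


if __name__ == "__main__":
    for n in (10, 100, 1000):
        print(n, count_single_loop(n), count_nested_loops(n), dominant_term_ratio(n))
```

As `n` grows, the nested-loop count is dominated by its `2n^2` term, and the ratio in `dominant_term_ratio` settles toward a constant, which is why lower-order terms and constant factors are dropped when stating the order.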