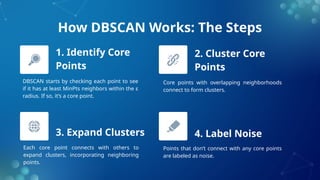

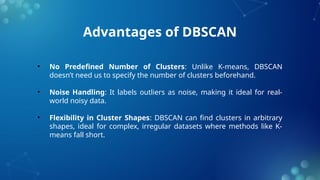

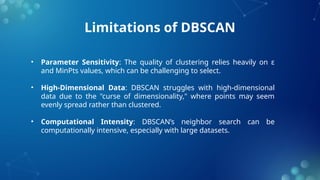

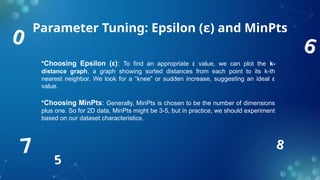

DBSCAN (Density-Based Spatial Clustering of Applications with Noise) is a clustering algorithm that identifies clusters based on data density rather than shape, effectively handling noise and outliers. It operates on two main parameters, epsilon (ε) and minpts, which need careful tuning for effective results. DBSCAN is widely used in applications like geospatial analysis, image processing, anomaly detection, and customer segmentation due to its flexibility in finding irregular-shaped clusters.