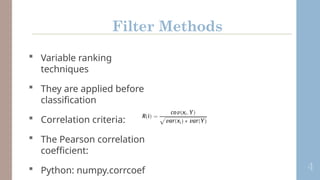

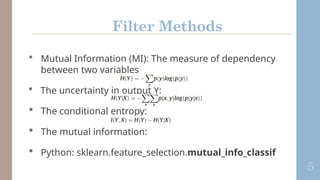

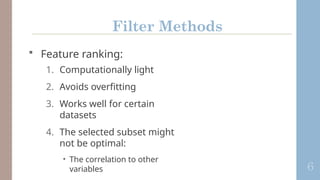

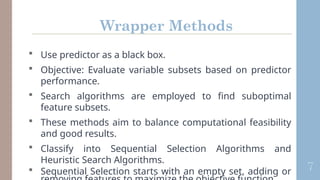

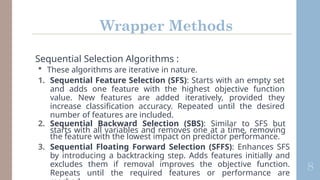

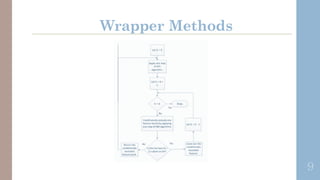

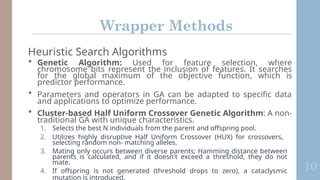

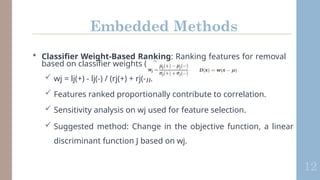

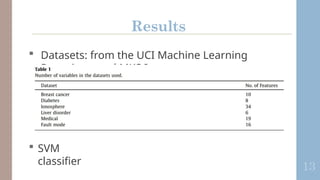

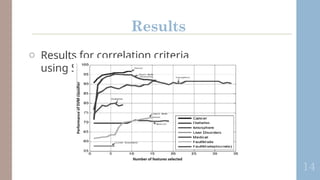

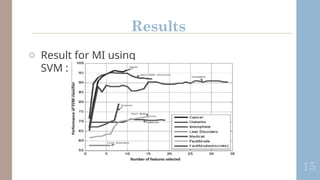

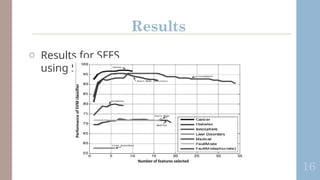

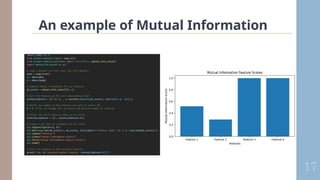

The document outlines three families of feature selection methods (filter, wrapper, and embedded techniques), all aimed at improving predictive performance by removing irrelevant variables. It details search strategies such as sequential selection and genetic algorithms, as well as the integration of feature selection into the model training process. The conclusion emphasizes that effective feature selection improves insight into the model, improves generalization, and identifies irrelevant variables.
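
As an illustration of the wrapper approach with sequential selection, the following is a minimal sketch of greedy forward selection, not the procedure from the slides. The function names (`loo_1nn_accuracy`, `sequential_forward_selection`), the leave-one-out 1-nearest-neighbour scorer, and the toy data are all assumptions made for this example; any model and validation score could stand in for the scorer.

```python
def loo_1nn_accuracy(X, y, features):
    """Leave-one-out accuracy of a 1-nearest-neighbour classifier
    that only looks at the given feature indices (assumed scorer)."""
    correct = 0
    for i in range(len(X)):
        best_j, best_d = None, float("inf")
        for j in range(len(X)):
            if i == j:
                continue
            # Squared Euclidean distance restricted to the candidate subset.
            d = sum((X[i][f] - X[j][f]) ** 2 for f in features)
            if d < best_d:
                best_d, best_j = d, j
        correct += int(y[best_j] == y[i])
    return correct / len(X)


def sequential_forward_selection(X, y, score, max_features):
    """Greedily add the feature that most improves `score`;
    stop when no candidate improves it or the limit is reached."""
    selected = []
    remaining = list(range(len(X[0])))
    best_score = float("-inf")
    while remaining and len(selected) < max_features:
        # Wrapper step: re-evaluate the model once per candidate feature.
        candidates = [(score(X, y, selected + [f]), f) for f in remaining]
        top_score, top_feature = max(candidates, key=lambda t: t[0])
        if top_score <= best_score:
            break  # no candidate improves the current subset
        selected.append(top_feature)
        remaining.remove(top_feature)
        best_score = top_score
    return selected, best_score


# Toy data (hypothetical): feature 0 separates the classes, feature 1 is noise.
X = [[0.0, 5.0], [0.1, 1.0], [0.2, 9.0],
     [1.0, 5.1], [1.1, 1.1], [1.2, 9.1]]
y = [0, 0, 0, 1, 1, 1]

selected, best = sequential_forward_selection(X, y, loo_1nn_accuracy, max_features=2)
print(selected, best)
```

On this toy data the search keeps only feature 0, because adding the noisy feature 1 lowers the leave-one-out accuracy. A filter method would instead rank features by a statistic computed without training a model, and an embedded method would let the model's own training (for example, a sparsity penalty) perform the selection.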