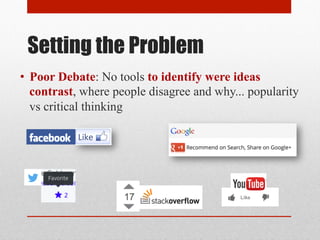

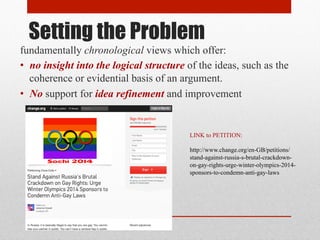

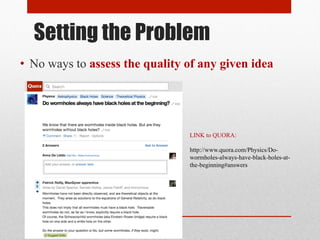

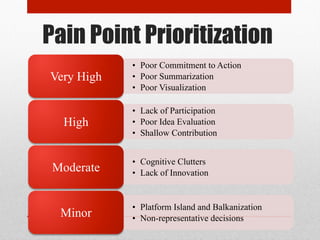

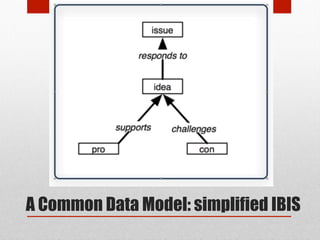

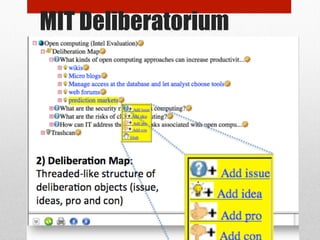

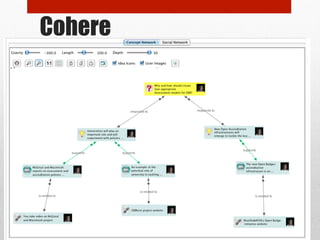

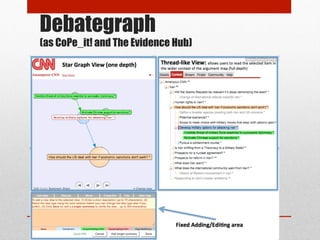

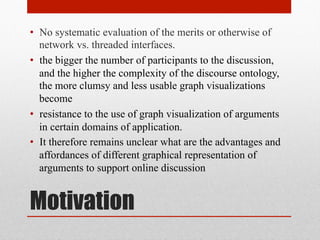

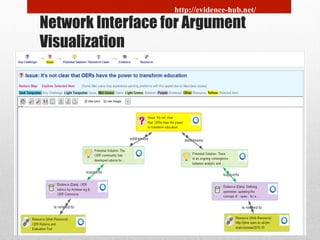

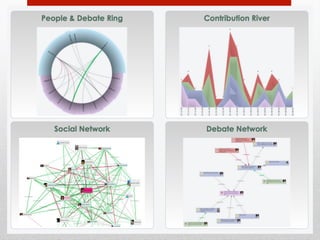

The document presents research on the effectiveness of network versus threaded interfaces for online deliberation, emphasizing issues with traditional chronological formats that hinder idea evaluation and participation. The exploratory study finds that network visualizations improve users' understanding of complex arguments, enhance task performance, and evoke positive emotional responses compared to linear interfaces. Ultimately, it suggests that dynamic network visualizations could enrich online discourse by providing better structural insights into argumentation.