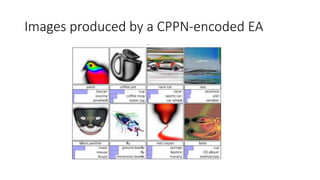

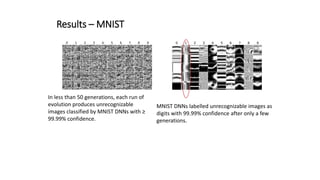

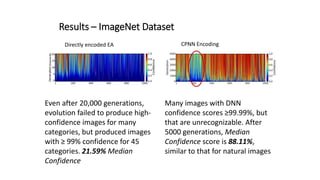

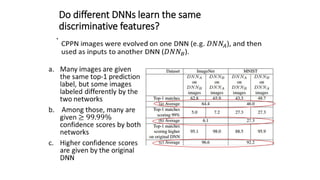

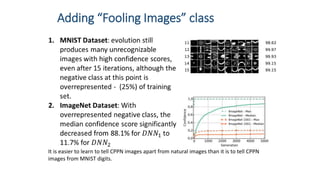

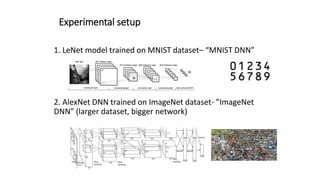

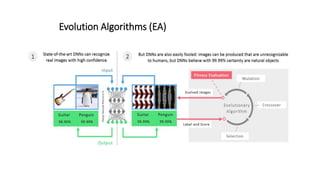

The document discusses how deep neural networks (DNNs) can be fooled into assigning high-confidence labels to images that are unrecognizable to humans, using experiments on the MNIST and ImageNet datasets. It describes an experimental setup in which images are evolved under two encodings, direct and indirect, and reports that the MNIST DNN labeled unrecognizable images with 99.99% confidence after only a few generations. On ImageNet, many evolved images also reached high confidence, but some categories remained difficult even after many generations of evolution.

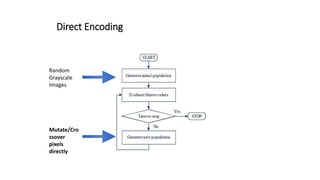

![Direct Encoding: Grayscale values for MNIST, HSV values for ImageNet. Each pixel value is initialized with uniform random noise within the [0, 255] range. Those numbers are independently mutated.](https://image.slidesharecdn.com/deepneuralnetworksareeasilyfooled-230424162537-8ac6e351/85/Deep-Neural-Networks-Are-Easily-Fooled-pptx-5-320.jpg)
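
The direct encoding on this slide can be illustrated with a minimal sketch. The slide only says that each value starts as uniform random noise in [0, 255] and is mutated independently. The function names `init_genome` and `mutate`, the mutation probability `rate`, and the clipped Gaussian perturbation with width `sigma` are assumptions chosen for illustration, not the mutation operator used in the original work.

```python
import random

PIXEL_MIN, PIXEL_MAX = 0, 255


def init_genome(num_values, rng):
    """Draw one uniformly random integer in [0, 255] per pixel value."""
    return [rng.randint(PIXEL_MIN, PIXEL_MAX) for _ in range(num_values)]


def mutate(genome, rate, sigma, rng):
    """Return a copy in which each value is mutated independently.

    Assumption: a value is perturbed with probability `rate` by Gaussian
    noise of width `sigma`, then clipped back into [0, 255]. The slide
    does not specify the operator.
    """
    child = []
    for value in genome:
        if rng.random() < rate:
            value = round(value + rng.gauss(0, sigma))
            value = max(PIXEL_MIN, min(PIXEL_MAX, value))
        child.append(value)
    return child


rng = random.Random(0)
parent = init_genome(28 * 28, rng)  # one grayscale value per MNIST pixel
child = mutate(parent, rate=0.1, sigma=25.0, rng=rng)
changed = sum(p != c for p, c in zip(parent, child))
print(f"{changed} of {len(parent)} pixel values changed")
```

For an ImageNet-style image the same functions would apply with three values (H, S, V) per pixel, so the genome length becomes `height * width * 3`.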